Limitations of Spark

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Memory Consumption

Let's start with the first limitation of Spark: its memory consumption. Apache Spark utilizes in-memory processing, which allows for faster computation, but it does require a significant amount of RAM to do so. Why do you think this might be an issue?

It sounds like it could be expensive if you need more memory, especially for large datasets.

Exactly! Organizations might face challenges in scaling their infrastructure due to high RAM requirements. Can anyone recall how this compares to Hadoop's approach?

Hadoop writes intermediate data to disk, so it doesn't need as much memory as Spark does.

Great point! Hadoop's disk-based approach can be beneficial when memory resources are limited.

Cluster Tuning

Now, let’s move on to the second limitation: the necessity for cluster tuning. Spark's performance can vary greatly depending on how the cluster is set up. What are some aspects that might need tuning?

Maybe the number of executors or memory allocated to each task?

Yes! Adjusting executor memory, number of cores, and shuffle settings can really affect performance. Is this process straightforward?

I guess it could get complicated, especially for beginners.

Absolutely! It requires a good understanding of Spark's architecture to optimize it effectively.

Data Governance

Now we will discuss Spark's limited built-in support for data governance. What do you think that means for organizations?

It sounds like it would be hard for companies to ensure their data is secure and comply with regulations.

Precisely! Poor data governance could lead to compliance issues, especially when handling sensitive data. Can anyone think of specific scenarios where this might be important?

In industries like finance or healthcare, there are strict data regulations that need to be followed.

Exactly right! Ensuring data privacy is critical in those fields, which can make Spark's limitations a considerable concern.

Introduction & Overview

Standard

While Apache Spark delivers fast and flexible big data processing, it has several limitations: higher memory usage than Hadoop, the need for careful cluster performance tuning, and a lack of comprehensive built-in data governance features.

Detailed

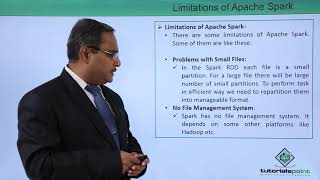

Limitations of Spark

Despite its speed and versatility in handling big data, Apache Spark has notable limitations. Understanding them is crucial for using Spark effectively across processing scenarios.

- Memory Consumption: One of the biggest drawbacks of Spark is its higher memory consumption compared to Hadoop. The in-memory computing approach, while boosting performance, necessitates significantly more RAM. This can lead to challenges for organizations with limited resources.

- Cluster Tuning: Achieving optimal performance in Spark often requires careful tuning of the cluster. Several parameters can be adjusted to achieve better results, but the process can be complex and time-consuming, especially for those unfamiliar with the platform or big data architectures.

- Data Governance: Spark offers limited built-in support for data governance. Organizations dealing with sensitive or regulated data may find it considerably challenging to implement adequate governance and compliance measures within Spark's environment. This can result in concerns regarding data security and integrity.

In summary, while Spark is a powerful tool for big data processing, potential users must understand its limitations concerning memory use, performance tuning, and data governance. These considerations are essential in the decision-making process when planning big data workflows.

Audio Book

Memory Consumption

Chapter 1 of 3

Chapter Content

• Consumes more memory than Hadoop

Detailed Explanation

Apache Spark is a powerful tool, but it requires significant memory resources. When running Spark, especially with large datasets, the system typically uses more RAM than Hadoop, because Spark keeps working data in memory where Hadoop writes intermediate results to disk. This higher memory usage can increase costs on cloud services, where instances with more memory generally cost more.
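To make the memory cost concrete, the following is a rough back-of-the-envelope sketch, not a sizing method from this lesson. It assumes Spark's default `spark.memory.fraction` of 0.6 and the roughly 300 MB of heap that Spark reserves per executor. The `expansion_factor` parameter (how much larger data becomes once deserialized in memory) and both function names are illustrative assumptions.

```python
import math

RESERVED_GB = 0.3      # Spark reserves about 300 MB of each executor heap
MEMORY_FRACTION = 0.6  # default spark.memory.fraction in Spark 2.x and later


def unified_memory_gb(executor_heap_gb: float) -> float:
    """Heap share (GB) that execution and storage can use together."""
    return max(executor_heap_gb - RESERVED_GB, 0.0) * MEMORY_FRACTION


def executors_needed(dataset_gb: float, expansion_factor: float,
                     executor_heap_gb: float) -> int:
    """Lower bound on executors required to hold the dataset in memory.

    expansion_factor is an assumed ratio of in-memory size to on-disk size.
    """
    per_executor = unified_memory_gb(executor_heap_gb)
    if per_executor <= 0:
        raise ValueError("executor heap is too small to hold any data")
    in_memory_gb = dataset_gb * expansion_factor
    return math.ceil(in_memory_gb / per_executor)


if __name__ == "__main__":
    # Hypothetical workload: 100 GB on disk, 3x expansion, 16 GB executors.
    print(executors_needed(100, 3.0, 16))
```

The result is a lower bound, since execution memory (shuffles, joins, aggregations) shares the same pool as cached data. Even so, it shows how quickly RAM requirements grow with dataset size.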

Examples & Analogies

Think of Spark like a high-performance sports car that needs premium gasoline. While it can go faster than a regular car (like Hadoop), it also requires more fuel to run efficiently. If you don’t have a big enough gas tank (memory), you may find it hard to take full advantage of Spark’s speed capabilities.

Cluster Tuning Requirements

Chapter 2 of 3

Chapter Content

• May require cluster tuning for performance

Detailed Explanation

To achieve optimal performance with Spark, you often have to fine-tune your cluster settings. This involves configuring parameters such as the number of executors, the memory allocated to each executor, and the number of CPU cores each executor uses. Without these adjustments, Spark might not run as efficiently as it could, which may lead to slower jobs or even failures, such as out-of-memory errors, under heavy loads.
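As an illustration of what such tuning involves, here is a minimal sketch that derives starting values for a few real Spark settings (`spark.executor.instances`, `spark.executor.cores`, `spark.executor.memory`, `spark.sql.shuffle.partitions`) from the cluster's hardware. The heuristics are common rules of thumb assumed for this example, not official guidance: reserve one core and 1 GB per node, use five cores per executor, keep one executor slot free for the driver, and leave about 10% of memory for off-heap overhead. The function names are hypothetical.

```python
def suggest_executor_conf(nodes: int, cores_per_node: int,
                          mem_gb_per_node: int,
                          cores_per_executor: int = 5) -> dict:
    """Derive a starting Spark configuration from cluster hardware."""
    usable_cores = cores_per_node - 1   # leave one core for the OS and daemons
    usable_mem_gb = mem_gb_per_node - 1  # leave 1 GB for the OS and daemons
    executors_per_node = usable_cores // cores_per_executor
    if executors_per_node < 1:
        raise ValueError("not enough cores per node for one executor")
    total_executors = nodes * executors_per_node - 1  # one slot for the driver
    if total_executors < 1:
        raise ValueError("cluster too small for this heuristic")
    # Divide by 1.10 so roughly 10% of each slot is left for off-heap overhead.
    heap_gb = int(usable_mem_gb / executors_per_node / 1.10)
    if heap_gb < 1:
        raise ValueError("not enough memory per executor")
    return {
        "spark.executor.instances": str(total_executors),
        "spark.executor.cores": str(cores_per_executor),
        "spark.executor.memory": f"{heap_gb}g",
        # Assumed starting point: two shuffle partitions per available core.
        "spark.sql.shuffle.partitions": str(total_executors * cores_per_executor * 2),
    }


def to_submit_args(conf: dict) -> list:
    """Render the settings as --conf arguments for spark-submit."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    # Hypothetical cluster: 10 nodes, each with 16 cores and 64 GB of RAM.
    print(" ".join(to_submit_args(suggest_executor_conf(10, 16, 64))))
```

For the hypothetical 10-node cluster this yields 29 executors with 5 cores and a 19 GB heap each. Values like these are only a first guess. Real workloads usually need further adjustment after observing job behavior, which is why tuning takes time and experience.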

Examples & Analogies

Consider tuning a musical instrument. Just as a piano might need specific adjustments to ensure it produces the best sound, Spark applications require adjustments to perform at their best. If the instrument (or Spark cluster) isn’t tuned right, the performance (or sound) may suffer.

Data Governance Limitations

Chapter 3 of 3

Chapter Content

• Limited built-in support for data governance

Detailed Explanation

Data governance refers to the overall management of data availability, usability, integrity, and security. Spark has limited built-in features for data governance: it can process data quickly, but managing who can access the data and ensuring it is handled correctly usually requires additional tools or frameworks. This gap can be a concern for organizations that must comply with data regulations or maintain strict data control.
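The kind of control an external governance layer has to supply can be sketched in a few lines. The example below is plain Python, not a Spark API: the `POLICY` table, the role names, and `mask_record` are all hypothetical, and a real deployment would enforce such rules in a dedicated governance tool rather than in application code.

```python
# Hypothetical policy: which columns each role may read in clear text.
POLICY = {
    "analyst": {"account_id", "amount"},
    "auditor": {"account_id", "amount", "customer_name"},
}


def mask_record(record: dict, role: str, policy: dict = POLICY,
                mask: str = "***") -> dict:
    """Return a copy of the record with columns the role may not read masked.

    Unknown roles get no access, so every column is masked.
    """
    allowed = policy.get(role, set())
    return {key: (value if key in allowed else mask)
            for key, value in record.items()}


if __name__ == "__main__":
    row = {"account_id": 42, "amount": 99.5, "customer_name": "Jane Doe"}
    print(mask_record(row, "analyst"))
    print(mask_record(row, "auditor"))
```

Because rules like these live outside Spark in this sketch, every job that reads the data must apply them consistently, which is exactly the burden that limited built-in governance places on an organization.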

Examples & Analogies

Think of data governance like the rules of a library. If there are no clear guidelines on who can borrow what and when, it could lead to chaos. Similarly, if a data processing tool lacks governance features, organizations might struggle to manage their data appropriately, leading to potential misuse or data breaches.

Key Concepts

- Memory Consumption: Refers to the significant amount of RAM required by Apache Spark for its in-memory processing, which can lead to higher infrastructure costs.

- Cluster Tuning: The need to meticulously adjust the settings of Spark clusters for optimal performance, which can complicate deployment and management.

- Data Governance: Spark's limited built-in capabilities for ensuring data security and compliance, posing risks for organizations working with sensitive data.

Examples & Applications

A financial institution utilizing Spark for real-time analytics may struggle with compliance due to inadequate data governance mechanisms.

A startup may face challenges in scaling its operations due to high memory consumption when using Spark with large datasets.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

For Spark to shine bright, it needs RAM's might, for without it in sight, performance takes flight.

Stories

Imagine a company using speedboats (Apache Spark) for a race but needing to constantly refuel (memory) and adjust their sails (tuning) to win, while also ensuring their journey (data governance) doesn’t cross any regulatory waters.

Memory Tools

Remember 'MCD' for Spark's limitations: Memory consumption, Cluster tuning, Data governance.

Acronyms

Use 'MCD' to recall Spark's three key limitations:

- M: Memory consumption

- C: Cluster tuning

- D: Data governance

Glossary

- Cluster Tuning: The process of optimizing the configuration of a computing cluster to improve performance and resource allocation.

- Memory Consumption: The amount of RAM used by a computing process, which affects its speed and efficiency.

- Data Governance: The management of data availability, usability, integrity, and security in an organization, particularly concerning regulation compliance.
