BERT (Bidirectional Encoder Representations from Transformers)

Enroll to start learning

You’ve not yet enrolled in this course. Please enroll for free to listen to audio lessons, classroom podcasts and take practice test.

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to BERT

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Today, we are diving into BERT, which stands for Bidirectional Encoder Representations from Transformers. What makes BERT unique compared to earlier models?

I think it's the way it processes text? Maybe it looks at the whole context?

Exactly! BERT processes text bidirectionally, meaning it considers the context from both directions. This is crucial for understanding the meanings of words in context. Can anyone give me an example of how context affects meaning?

Sure! The word 'bank' can mean a riverbank or a financial institution, depending on context.

Great example! That’s where BERT shines. It captures these subtle nuances effectively.

Masked Language Modeling

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

BERT is trained using a technique called masked language modeling. Can someone explain what that means?

Is it about hiding some words in a sentence and having the model guess them?

Exactly! By masking words, BERT learns to predict them based on the surrounding context. This approach allows it to build a deep understanding of language. What do you think the advantage is of this method?

It helps the model understand different usages and meanings by seeing how a word fits in various sentences!

Correct! This bidirectional context is what sets BERT apart.

Next Sentence Prediction (NSP)

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Another important aspect of BERT's training is the next sentence prediction task. Does anyone know how this works?

Is it about predicting if two sentences are following each other logically?

Yes! This ability helps BERT grasp the relationship between sentences, enhancing its application in tasks like question answering and reading comprehension. Why do you think this is important in NLP?

Because in real-world scenarios, understanding context isn't just about single sentences but how they connect!

Exactly! That connection is vital for understanding dialogue and structured information.

Fine-tuning and Applications

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Now let’s talk about fine-tuning BERT for specific tasks. How can BERT be adapted for things like sentiment analysis?

I think it can be trained on datasets specific to sentiment tasks. Like, using movie reviews?

Absolutely! By fine-tuning BERT with labeled data, it learns the nuances of the task at hand, significantly improving performance. What other applications can you think of?

How about using it for chatbots or customer support? It could handle queries more effectively!

Yes, BERT can enhance the depth and accuracy of responses in chatbots!

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

BERT (Bidirectional Encoder Representations from Transformers) is a state-of-the-art NLP model that is pre-trained on masked language modeling and next sentence prediction tasks. Its design allows it to capture the context of words more effectively than previous models, enabling it to be fine-tuned for various downstream NLP tasks, improving accuracy and performance markedly compared to earlier methodologies.

Detailed

Overview of BERT

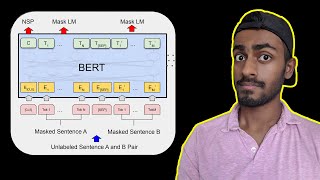

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a sophisticated Neural Network architecture introduced by Google in 2018. Unlike traditional models that process text in one direction (left-to-right or right-to-left), BERT processes words in both directions simultaneously, allowing it to understand the context surrounding words within a sentence.

Key Features of BERT

- Bidirectionality: This means it considers context from both sides of a given token in the text, which enhances the model’s understanding of nuances in language.

- Masked Language Modeling: BERT is trained by masking a percentage of the words in a given sentence and learning to predict them based on their context, enabling deeper understanding and representation of language patterns.

- Next Sentence Prediction (NSP): This to-be-trained approach enhances BERT's ability to understand relationships between sentences, which is crucial for various applications like question answering.

Fine-tuning for Downstream Tasks

BERT is not just a model; it can be adapted or fine-tuned for specific tasks such as sentiment analysis, entity recognition, and more, by training it on task-specific data. This flexibility makes it highly valuable for applications in various domains of Natural Language Processing.

Significance in NLP

BERT represents a significant advancement in the NLP field, setting the stage for a new era of contextual understanding in language models. It has elevated the performance benchmarks across a wide range of natural language tasks, aligning with the goals of extracting insights and understanding from unstructured textual data effectively.

Youtube Videos

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to BERT

Chapter 1 of 2

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

• Pretrained on masked language modeling and next sentence prediction.

Detailed Explanation

BERT, which stands for Bidirectional Encoder Representations from Transformers, is a model specifically designed to understand the context of words in a sentence. It is pretrained using two main tasks: masked language modeling and next sentence prediction. In masked language modeling, some words in a sentence are hidden, and the model learns to predict these missing words based on the context provided by the surrounding words. For next sentence prediction, the model learns to determine if two sentences are consecutive in a text or not, enhancing its understanding of relationships between sentences.

Examples & Analogies

Imagine a person reading a book, but some words are hidden. By understanding the context of the words around the hidden ones, the person can guess what the missing words are. Similarly, BERT can predict missing words in a sentence and understand the flow between sentences.

Fine-Tuning BERT

Chapter 2 of 2

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

• Fine-tuned for specific downstream tasks.

Detailed Explanation

Once BERT has been pretrained, it can be fine-tuned for specific tasks such as sentiment analysis, question answering, or text classification. Fine-tuning involves taking a pretrained model like BERT and training it further with a smaller, task-specific dataset. This process ensures that BERT understands the unique nuances of the new task while leveraging the foundational knowledge it gained during pretraining.

Examples & Analogies

Think of fine-tuning like a chef who has learned the basics of cooking (pretraining) but then takes a specialized course to learn how to make desserts (fine-tuning). The chef already has the foundational skills but needs to adapt to the new focus area.

Key Concepts

-

Bidirectional Processing: BERT processes text from both directions, enhancing context understanding.

-

Masked Language Modeling: BERT predicts missing words in a sentence based on context.

-

Next Sentence Prediction: BERT identifies the relationship between sentences.

-

Fine-tuning: BERT can be adapted for various specific NLP tasks by training on smaller, related datasets.

Examples & Applications

BERT can identify the contextual meaning of 'bark' in the phrases 'the bark of the tree' and 'the dog's bark'.

BERT's ability to predict masked words enables it to understand subtleties in phrases like 'She went to the bank to see the ___'.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

BERT is the best at understanding words, processing them forward and back like birds.

Stories

Imagine a detective with two eyes, looking both ways down the street for clues. That's how BERT sees words, gathering context from all directions.

Memory Tools

BERT: Bidirectional Exists, Really Thinking; Explaining Relationships in Text.

Acronyms

BERT = Bidirectional Encoder Representations Transformers.

Flash Cards

Glossary

- BERT

A pre-trained language model that uses bidirectional attention mechanisms to understand context in NLP.

- Masked Language Modeling

A training method where random words in a sentence are replaced with a mask, and the model predicts these words based on the surrounding context.

- Next Sentence Prediction (NSP)

A task in which the model predicts whether a given pair of sentences are consecutive or not.

- Finetuning

The process of adjusting a pre-trained model to suit specific tasks using a smaller, task-specific dataset.

Reference links

Supplementary resources to enhance your learning experience.