Advanced Hardware-Software Co-design Principles

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Unified Concept of Co-design

Today we're diving into the unified concept of Hardware-Software Co-design. Traditionally, hardware teams would finalize their designs and hand them off to software teams, often resulting in integration headaches. Can anyone guess why this approach might be inefficient?

Maybe because the hardware could limit software performance?

Exactly! By taking a concurrent approach, we can tackle integration challenges head-on. This method allows us to optimize the entire system, not just its parts. What do you think is a benefit of such integration?

It probably makes the whole system work better together.

That's right! This holistic view enhances performance levels and minimizes risk. Let’s always remember that effective co-design leads not just to optimized components but an optimized system. How about we summarize this? What are two main benefits of co-design?

System optimization and reduced integration issues!

Advantages of Co-design

Now, let’s shift our focus onto the advantages of co-design. One significant benefit is accelerated time-to-market. Can anyone explain what that means?

It means we can get the product out faster, right?

Exactly! By doing hardware and software concurrently, we spot potential issues earlier. Another big advantage is cost reduction. How does co-design help keep costs down?

It allows designers to choose the cheapest option between hardware and software for each task?

Great point! This strategic allocation results in not just cost savings, but also enhanced flexibility in upgrades and performance. Let's wrap up—what are three advantages of co-design we discussed today?

Faster time-to-market, reduced costs, and improved flexibility!

Hardware-Software Partitioning

Next, let's dive into the critical task of hardware-software partitioning, a fundamental aspect of co-design. Why do you think it's essential to make decisions about function allocation?

Because it affects how efficiently the system will run?

Exactly! Factors like computational intensity play a role here. Can someone give me an example of a task that might be suitable for hardware implementation?

Maybe a cryptographic algorithm since it needs to be fast and secure?

Spot on! Crypto tasks often require quick execution, making hardware a natural fit. Let’s summarize: What three criteria should we consider for effective partitioning?

Computational intensity, timing criticality, and data throughput!

Co-simulation and Co-verification Techniques

Now onto co-simulation and co-verification techniques. These are essential for validating hardware-software interactions early in the design cycle. Can anyone explain why early validation is beneficial?

It helps us catch errors before hardware is even built, saving time and costs!

Exactly! Techniques like Transaction-Level Modeling allow fast architectural exploration. What do you think is the drawback of using conventional methods instead?

They would be slower and risk errors showing up later, making it more expensive?

Right again! So, how does using co-simulation improve our overall design process?

It speeds up the process and helps find issues at the design stage rather than after building!

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

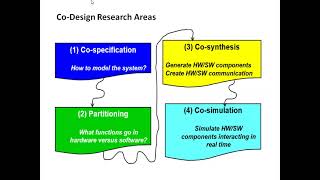

The section discusses the unified approach to hardware-software co-design, highlighting system-level optimization, advantages of concurrent development, the critical task of hardware-software partitioning, advanced co-simulation techniques, and the importance of refining performance through iterative design. It stresses that holistic optimization leads to better overall system performance in embedded designs.

Detailed

Advanced Hardware-Software Co-design Principles

Unified Concept of Co-design

Hardware-Software Co-design is a fundamental shift away from traditional sequential development, in which hardware and software teams worked separately, often resulting in integration issues and performance bottlenecks. This section emphasizes viewing an embedded system as a cohesive unit from the outset, so that hardware and software decisions are made together and each accounts for its effect on the other.

Advantages of Co-design

- System-Level Optimization: Ensures that decisions made for one component do not negatively impact another, and enables system-wide improvements such as shifting workloads to hardware to compensate for software performance limits.

- Accelerated Time-to-Market: Exposes interface mismatches and performance bottlenecks earlier, resulting in a shorter development cycle.

- Cost Reduction: Lets designers choose the most cost-effective implementation, hardware or software, for each function.

- Enhanced Performance: Identifying critical tasks that can be accelerated through dedicated hardware proves essential for real-time applications.

- Power Efficiency: By examining how functions can be offloaded to hardware, co-design helps minimize overall energy consumption.

- Increased Flexibility: Incorporating software functionality enhances the ability to adapt to new requirements or perform updates easily.

Hardware-Software Partitioning

Co-design's central focus is the effective allocation of functionalities between hardware and software. This involves considering factors like computational intensity, timing criticality, data throughput, and costs to decide the most appropriate platform for each component. Furthermore, attention to interface design during partitioning is crucial, as it greatly influences overall system performance and communication efficiency.
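The point about interface design can be made concrete with a rough timing model. The sketch below is only an illustration: the `OffloadEstimate` fields, the helper names, and every number are hypothetical, and a real analysis would use measured figures. It compares running a function in software against running it on an accelerator once the cost of moving data across the interface is included.

```python
from dataclasses import dataclass


@dataclass
class OffloadEstimate:
    """Hypothetical per-call timing figures, all in microseconds."""
    sw_time_us: float        # time to run the function entirely in software
    hw_compute_us: float     # time the accelerator needs for the same work
    bytes_moved: int         # data copied to and from the accelerator per call
    bus_bytes_per_us: float  # sustained interface bandwidth
    setup_us: float          # fixed per-call cost (driver, register writes, interrupt)


def hw_total_time_us(e: OffloadEstimate) -> float:
    """Accelerator time including the interface cost of moving the data."""
    transfer_us = e.bytes_moved / e.bus_bytes_per_us
    return e.setup_us + transfer_us + e.hw_compute_us


def worth_offloading(e: OffloadEstimate) -> bool:
    """Offloading pays off only if the whole hardware path beats software."""
    return hw_total_time_us(e) < e.sw_time_us


# Same accelerator behind two different interfaces (made-up numbers).
fast_bus = OffloadEstimate(sw_time_us=500.0, hw_compute_us=20.0,
                           bytes_moved=4096, bus_bytes_per_us=400.0, setup_us=10.0)
slow_bus = OffloadEstimate(sw_time_us=500.0, hw_compute_us=20.0,
                           bytes_moved=4096, bus_bytes_per_us=8.0, setup_us=10.0)

print(hw_total_time_us(fast_bus), worth_offloading(fast_bus))
print(hw_total_time_us(slow_bus), worth_offloading(slow_bus))
```

With these invented numbers the accelerator path takes roughly 40 microseconds over the fast interface and 542 microseconds over the slow one, so the same hardware block is only worth using when the interface can keep up.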

Co-simulation and Co-verification Techniques

Advanced methods such as Transaction-Level Modeling (TLM) and FPGA Prototyping allow developers to simulate functionality and validate performance early in the design cycle before hardware production. These techniques facilitate the early identification of errors and enhance the verification process.

Overall, this section asserts that through rigorous co-design principles, engineers can achieve a robust balance of performance, cost, efficiency, and flexibility, significantly improving the development process and outcomes in embedded systems.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Unified Concept of Co-design

Chapter 1 of 4

Chapter Content

Historically, hardware teams would design a board, then hand it off to software teams. This often led to significant integration problems, as hardware choices might inadvertently complicate software development or prevent performance goals from being met, and vice-versa. Co-design tackles this "chicken-and-egg" problem by promoting a unified design methodology from the very beginning. Designers consider the impact of hardware choices on software performance and resource usage, and how software algorithms can best leverage or compensate for hardware characteristics. The goal is to optimize the entire system, not just its individual components.

Detailed Explanation

In traditional design workflows for embedded systems, hardware and software were developed in sequence. First, hardware would be designed, then passed to software development teams. This often created issues related to integration. For example, if the hardware did not suit the software needs, adjustments became labor-intensive and costly. The co-design approach remedies this by having both hardware and software teams collaborate from the start. This means they can jointly consider how their design choices affect each other. If hardware changes could improve software efficiency, or if software requirements could refine hardware selection, these interactions are identified and adjusted immediately. The ultimate aim is to create a more effective system as a cohesive unit rather than optimizing only parts of the system independently.

Examples & Analogies

Think of co-design like planning a big event, such as a wedding. If the venue is selected without consulting the catering team, they may find that their kitchen equipment won't fit the available space, or they may need more time to cook than the schedule allows. By having the venue and catering teams plan together from the beginning, they can create a harmonious event that satisfies the needs of all parties involved.

Advantages of Co-design

Chapter 2 of 4

Chapter Content

- System-Level Optimization: Co-design enables global optimization, preventing sub-optimal local decisions. For instance, a function implemented purely in software might be too slow; moving parts of it to hardware can dramatically improve performance.

- Accelerated Time-to-Market: Concurrent development reduces the overall design cycle. Early detection of interface mismatches or performance bottlenecks through co-simulation avoids costly redesigns later.

- Cost Reduction: By carefully partitioning, designers can choose the most cost-effective implementation for each function.

- Enhanced Performance: Critical, time-sensitive tasks can be identified and accelerated in dedicated hardware for superior speed and determinism.

- Power Efficiency: Functions consuming significant power in software can often be implemented more efficiently in specialized hardware.

- Increased Flexibility: Strategic placement of functionality in software allows for easier upgrades, while hardware provides fixed performance.

Detailed Explanation

The advantages of co-design are many. By allowing both hardware and software to be developed together, it promotes a holistic optimization of the system. For example, if a software process runs too slowly, it might indicate that part of it could be better handled by hardware, greatly enhancing speed and efficiency. Additionally, developing both elements concurrently can shorten the design cycle significantly since issues can be identified and solved early on, such as when software and hardware don’t communicate effectively. This collaboration means that not only can designers choose the appropriate method for task implementation, but they can also consider power consumption, ensuring that high-power functions are run on efficient hardware. Lastly, because some components can remain in software, designers maintain flexibility for future updates, while foundational tasks utilize the speed of hardware for consistent performance.
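How much a hardware offload helps the whole system depends on how much of the runtime is actually moved. A standard way to estimate this is Amdahl's law, which the lesson itself does not name; the sketch below applies it as an assumption, with a hypothetical function name and illustrative numbers, and it ignores any data-transfer overhead.

```python
def system_speedup(offloaded_fraction: float, hw_speedup: float) -> float:
    """Amdahl's law: overall speedup when only part of the runtime is accelerated.

    offloaded_fraction: share of the original runtime moved to hardware (0..1)
    hw_speedup: how many times faster the hardware runs that share
    """
    if not 0.0 <= offloaded_fraction <= 1.0:
        raise ValueError("offloaded_fraction must be between 0 and 1")
    if hw_speedup <= 0.0:
        raise ValueError("hw_speedup must be positive")
    # New runtime, relative to the original, is the untouched software part
    # plus the accelerated part.
    remaining = (1.0 - offloaded_fraction) + offloaded_fraction / hw_speedup
    return 1.0 / remaining


for fraction in (0.5, 0.8, 0.95):
    overall = system_speedup(fraction, 20.0)
    print(f"{fraction:.0%} offloaded at 20x -> {overall:.2f}x overall")
```

Under this model, a 20x accelerator that covers only half the runtime yields less than 2x overall, which is why co-design looks at the whole system rather than a single fast block.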

Examples & Analogies

Consider the process of creating a smartphone app. If the UI design team only worked in isolation, they might create a visually appealing interface that loads slowly because it’s too data-heavy. But if they collaborated with backend developers from the start, they could optimize data flow, ensuring the app runs smoothly while looking great. This teamwork saves time, effort, and ultimately leads to a better product.

Hardware-Software Partitioning

Chapter 3 of 4

Chapter Content

This is the central task of co-design, involving the precise allocation of system functions to either hardware (e.g., custom logic on an FPGA or ASIC) or software (e.g., code running on a CPU). The process is typically iterative, often starting with a tentative partition and refining it through analysis and simulation. Refined criteria for partitioning include computational intensity and parallelism, timing criticality, data throughput, control flow vs data flow, flexibility & upgradeability, power budget, cost vs volume, and IP availability.
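The "cost vs volume" criterion can be illustrated with a simple break-even calculation. This is a sketch under stated assumptions: it models total cost as a one-time engineering cost plus a per-unit cost, and the function names and all figures are hypothetical.

```python
import math


def total_cost(one_time_cost: float, unit_cost: float, volume: int) -> float:
    """One-time engineering cost plus per-unit cost times production volume."""
    return one_time_cost + unit_cost * volume


def break_even_volume(one_time_a, unit_a, one_time_b, unit_b):
    """Smallest volume at which option B becomes strictly cheaper than option A.

    Returns None if B's per-unit cost never undercuts A's.
    """
    if unit_b >= unit_a:
        return None
    threshold = (one_time_b - one_time_a) / (unit_a - unit_b)
    return max(0, math.floor(threshold) + 1)


# Option A: off-the-shelf processor running software (low up-front, higher per unit).
# Option B: custom ASIC (high up-front, lower per unit). Figures are invented.
volume = break_even_volume(50_000.0, 12.0, 500_000.0, 3.0)
print(volume)
print(total_cost(50_000.0, 12.0, volume), total_cost(500_000.0, 3.0, volume))
```

With these made-up figures the custom hardware only becomes cheaper above 50,000 units, so a low-volume product would favour the software-heavy partition.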

Detailed Explanation

Partitioning is the core activity of co-design: it determines whether each system function is implemented in hardware or in software. It typically begins with a rough outline in which certain functions are flagged for hardware while others are kept in software. As designers analyze performance data and run simulations, this allocation is refined. For example, a task that is computation-heavy and must deliver results quickly may be assigned to hardware. Conversely, features that change frequently or need flexibility are usually better suited to software. Other considerations, such as how much data must move between components and the associated costs, are also evaluated throughout this iterative process.
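The iterative refinement described here can be sketched as a simple greedy loop. This is one possible heuristic, not the method the lesson prescribes: it starts with everything in software and moves one function at a time into hardware until a deadline is met or the area budget runs out. The `Function` fields, the `partition` helper, and all numbers are hypothetical, and the model assumes functions run one after another and that each `hw_area` is positive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Function:
    name: str
    sw_time_ms: float   # estimated execution time in software
    hw_time_ms: float   # estimated execution time in dedicated logic
    hw_area: int        # hypothetical area cost if moved to hardware (must be > 0)
    must_stay_sw: bool = False  # e.g. features expected to change often


def partition(functions, deadline_ms, area_budget):
    """Greedy refinement: start all-software, then move one function at a time."""
    in_hw = set()
    area_used = 0

    def total_time():
        return sum(f.hw_time_ms if f.name in in_hw else f.sw_time_ms
                   for f in functions)

    while total_time() > deadline_ms:
        candidates = [
            f for f in functions
            if f.name not in in_hw
            and not f.must_stay_sw
            and f.sw_time_ms > f.hw_time_ms
            and area_used + f.hw_area <= area_budget
        ]
        if not candidates:
            break  # deadline cannot be met within the area budget
        # Pick the move that saves the most time per unit of area.
        best = max(candidates,
                   key=lambda f: (f.sw_time_ms - f.hw_time_ms) / f.hw_area)
        in_hw.add(best.name)
        area_used += best.hw_area

    return in_hw, total_time(), area_used


functions = [
    Function("fir_filter", 6.0, 0.5, 400),
    Function("aes_encrypt", 8.0, 1.0, 900),
    Function("protocol_parser", 3.0, 2.5, 300),
    Function("ui_logic", 2.0, 1.5, 200, must_stay_sw=True),
]
hw_set, time_ms, area = partition(functions, deadline_ms=10.0, area_budget=1500)
print(sorted(hw_set), time_ms, area)
```

In this toy run the filter and the encryption routine move to hardware, which brings the total from 19 ms to 6.5 ms, while the parser and the frequently changing UI logic stay in software. A real flow would replace the estimates with profiling and simulation results on each iteration.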

Examples & Analogies

Imagine a perfect pizza shop where the chef decides that pizza bases should be prepared using a traditional oven (hardware), while the toppings can be changed based on customer preferences through a digital menu (software). The initial idea might be that all processes happen in the kitchen, but through trial and error—and customer feedback—the chef learns that some toppings should be pre-prepared for faster service, reflecting a better allocation of tasks for efficiency.

Advanced Co-simulation and Co-verification Techniques

Chapter 4 of 4

Chapter Content

These techniques are indispensable for validating hardware-software interfaces and overall system behavior early in the design cycle. Transaction-Level Modeling (TLM) allows fast simulation of complex systems, Virtual Platforms enable early software development, FPGA Prototyping allows real-time emulation, and Mixed-Signal Simulation verifies interactions in systems with analog and digital components.

Detailed Explanation

Co-simulation and co-verification techniques play a crucial role in embedded system development. They allow designers to validate that hardware and software will work correctly together before any physical prototypes are made. Transaction-Level Modeling provides an abstract view that simplifies the simulation of component interactions, enabling quicker assessments. Virtual platforms provide a software model of the embedded hardware, allowing developers to start working on software even when the hardware is not yet available. FPGA prototyping runs the design on real programmable hardware at or near real-time speed, so performance can be verified under realistic load conditions. Tools for mixed-signal simulation enable the evaluation of systems incorporating both analog and digital signals. Together, these practices reduce integration issues and expose problems that arise when the hardware and software interact, ensuring a more reliable end product.
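To give a feel for the transaction-level idea, the following sketch models a tiny system in plain Python. It is not SystemC or the TLM-2.0 API; it only illustrates the abstraction, where each read or write is a single function call with an approximate latency instead of cycle-by-cycle signal activity. The class names, the memory map, and the latencies are all hypothetical.

```python
class Memory:
    """Target model: a block of word-addressed storage."""
    def __init__(self, size_words, latency_ns=10):
        self.data = [0] * size_words
        self.latency_ns = latency_ns

    def read(self, offset):
        return self.data[offset], self.latency_ns

    def write(self, offset, value):
        self.data[offset] = value
        return self.latency_ns


class AdderAccelerator:
    """Hypothetical peripheral: registers 0 and 1 are operands, register 2 is the sum."""
    def __init__(self, latency_ns=50):
        self.regs = [0, 0, 0]
        self.latency_ns = latency_ns

    def read(self, offset):
        return self.regs[offset], self.latency_ns

    def write(self, offset, value):
        self.regs[offset] = value
        self.regs[2] = self.regs[0] + self.regs[1]
        return self.latency_ns


class Bus:
    """Routes whole transactions by address and accumulates approximate time."""
    def __init__(self):
        self.targets = []    # list of (base, size, target)
        self.elapsed_ns = 0

    def attach(self, base, size, target):
        self.targets.append((base, size, target))

    def _decode(self, addr):
        for base, size, target in self.targets:
            if base <= addr < base + size:
                return target, addr - base
        raise ValueError(f"no target mapped at address {addr:#x}")

    def read(self, addr):
        target, offset = self._decode(addr)
        value, latency = target.read(offset)
        self.elapsed_ns += latency
        return value

    def write(self, addr, value):
        target, offset = self._decode(addr)
        self.elapsed_ns += target.write(offset, value)


ACC_BASE = 0x1000  # hypothetical memory map


def driver_add(bus, a, b):
    """The 'software' side: drives the accelerator through its registers."""
    bus.write(ACC_BASE + 0, a)
    bus.write(ACC_BASE + 1, b)
    return bus.read(ACC_BASE + 2)


bus = Bus()
bus.attach(0x0000, 256, Memory(256))
bus.attach(ACC_BASE, 3, AdderAccelerator())
result = driver_add(bus, 7, 35)
print(result, bus.elapsed_ns)
```

Because the driver code only sees addresses and register values, the same driver logic could later be exercised against a more detailed model or a prototype, which is the kind of early hardware-software validation this chapter describes.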

Examples & Analogies

Think of the process like rehearsing a play before it goes live. The cast can practice their lines and movements on a stage that mimics the real setting (like a virtual platform) before using elaborate scenery. They can also practice with the full production setup (like FPGA prototyping) to see how everything comes together, ensuring that the final performance is seamless.

Key Concepts

- Concurrent Design: Simultaneous hardware and software development to enhance integration and performance.

- Holistic Optimization: Focusing on the entire system rather than isolated components to improve overall efficiency.

- Cost-Effectiveness: The balance achieved by selecting the appropriate implementation for various functions to minimize costs.

Examples & Applications

A cryptographic function optimized in dedicated hardware can significantly outperform a software-only approach.

Using TLM allows developers to explore system behaviors rapidly, making critical design decisions early.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

Co-design leads the way, for faster products every day.

Stories

Imagine a team building a bridge. If the engineers (hardware) and painters (software) work separately, the bridge might look great but fail to stand. If they work together, they’ll ensure both stability and an appealing finish—the essence of co-design.

Acronyms

COST

Co-design Optimizes System Tasks.

Glossary

- Hardware-Software Co-design

An integrated approach to development that coordinates hardware and software design to optimize overall system performance.

- Partitioning

The process of allocating specific functions or tasks to either hardware or software based on various criteria.

- Co-simulation

A method allowing for the simultaneous simulation of hardware and software components to validate interactions and performance.

- Transaction-Level Modeling (TLM)

An abstraction technique that models communication between components as high-level transactions (for example, a complete read or write) rather than individual signal changes, improving simulation speed.

- System-Level Optimization

An approach focused on improving the performance of the entire system rather than just its components.
