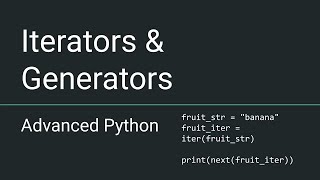

Generators and Iterators

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Iterators

Alright class, today we are diving into the world of iterators. Can anyone tell me what an iterator is?

Is it an object that allows us to loop through a sequence of data?

Exactly! An iterator represents a stream of data, returning one element at a time when requested. It's defined by two methods: `__iter__()` and `__next__()`. Can anyone explain what these methods do?

`__iter__()` returns the iterator object itself, and `__next__()` returns the next value.

Great! And when there are no more items left, `__next__()` raises a `StopIteration` exception. This is crucial for managing loops. Remember: `I` for Iterator and `I` for Internal state, as iterators maintain an internal state.

So, that's how the for loop internally works, right?

Spot on! The for loop calls `iter()` to get the iterator and `next()` to get each item until `StopIteration` occurs. Let’s summarize: iterators provide a manageable way to handle data sequences.

Understanding Generators

Now, let’s explore generators. What do you think a generator is?

Could it be a function that yields a sequence of values?

Exactly right! A generator is a special type of iterator defined using a function that yields values one at a time. Can anyone tell me how we define a generator function?

We use the `yield` statement inside a function.

Correct! And what's special about `yield` compared to return?

`yield` pauses the function and saves its state so a later call can resume where it left off, instead of terminating it.

Well done! This means generators are memory efficient, only producing values on demand. That's why they simplify iterator code, saving us from implementing those two methods manually. Remember: THINK `YIELD` for `Yes, I hold my state`!

Using `yield` and `yield from`

Let’s now look at how we can use `yield` in practice. Can someone provide an example?

You can create a function that yields numbers one by one.

Absolutely! Now, `yield from` was introduced to make it easier to delegate processing to another generator. Why do you think that is useful?

It can simplify nested loops.

Precisely! Let me share an example. If we want to yield from both a simple list and a generator expression, we can use `yield from` for cleaner code. Remember: `YIELD` is for data production, while `YIELD FROM` is delegation.

Practical Applications of Generators

Finally, let’s discuss practical applications. How can we use generators in projects?

For lazy evaluation, generating values only when needed.

Exactly! This is crucial for memory management. Can you think of a scenario where this could be beneficial?

Creating an infinite sequence, like counting numbers?

Yes! Generators can handle such scenarios because they never store the whole sequence in memory; only the current state is kept. Additionally, they can facilitate data processing pipelines, allowing us to filter and transform data smoothly. Remember: 'G' for 'Generators create efficiency'.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

This section covers iterators and the iterator protocol, and shows how generators, written as functions that contain `yield`, support memory-efficient data handling. It explains the advantages of generators and the syntax for defining them, and introduces coroutines, including sending values to generators. Practical applications such as lazy evaluation and data processing pipelines are also discussed.

Detailed

Generators and Iterators in Python

This section presents the intricacies of Python’s generators and iterators, essential constructs for handling data sequences efficiently.

Key Concepts:

Iterators and the Iterator Protocol

An iterator is an object representing a stream of data, returning one element at a time. To be considered an iterator, an object must implement two methods:

- `__iter__()` returns the iterator object itself.

- `__next__()` returns the next element, raising `StopIteration` when no more items exist.

Generators and Generator Functions

A generator is a specialized iterator created by calling a function that contains the `yield` keyword. It produces values only when requested, which simplifies iterator creation.

`yield` and `yield from`

The `yield` keyword pauses function execution to return a value, while `yield from` delegates part of a generator's operation to another generator or iterable, making it easier to work with multiple levels of generators.

Generator Expressions

Generator expressions are similar to list comprehensions but produce generator objects instead of lists, allowing for lazy evaluation and memory efficiency.

Coroutines and Two-Way Communication

Generators can also receive values, making them capable of functioning as coroutines that pause and resume execution with data exchange. A typical example is a simple accumulator that keeps a running total of the values sent to it.

Practical Applications

Generators are particularly useful for lazy evaluation, which makes it possible to represent infinite sequences without storing them in memory, and for building data processing pipelines that efficiently chain operations.
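A data processing pipeline of the kind described here could be sketched as a chain of small generator functions, each consuming the previous one lazily. The function names (`read_numbers`, `only_even`, `squared`) and the sample input are hypothetical and chosen only for illustration.

```python
def read_numbers(lines):
    """Parse non-empty lines into integers, one at a time."""
    for line in lines:
        line = line.strip()
        if line:
            yield int(line)


def only_even(numbers):
    """Filter stage: pass through even numbers only."""
    for n in numbers:
        if n % 2 == 0:
            yield n


def squared(numbers):
    """Transform stage: square each incoming number."""
    for n in numbers:
        yield n * n


# Hypothetical input; in practice this could be an open file object.
raw_lines = ["1\n", "2\n", "\n", "3\n", "4\n"]

# Nothing is computed until the pipeline is consumed.
pipeline = squared(only_even(read_numbers(raw_lines)))
print(list(pipeline))  # [4, 16]
```

Each stage holds only one item at a time, so the same chain should work on inputs far larger than this short list.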

Understanding these concepts is crucial for effective and pythonic coding, particularly when dealing with large datasets or infinite data streams.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to Generators and Iterators

Chapter 1 of 8

Chapter Content

Python’s power in handling data sequences efficiently comes largely from iterators and generators. These constructs enable elegant, memory-efficient looping over potentially large or infinite data streams.

Detailed Explanation

This introduction emphasizes the importance of iterators and generators in Python. Iterators provide a way to loop over data one element at a time, which is especially useful when dealing with large datasets that may not fit into memory. Generators, as a special type of iterator, allow for an even more streamlined approach to data iteration by yielding values on demand, thus saving memory and enabling the processing of potentially infinite sequences.

Examples & Analogies

Consider a restaurant that serves a multi-course meal. Instead of having all the food prepared and waiting on the table (like loading all data into memory), the kitchen prepares each dish as it's ordered, serving it when requested. This ensures that the kitchen is not overwhelmed and can serve the meal efficiently—similar to how generators produce values one by one.

Understanding Iterators

Chapter 2 of 8

Chapter Content

An iterator is an object that represents a stream of data; it returns one element at a time when asked. Python’s iterator protocol is a standard interface for these objects. An object is an iterator if it implements two methods:

- `__iter__()`: Returns the iterator object itself.

- `__next__()`: Returns the next item in the sequence. Raises `StopIteration` when no more items exist.

Detailed Explanation

An iterator in Python is a construct that allows us to iterate through a sequence of data. The iterator protocol consists of two key methods: `__iter__()` returns the iterator object itself, while `__next__()` fetches the next item from the sequence. The built-in functions `iter()` and `next()` call these methods. When there are no more items to return, `__next__()` raises a `StopIteration` exception, signaling that the sequence is exhausted. This mechanism underpins many looping constructs in Python, allowing seamless traversal of data.
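As a minimal sketch of the protocol, the class below implements both methods for a countdown, and a helper spells out roughly what a `for` loop does with `iter()` and `next()`. The names `Countdown` and `manual_for` are hypothetical and used only for illustration.

```python
class Countdown:
    """Iterator that counts down from `start` to 1."""

    def __init__(self, start):
        self.current = start

    def __iter__(self):
        # An iterator returns itself from __iter__().
        return self

    def __next__(self):
        # Signal exhaustion once the count reaches zero.
        if self.current <= 0:
            raise StopIteration
        value = self.current
        self.current -= 1
        return value


def manual_for(iterable):
    """Roughly what `for item in iterable` does, collecting the items."""
    collected = []
    iterator = iter(iterable)      # calls iterable.__iter__()
    while True:
        try:
            item = next(iterator)  # calls iterator.__next__()
        except StopIteration:
            break                  # the loop ends quietly
        collected.append(item)
    return collected


print(list(Countdown(3)))        # [3, 2, 1]
print(manual_for(Countdown(3)))  # [3, 2, 1]
```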

Examples & Analogies

Imagine a printing press that can only print one copy of a document at a time. Each time the printer is asked to print (like calling the 'next()' method), it prints one document. When all copies are printed, it indicates that no more documents are left to print—similar to how an iterator works until it runs out of items.

Introduction to Generators

Chapter 3 of 8

Chapter Content

A generator is a special type of iterator defined with a function that yields values one at a time, suspending its state between yields. Generators simplify creating iterators without needing classes. Use the yield keyword inside a function to define a generator.

Detailed Explanation

Generators are a user-friendly way to create iterators in Python, employing the 'yield' keyword to produce values one at a time. When the generator function is invoked, it doesn't execute immediately; instead, it returns a generator object. Each time we call 'next()', the function resumes its execution up to the next 'yield' where it then pauses and yields the value. This process allows the generator to maintain its state between yields, enabling efficiencies in memory usage and performance.
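The pause-and-resume behavior can be seen in a short sketch. The function name `count_up_to` follows the example mentioned elsewhere in this lesson; its body here is an assumption about what such a function might look like.

```python
def count_up_to(maximum):
    """Yield the integers from 1 to `maximum`, one per request."""
    n = 1
    while n <= maximum:
        yield n   # pause here and hand `n` to the caller
        n += 1    # execution resumes here on the next request


gen = count_up_to(3)   # no code in the body has run yet
print(next(gen))       # 1
print(next(gen))       # 2
print(list(gen))       # [3]
```

Calling `next()` once more on the exhausted generator would raise `StopIteration`, just as with a hand-written iterator.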

Examples & Analogies

Consider a chef cooking a dish who only prepares a portion when a guest orders it—the chef doesn't prepare the entire meal at once. Instead, they pause after preparing each serving to wait for the next order (yielding). This approach means the chef isn't overwhelmed making all meals simultaneously, similar to how generators yield values on demand.

Benefits of Generators

Chapter 4 of 8

Chapter Content

Benefits of Generators:

- Memory efficient: Values are produced on demand, not stored in memory.

- Lazy evaluation: They generate values only when requested.

- Simplify iterator code: No need to write `__iter__()` and `__next__()` methods by hand.

Detailed Explanation

One of the primary benefits of using generators is their memory efficiency. Since they yield values only as needed rather than storing a complete set of data in memory, they enable the handling of large datasets with a reduced memory footprint. Additionally, generators implement lazy evaluation, meaning calculations or iterations occur only when explicitly requested, further enhancing resource management. Moreover, with generators, developers can avoid manually defining `__iter__()` and `__next__()` methods, making code cleaner and easier to write.
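A rough way to see the memory difference is to compare the size of a generator object with the size of a list holding the same values. This is only a sketch: `sys.getsizeof` reports the size of the container object itself, not of the items it refers to, and the exact numbers vary between Python versions. The function name `squares` is hypothetical.

```python
import sys


def squares(limit):
    """Yield n*n for n in 0..limit-1 without building a list."""
    for n in range(limit):
        yield n * n


as_list = list(squares(100_000))   # all values stored at once
as_generator = squares(100_000)    # only the paused function state is stored

print(sys.getsizeof(as_list))       # grows with the number of items
print(sys.getsizeof(as_generator))  # small and independent of `limit`
```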

Examples & Analogies

Imagine a library that only brings out books on request rather than displaying all of them simultaneously. This not only saves space but also keeps the reading area uncluttered. Like this library, generators only provide data when requested, making the process more efficient and manageable.

Using Yield and Yield From

Chapter 5 of 8

Chapter Content

`yield` suspends the function, returning a value, and resumes later to continue. `yield from` delegates part of a generator's operations to another generator or iterable, simplifying nested loops.

Detailed Explanation

The 'yield' statement in a generator allows the function to return a value and suspend its execution until the next 'next()' call. This makes it possible for the generator to produce a series of results over time rather than all at once. The 'yield from' expression, introduced in Python 3.3, allows a generator to yield all values from another iterable or generator, simplifying the code needed for nested loops and making it easier to delegate operations to other generators.
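The two forms can be compared in a small sketch. The first function delegates to a list and then to a generator expression; the second uses `yield from` recursively to flatten nested lists. Both function names are hypothetical.

```python
def letters_then_squares():
    """Delegate first to a list, then to a generator expression."""
    yield from ["a", "b"]
    yield from (n * n for n in range(3))


def flatten(nested):
    """Yield the leaf items of arbitrarily nested lists."""
    for item in nested:
        if isinstance(item, list):
            # Delegate to a sub-generator instead of writing an inner loop.
            yield from flatten(item)
        else:
            yield item


print(list(letters_then_squares()))        # ['a', 'b', 0, 1, 4]
print(list(flatten([1, [2, [3, 4]], 5])))  # [1, 2, 3, 4, 5]
```

Without `yield from`, each delegation would need an explicit `for x in ...: yield x` loop.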

Examples & Analogies

Think of a music playlist that plays songs one after another. When a song finishes, it automatically moves to the next track. Similarly, 'yield' gives back one piece of data and pauses, while 'yield from' allows a playlist to play songs from another playlist without manual intervention.

Generator Expressions

Chapter 6 of 8

Chapter Content

Generator expressions are similar to list comprehensions but produce generators instead of lists. Advantages:

- They are lazy, producing items on demand.

- More memory-efficient for large data compared to list comprehensions.

Detailed Explanation

Generator expressions offer a concise way to create generators using a syntax similar to list comprehensions. They allow for on-the-fly generation of values without storing the entire result in memory, making them an ideal choice when dealing with large datasets. By yielding items one at a time as they are requested, generator expressions optimize memory usage and improve performance.
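As a brief illustration, a generator expression uses parentheses where a list comprehension uses square brackets. The variable names below are arbitrary.

```python
# Passed directly to a function, the extra parentheses can be dropped.
total = sum(n * n for n in range(5))
print(total)  # 30

# A generator expression is consumed once, item by item.
evens = (n for n in range(10) if n % 2 == 0)
first = next(evens)
rest = list(evens)
again = list(evens)

print(first)  # 0
print(rest)   # [2, 4, 6, 8]
print(again)  # [] because the generator is already exhausted
```

The one-shot behavior is the trade-off for laziness: if the values are needed more than once, a list may be the better choice.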

Examples & Analogies

Imagine a vending machine that serves drinks when requested rather than preparing all drinks at once. The vending machine is efficient and only provides one drink at a time, which is similar to how generator expressions produce data only when asked for, conserving resources.

Coroutines Basics and Sending Values to Generators

Chapter 7 of 8

Chapter Content

Generators can also receive values, allowing two-way communication. This feature enables coroutines — functions that can pause and resume with data exchange.

Detailed Explanation

Coroutines extend the functionality of generators by allowing them to not only yield values but also receive input. Using the 'send()' method, a caller can send a value to a generator at its yield point, hence enabling interactive communication between the generator and the caller. This capability turns generators into coroutines, which are versatile constructs that can manage states and workflows more efficiently.
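The two-way exchange can be sketched with a running-total accumulator. The function name `accumulator` is hypothetical; the key points are that the generator must be advanced to its first `yield` before `send()` can deliver a value, and that the value passed to `send()` becomes the result of the `yield` expression inside the generator.

```python
def accumulator():
    """Keep a running total of the values sent in."""
    total = 0
    while True:
        # `yield total` hands the total out; send(x) makes `value` equal x.
        value = yield total
        if value is not None:
            total += value


acc = accumulator()
print(next(acc))     # 0, runs to the first yield ("priming")
print(acc.send(10))  # 10
print(acc.send(5))   # 15
acc.close()          # stop the generator when finished
```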

Examples & Analogies

Think of a conversation between two people. Each person takes turns speaking and can respond to what the other has said. In coroutines, the generator can yield a value and pause, waiting for input before continuing the conversation. This back-and-forth interaction reflects the flexible nature of coroutines.

Practical Applications of Generators

Chapter 8 of 8

Chapter Content

Generators allow computation of values only when needed, improving memory and CPU efficiency. Example: Generating infinite sequences.

Detailed Explanation

Generators are practical for representing infinite sequences or streams of data that may otherwise cause memory overflow if computed all at once. By yielding values as they are needed, generators help in efficiently handling ongoing data processing tasks, such as managing user inputs or processing large datasets incrementally.
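An infinite sequence can be sketched as a generator with an endless loop, with `itertools.islice` taking a finite slice of it. The function names `naturals` and `fibonacci` are hypothetical.

```python
from itertools import islice


def naturals(start=1):
    """Yield start, start + 1, start + 2, ... without end."""
    n = start
    while True:
        yield n
        n += 1


def fibonacci():
    """Yield the Fibonacci numbers 0, 1, 1, 2, 3, ... without end."""
    a, b = 0, 1
    while True:
        yield a
        a, b = b, a + b


# islice stops after the requested number of items, so the loops never run away.
print(list(islice(naturals(), 5)))   # [1, 2, 3, 4, 5]
print(list(islice(fibonacci(), 8)))  # [0, 1, 1, 2, 3, 5, 8, 13]
```

Calling `list()` directly on either generator would never finish, so an infinite generator should always be consumed with an explicit bound or a `break`.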

Examples & Analogies

Imagine a factory assembly line that produces widgets only when an order comes in. The factory doesn’t create a giant backlog of widgets all at once but rather makes them on-demand. This not only conserves space but also keeps production efficient, just like generators do with data.

Key Concepts

- Iterators and the Iterator Protocol

An iterator is an object representing a stream of data, returning one element at a time. To be considered an iterator, an object must implement two methods: `__iter__()`, which returns the iterator object itself, and `__next__()`, which returns the next element, raising `StopIteration` when no more items exist.

- Generators and Generator Functions

A generator is a specialized iterator created by calling a function that contains the `yield` keyword. It produces values only when requested, which simplifies iterator creation.

- `yield` and `yield from`

The `yield` keyword pauses function execution to return a value, while `yield from` delegates part of a generator's operation to another generator or iterable, making it easier to work with multiple levels of generators.

- Generator Expressions

Generator expressions are similar to list comprehensions but produce generator objects instead of lists, allowing for lazy evaluation and memory efficiency.

- Coroutines and Two-Way Communication

Generators can also receive values, making them capable of functioning as coroutines that pause and resume execution with data exchange. A typical example is a simple accumulator that keeps a running total of the values sent to it.

- Practical Applications

Generators are particularly useful for lazy evaluation, which makes it possible to represent infinite sequences without storing them in memory, and for building data processing pipelines that efficiently chain operations.

Understanding these concepts is crucial for effective and pythonic coding, particularly when dealing with large datasets or infinite data streams.

Examples & Applications

An example of an iterator is a class implementing `__iter__()` and `__next__()`, like a countdown timer.

A generator function example is `count_up_to()`, which yields values from 1 to a given maximum.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

If you want to count down without a frown, use an iterator, don't let the memory drown.

Stories

Once upon a time in Python land, a generator smiled as it held your hand, yielding values, so light and grand, it paved the way for clever code that's planned!

Memory Tools

Remember: G for Generator, M for Memory-efficient, L for Lazy evaluation.

Acronyms

I.Y.G

Yield Generators for efficient coding.

Glossary

- Iterator

An object that represents a stream of data, returning one element at a time.

- Iterator Protocol

A standard interface requiring the implementation of two methods: `__iter__()` and `__next__()`.

- Generator

A type of iterator defined by a function that uses `yield` to produce values lazily.

- Yield

Used in a generator function to suspend execution and return a value.

- Yield from

Delegates part of a generator's operations to another generator or iterable.
