Scalability and Power Efficiency

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Scalability

Today, we are diving into the concept of scalability. In AI circuits, scalability refers to the ability of a system to handle increasing amounts of work or load. As AI models grow, why do you think scalability is becoming a crucial topic?

Because larger models need more power and faster processing capabilities, right?

Exactly! And with that growth, we must address power efficiency too. Can anyone explain why power efficiency is important?

Power efficiency helps in reducing costs and is better for the environment!

Great points! Remember, balancing power and performance is key. Think of the acronym 'PEP' — Power, Efficiency, Performance. Let's keep that in mind!

Challenges in AI Scalability

Now let's dig deeper into the challenges of scalability. What specific technical issues do you think arise as we scale AI circuits?

One issue could be heat generation from increased processing power.

And there might be a limitation in the hardware. Like, traditional circuits might not handle the load well.

Right! Heat management is a huge factor and inadequate hardware can cause bottlenecks. So, what innovations might help overcome these challenges?

Energy-efficient architectures like low-power FPGAs or neuromorphic circuits?

Exactly! These innovations are essential for efficient scaling. Let's remember 'EAP' — Energy, Architecture, Performance.

Energy-Efficient Architectures

Now, let's explore energy-efficient architectures. Why are they critical for scalability in AI circuits?

Because they help manage power usage while still meeting the demands of processing!

Great insight! For instance, low-power FPGAs can be reconfigured to match the needs of a specific workload. Can anyone tell me about neuromorphic circuits?

They mimic the brain's structure and function, allowing for efficient processing by only using power when needed?

Absolutely! This leads to significant power savings and improved efficiency. Remember the acronym 'NEM' — Neuromorphic Energy Management for ease of recall.

Future Directions

Looking ahead, what future innovations do you think will play a critical role in overcoming challenges in AI scalability?

I think quantum computing could make a difference, as it has potential for high-speed processing.

And further advancements in neuromorphic computing can lead to better energy efficiency.

Exactly! As we develop these technologies, keep the reminder 'FAN' in mind: Future Architecture Needs. Stay aware of what is coming!

Introduction & Overview

Standard

This section emphasizes the difficulties in scaling AI circuits as their complexity increases and outlines the importance of developing energy-efficient hardware architectures to address these challenges.

Detailed

Scalability and Power Efficiency

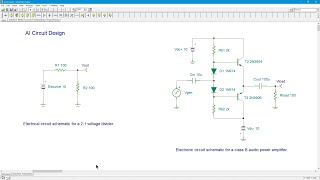

As AI models become larger and more intricate, scaling AI circuits while preserving power efficiency presents significant challenges. Efficient hardware development is crucial to ensure that AI systems can handle increasing complexity without incurring excessive power consumption. This section highlights the importance of innovations in energy-efficient architectures such as low-power FPGAs, neuromorphic circuits, and quantum computing in achieving this goal. By focusing on these advancements, the field can ensure that future AI systems are both scalable and sustainable.

Audio Book

The Challenge of Scaling AI Circuits

Chapter 1 of 2

Chapter Content

As AI models grow in size and complexity, scaling AI circuits while maintaining power efficiency becomes increasingly difficult. Developing hardware that can scale effectively without consuming excessive power is a key challenge for future AI systems.

Detailed Explanation

This chapter discusses the difficulties faced in AI circuit design as AI models become larger and more complex. Here, 'scaling' means that hardware must support growing models without a matching growth in power draw. For instance, an application that processes large datasets needs hardware that can handle the workload without overheating or consuming excessive energy. This matters because many AI systems are deployed where the power supply is limited, such as mobile devices or remote sensors. Finding a balance between power consumption and computational capability is therefore crucial.
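The balance described above can be made concrete with a small sketch. It compares a few candidate devices by throughput per watt and picks the fastest one that fits a power budget. The device names and every number below are hypothetical and chosen only for illustration; they are not measurements of real hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    throughput_tops: float  # hypothetical throughput, tera-operations per second
    power_watts: float      # hypothetical average power draw


def efficiency(acc):
    """Return throughput per watt (TOPS/W)."""
    return acc.throughput_tops / acc.power_watts


def best_within_budget(candidates, power_budget_watts):
    """Return the highest-throughput candidate that fits the budget, or None."""
    feasible = [c for c in candidates if c.power_watts <= power_budget_watts]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c.throughput_tops)


# Illustrative values only (assumptions, not vendor data).
CANDIDATES = [
    Accelerator("large_gpu", throughput_tops=200.0, power_watts=300.0),
    Accelerator("low_power_fpga", throughput_tops=10.0, power_watts=5.0),
    Accelerator("microcontroller", throughput_tops=0.1, power_watts=0.5),
]

if __name__ == "__main__":
    for acc in CANDIDATES:
        print(f"{acc.name}: {efficiency(acc):.2f} TOPS/W")
    # A remote sensor with a 10 W budget cannot use the fastest device.
    print(best_within_budget(CANDIDATES, power_budget_watts=10.0))
```

With these made-up figures, the device with the highest raw throughput is not the one with the best throughput per watt, and it is excluded outright once the power budget is tight. That is the trade-off a mobile or remote deployment has to resolve.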

Examples & Analogies

Imagine trying to fit a larger engine in a small car. You want the car to go faster (like an AI model running complex algorithms), but if the engine is too big, it will use too much fuel (analogous to power consumption). Designers must find an engine that performs well without needing too much fuel, just as engineers must create AI hardware that scales up while keeping energy use in check.

Energy-Efficient Architectures

Chapter 2 of 2

Chapter Content

Energy-Efficient Architectures: Innovations in energy-efficient computing, such as low-power FPGAs, quantum computing, and neuromorphic circuits, will be essential in ensuring that AI systems can scale while minimizing energy consumption.

Detailed Explanation

This chapter emphasizes the importance of developing energy-efficient computing architectures. It highlights three technologies: low-power Field-Programmable Gate Arrays (FPGAs), quantum computing, and neuromorphic circuits. Each aims to reduce energy consumption while still providing adequate computing power. Low-power FPGAs are reconfigurable circuits that can be programmed for a specific task and, for that task, can consume less power than a general-purpose processor. Quantum computing uses quantum bits (qubits) and may solve certain classes of problems in far fewer steps than classical machines, which could reduce the energy needed for those tasks. Neuromorphic circuits replicate brain-like, event-driven processing, which can also be more energy-efficient in certain AI applications.

Examples & Analogies

Think of energy-efficient architecture like using LED light bulbs instead of traditional incandescent bulbs. Both provide light (computing power), but LED bulbs use significantly less energy to do so. Just as switching to LEDs helps reduce electricity usage in homes, adopting energy-efficient architectures helps reduce power consumption in AI hardware, allowing it to perform complex tasks without draining resources.

Key Concepts

- Scalability: The ability of AI systems to handle increasing complexity and workload without losing performance.

- Power Efficiency: The ability to deliver high performance while minimizing energy consumption.

- Neuromorphic Circuits: Hardware architectures that emulate the neural structures of the brain to increase efficiency.

- FPGAs: Flexible hardware platforms that can be tailored to specific computational tasks.

- Quantum Computing: A computational approach that leverages quantum mechanics to solve certain classes of problems faster.

Examples & Applications

Using low-power FPGAs, organizations can scale their AI systems to handle more data without significantly increasing power consumption.

Neuromorphic circuits can draw power only when they are processing data, leading to substantial energy savings.
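The event-driven idea in the second example can be sketched as a toy energy model. It is a simplification under stated assumptions: every update costs the same fixed amount of energy, idle and leakage power are ignored, and the activity trace is invented for illustration. It does not model any real neuromorphic chip.

```python
def always_on_energy(activity, energy_per_update):
    """Every unit is updated at every time step, whether or not it has input."""
    total_updates = sum(len(step) for step in activity)
    return total_updates * energy_per_update


def event_driven_energy(activity, energy_per_update):
    """Only units that received an event (a 1) in a step are updated."""
    total_events = sum(sum(step) for step in activity)
    return total_events * energy_per_update


# Hypothetical activity trace: 4 time steps, 5 units; 1 = event, 0 = idle.
ACTIVITY = [
    [0, 1, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [1, 0, 0, 1, 0],
    [0, 0, 0, 0, 0],
]
ENERGY_PER_UPDATE = 1.0  # arbitrary units (assumption)

dense = always_on_energy(ACTIVITY, ENERGY_PER_UPDATE)
sparse = event_driven_energy(ACTIVITY, ENERGY_PER_UPDATE)

if __name__ == "__main__":
    print(f"always-on: {dense}, event-driven: {sparse}")
```

In this toy model the saving depends entirely on how sparse the activity is. If every unit fired at every step, the two totals would be equal.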

Memory Aids

Rhymes

To scale and not to fail, keep power efficiency on sale!

Stories

Imagine a factory that builds products. If its machines (AI circuits) can keep up with growing orders without consuming too much power (energy efficiency), the factory runs smoothly without causing environmental harm.

Memory Tools

Think 'PEP' for Power, Efficiency, Performance when talking about AI scalability.

Acronyms

Use 'EAP' for Energy, Architecture, and Performance while addressing scalability.

Glossary

- Scalability

The capability of a system to handle a growing amount of work, or to be enlarged to accommodate that growth.

- Power Efficiency

The effective use of energy, ensuring that a system consumes minimal power while still delivering performance.

- Neuromorphic Circuits

Circuits designed to mimic the operations of the human brain, aiming for efficient computation.

- FPGAs

Field-Programmable Gate Arrays, a type of hardware that can be programmed to perform specific tasks efficiently.

- Quantum Computing

A type of computation based on quantum-mechanical phenomena, which promises to solve certain problems much faster than classical computers.
