Evaluation of Recommender Systems

Interactive Audio Lesson

Offline Evaluation of Recommender Systems

Today, let's explore the concept of offline evaluation. Can anyone tell me what we mean by offline evaluation in the context of recommender systems?

Is that when we use past user data to see how a recommender would have performed?

Exactly! We're using historical interactions to simulate performance. Two key metrics here are precision and recall. Remember: precision is about the quality of what we recommend, while recall is about how much of what is relevant we manage to retrieve.

So, how does precision differ from recall?

Good question! Precision answers how many recommended items were actually relevant, while recall answers how many relevant items were actually recommended. Think of them as two sides of the same coin. Can anyone give me an example of where we might use these metrics?

In an online shopping scenario, if I recommend five items but only three are bought, that affects precision.

That's right! If we count a purchase as a relevant hit, precision in your example is 3 out of 5, or 0.6. Let’s wrap up: offline evaluation uses historical data and foundational metrics such as precision and recall to measure how well a recommender would have performed.

Online Evaluation Techniques

Now, let’s transition to online evaluation methods. Can anyone explain what A/B testing is?

It's when we compare two versions of a web page or app to see which performs better.

Correct! A/B testing helps us evaluate how users interact with different recommendations in real time. What metrics can we use here?

I remember click-through rate, how often users click on recommendations presented to them.

Spot on! CTR is crucial, but we also look at conversion rates and dwell time. Dwell time measures how long users engage with the recommendations. Can someone summarize why these evaluations are essential?

It’s crucial for improving user experience and ensuring system recommendations are effective based on real interactions.

Excellent summary! Online evaluation techniques such as A/B testing use metrics like CTR, conversion rate, and dwell time to measure how well our recommendations serve users' actual preferences.

Integrating Offline and Online Evaluations

Let’s discuss the integration of both evaluation methods. Why might we want to combine offline and online evaluations?

Combining them gives a well-rounded view of performance since offline can guide changes before online testing.

Exactly! Offline evaluations also let us refine algorithms before we expose real users to them. Together, the two form a feedback loop for improvement. Can anyone list some key metrics we could evaluate offline?

Precision, recall, RMSE, and MAE!

Great memory! And for online evaluation? What metrics stand out?

CTR, conversion rate, and dwell time!

Fantastic! Tracking both sets of metrics lets us catch problems cheaply offline and then confirm improvements with real users online.

Introduction & Overview

Quick Overview

Standard

In evaluating recommender systems, various metrics and methodologies are employed to assess performance effectively. Offline evaluations typically utilize historical data, while online evaluations rely on real-time A/B testing. Key metrics include precision, recall, and click-through rate, among others.

Detailed

Evaluation of Recommender Systems

The evaluation of recommender systems is vital for understanding their effectiveness and improving their performance. Evaluations are divided into two main categories: offline and online evaluation.

Offline Evaluation

Offline evaluation involves using historical data to simulate how the recommender system would have performed. This allows developers to assess potential changes without impacting current users. Key metrics include:

- Precision: Measures the ratio of true positive recommendations to the total number of recommended items.

- Recall: Measures the proportion of all relevant items that the system actually recommended (true positives divided by the total number of relevant items).

- F1-Score: The harmonic mean of precision and recall, providing a single metric for performance evaluation.

- Mean Absolute Error (MAE): Calculates the average of the absolute errors between predicted ratings and actual ratings.

- Root Mean Squared Error (RMSE): The square root of the average squared difference between predicted and actual ratings. Because errors are squared before averaging, RMSE penalizes large errors more heavily than MAE.

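- AUC-ROC: Area Under the Receiver Operating Characteristic curve, indicating how well the model distinguishes relevant from irrelevant items. It equals the probability that a randomly chosen relevant item is scored higher than a randomly chosen irrelevant one.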

- Mean Reciprocal Rank (MRR): The average, across multiple queries or users, of the reciprocal rank (1/rank) of the first relevant recommendation. Higher values mean relevant items appear nearer the top.

These metrics provide a comprehensive view of system strengths and weaknesses, helping to tune performance.
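A minimal Python sketch of the first three metrics, assuming each user has a ranked list of recommended item IDs and a set of items treated as relevant (for example, items they later bought). The top-`k` cutoff and the function names `precision_at_k`, `recall_at_k`, and `f1` are illustrative assumptions, not a specific library's API.

```python
from typing import Sequence, Set


def precision_at_k(recommended: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k recommended items that are relevant."""
    top_k = recommended[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for item in top_k if item in relevant)
    return hits / len(top_k)


def recall_at_k(recommended: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of all relevant items that appear in the top-k recommendations."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / len(relevant)


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0 when both are 0."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # Hypothetical data: 5 recommendations, 4 relevant items, 3 of them recommended
    recommended = ["a", "b", "c", "d", "e"]
    relevant = {"a", "c", "e", "z"}
    p = precision_at_k(recommended, relevant, k=5)
    r = recall_at_k(recommended, relevant, k=5)
    print(f"precision={p:.2f} recall={r:.2f} f1={f1(p, r):.2f}")
    # prints: precision=0.60 recall=0.75 f1=0.67
```

Dividing precision by the number of items actually returned (rather than by `k`) is a design choice here; some definitions always divide by `k`.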

Online Evaluation

Online evaluation involves real-time assessments, primarily using techniques like A/B testing to monitor user interaction with the recommender system. Metrics examined during online evaluations typically include:

- Click Through Rate (CTR): The ratio of clicks on recommendations to the number of times those recommendations were viewed.

- Conversion Rate: Tracks how many clicks on recommendations resulted in a desired outcome, such as a purchase.

- Dwell Time: Measures how long users engage with the recommended content, providing insight into user satisfaction and recommendation relevance.
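As a rough illustration, the three online metrics can be computed from a log of recommendation impressions. The `Impression` record and its fields are a hypothetical log schema; real systems define clicks, conversions, and dwell time in their own ways (for example, whether conversion rate is measured per click or per visitor, and whether dwell time is averaged per click or summed per user).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Impression:
    """One recommendation shown to a user (hypothetical log schema)."""
    clicked: bool
    converted: bool        # e.g. a purchase that followed the click
    dwell_seconds: float   # time spent on the item after clicking; 0 if not clicked


def online_metrics(log: List[Impression]) -> Dict[str, float]:
    impressions = len(log)
    clicks = [e for e in log if e.clicked]
    # Conversions are only counted when they follow a click on the recommendation
    conversions = sum(1 for e in clicks if e.converted)
    return {
        # CTR: clicks divided by the number of times recommendations were shown
        "ctr": len(clicks) / impressions if impressions else 0.0,
        # Conversion rate: share of clicks that led to the desired outcome
        "conversion_rate": conversions / len(clicks) if clicks else 0.0,
        # Average dwell time over clicked recommendations
        "avg_dwell_seconds": (
            sum(e.dwell_seconds for e in clicks) / len(clicks) if clicks else 0.0
        ),
    }


if __name__ == "__main__":
    log = [
        Impression(clicked=True, converted=True, dwell_seconds=120.0),
        Impression(clicked=True, converted=False, dwell_seconds=30.0),
        Impression(clicked=False, converted=False, dwell_seconds=0.0),
        Impression(clicked=False, converted=False, dwell_seconds=0.0),
    ]
    print(online_metrics(log))
```

With this toy log, CTR is 2/4, conversion rate is 1/2, and average dwell time is 75 seconds.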

Incorporating both offline and online evaluation methods creates a robust framework for continuous improvement, ensuring the recommender systems evolve with user needs.

Audio Book

Offline Evaluation

Chapter 1 of 3

Chapter Content

• Use historical data to simulate performance.

Detailed Explanation

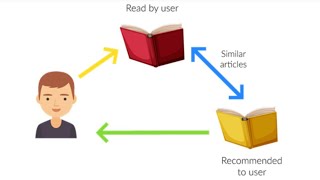

Offline evaluation refers to assessing how well a recommender system would perform using historical data, which means utilizing past interactions between users and items. This approach allows researchers and developers to understand the effectiveness and accuracy of their algorithms before deploying them in real-world scenarios. It helps to analyze how well the system makes predictions based on previously collected data without needing to involve users at that moment.
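A minimal sketch of this replay idea, under simplifying assumptions: the interaction log is a list of hypothetical `(user, item, timestamp)` tuples, everything before a cutoff time is treated as training data, and a deliberately simple most-popular recommender stands in for a real algorithm. Each user's later (held-out) interactions are then compared with the recommendations using precision@k.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

# Hypothetical log format: (user_id, item_id, timestamp)
Interaction = Tuple[str, str, int]


def temporal_split(
    log: List[Interaction], cutoff: int
) -> Tuple[List[Interaction], List[Interaction]]:
    """Interactions before the cutoff train the model; later ones are held out."""
    train = [row for row in log if row[2] < cutoff]
    test = [row for row in log if row[2] >= cutoff]
    return train, test


def most_popular(train: List[Interaction], k: int) -> List[str]:
    """Placeholder recommender: the k items with the most training interactions."""
    counts = Counter(item for _, item, _ in train)
    return [item for item, _ in counts.most_common(k)]


def simulate_precision_at_k(log: List[Interaction], cutoff: int, k: int) -> float:
    """Replay history: recommend from the past, score against what users did later."""
    train, test = temporal_split(log, cutoff)
    recs = set(most_popular(train, k))
    held_out: Dict[str, Set[str]] = {}
    for user, item, _ in test:
        held_out.setdefault(user, set()).add(item)
    if not recs or not held_out:
        return 0.0
    # Precision@k for each user with held-out interactions, averaged over users
    scores = [len(items & recs) / len(recs) for items in held_out.values()]
    return sum(scores) / len(scores)


if __name__ == "__main__":
    log = [
        ("u1", "a", 1), ("u2", "a", 2), ("u3", "a", 3),
        ("u1", "b", 2), ("u2", "b", 3), ("u3", "c", 4),
        ("u1", "b", 6), ("u2", "d", 7), ("u3", "a", 8), ("u3", "c", 9),
    ]
    print(simulate_precision_at_k(log, cutoff=5, k=2))
```

A fuller evaluation would usually also exclude items a user already interacted with during training and plug in the candidate algorithm instead of the popularity baseline; both are left out here for brevity.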

Examples & Analogies

Think of offline evaluation like rehearsing for a play. Actors read through their lines and practice their scenes based on scripts written beforehand. This preparation, without an audience, allows them to identify and fix potential issues before they perform in front of live viewers.

Evaluation Metrics

Chapter 2 of 3

Chapter Content

Metrics:

• Precision & Recall

• F1-Score

• Mean Absolute Error (MAE)

• Root Mean Squared Error (RMSE)

• AUC-ROC

• Mean Reciprocal Rank (MRR)

Detailed Explanation

This section lists various metrics used to evaluate recommender systems. Precision measures the proportion of correctly recommended items out of all recommended items. Recall assesses the proportion of correctly recommended items out of all relevant items. The F1-Score is the harmonic mean of precision and recall, providing a balance between the two. Mean Absolute Error (MAE) and Root Mean Squared Error (RMSE) quantify the difference between predicted ratings and actual ratings, helping to measure accuracy. AUC-ROC evaluates how well the recommender system can distinguish between positive and negative instances, while Mean Reciprocal Rank (MRR) focuses on the position of the first relevant recommendation.
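The two rating-error metrics are short enough to write directly. In this plain-Python sketch, the two hypothetical prediction sets have the same MAE but different RMSE, which shows how RMSE weights one large error more heavily than several small ones. The helper names are illustrative.

```python
import math
from typing import Sequence


def _check(predicted: Sequence[float], actual: Sequence[float]) -> None:
    if len(predicted) != len(actual) or not actual:
        raise ValueError("predicted and actual must be non-empty and the same length")


def mae(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Mean absolute error between predicted and actual ratings."""
    _check(predicted, actual)
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def rmse(predicted: Sequence[float], actual: Sequence[float]) -> float:
    """Root mean squared error: errors are squared before averaging."""
    _check(predicted, actual)
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual))


if __name__ == "__main__":
    actual = [4.0, 3.0, 5.0, 5.0]
    one_big_miss = [4.0, 3.0, 5.0, 2.0]   # errors: 0, 0, 0, 3
    several_small = [5.0, 4.0, 4.0, 5.0]  # errors: 1, 1, 1, 0
    for name, pred in [("one big miss", one_big_miss), ("several small", several_small)]:
        print(f"{name}: MAE={mae(pred, actual):.3f} RMSE={rmse(pred, actual):.3f}")
```

Both prediction sets have an MAE of 0.75, while RMSE is 1.5 for the single large miss and about 0.87 for the several small ones.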

Examples & Analogies

Consider a teacher grading students' essays. Precision would be the percentage of the essays the teacher praised that really are top essays. Recall would be the percentage of all excellent essays that the teacher pointed out. The F1-Score balances the two, rewarding a teacher who is neither too lenient (praising everything, which hurts precision) nor too strict (praising almost nothing, which hurts recall). MAE and RMSE would represent how far the teacher's grades were from the grades the rubric says each essay deserves.
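For the two ranking metrics, a minimal sketch follows. MRR averages 1/rank of the first relevant item per query, with a query that has no relevant item contributing 0. AUC-ROC is computed here from its pairwise definition: the fraction of (relevant, irrelevant) pairs in which the relevant item gets the higher score, counting ties as half. This pairwise loop is quadratic in the number of items and is meant only for illustration; the function names and toy data are assumptions.

```python
from typing import List, Sequence, Set


def mean_reciprocal_rank(
    ranked_lists: List[Sequence[str]], relevant_sets: List[Set[str]]
) -> float:
    """Average over queries of 1/rank of the first relevant item (0 if none is found)."""
    if len(ranked_lists) != len(relevant_sets):
        raise ValueError("need one relevant set per ranked list")
    if not ranked_lists:
        return 0.0
    total = 0.0
    for ranked, relevant in zip(ranked_lists, relevant_sets):
        for rank, item in enumerate(ranked, start=1):
            if item in relevant:
                total += 1.0 / rank
                break
    return total / len(ranked_lists)


def auc_roc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Probability that a random relevant item outscores a random irrelevant one."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one relevant and one irrelevant item")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # First relevant item at rank 2, at rank 1, and not at all: (1/2 + 1 + 0) / 3
    print(mean_reciprocal_rank(
        [["x", "a", "y"], ["b", "z"], ["p", "q"]],
        [{"a"}, {"b"}, {"r"}],
    ))  # prints 0.5
    print(auc_roc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))  # prints 0.75
```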

Online Evaluation

Chapter 3 of 3

Chapter Content

• A/B testing in real-time environments.

• Metrics: CTR (Click Through Rate), conversion rate, dwell time

Detailed Explanation

Online evaluation occurs in real-time settings and involves testing the recommender system with actual users. One common method is A/B testing, where users are split into groups, and each group experiences different versions of the recommender system. This helps determine which version performs better based on specific metrics. Click Through Rate (CTR) measures how often users click on recommendations, while conversion rate tracks how many users follow through and take desired actions, such as making a purchase. Dwell time refers to how long users engage with the recommended items.
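A minimal sketch of two pieces of an A/B test, under stated assumptions. Users are split deterministically by hashing a hypothetical user ID together with an experiment name, so each user always sees the same variant. The CTRs of the two groups are then compared with a two-proportion z-test, which is one common choice rather than the only one. The experiment name, counts, and function names are illustrative.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str = "recs-v2-test") -> str:
    """Deterministically assign a user to variant 'A' or 'B' by hashing their ID."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def ctr_z_score(clicks_a: int, views_a: int, clicks_b: int, views_b: int) -> float:
    """Two-proportion z statistic for the CTR difference (B minus A), pooled variance."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    return (p_b - p_a) / se


if __name__ == "__main__":
    print(assign_variant("user-42"))  # same answer on every run
    # Hypothetical results: A has a 2.0% CTR, B has 2.6%, each over 10,000 views
    z = ctr_z_score(200, 10_000, 260, 10_000)
    # |z| > 1.96 corresponds to p < 0.05 for a two-sided test
    print(f"z = {z:.2f}")
```

With these made-up numbers z is about 2.83, so B's higher CTR would be unlikely to be chance alone at the 5% level.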

Examples & Analogies

Imagine a restaurant trying out two different menus. It serves Menu A to half the customers and Menu B to the other half. By observing how often diners who see a featured dish actually order it (like CTR), how many of those orders end in a completed, paid meal (like conversion rate), and how long diners stay at their tables (like dwell time), the restaurant can decide which menu works better in the real world.

Key Concepts

- Offline Evaluation: Use of historical data to assess recommender system performance.

- Online Evaluation: Real-time testing methods, such as A/B testing, that analyze how users interact with recommendations.

- Precision: The fraction of recommended items that are relevant.

- Recall: The fraction of relevant items that were recommended.

- A/B Testing: A method that compares two versions of a recommender system by showing each version to a separate group of users and measuring their engagement.

Examples & Applications

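On an e-commerce platform, if a system suggests 5 products and 3 are purchased, precision is 3/5 = 0.6.

If a user would enjoy 10 movies in the catalogue and the recommender's list includes 7 of them, recall is 7/10 = 0.7. (If the recommender instead showed 10 movies and the user enjoyed 7, that would be a precision of 0.7, not a recall.)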
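The same two calculations can be written as formulas, where R is the set of recommended items and T is the set of relevant items (purchased products, or movies the user enjoys). The recall case assumes the user would enjoy 10 movies in total and the list contains 7 of them: recall divides by the number of relevant items, not by the number of items shown.

```latex
\text{Precision} = \frac{|R \cap T|}{|R|} = \frac{3}{5} = 0.6,
\qquad
\text{Recall} = \frac{|R \cap T|}{|T|} = \frac{7}{10} = 0.7
```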

Memory Aids

Rhymes

For precision and recall, like a seesaw; one needs the right, the other all in sight.

Stories

Imagine a librarian who recommends books. Precision is the share of the books she recommends that her readers actually enjoy, while recall is the share of the readers' favorites in the whole collection that she managed to suggest.

Memory Tools

Please Reference A-B Testing: Precision, Recall, and Dwell Time.

Acronyms

PARE

- P: Precision

- A: A/B testing

- R: Recall

- E: Engagement time

Glossary

- Precision

The ratio of true positive recommendations to the total number of recommended items.

- Recall

The ratio of true positive recommendations to the total number of relevant items.

- F1-Score

The harmonic mean of precision and recall, providing a single metric for performance evaluation.

- Mean Absolute Error (MAE)

The average of the absolute errors between predicted ratings and actual ratings.

- Root Mean Squared Error (RMSE)

The square root of the average squared difference between predicted and actual values; it penalizes large errors more heavily than MAE.

- AUC-ROC

Area Under the Receiver Operating Characteristic curve, indicating the model's ability to distinguish between classes.

- Mean Reciprocal Rank (MRR)

The average, across queries or users, of the reciprocal rank (1/rank) of the first relevant recommendation.

- Click Through Rate (CTR)

The ratio of users who clicked on a recommendation to those who viewed it.

- Conversion Rate

The percentage of clicks that result in a desired action, such as making a purchase.

- Dwell Time

The total time users spend engaging with recommended content.
