Edge and Fog Computing in IoT

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Edge Computing

Today, we will explore edge computing, which is all about processing data right at or near the source where it's generated. Can anyone explain what they think edge computing means?

Is it about doing computations on devices like sensors instead of sending everything to the cloud?

Excellent! Edge computing allows for local decision-making, thereby reducing latency and saving bandwidth. It’s crucial for applications requiring fast responses. Remember the acronym 'LBR'—Latency, Bandwidth, Reliability, which encapsulates its core benefits.

Can you give me an example of where edge computing is used?

Of course! Think of a smart camera that can detect intruders locally instead of sending video streams to the cloud. This minimizes the amount of data transmitted.

So, it's like making decisions on the spot, right?

Exactly! Edge devices provide immediate reactions by filtering and processing data on-the-go. To summarize, edge computing enhances efficiency in the IoT by minimizing delays. Let's remember: LBR helps us recall its primary advantages.

Understanding Fog Computing

Next, let’s talk about fog computing, which serves as an intermediary between edge devices and cloud computing. Who can tell me the role of fog computing?

Does it help in processing data before it goes to the cloud?

Exactly! Fog computing performs additional processing on aggregated data, improves coordination, and provides networking services that enhance overall performance. Think of it as the 'middle layer.'

How does this architecture look in a typical setup?

Great question! In a typical architecture, we have the Edge Layer with devices like sensors, the Fog Layer that manages local servers or gateways for preliminary processing, and finally, the Cloud Layer for deep analytics. Keep in mind the phrase 'Three Layers for Efficiency'—it highlights the structure.

So, fog computing acts as a buffer and enhances communication?

Precisely! This layered approach is vital for responsive and scalable IoT systems. Remember the three layers: Edge, Fog, and Cloud, for a clearer perspective on their roles.

Applications of Edge and Fog Computing

Now that we understand edge and fog computing, let’s dive into some real-world applications! Can anyone name a scenario where this technology would be essential?

In smart cities, traffic lights could adjust in real-time based on local vehicle data.

Spot on! Smart cities utilize immediate data processing for efficiency. What about healthcare?

Wearables can monitor health metrics and alert medical systems without lag.

Exactly! Here, edge computing ensures that critical health alerts happen without delay. These applications demonstrate the importance of reduced latency in time-sensitive operations.

What about industry? How does this work there?

Great question! In industrial automation, machines can shut down instantly upon detecting faults. This capability is a prime example of how edge and fog computing enhance safety and efficiency. To recap, smart cities, healthcare, and industrial automation demonstrate the versatility of these technologies across various sectors.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

As the IoT ecosystem expands, edge and fog computing serve as key solutions that reduce latency and bandwidth consumption and improve responsiveness by processing data closer to its source. This section covers concepts, architectures, use cases, and the significance of these paradigms in enhancing the reliability and efficiency of IoT systems.

Detailed

Edge and Fog Computing in IoT

The vast expansion of connected devices in the Internet of Things (IoT) has resulted in an overwhelming amount of data being generated. Traditional cloud computing models face challenges including high latency, significant bandwidth consumption, and limited responsiveness. To mitigate these issues, edge computing and fog computing emerge as critical solutions, enabling data processing closer to the data source.

Key Concepts:

- Edge Computing: Processes data locally at or near the source, such as on sensor nodes or gateways, allowing for faster decision-making and reduced network traffic.

- Fog Computing: Acts as a bridge between edge devices and the cloud, utilizing distributed nodes to offer processing, storage, and networking services.

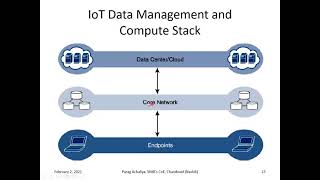

Architecture consists of three layers:

1. Edge Layer: Hosts IoT devices like sensors and embedded systems.

2. Fog Layer: Contains gateways or local servers that provide intermediate data processing and analytics.

3. Cloud Layer: Engages in extensive analytics and centralized management.

Use Cases include smart cities, healthcare monitoring systems, industrial automation, and intelligent retail applications, each benefitting from the reduced latency and improved reliability these paradigms offer.

Edge AI, which runs machine learning models directly on edge devices, enhances real-time capabilities through functions like anomaly detection and image recognition, enabling immediate responses without needing to communicate with the cloud. A solid understanding of edge and fog computing is pivotal for creating responsive, intelligent IoT ecosystems.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to Edge and Fog Computing

Chapter 1 of 8

Chapter Content

The exponential growth of connected devices in the Internet of Things (IoT) ecosystem has created massive volumes of data. Traditional cloud-centric architectures face challenges like latency, bandwidth consumption, and limited responsiveness. To overcome these issues, edge and fog computing have emerged as vital paradigms that extend computing and analytics closer to the data source.

Detailed Explanation

As more devices become connected in the IoT ecosystem, they generate a significant amount of data. Traditional computing models, which mainly rely on cloud servers for data processing, struggle with delays while data travels to and from the cloud (latency), heavy use of network bandwidth, and slow responsiveness. Edge and fog computing address these challenges by processing data closer to where it is generated. This leads to more efficient data management and quicker responses.
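
To make the bandwidth point concrete, here is a rough back-of-the-envelope sketch. It compares uploading every raw reading with uploading a periodic summary computed at the edge. All figures (sample rate, payload sizes, summary interval) are hypothetical and chosen only to show the arithmetic.

```python
# Hypothetical numbers, chosen only to illustrate the arithmetic.
SAMPLE_RATE_HZ = 100        # readings per second from one sensor
BYTES_PER_READING = 16      # payload size of one raw reading
SUMMARY_INTERVAL_S = 10     # edge node sends one summary every 10 s
BYTES_PER_SUMMARY = 64      # e.g. min/max/mean plus a timestamp


def raw_bytes_per_hour(sample_rate_hz: int, bytes_per_reading: int) -> int:
    """Bytes sent per hour if every raw reading goes to the cloud."""
    return sample_rate_hz * bytes_per_reading * 3600


def summary_bytes_per_hour(interval_s: int, bytes_per_summary: int) -> int:
    """Bytes sent per hour if the edge node sends periodic summaries only."""
    return (3600 // interval_s) * bytes_per_summary


raw = raw_bytes_per_hour(SAMPLE_RATE_HZ, BYTES_PER_READING)
summarized = summary_bytes_per_hour(SUMMARY_INTERVAL_S, BYTES_PER_SUMMARY)
reduction = 1 - summarized / raw

print(f"raw upload:        {raw} bytes/hour")
print(f"summarized upload: {summarized} bytes/hour")
print(f"reduction:         {reduction:.1%}")
```

With these assumed values the raw stream is 5,760,000 bytes per hour and the summarized stream is 23,040 bytes per hour. The actual saving in a real deployment depends on how much detail the cloud still needs.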

Examples & Analogies

Think of a fast-food restaurant with a huge kitchen (the cloud) that processes all orders from across town. If a customer places an order, sending it to the kitchen could take time, leading to delays. Instead, if there is a small prep area (edge) right near the dining area where some orders can be processed immediately, customers get their meals faster, reducing wait times and kitchen workload.

Concepts of Edge and Fog Computing

Chapter 2 of 8

Chapter Content

Edge Computing refers to processing data at or near the location where it is generated, such as on a sensor node, embedded system, or gateway device. Instead of sending all raw data to the cloud for processing, edge computing enables local decision-making, minimizing latency and reducing network traffic. Fog Computing is a more distributed model that sits between the edge and the cloud. It involves intermediate nodes—such as routers, gateways, or micro data centers—that offer additional processing, storage, and networking services.

Detailed Explanation

Edge computing is about handling data right at the source, like a device that processes information locally rather than sending everything to the cloud for analysis. This means decisions can be made much faster, as there's no need to wait for data to travel to the cloud and back. On the other hand, fog computing acts as a middle layer that helps manage and process data from multiple edge devices, providing additional capabilities like storage and analytics that are closer to these devices but not quite at the edge.
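
The split described above can be sketched in a few lines. In this minimal illustration, an edge node decides locally whether a reading needs action, and a fog node combines compact summaries from several edge nodes. The class names, the threshold, and the summary fields are assumptions made for the example, not part of any particular platform.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeNode:
    """A device that decides locally and keeps only a small summary."""
    name: str
    threshold: float                      # hypothetical alarm threshold
    readings: list = field(default_factory=list)

    def ingest(self, value: float) -> bool:
        """Store the reading and return True if it needs immediate action."""
        self.readings.append(value)
        return value > self.threshold     # decided on the device, no round trip

    def summary(self) -> dict:
        """Compact summary to pass upstream instead of the raw readings."""
        return {"node": self.name, "count": len(self.readings),
                "mean": mean(self.readings), "max": max(self.readings)}


class FogNode:
    """An intermediate node that combines summaries from several edge nodes."""

    def __init__(self) -> None:
        self.summaries = []

    def collect(self, edge: EdgeNode) -> None:
        self.summaries.append(edge.summary())

    def site_report(self) -> dict:
        """Aggregate view across all edge nodes collected so far."""
        total = sum(s["count"] for s in self.summaries)
        weighted = sum(s["mean"] * s["count"] for s in self.summaries) / total
        return {"nodes": len(self.summaries), "readings": total,
                "mean": weighted, "max": max(s["max"] for s in self.summaries)}


a = EdgeNode("sensor-a", threshold=30.0)
b = EdgeNode("sensor-b", threshold=30.0)
alerts_a = [a.ingest(v) for v in (21.0, 22.0, 35.0)]
alerts_b = [b.ingest(v) for v in (20.0, 24.0)]

fog = FogNode()
fog.collect(a)
fog.collect(b)
report = fog.site_report()
print(alerts_a, report)
```

The edge node reacts to the single reading above its threshold without waiting for anyone else, while the fog node sees both devices at once and can report on the site as a whole.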

Examples & Analogies

Imagine a smart thermostat (edge computing) that learns your routine and adjusts the temperature automatically without needing to ask remote servers what to do. However, as more devices work together, like in a smart home, a hub (fog computing) can analyze data from multiple devices to make better overall decisions—like lowering the temperature based on how many people are home.

Comparison: Edge, Fog, and Cloud Computing

Chapter 3 of 8

Chapter Content

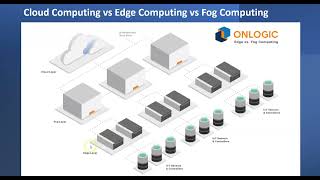

Comparison:

- Edge Computing: Operates directly at data source (e.g., sensor or device)

- Fog Computing: Operates at a layer between edge and cloud (e.g., gateway)

- Cloud Computing: Centralized processing at data centers

Detailed Explanation

This section highlights key differences among edge, fog, and cloud computing. Edge computing focuses on immediate data processing where the data is generated. Fog computing provides a supportive layer between edge computing and centralized cloud processing, helping to manage data from various edges. In contrast, cloud computing centralizes data processing in large data centers, which can lead to latency challenges due to the distance data must travel.

Examples & Analogies

Consider a restaurant scenario: Edge computing is like a server taking orders and serving food directly to diners; fog computing is like a kitchen helper who takes multiple orders from several servers and organizes the cooking process. Cloud computing is like a central kitchen that must prepare all dishes from scratch, which takes longer because every order has to be processed there.

Edge AI and Real-time Data Processing

Chapter 4 of 8

Chapter Content

Edge AI is the deployment of machine learning models on edge devices to perform intelligent tasks like image recognition, anomaly detection, or voice processing in real time.

Benefits of Edge AI:

- Reduced latency: Immediate response without cloud round trips

- Bandwidth savings: Only important or summarized data is sent to the cloud

- Privacy and security: Sensitive data remains on the device

- Offline functionality: AI can operate without internet connectivity

Detailed Explanation

Edge AI allows smart devices to handle complex tasks using machine learning directly on the device instead of relying on cloud processing. This reduces delays in responding to situations. For example, instead of sending video footage to the cloud for analysis, a smart camera can analyze the footage locally and only send alerts if it detects something unusual. This ensures that data is processed faster, saves internet bandwidth, and keeps private information secure.
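
The "analyze locally, send only alerts" pattern can be sketched as follows. To keep the example self-contained, a simple rolling z-score stands in for a trained machine learning model; a real Edge AI deployment would run model inference at that point instead. The class name, window size, and threshold are assumptions made for illustration.

```python
from collections import deque
from statistics import mean, pstdev


class EdgeAnomalyDetector:
    """Flags readings that deviate strongly from the recent local history.

    A rolling z-score stands in for a trained model here; a real Edge AI
    deployment would run model inference at this point instead.
    """

    def __init__(self, window: int = 20, z_threshold: float = 3.0) -> None:
        self.history = deque(maxlen=window)   # recent readings kept on-device
        self.z_threshold = z_threshold

    def is_anomaly(self, value: float) -> bool:
        """Score the value against the window, then add it to the window."""
        anomalous = False
        if len(self.history) >= 5:            # need some history first
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self.z_threshold
        self.history.append(value)
        return anomalous


def run_on_device(stream, detector):
    """Return only the alerts that would be sent to the cloud."""
    return [{"index": i, "value": v}
            for i, v in enumerate(stream) if detector.is_anomaly(v)]


stream = [10.0, 10.2, 9.9, 10.1, 10.0, 10.3, 9.8, 25.0, 10.1, 10.0]
alerts = run_on_device(stream, EdgeAnomalyDetector())
print(alerts)
```

Of the ten readings, only the spike at index 7 produces an alert, so a single small message leaves the device instead of the full stream.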

Examples & Analogies

Think of a smart speaker that can recognize your voice commands without needing to check with remote servers every time. When you ask it to set a timer, it quickly processes your request locally and responds instantly, just like having a personal assistant who is right next to you.

Architecture, Use Cases, and Deployment Models

Chapter 5 of 8

Chapter Content

A typical architecture that includes edge and fog layers looks like this:

1. Edge Layer: IoT devices (sensors, actuators, embedded systems) with local compute capabilities.

2. Fog Layer: Gateways or local servers that process aggregated data and make intermediate decisions.

3. Cloud Layer: Performs deeper analytics, long-term storage, and centralized management.

Each layer has its own role:

- Edge: Immediate reaction and filtering

- Fog: Coordination and intermediate analytics

- Cloud: Complex computation and data archiving

Detailed Explanation

This architecture showcases how different layers of computing work together in an IoT system. The edge layer contains devices that can react and filter data instantly. The fog layer acts as an intermediary, processing and analyzing data that comes from these devices. Lastly, the cloud layer takes over for more complex data analytics and long-term storage. This setup enables efficient data processing and management by leveraging the strengths of each layer.
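
One way to picture the three roles is as a pipeline in which each layer reduces the volume of data it passes on. The sketch below is illustrative only: the deadband filter at the edge, the windowed average at the fog layer, and the `CloudArchive` class are assumptions chosen to give each layer a concrete job, not a prescribed design.

```python
def edge_filter(readings, deadband):
    """Edge: forward a reading only if it moved more than `deadband`
    from the last forwarded value (immediate filtering on the device)."""
    forwarded = []
    last = None
    for value in readings:
        if last is None or abs(value - last) > deadband:
            forwarded.append(value)
            last = value
    return forwarded


def fog_aggregate(values, window):
    """Fog: reduce forwarded values to one average per window."""
    chunks = [values[i:i + window] for i in range(0, len(values), window)]
    return [sum(chunk) / len(chunk) for chunk in chunks]


class CloudArchive:
    """Cloud: long-term storage plus analysis over everything received."""

    def __init__(self):
        self.records = []

    def store(self, aggregates):
        self.records.extend(aggregates)

    def overall_mean(self):
        return sum(self.records) / len(self.records)


raw = [20.0, 20.1, 20.1, 21.0, 21.1, 23.0, 23.1, 23.0, 25.0, 25.1, 20.0, 20.1]
at_fog = edge_filter(raw, deadband=0.5)      # what the edge forwards
at_cloud = fog_aggregate(at_fog, window=2)   # what the fog forwards
archive = CloudArchive()
archive.store(at_cloud)
print(len(raw), len(at_fog), len(at_cloud))  # prints "12 5 3"
```

Twelve raw readings become five forwarded values and then three aggregates, which shows how filtering at the edge and summarizing in the fog layer leave the cloud with far less to store and analyze.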

Examples & Analogies

Imagine a relay race: the edge layer is the sprinter who runs fast and passes the baton quickly. The fog layer is like the next runner who supports the sprinter by ensuring the baton is securely in hand and ready to run as well. The cloud layer is the last runner who analyzes the race overall and decides how to improve future races based on the collected data from the entire relay.

Use Cases of Edge and Fog Computing

Chapter 6 of 8

Chapter Content

Use Cases:

- Smart Cities: Traffic lights adjust dynamically using local vehicle data

- Healthcare: Wearables monitor vitals and alert nearby medical systems

- Industrial Automation: Machines shut down instantly on detecting faults

- Retail: In-store devices process customer interactions to offer promotions

Detailed Explanation

Edge and fog computing have numerous applications across various sectors. In smart cities, traffic management can optimize traffic flows in real time based on vehicle data without delays from cloud processing. In healthcare, wearables can instantly monitor health metrics and notify medical personnel. Manufacturing industries can prevent accidents by immediately shutting down malfunctioning machines, while retail environments can enhance customer experience by personalizing promotions based on real-time customer interactions.
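
As an illustration of the industrial case, the sketch below shows an edge-side guard that stops a machine locally once its vibration readings stay above a limit. The `MachineGuard` class, the limit, and the rule of requiring several consecutive bad readings (to avoid tripping on a single noisy sample) are all assumptions for the example; a real safety system would follow the relevant safety standards.

```python
class MachineGuard:
    """Edge-side safety check: stop the machine after repeated bad readings."""

    def __init__(self, limit: float, consecutive: int = 3) -> None:
        self.limit = limit                # hypothetical vibration limit
        self.consecutive = consecutive    # bad readings needed to trip
        self.bad_streak = 0
        self.running = True

    def on_reading(self, vibration: float) -> bool:
        """Process one reading; return True while the machine keeps running."""
        if not self.running:
            return False
        self.bad_streak = self.bad_streak + 1 if vibration > self.limit else 0
        if self.bad_streak >= self.consecutive:
            self.running = False          # local shutdown, no cloud round trip
        return self.running


guard = MachineGuard(limit=5.0, consecutive=3)
states = [guard.on_reading(v) for v in (1.2, 6.0, 1.1, 6.1, 6.3, 6.5, 1.0)]
print(states)
```

The isolated spike at the second reading does not stop the machine, but the three consecutive high readings do, and the decision is made entirely on the device.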

Examples & Analogies

Imagine walking into a grocery store where the shelves automatically adjust their prices based on how many items are in the cart of each customer. This is possible with edge devices collecting data on the customer interactions in real time, showcasing how effective immediate data processing can enhance customer service and operational efficiency.

Deployment Models: Edge and Fog

Chapter 7 of 8

Chapter Content

Deployment Models:

- On-device AI/ML: Model inference runs on microcontrollers or NPUs

- Gateway-centric Processing: Gateways collect and analyze data from multiple sensors

- Hybrid Models: Combine edge, fog, and cloud for layered intelligence

Detailed Explanation

Different deployment models for edge and fog computing include on-device AI that operates on individual devices, gateway-centric processing which integrates data from various sensors for analysis, and hybrid models that merge edge, fog, and cloud computing. These approaches allow organizations to leverage the best of each computing style to maximize performance and effectiveness based on specific needs and applications.
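
A hybrid model needs some rule for deciding where a given task runs. The sketch below shows one possible rule: pick the tier closest to the data that both fits the model in memory and meets the latency budget. The `Tier` type, the `place` function, and every figure in `TIERS` are hypothetical; real values depend on the hardware and network involved.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float   # assumed typical response time
    memory_mb: float       # assumed memory available for a model


# Illustrative figures only; real values depend on hardware and network.
TIERS = (
    Tier("on-device", round_trip_ms=5, memory_mb=1),
    Tier("gateway", round_trip_ms=30, memory_mb=512),
    Tier("cloud", round_trip_ms=200, memory_mb=65536),
)


def place(latency_budget_ms: float, model_mb: float) -> Optional[str]:
    """Return the closest tier that fits the model and meets the budget."""
    for tier in TIERS:   # ordered from nearest to farthest from the data
        fits = tier.memory_mb >= model_mb
        fast_enough = tier.round_trip_ms <= latency_budget_ms
        if fits and fast_enough:
            return tier.name
    return None          # no tier satisfies both constraints


print(place(10, 0.5))      # small model, tight deadline
print(place(50, 100))      # medium model, moderate deadline
print(place(1000, 4096))   # large model, relaxed deadline
print(place(10, 100))      # too large for the device, too urgent for the rest
```

Under these assumptions the four calls resolve to the device, the gateway, the cloud, and no tier at all. The last case is the useful one to notice: when nothing fits, either the model has to shrink or the latency requirement has to relax.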

Examples & Analogies

Think of a suite of tools in a toolbox. An on-device model is like having a screwdriver in hand for quick fixes; gateway processing is like having a multi-tool that combines several functions—ideal for medium tasks. Hybrid models are akin to having both at your disposal, allowing you to choose the right approach based on the task, whether it’s simple repairs or larger projects.

Conclusion on Edge and Fog Computing

Chapter 8 of 8

Chapter Content

Edge and fog computing are critical for building responsive, scalable, and intelligent IoT systems. By pushing computation closer to the source of data, they improve latency, enhance reliability, and reduce cloud dependency. These paradigms are especially vital for real-time applications across industries like manufacturing, healthcare, transportation, and smart infrastructure.

Detailed Explanation

In summary, edge and fog computing enhance the effectiveness and efficiency of IoT systems by bringing computation closer to the data source. This minimizes delays, increases reliability, and lessens the demand on cloud resources. As industries continue to rely on real-time data, these computing paradigms will be essential for various applications and sectors.

Examples & Analogies

Picture a high-speed highway system that allows cars to quickly adjust speeds based on real-time traffic data. The cars (edge devices) receive instantaneous updates about traffic conditions, while toll booths (fog nodes) can make immediate decisions, all without needing to check with a distant control center (cloud). This illustrates how imperative edge and fog computing are in our increasingly connected world.

Key Concepts

- Edge Computing: Processes data at the source.

- Fog Computing: Intermediate model between edge and cloud.

- Real-time Data Processing: Critical for applications requiring immediate response.

- Edge AI: Local processing of AI tasks for increased efficiency.

Examples & Applications

A smart surveillance camera that detects suspicious activity locally instead of streaming video to the cloud.

Traffic management systems in smart cities adjusting lights based on real-time vehicle counts.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

At the edge, data flows right, quick responses are in sight!

Stories

Imagine a world where every smart device talks intelligently to each other, filtering crucial information locally, much like a vigilant guardian ensuring safety without needing to call for help every time.

Memory Tools

Remember 'CLOE' - Cloud, Layer, Edge, and Operations—describing the computing landscape.

Acronyms

LBR: Latency, Bandwidth, Reliability, the key benefits of edge computing.

Glossary

- Edge Computing

A computing paradigm that processes data closer to the source of its generation.

- Fog Computing

A decentralized computing model that extends cloud services to the edge of the network.

- Latency

The delay before a transfer of data begins following an instruction.

- Bandwidth

The maximum rate of data transfer across a network.

- Cloud Computing

On-demand availability of computer system resources, primarily data storage and processing power.

- Edge AI

The implementation of artificial intelligence at the edge of the network, processing data locally.

- Real-time Data Processing

The execution of data processing tasks at the moment the data is created.

- Deployment Models

Frameworks defining how edge and fog computing resources are utilized.
