Multithreading and Concurrency

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Multithreading

Today, we’re going to explore multithreading. Can anyone tell me what multithreading means?

Is it when a program runs multiple threads at the same time?

Exactly! Multithreading is when multiple threads are executed simultaneously within a process. This improves resource utilization and performance. Can anyone think of a situation where multithreading might be useful?

Maybe in a web server that handles multiple requests?

Great example! This way, the server can serve many users at once without waiting for one operation to finish.

Understanding Concurrency

Now let’s discuss concurrency. Who can define it for us?

Is it when two or more tasks are being processed simultaneously?

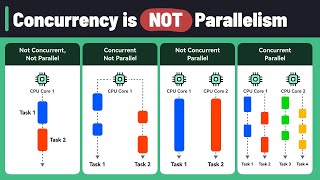

Close! Concurrency means that multiple sequences of operations make progress during overlapping time periods; they may be interleaved on a single core rather than running at the exact same instant. How does this relate to multithreading?

Concurrency is a broader term that includes multithreading?

Exactly! All multithreading is concurrency, but not all concurrency is multithreading. Keep this in mind!
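To make the distinction concrete, here is a minimal Python sketch (Python is our choice for illustration; the lesson does not name a language). It uses the standard `asyncio` module to interleave two tasks on a single thread, which is one form of concurrency that is not multithreading. The names `step` and `run_both` are hypothetical.

```python
import asyncio
import threading


async def step(name: str, log: list, thread_ids: set) -> None:
    """Record which thread runs this task and when it starts and ends."""
    thread_ids.add(threading.get_ident())
    log.append(f"{name} start")
    await asyncio.sleep(0)  # yield control so the other task can run
    log.append(f"{name} end")


async def run_both():
    log, thread_ids = [], set()
    # gather() runs both coroutines concurrently on the same event loop.
    await asyncio.gather(step("A", log, thread_ids), step("B", log, thread_ids))
    return log, thread_ids


log, thread_ids = asyncio.run(run_both())
print(log)
print(len(thread_ids), "thread(s) used")
```

Both tasks start before either one finishes, yet only one thread is ever involved: the tasks overlap in time without running in parallel.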

Thread Synchronization

Now, let's talk about synchronization. Why do we need synchronization when multiple threads are running?

To prevent them from accessing the same data at the same time?

Correct! When multiple threads access shared resources, race conditions can occur, where the output depends on the sequence of thread execution. What tools can we use for synchronization?

Mutexes and semaphores?

Right! A mutex allows only one thread at a time to access a resource, while a semaphore allows up to a fixed number of threads to access it at once. Remember MS, for **M**utex and **S**emaphore, the two basic tools for synchronization in multithreading!
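The difference between the two tools can be sketched in Python, assuming the standard `threading` module. `threading.Lock` plays the role of the mutex and `threading.Semaphore` limits how many threads are inside a section at once. The function names `locked_count` and `max_concurrent` and their parameters are hypothetical.

```python
import threading
import time


def locked_count(num_threads: int = 4, increments: int = 10_000) -> int:
    """Increment a shared counter from several threads, guarded by a mutex."""
    count = 0
    lock = threading.Lock()

    def worker() -> None:
        nonlocal count
        for _ in range(increments):
            with lock:  # only one thread may update count at a time
                count += 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return count


def max_concurrent(num_threads: int = 6, permits: int = 2) -> int:
    """Return the peak number of threads inside a semaphore-guarded section."""
    sem = threading.Semaphore(permits)
    guard = threading.Lock()  # protects the bookkeeping counters below
    active = 0
    peak = 0

    def worker() -> None:
        nonlocal active, peak
        with sem:  # at most `permits` threads get past this point
            with guard:
                active += 1
                peak = max(peak, active)
            time.sleep(0.01)  # simulate work on the limited resource
            with guard:
                active -= 1

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return peak
```

With the lock in place, `locked_count(4, 10_000)` always returns 40000, and `max_concurrent(6, 2)` never exceeds 2.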

Deadlocks and Race Conditions

Next, let's discuss deadlocks and race conditions. Who has heard of these terms before?

I've heard of deadlocks as programs getting stuck, right?

Exactly! A deadlock occurs when two or more threads are waiting for each other to release resources. Can you think of how to avoid this?

We could use a timeout or order resource acquisition?

Great strategies! Understanding these issues is crucial for building robust applications.
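Both strategies from the conversation can be sketched in Python with the standard `threading` module. The helper names `with_both` and `try_lock` are hypothetical, and ordering locks by `id()` is just one illustrative way to impose a consistent order.

```python
import threading


def with_both(lock_a, lock_b, action) -> None:
    """Take two locks in one fixed global order, then run action()."""
    # Sorting by id() is an illustrative choice; any total order that
    # every thread agrees on prevents the circular wait behind a deadlock.
    first, second = sorted((lock_a, lock_b), key=id)
    with first:
        with second:
            action()


def try_lock(lock, timeout_s: float, action=lambda: None) -> bool:
    """Give up instead of waiting forever if the lock stays busy."""
    if not lock.acquire(timeout=timeout_s):
        return False  # the caller can back off and retry later
    try:
        action()
        return True
    finally:
        lock.release()
```

Two threads that call `with_both(a, b, ...)` and `with_both(b, a, ...)` end up acquiring the locks in the same order, so neither can hold one lock while waiting for the other.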

Thread Pools and Parallel Computing

Finally, let's touch on thread pools. What do you think a thread pool is?

Is it a collection of pre-initialized threads?

Precisely! Thread pools manage a set number of threads to handle multiple tasks without the overhead of constantly creating new ones. Why is this efficient?

It saves time and resources, right?

Exactly! Pooling is an excellent strategy for improving performance and resource utilization in applications.
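As a rough illustration, Python's standard `concurrent.futures.ThreadPoolExecutor` provides a ready-made thread pool. In the sketch below, `handle_request` and `serve` are hypothetical names standing in for real request-handling code.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id: int):
    """Pretend to serve a request; report which pooled thread handled it."""
    return request_id, threading.current_thread().name


def serve(num_requests: int = 8, pool_size: int = 3):
    # The pool creates at most pool_size threads and reuses them for all tasks.
    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        # map() returns results in the order the tasks were submitted.
        return list(pool.map(handle_request, range(num_requests)))


for request_id, thread_name in serve():
    print(request_id, thread_name)
```

Eight requests are served, but no more than three distinct thread names appear in the output, because finished threads pick up the next task instead of being destroyed.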

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

In this section, we delve into the principles of multithreading and concurrency, discussing key concepts such as thread life cycles, synchronization mechanisms, potential issues like deadlocks and race conditions, and advanced methods like thread pools to enhance performance and manage complexity in modern software applications.

Detailed

Multithreading and Concurrency

Multithreading refers to the ability to run multiple threads, or smaller units of a process, simultaneously, which significantly improves resource utilization and boosts application performance. On the other hand, concurrency is the execution of multiple sequences at the same time, which requires careful management to ensure thread synchronization and data consistency. This section covers essential topics including:

- Thread Life Cycle: Understanding how threads are created, executed, and destroyed.

- Synchronization: Mechanisms such as mutexes and semaphores that are used to control thread access to shared resources to avoid conflicts.

- Deadlocks and Race Conditions: Problems that arise from improper synchronization, leading to frozen states or incorrect data outcomes.

- Thread Pools and Parallel Computing: Techniques that enhance performance by reusing existing threads rather than constantly creating new ones.

The significance of mastering these concepts cannot be overstated, as they form the backbone of effective modern application development and enable programmers to write more efficient, scalable, and responsive software.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to Multithreading

Chapter 1 of 3

Chapter Content

Multithreading

• Running multiple threads (smaller units of process) simultaneously.

• Improves resource utilization and performance.

Detailed Explanation

Multithreading refers to the ability of a CPU, or a single core in a multi-core processor, to provide multiple threads of execution concurrently. Essentially, it involves splitting a process into smaller threads that can run simultaneously. This approach leverages the capabilities of modern processors, which can handle multiple tasks at once, leading to better resource utilization and improved performance. In a multithreaded environment, each thread executes its code independently, sharing the same resources but having its separate execution path.
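The idea of threads sharing resources while following separate execution paths can be sketched in Python with the standard `threading` module. The name `run_workers` is hypothetical. Note that in standard CPython builds, the global interpreter lock means threads interleave Python bytecode rather than execute it truly in parallel, so this shows the structure of a multithreaded program rather than a speedup.

```python
import threading


def run_workers(num_threads: int = 4) -> list:
    """Start several threads that each fill one slot of a shared list."""
    results = [0] * num_threads  # shared memory, visible to every thread

    def worker(index: int) -> None:
        # Each thread runs this function independently, on its own stack,
        # but writes into the same list object.
        results[index] = index * index

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish
    return results


print(run_workers())  # prints [0, 1, 4, 9]
```

Each thread writes to a different index, so no synchronization is needed here; threads updating the same value would need a lock.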

Examples & Analogies

Imagine a restaurant with multiple chefs preparing different dishes simultaneously. Each chef represents a thread, working independently but within the shared kitchen (resources). This setup allows the restaurant to serve more customers quickly compared to just one chef working alone.

Understanding Concurrency

Chapter 2 of 3

Chapter Content

Concurrency

• Execution of multiple sequences at the same time.

• Involves managing thread synchronization and data consistency.

Detailed Explanation

Concurrency refers to the ability of a system to make progress on multiple sequences of operations during the same period of time. It is an essential concept in programming when dealing with multithreading. In a concurrent environment, multiple threads carry out different tasks whose execution overlaps in time. This requires careful synchronization to avoid conflicts and keep data consistent: if multiple threads read and write the same data at the same time without proper control, the result can be unpredictable values or data corruption.

Examples & Analogies

Think of a banking system where several customers can make transactions at the same time. If two customers try to withdraw money from the same account simultaneously, the bank must ensure that both transactions are processed correctly, maintaining the correct balance. This requires a synchronization mechanism, much like a bank teller who ensures only one customer accesses their account at a time.
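The banking analogy can be sketched as a small Python class, assuming the standard `threading` module. The `Account` class and its `withdraw` method are hypothetical and exist only to illustrate the idea. The lock plays the role of the teller: the balance check and the update happen as one uninterrupted step.

```python
import threading


class Account:
    """A bank account whose balance is protected by a lock."""

    def __init__(self, balance: int) -> None:
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount: int) -> bool:
        # Without the lock, two threads could both pass the check below
        # before either subtracts, overdrawing the account.
        with self._lock:
            if self.balance >= amount:
                self.balance -= amount
                return True
            return False
```

If two customers each try to withdraw 80 from a balance of 100 at the same time, exactly one withdrawal succeeds and the balance ends at 20.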

Key Concepts of Multithreading

Chapter 3 of 3

Chapter Content

Key Concepts

• Thread life cycle.

• Synchronization (mutex, semaphore).

• Deadlocks and race conditions.

• Thread pools and parallel computing.

Detailed Explanation

This section covers essential concepts associated with multithreading:

1. Thread life cycle: This describes the various states a thread can be in, such as new, runnable, blocked, or terminated, indicating its status during execution.

2. Synchronization: This involves mechanisms like mutexes (mutual exclusions) and semaphores to control access to shared resources, preventing conflicts among threads.

3. Deadlocks: This situation occurs when two or more threads are waiting on each other to release resources, resulting in a standstill where no thread can proceed.

4. Race conditions: This happens when the outcome of a process depends on the sequence or timing of uncontrollable events, leading to inconsistent results.

5. Thread pools: These are collections of pre-initialized threads that can be reused for executing tasks, promoting efficient resource utilization and easing system load.

6. Parallel computing: This technique divides a discrete problem into smaller tasks performed simultaneously, leveraging the full potential of processor resources.
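The thread life cycle from the first item can be observed in a small Python sketch using the standard `threading` module. Python only exposes `is_alive()` rather than named states, so the mapping to new, blocked, and terminated in the comments is our interpretation; `observe_life_cycle` is a hypothetical name.

```python
import threading


def observe_life_cycle() -> list:
    """Return is_alive() before start, while blocked, and after termination."""
    gate = threading.Event()
    t = threading.Thread(target=gate.wait)  # the thread will block on the event

    states = [t.is_alive()]      # new: created but not yet started
    t.start()
    states.append(t.is_alive())  # started: now blocked, waiting on gate
    gate.set()                   # unblock the thread so it can finish
    t.join()
    states.append(t.is_alive())  # terminated
    return states


print(observe_life_cycle())  # prints [False, True, False]
```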

Examples & Analogies

Consider a factory floor where each worker is a thread. A worker's shift mirrors the thread life cycle: arriving (new), working (runnable), waiting for a tool (blocked), and clocking out (terminated). If one worker needs a tool (resource) that's currently in another worker’s hand, a mutex ensures that only one worker uses it at a time. If two workers each hold a tool the other needs and neither lets go, that's a deadlock, and the flow of production halts. By keeping a standing crew of workers (thread pool) who pick up the next job as soon as they finish the last, the factory keeps work moving without hiring someone new for every job.

Key Concepts

- Multithreading: Running multiple threads simultaneously to improve performance.

- Concurrency: Executing multiple sequences at the same time, which includes multithreading.

- Thread Life Cycle: The phases of a thread's existence, from creation to destruction.

- Synchronization: Mechanisms used to manage access to shared resources among threads.

- Deadlocks: A blockage situation caused by competing threads awaiting each other.

- Race Conditions: Errors that occur due to non-synchronized access to shared variables.

- Thread Pools: Reusable collections of threads for efficient task management.

- Parallel Computing: Running multiple computations simultaneously to enhance performance.

Examples & Applications

A web server handles multiple simultaneous requests using multithreading to serve users efficiently.

In a video game, multiple threads might handle different game elements like player input, graphics rendering, and physics calculations simultaneously, improving responsiveness.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

In threading we divide and conquer, resources we adjust; keep them in check, for performance is a must!

Stories

Imagine a busy restaurant: chefs (threads) cook dishes (tasks) at the same time. Each chef uses shared ingredients (resources). If two chefs want the same pot, chaos ensues. They must synchronize like a dance to prevent conflict!

Memory Tools

Remember MDS (Mutex, Deadlock, Synchronization) to stay aware of key multithreading concepts.

Acronyms

Use TSP (Thread life cycle, Synchronization, Parallel computing) to recall essential aspects of multithreading.

Glossary

- Multithreading

A programming concept that allows multiple threads to be executed simultaneously, improving application performance.

- Concurrency

The ability to execute multiple sequences of operations simultaneously, often through multithreading.

- Thread Life Cycle

The lifecycle phases of a thread, including creation, execution, and termination.

- Synchronization

The coordination of concurrent threads to ensure thread safety, typically using tools like mutexes and semaphores.

- Deadlocks

A situation in which two or more threads are unable to proceed because each is waiting for the other to release resources.

- Race Condition

A flaw that occurs when the timing of actions impacts the correctness of a program, often due to unsynchronized access to shared data.

- Thread Pool

A collection of threads that can be reused to perform multiple tasks, reducing the overhead of thread creation.

- Parallel Computing

The simultaneous execution of multiple computations, typically leveraging multiple processors.
