Edge and Fog Computing

Interactive Audio Lesson


Introduction to Edge and Fog Computing


Today, we are diving into edge and fog computing. Let's start by thinking about why we need to process data closer to its source. Can anyone give me an example of when low latency is crucial?

In autonomous vehicles, every millisecond matters for decision-making.

Exactly! That’s a perfect example. Edge computing allows for this immediate processing. Now, how does fog computing relate to this?

Fog computing seems to handle more data from many connected devices instead of just one.

Right! Fog computing acts as an intermediary layer that aggregates data from multiple edge devices. It helps us manage all that information efficiently before it hits the cloud.

Key Benefits of Edge and Fog Computing


Now that we understand what edge and fog computing are, let’s talk about their benefits. Why do you think minimizing latency is a benefit?

It allows quicker responses in critical situations, like health monitoring systems.

Excellent point! Lower latency is vital not just in healthcare but also in manufacturing and entertainment. Energy efficiency is another benefit. How does processing closer to the source save energy?

If we process data locally, we reduce the amount of data sent to the cloud, so less data transmission energy is used.

Exactly! By processing data at the edge or in the fog and sending only what the cloud actually needs, we cut the energy spent on data transmission and improve overall energy efficiency.

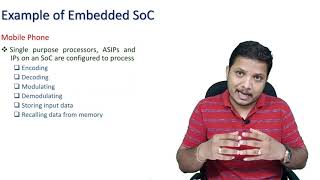

The Role of SoCs in Edge and Fog Computing


Let’s connect the dots between edge computing and SoCs. Why do you think compact, autonomous SoCs are essential for these architectures?

They need to be small and efficient to fit into various devices and handle real-time processing.

Correct! Compact SoCs facilitate efficient processing without taking up too much space or power. Can you think of an application where this is critical?

In smart sensors installed in factories that monitor production lines.

Exactly! Those devices need to operate efficiently and reliably in real-time.

Introduction & Overview


Quick Overview

Standard

This section discusses edge and fog computing architectures, which optimize computation and data handling at the network's edge to reduce latency, improve energy efficiency, and enable real-time data processing. These architectures necessitate compact, autonomous system-on-chip (SoC) designs.

Detailed

Edge and Fog Computing

Edge and fog computing move processing and data storage away from centralized data centers and closer to where data is generated. This approach matters most in applications that need low latency, energy efficiency, and real-time analytics.

- Edge Computing: This refers to processing data at or near the source of data generation (e.g., IoT devices). By moving computation closer to the data, edge computing reduces latency, improves response times, and allows for immediate decision-making. This is crucial for applications such as autonomous vehicles or smart industrial systems.

- Fog Computing: This complements edge computing by distributing data processing across multiple layers between edge devices and centralized cloud services, forming an intermediate layer (the 'fog'). Fog nodes, such as local gateways or servers, aggregate and filter data from many edge devices, so less raw data has to travel to the cloud and what remains can be processed more efficiently.
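The division of labor described above can be illustrated with a minimal Python sketch. It assumes a simple threshold rule at the edge and a count/mean/max summary at the fog layer. The class names `EdgeDevice` and `FogNode`, the threshold value, and the sample readings are hypothetical and do not come from any real framework.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class EdgeDevice:
    """Hypothetical sensor node that reacts locally, without a network round trip."""
    device_id: str
    threshold: float  # assumed alarm threshold, illustrative only

    def process(self, reading: float) -> bool:
        # Edge decision: act immediately if the reading crosses the threshold.
        return reading > self.threshold


@dataclass
class FogNode:
    """Hypothetical intermediate node that collects readings from many edge devices."""
    readings: dict[str, list[float]] = field(default_factory=dict)

    def collect(self, device_id: str, reading: float) -> None:
        self.readings.setdefault(device_id, []).append(reading)

    def summarize(self) -> dict[str, dict[str, float]]:
        # Aggregation/filtering: one compact summary per device
        # instead of forwarding every raw sample to the cloud.
        return {
            dev: {"count": len(vals), "mean": mean(vals), "max": max(vals)}
            for dev, vals in self.readings.items()
        }


if __name__ == "__main__":
    devices = [EdgeDevice("sensor-a", 80.0), EdgeDevice("sensor-b", 80.0)]
    fog = FogNode()
    samples = {"sensor-a": [70.0, 72.0, 85.0], "sensor-b": [60.0, 61.0, 62.0]}
    for dev in devices:
        for value in samples[dev.device_id]:
            if dev.process(value):
                print(f"{dev.device_id}: local alarm at {value}")
            fog.collect(dev.device_id, value)
    # The summary is what the fog layer would pass on to the cloud.
    print(fog.summarize())
```

The edge device raises its alarm without waiting for any network round trip, while the fog node forwards one summary per device instead of every raw reading. A real deployment would add networking, buffering, and fault handling, which this sketch leaves out.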

Both architectures require compact, autonomous SoCs that can perform real-time data analytics with minimal power consumption. These SoCs must meet the growing performance demands of modern applications while staying within tight size and energy budgets.


Audio Book


Overview of Edge and Fog Computing

Chapter 1 of 3


Chapter Content

● Architectures tailored for processing at the edge of the network

Detailed Explanation

Edge and fog computing are architectures designed to process data closer to its source rather than relying on a centralized data center. This means that instead of sending all data to a remote server to be analyzed, these systems analyze data at the location where it is generated, which can be on devices or local servers nearby. This approach reduces latency, or the time it takes to send data back and forth, leading to faster responses and better performance in applications.
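The latency argument can be made concrete with back-of-the-envelope arithmetic. The sketch below assumes a 10 ms on-device processing time, a 100 ms network round trip to a remote server, and a vehicle moving at 30 m/s. These numbers are illustrative assumptions, not measurements, and the helper functions are hypothetical.

```python
def response_time_ms(processing_ms: float, network_rtt_ms: float = 0.0) -> float:
    """Time from data capture to decision: compute time plus any network round trip."""
    return processing_ms + network_rtt_ms


def distance_travelled_m(speed_m_per_s: float, delay_ms: float) -> float:
    """Distance a vehicle covers while it waits for a decision."""
    return speed_m_per_s * delay_ms / 1000.0


if __name__ == "__main__":
    speed = 30.0  # assumed vehicle speed in m/s (108 km/h), illustrative only
    edge = response_time_ms(processing_ms=10.0)  # assumed on-board processing time
    cloud = response_time_ms(processing_ms=10.0, network_rtt_ms=100.0)  # assumed RTT
    print(f"edge:  {edge:.0f} ms -> {distance_travelled_m(speed, edge):.1f} m")
    print(f"cloud: {cloud:.0f} ms -> {distance_travelled_m(speed, cloud):.1f} m")
```

Under these assumptions, the remote path adds 100 ms, during which the vehicle travels an extra 3 m (30 m/s × 0.1 s) before a decision arrives.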

Examples & Analogies

Think of edge computing like a smart home assistant that can handle some requests on the device itself. If you ask it to play music stored locally, it does not have to wait for a distant server; it uses its own resources and starts playing right away.

Low-Latency and Energy Efficiency

Chapter 2 of 3


Chapter Content

● Emphasize low latency, energy efficiency, and real-time analytics

Detailed Explanation

One of the primary advantages of edge and fog computing is their focus on low latency: data is processed and acted upon as quickly as possible. This is particularly important for applications that need immediate feedback, such as self-driving cars or healthcare monitoring devices. Energy efficiency is another key consideration. Processing data at the edge means less data is transmitted over the network, which saves bandwidth and lets devices conserve power, something vital for battery-operated devices in the Internet of Things (IoT).
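The bandwidth and energy argument can be sketched with a simple linear model. It assumes that radio energy grows in proportion to the bytes transmitted and that edge filtering forwards only a fixed fraction of samples. The function names, the sampling rate, and the `joules_per_byte` value are hypothetical placeholders, not measured figures.

```python
def bytes_sent(samples_per_hour: int, bytes_per_sample: int, fraction_forwarded: float) -> float:
    """Bytes uplinked per hour when only a fraction of samples leaves the device."""
    if not 0.0 <= fraction_forwarded <= 1.0:
        raise ValueError("fraction_forwarded must be between 0 and 1")
    return samples_per_hour * bytes_per_sample * fraction_forwarded


def radio_energy_j(n_bytes: float, joules_per_byte: float) -> float:
    """Assumed linear model: transmission energy proportional to bytes sent."""
    return n_bytes * joules_per_byte


if __name__ == "__main__":
    # Assumed workload: one 16-byte sample per second.
    raw = bytes_sent(samples_per_hour=3600, bytes_per_sample=16, fraction_forwarded=1.0)
    filtered = bytes_sent(samples_per_hour=3600, bytes_per_sample=16, fraction_forwarded=0.01)
    joules_per_byte = 1e-6  # hypothetical radio cost, for illustration only
    print(f"cloud-only:    {raw:.0f} B/h, {radio_energy_j(raw, joules_per_byte):.4f} J")
    print(f"edge-filtered: {filtered:.0f} B/h, {radio_energy_j(filtered, joules_per_byte):.6f} J")
```

With one 16-byte sample per second, forwarding 1% of samples instead of all of them cuts uplink traffic from 57,600 to 576 bytes per hour. The model deliberately ignores the energy spent on local computation and on radio wake-up overhead, so in practice the net saving depends on both sides of that trade-off.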

Examples & Analogies

Imagine a time-sensitive game like basketball, where every second counts. If the referee had to pause the game to check every ruling with a faraway judge, play would slow to a crawl. Edge computing is like letting the referee on the court make the call immediately, so decisions happen in real time and the game flows without unnecessary interruptions.

Compact and Autonomous Systems

Chapter 3 of 3


Chapter Content

● Require compact, autonomous SoCs

Detailed Explanation

Edge and fog computing necessitate the use of compact, autonomous System-on-Chips (SoCs) that are capable of processing data independently without relying on larger, centralized servers. These SoCs integrate various functionalities in a small form factor, allowing devices to operate seamlessly at the edge of the network. This design approach is especially useful for IoT devices, drones, smart cameras, and more, where space and power efficiency are critical.

Examples & Analogies

Consider a Swiss Army knife. It combines many tools into a single device, making it compact and versatile for various tasks. Similarly, an SoC functions like this by combining multiple processing capabilities in a small chip, making it perfect for devices that need to perform various jobs without requiring extra space or power.

Key Concepts

- Edge Computing: Processes data at or near the source.

- Fog Computing: Distributes and processes data across multiple layers.

- Low Latency: Essential for real-time responses in critical applications.

- Energy Efficiency: Minimizes power consumption in data processing.

Examples & Applications

Autonomous vehicles rely on edge computing to analyze sensor data instantly for navigation decisions.

Smart city infrastructures utilize fog computing to manage data from various sensors and devices efficiently.

Memory Aids


Rhymes

In the fog are data streams, processed before they leave our dreams; edge close by, quick as can be, helping data flow effortlessly.

Stories

Imagine a city where every street light is smart, processing data on the go. This is the world of edge computing where decisions are made in real-time, while fog computing connects them together, managing the flow of information between them efficiently.

Memory Tools

EASE: Edge for Accuracy, Speed, and Efficiency.

Acronyms

FEED

Fog for Efficient Enhanced Data processing.


Glossary

- Edge Computing

A computing paradigm that processes data at or near the source of data generation.

- Fog Computing

A decentralized computing infrastructure that extends cloud computing to the edge of the network.

- Latency

The delay before a transfer of data begins following an instruction for its transfer.

- System-on-Chip (SoC)

An integrated circuit that incorporates all components of a computer or system on a single chip.
