Heterogeneous Computing

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Heterogeneous Computing

Good morning, class! Today, we're diving into heterogeneous computing. Can anyone tell me what we mean by 'heterogeneous' in this context?

It means different, right? Like using different kinds of processors for different tasks?

Exactly! Heterogeneous computing involves combining different types of processors like CPUs, GPUs, and NPUs on a single chip. This approach helps optimize the execution of diverse workloads.

So, why would we want to do that?

Great question! Some tasks need a lot of processing power, while others need very little. By combining high-performance and low-power processors, we can run each task on a core that suits it instead of spending full power on everything.

Is there a specific example of this kind of architecture?

Yes! A well-known example is ARM’s big.LITTLE architecture. It combines big, powerful cores for heavy tasks with little, energy-efficient cores for lighter tasks.

That sounds really useful for mobile devices!

Absolutely! This design allows mobile devices to conserve battery life while ensuring smooth performance when needed. Now, let’s summarize: Heterogeneous computing combines different processors to optimize task execution and increase power efficiency.

Benefits of Heterogeneous Computing

In our last session, we talked about the basics of heterogeneous computing. Can anyone remind me why it is advantageous?

Because it allows tasks to run more efficiently by matching processors to the work that needs to be done.

That's correct! By assigning tasks to the most suitable processors, we can improve performance and reduce power consumption. This is crucial in environments like mobile devices, where battery life is paramount.

And it also helps with thermal management, right? Since we don't always need to run the powerful cores at full load.

Exactly! By dynamically migrating workloads between high-power and low-power cores, we can minimize heat generation and enhance overall system reliability.

What happens when a task needs more performance than a low-power core can provide?

In such cases, the system can automatically switch to using high-performance cores. This flexibility is crucial in providing a seamless user experience across a variety of applications.

So, it’s all about balancing power and performance!

Exactly right! Remember, the key benefit of heterogeneous computing is the optimized balance it strikes between performance and power efficiency.

Dynamic Workload Scheduling

Now let’s discuss the dynamic scheduling of workloads in heterogeneous systems. Can anyone explain how this process works?

Is it about deciding which task goes to which processor based on current needs?

That's right! The system continuously monitors the processing demands of tasks and decides whether to run them on high-performance or low-power cores based on performance and power requirements.

What kind of criteria do they use to make those decisions?

Excellent question! Criteria can include current workload intensity, power availability, and thermal conditions. The goal is to ensure efficient processing while conserving energy.

How important is it for applications to be designed for this kind of scheduling?

Very important! For optimum efficiency, application developers need to enable workload awareness in their apps. This means building applications that can recognize and adapt to varying performance environments.

So, in a way, it’s a partnership between hardware capability and software design!

Exactly! Both components must work harmoniously for the benefits of heterogeneous computing to be fully realized. Let’s recap: dynamic workload scheduling matches tasks with the right processor type to optimize performance and power use.
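The decision criteria mentioned in this conversation (workload intensity, power availability, and thermal conditions) can be illustrated with a small sketch. The names `SystemState` and `select_core` and all threshold values are hypothetical and chosen only for illustration; real schedulers are considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class SystemState:
    load: float           # task demand, 0.0 (idle) to 1.0 (saturated); assumed scale
    battery: float        # remaining charge, 0.0 to 1.0; assumed scale
    temperature_c: float  # chip temperature in degrees Celsius


def select_core(state: SystemState,
                load_threshold: float = 0.6,   # hypothetical threshold
                low_battery: float = 0.15,     # hypothetical threshold
                max_temp_c: float = 85.0) -> str:  # hypothetical limit
    """Pick a core type from the three criteria discussed in the lesson."""
    # Thermal conditions: a hot chip stays on the low-power cores.
    if state.temperature_c >= max_temp_c:
        return "low-power"
    # Power availability: a nearly empty battery also favors low-power cores.
    if state.battery <= low_battery:
        return "low-power"
    # Workload intensity: only heavy tasks justify the high-performance cores.
    return "high-performance" if state.load >= load_threshold else "low-power"


print(select_core(SystemState(load=0.9, battery=0.8, temperature_c=50.0)))
print(select_core(SystemState(load=0.9, battery=0.1, temperature_c=50.0)))
```

In this sketch the thermal and battery checks take priority over the load check. That ordering is a design assumption, not something the lesson prescribes.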

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

The section on heterogeneous computing discusses how combining different types of processors, such as CPUs, GPUs, and NPUs, on the same chip can enhance execution efficiency for a variety of workloads, exemplified by ARM's big.LITTLE architecture.

Detailed

Heterogeneous Computing

Heterogeneous computing refers to the use of multiple types of processors or cores within a single System-on-Chip (SoC). This architecture allows for specialized processing capabilities, leading to optimized execution of a broader range of workloads, which can include everything from graphics rendering to machine learning tasks.

One notable example is ARM’s big.LITTLE architecture, which employs both high-performance and low-power cores. This setup enables dynamic workload migration, allowing the system to shift tasks between cores based on their performance and power consumption needs. As a result, heterogeneous computing can balance performance against power consumption, making it particularly advantageous in portable and embedded systems.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to Heterogeneous Computing

Chapter 1 of 4

Chapter Content

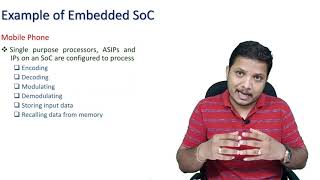

Heterogeneous architectures combine different types of processors (CPU, GPU, NPU) on the same chip.

Detailed Explanation

Heterogeneous computing refers to the integration of multiple types of processors within a single chip, such as CPUs (Central Processing Units), GPUs (Graphics Processing Units), and NPUs (Neural Processing Units). Each type of processor is optimized for specific tasks; for instance, CPUs handle general-purpose computing tasks, GPUs specialize in parallel processing for graphics and complex calculations, and NPUs focus on machine learning computations. This architectural approach allows for greater efficiency and performance when handling various workloads by leveraging the strengths of each processor type.
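As a rough illustration of matching work to processor type, the sketch below maps workload categories to the processor roles described above. The category names, the `PREFERRED` table, and the `pick_processor` function are hypothetical; falling back to the CPU when a specialized unit is absent is an assumption made for this example.

```python
from enum import Enum


class Processor(Enum):
    CPU = "CPU"
    GPU = "GPU"
    NPU = "NPU"


# Hypothetical workload categories, mapped to the roles described in the text.
PREFERRED = {
    "general": Processor.CPU,
    "graphics": Processor.GPU,
    "parallel_math": Processor.GPU,
    "ml_inference": Processor.NPU,
}


def pick_processor(kind: str, available: set[Processor]) -> Processor:
    """Return the preferred processor for a workload kind, else the CPU."""
    preferred = PREFERRED.get(kind, Processor.CPU)
    # Assumption: the general-purpose CPU can always run the work, only slower.
    return preferred if preferred in available else Processor.CPU


all_units = {Processor.CPU, Processor.GPU, Processor.NPU}
print(pick_processor("ml_inference", all_units).value)
print(pick_processor("ml_inference", {Processor.CPU, Processor.GPU}).value)
```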

Examples & Analogies

Think of a heterogeneous computing system like a team of specialists in a restaurant. The chef (CPU) prepares a variety of dishes, the pastry chef (GPU) focuses on making desserts, and the nutritionist (NPU) designs meal plans based on dietary needs. Each specialist has a specific role, and by working together, they create a complete and balanced meal efficiently.

Optimization of Diverse Workloads

Chapter 2 of 4

Chapter Content

Optimizes execution of diverse workloads

Detailed Explanation

The primary advantage of a heterogeneous architecture is its ability to optimize the execution of diverse workloads. Different tasks require different types of processing power; for example, rendering graphics involves a lot of data parallelism, which GPUs excel at, while general application code, which tends to be sequential and branch-heavy, runs best on CPUs. By distributing tasks to the appropriate processor, systems can perform more efficiently, achieving faster execution with lower energy consumption.
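The claim about faster execution with lower energy consumption follows from energy = power × time. The sketch below works through that arithmetic; all runtime and power figures are invented for illustration and do not describe any real chip, and the names `Option` and `cheapest_within_deadline` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    runtime_s: float  # assumed time to finish the task on this processor
    power_w: float    # assumed average power draw while running

    @property
    def energy_j(self) -> float:
        # Energy in joules = power in watts * time in seconds.
        return self.power_w * self.runtime_s


def cheapest_within_deadline(options, deadline_s):
    """Return the lowest-energy option that meets the deadline, or None."""
    feasible = [o for o in options if o.runtime_s <= deadline_s]
    if not feasible:
        return None
    return min(feasible, key=lambda o: o.energy_j)


# Made-up figures for a data-parallel task.
options = [
    Option("CPU", runtime_s=4.0, power_w=2.0),  # 2.0 W * 4.0 s = 8.0 J
    Option("GPU", runtime_s=0.5, power_w=6.0),  # 6.0 W * 0.5 s = 3.0 J
]

best = cheapest_within_deadline(options, deadline_s=1.0)
print(best.name, best.energy_j)
```

With these assumed numbers the GPU draws three times the power but finishes eight times sooner, so it uses less energy overall. A task with little parallelism would not see that speedup, and the balance would tip back toward the CPU.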

Examples & Analogies

Imagine mixing different colors for paint. Each color (or processor type) has its unique properties, and when combined correctly, they create a more vibrant final product. Similarly, using different processors effectively allows a computing system to handle complex tasks more efficiently.

Example: ARM's big.LITTLE Architecture

Chapter 3 of 4

Chapter Content

Example: ARM’s big.LITTLE architecture combines high-performance and low-power cores.

Detailed Explanation

ARM's big.LITTLE architecture is a practical example of heterogeneous computing. It combines high-performance cores (big) with energy-efficient cores (LITTLE) on the same chip. The system dynamically switches between these cores based on the workload requirements. For instance, during lighter tasks like scrolling through a web page, the system might use the more energy-efficient LITTLE cores. However, for demanding tasks like gaming or video editing, it will activate the more powerful big cores. This approach not only improves performance but also extends battery life in mobile devices.
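The switching behavior described above can be approximated with a toy policy. This is a simplified illustration and not how any real big.LITTLE scheduler is implemented; the function names and the two utilization thresholds are hypothetical. Using separate "up" and "down" thresholds (hysteresis) is a design choice of this sketch that keeps a task from bouncing between clusters when its load hovers near a single cutoff.

```python
def choose_cluster(current: str, utilization: float,
                   up: float = 0.80, down: float = 0.30) -> str:
    """Hypothetical policy: move up when busy, move down when mostly idle."""
    if current == "LITTLE" and utilization > up:
        return "big"
    if current == "big" and utilization < down:
        return "LITTLE"
    return current  # between the thresholds, stay where we are


def run_trace(trace, start: str = "LITTLE"):
    """Apply the policy to a sequence of utilization samples."""
    cluster = start
    placements = []
    for utilization in trace:
        cluster = choose_cluster(cluster, utilization)
        placements.append(cluster)
    return placements


# Invented utilization samples: light use, a burst of heavy work, then idle.
trace = [0.10, 0.20, 0.90, 0.60, 0.50, 0.20, 0.10]
print(run_trace(trace))
# -> ['LITTLE', 'LITTLE', 'big', 'big', 'big', 'LITTLE', 'LITTLE']
```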

Examples & Analogies

Consider a car that has both a powerful engine for speed (big core) and a fuel-efficient engine for city driving (LITTLE core). The car automatically selects the best engine based on driving conditions, optimizing performance and fuel consumption.

Dynamic Workload Migration

Chapter 4 of 4

Chapter Content

Enables dynamic workload migration based on performance/power needs.

Detailed Explanation

Heterogeneous computing architectures allow for dynamic workload migration: tasks can be moved between different types of cores at run time based on current performance and power needs. For instance, if a device is running low on battery, light tasks can be kept on the LITTLE cores, while the big cores are used only when a task demands them. This flexibility lets a system deliver performance when it is needed while keeping power usage low the rest of the time.

Examples & Analogies

Imagine a construction site with workers assigned to different tasks. A supervisor reallocates workers based on the workload—more workers on heavy lifting when needed and fewer during lighter tasks, ensuring the project stays on schedule while managing energy efficiently.

Key Concepts

- Heterogeneous Computing: The integration of different types of processors on a single chip for optimized workloads.

- Dynamic Workload Scheduling: A method in heterogeneous systems that allocates tasks to the most suitable processors based on real-time performance and power needs.

Examples & Applications

ARM's big.LITTLE architecture combines high-performance and energy-efficient cores for mobile devices.

In heterogeneous computing, a machine learning task may be processed by an NPU while traditional processing may be handled by a CPU.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

For power and speed, just choose the right core, with both high and low, you'll achieve so much more!

Stories

Imagine a busy restaurant where some chefs specialize in quick dishes and others in gourmet meals. This is like how heterogeneous computing allows different processors to handle specific tasks efficiently.

Memory Tools

HETERO - Heterogeneous Efficient Task Execution with Several Types of Resources Operating.

Acronyms

DWS - Dynamic Workload Scheduling

Decide Where to Schedule based on workload.

Glossary

- Heterogeneous Computing

A computing environment that utilizes different types of processors or cores in a single architecture for optimized workload execution.

- CPU

Central Processing Unit, the general-purpose processor that performs most of the processing inside a computer.

- GPU

Graphics Processing Unit, a specialized processor designed to accelerate graphics rendering and parallel processing tasks.

- NPU

Neural Processing Unit, a type of processor designed to accelerate artificial intelligence workloads.

- Dynamic Workload Scheduling

The process of distributing tasks across multiple processors based on current performance and power needs.

- big.LITTLE architecture

An ARM architecture that combines high-performance cores with energy-efficient cores for optimized power use and performance.
