Common Control Strategies

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

PID Control explained

Today, we're going to explore PID control, one of the most crucial strategies in control systems. PID stands for Proportional, Integral, and Derivative. Who can tell me what each component does?

The proportional part adjusts the control output based on the current error?

Exactly! Now, what about the integral part?

It deals with past errors, accumulating them over time to eliminate steady-state error.

And the derivative part looks at how fast the error is changing, right?

Correct! Together, these three components help to minimize the error in control systems. To remember them, think of the acronym PID: 'Pursue Immediate Discrepancies'.

Now, let’s summarize: PID control helps us correct present errors, accumulate past discrepancies to improve future outputs, and adjust for the rate of error change. How does that sound to everyone?

State-Space Control

Next up is state-space control. Can anyone remind me what a state variable is?

It’s a variable that represents a system's state at a given time, like position or velocity?

Correct! State-space control uses these variables to describe the dynamics of the system. Why might state-space be preferable for complicated systems?

Because it can handle multiple inputs and outputs more effectively than a transfer function model?

Exactly! In multi-input multi-output systems, state-space approaches provide a more comprehensive representation. Here’s a memory aid: Remember 'S for State and Systems.'

In summary, state-space control is well suited to complex, multi-variable dynamics that a single transfer function cannot capture compactly.

Optimal Control

Now, let’s discuss optimal control. What does it aim to achieve?

It aims to find the best control inputs to minimize the cost function representing errors?

Exactly! By minimizing this cost function, we achieve efficiency in our control system. Can anyone provide an example of where optimal control might be applied?

Perhaps in robotics, where precision and efficiency are crucial?

Great example! Remember the phrase: 'Optimal Control = Efficiency through Precision.' It helps to remember this strategy's essence.

In summary, optimal control is about finding the best balance to minimize deviation, enhancing overall system performance.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

Common control strategies are crucial for effective control system design. This section discusses PID control, which combines proportional, integral, and derivative actions; state-space control for complex systems; and optimal control aimed at minimizing system discrepancies. Understanding these strategies is essential for improving system performance in various applications.

Detailed

Common Control Strategies

This section focuses on three fundamental strategies employed in control systems engineering: PID control, state-space control, and optimal control. Each of these strategies plays a vital role in ensuring that systems behave as intended and maintain performance despite external disturbances.

1. PID Control

PID control stands for Proportional-Integral-Derivative control. This widely used method improves control performance by combining three terms:

- Proportional: Corrects the error based on the present deviation from the desired output.

- Integral: Addresses accumulated past errors, thus eliminating steady-state errors over time.

- Derivative: Anticipates future errors by considering the rate of change of the error.

These components work together to minimize the error in the control system. For instance, a PID controller is frequently utilized in temperature control systems to maintain a specific temperature accurately.
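As a rough illustration of how the three terms combine, the sketch below implements a textbook discrete-time PID update and runs it against a made-up first-order room model. The class name `PID`, the function `simulate_room`, the gains, and the plant constants `tau` and `gain` are all assumptions chosen for illustration, not values from this lesson.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook discrete-time PID controller (illustrative gains, not tuned)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement              # P: present deviation
        self.integral += error * dt                 # I: accumulated past error
        if self.prev_error is None:
            derivative = 0.0                        # no history on the first call
        else:
            derivative = (error - self.prev_error) / dt  # D: rate of change
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_room(controller: PID, setpoint: float = 22.0, ambient: float = 15.0,
                  steps: int = 5000, dt: float = 0.1) -> float:
    """Hypothetical room model: dT/dt = -(T - ambient) / tau + gain * u."""
    tau, gain = 20.0, 0.05                          # assumed plant constants
    temp = ambient
    for _ in range(steps):
        u = controller.update(setpoint, temp, dt)
        temp += dt * (-(temp - ambient) / tau + gain * u)  # forward-Euler step
    return temp


# Full PID versus proportional-only control on the same assumed plant.
final_pid = simulate_room(PID(kp=4.0, ki=0.5, kd=1.0))
final_p = simulate_room(PID(kp=4.0, ki=0.0, kd=0.0))
print(f"PID final temperature:    {final_pid:.3f}")
print(f"P-only final temperature: {final_p:.3f}")
```

With these assumed constants the proportional-only run settles below the 22 degree setpoint, while the run with integral action reaches it. This is the steady-state error that the integral term is described as removing.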

2. State-Space Control

State-space control is based on the representation of a system's dynamics using state variables. It uses equations governing how these state variables evolve over time, allowing for a systematic representation of both single-input, single-output and multi-input, multi-output systems. This method is particularly advantageous when a system is too complex to model conveniently with traditional transfer functions. In state-space control, feedback of the state can be applied to improve system response and stability.
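To make the idea of state feedback concrete, here is a minimal sketch that simulates a state-space model x' = Ax + Bu with the control law u = -Kx. The plant (a unit mass on a frictionless track), the helper names `mat_vec` and `euler_step`, and the gain `K` are assumptions picked for illustration. `K` was chosen by hand so that the closed-loop characteristic polynomial is s^2 + 3s + 2.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def mat_vec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def euler_step(A: Matrix, B: Matrix, x: Vector, u: Vector, dt: float) -> Vector:
    """One forward-Euler step of the state equation x' = A x + B u."""
    ax = mat_vec(A, x)
    bu = mat_vec(B, u)
    return [xi + dt * (axi + bui) for xi, axi, bui in zip(x, ax, bu)]


# Assumed plant: state x = [position, velocity], input u = [force].
A = [[0.0, 1.0],
     [0.0, 0.0]]
B = [[0.0],
     [1.0]]
C = [[1.0, 0.0]]          # output y = position

# State feedback u = -K x. With this K, A - B K = [[0, 1], [-2, -3]],
# whose eigenvalues are -1 and -2, so the closed loop is stable.
K = [[2.0, 3.0]]

x = [1.0, 0.0]            # start 1 unit away from the origin, at rest
dt = 0.01
for _ in range(2000):     # simulate 20 seconds
    u = [-ui for ui in mat_vec(K, x)]
    x = euler_step(A, B, x, u, dt)

y = mat_vec(C, x)
print("final state:", x, "final output:", y)
```

The controller here acts on the full state (position and velocity), not only on the measured output, which is the feature that distinguishes state feedback from output-only feedback.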

3. Optimal Control

Optimal control seeks the control inputs that minimize a cost function, which typically penalizes the difference between the desired system response and the actual output and often the control effort as well. Because effort can be weighed against accuracy, the resulting strategy is efficient as well as effective. In practice, optimal control can involve sophisticated mathematical techniques, but it offers the potential for significant improvements in control system performance.
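One common concrete instance of this idea is the linear-quadratic regulator (LQR), which the lesson does not name; it is used here only as an example. The sketch below handles the scalar discrete-time case x[k+1] = a x[k] + b u[k] with cost sum(q x^2 + r u^2), iterating the Riccati recursion to find the feedback gain. The function names and the values of `a`, `b`, `q`, and `r` are assumptions for illustration.

```python
from typing import Tuple


def scalar_lqr_gain(a: float, b: float, q: float, r: float,
                    iterations: int = 500) -> Tuple[float, float]:
    """Iterate the scalar discrete-time Riccati recursion to a fixed point.

    Returns (k, p): the feedback gain for u = -k x and the cost-to-go
    coefficient, so the optimal cost from state x0 is p * x0**2.
    """
    p = q
    for _ in range(iterations):
        p = q + a * a * p - (a * b * p) ** 2 / (r + b * b * p)
    k = a * b * p / (r + b * b * p)
    return k, p


def closed_loop_cost(a: float, b: float, q: float, r: float, k: float,
                     x0: float = 1.0, steps: int = 200) -> float:
    """Accumulate q*x^2 + r*u^2 while applying u = -k x for a fixed horizon."""
    x, cost = x0, 0.0
    for _ in range(steps):
        u = -k * x
        cost += q * x * x + r * u * u
        x = a * x + b * u
    return cost


# Assumed plant and weights: mildly unstable (a > 1), equal state/effort weights.
a, b, q, r = 1.1, 1.0, 1.0, 1.0
k_opt, p = scalar_lqr_gain(a, b, q, r)
cost_opt = closed_loop_cost(a, b, q, r, k_opt)

print("optimal gain:", k_opt)
print("cost with optimal gain:", cost_opt)
print("cost with larger gain: ", closed_loop_cost(a, b, q, r, k_opt + 0.1))
print("cost with smaller gain:", closed_loop_cost(a, b, q, r, k_opt - 0.1))
```

Raising `r` relative to `q` makes control effort more expensive and yields a gentler gain, while raising `q` does the opposite. That trade-off is what the cost function encodes.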

Overall, understanding these control strategies is pivotal for engineers to design systems that are robust, efficient, and capable of meeting performance specifications.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

PID Control

Chapter Content

A widely used controller that combines three terms: Proportional, Integral, and Derivative, to achieve better control performance.

The proportional term corrects errors based on the current deviation, the integral term addresses accumulated past errors, and the derivative term anticipates future errors based on the rate of change.

Detailed Explanation

PID control is a popular strategy in control systems that improves system performance by using three distinct calculations. The Proportional term reacts to the current error in the system, meaning it responds in real time to how far the current state is from the desired state. The Integral term accumulates past errors, which helps eliminate steady-state error over time. Finally, the Derivative term anticipates future error from how quickly the current error is changing, allowing the system to react before the error grows larger. Together, these three components provide precise and efficient control.
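The three calculations are usually written as a single control law. The form below is the standard parallel PID expression; the symbols (gains K_p, K_i, K_d, reference r(t), measured output y(t), and controller output u(t)) are notation assumed here, since the lesson itself does not define any.

```latex
u(t) = K_p\, e(t) + K_i \int_0^{t} e(\tau)\, d\tau + K_d\, \frac{de(t)}{dt},
\qquad e(t) = r(t) - y(t)
```

Each term on the right corresponds to one component: the first to the present error, the second to its accumulated history, and the third to its rate of change.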

Examples & Analogies

Imagine you are driving a car and trying to hold a target speed. The Proportional component is like your foot on the gas pedal: the further you are below the target speed, the harder you press. The Integral aspect is akin to noticing that you have been running slightly slow for a while and adding a little more throttle to make up for it. Finally, the Derivative component is similar to looking ahead on the road; if you see a hill approaching, you can start to adjust your speed before you reach it. Together, these strategies ensure smooth and effective driving.

State-Space Control

Chapter Content

In state-space control, the system's state variables (like position, velocity, etc.) are used to describe the system, and control is based on the state of the system at any given time.

This approach is particularly useful for systems that are difficult to model with transfer functions (e.g., multi-input, multi-output systems).

Detailed Explanation

State-space control is a modern approach that utilizes the current state of a system to make decisions about control actions. Instead of just looking at input and output, state-space control focuses on the variables that define the system's state, such as position or velocity. This method can handle complex systems that involve multiple inputs and outputs—something traditional methods, like transfer functions, struggle to accurately model. By considering the entire state of the system, it can provide more effective control and allow for better performance in real-world applications.
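In the linear, time-invariant case, the description above is usually written as a pair of matrix equations. The notation is the conventional one and is an assumption here, as the lesson does not introduce symbols: x is the state vector, u the input vector, y the output vector, and A, B, C, D are constant matrices.

```latex
\dot{x}(t) = A\,x(t) + B\,u(t), \qquad y(t) = C\,x(t) + D\,u(t),
\qquad x \in \mathbb{R}^{n},\; u \in \mathbb{R}^{m},\; y \in \mathbb{R}^{p}
```

Because u and y are vectors, the same two equations cover systems with several inputs and outputs (m > 1 or p > 1) without any change in form.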

Examples & Analogies

Think of state-space control as how an athlete trains. If a soccer player evaluates their performance, they look at various aspects: how they're moving (position), how fast they're running (velocity), and their stance. Rather than focusing on just scoring a goal (input) and the final score (output), they consider their entire state in terms of physical and tactical execution. This approach allows them to improve their performance holistically.

Key Concepts

-

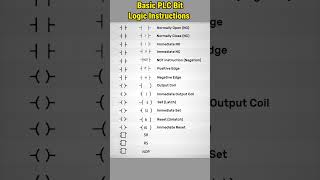

PID Control: A strategy using proportional, integral, and derivative actions for error correction.

-

State-Space Control: A representation of system dynamics using state variables.

-

Optimal Control: A method for minimizing discrepancies between desired and actual outputs.

Examples & Applications

A temperature control system using PID to maintain consistent room temperature.

A robotic arm using state-space control to accurately follow desired trajectories.

A flight control system implementing optimal control to ensure fuel efficiency during operation.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

For control that's nice and neat, PID helps make errors meet.

Stories

Imagine a chef (PID) who tastes each dish (present error), remembers past dishes (integral), and anticipates how flavors change (derivative), creating the perfect meal every time.

Memory Tools

To remember PID: P for Present, I for In the past, D for Dynamic future.

Acronyms

SOC for State, Outputs, Control - essential parts of state-space representation.

Glossary

- PID Control

A control strategy combining Proportional, Integral, and Derivative components for improved system regulation.

- State-Space Control

A control method using state variables to represent the system's dynamics, suitable for complex systems.

- Optimal Control

A strategy aimed at minimizing a cost function that represents the difference between desired and actual system behavior.
