Autoencoders

Interactive Audio Lesson

A student-teacher conversation explaining the topic in a relatable way.

Introduction to Autoencoders

Today, we’re discussing autoencoders, which are fascinating tools in deep learning. Can anyone tell me what they think an autoencoder does?

I think it’s something related to encoding data?

Exactly! An autoencoder encodes data into a compressed format and then decodes it back to the original data. Remember, it has two parts: the encoder and the decoder. Can anyone name the two functions of the autoencoder?

The encoder compresses the data, and the decoder reconstructs it?

Great job! The encoder takes the input data and compresses it into a latent representation, while the decoder reconstructs the input from that representation. A good memory aid is ‘E for Encode, D for Decode’.

Applications of Autoencoders

Now that we know what autoencoders are, let’s discuss their applications. What do you think are some uses for autoencoders in the real world?

Maybe in reducing image sizes for storage?

That’s a good example! They’re also used in anomaly detection. If an autoencoder is trained on a dataset of normal patterns, it can flag anything that doesn't fit. Can anyone tell me why this might be useful?

It helps in finding fraud or errors in data?

Precisely! Additionally, autoencoders can be employed for denoising data. They learn to separate useful information from noise, so the reconstruction comes out cleaner than the noisy input.

How Autoencoders Function

Let’s dive deeper into how autoencoders function. Can anyone describe the basic architecture of an autoencoder?

It has input, hidden, and output layers?

Exactly! The input layer receives the data, while the hidden layer represents the compressed form. The output layer aims to replicate the input as closely as possible. Remember, it’s crucial for the autoencoder to minimize the difference between the input and output. This process is called minimizing reconstruction error. Who can tell me why it’s important?

To ensure that the essential features of the data are captured?

Correct! Capturing those features is essential for successful applications.

Introduction & Overview

Quick Overview

Standard

This section discusses autoencoders, which consist of an encoder and a decoder, functioning as a compression mechanism for data. Autoencoders are applied in various fields, particularly in anomaly detection and denoising processes due to their ability to learn efficient data representations.

Detailed

Autoencoders

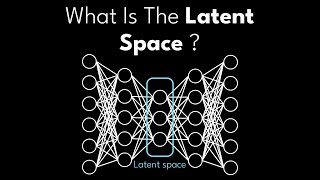

Autoencoders are a type of artificial neural network used for unsupervised learning, primarily aimed at reducing the dimensionality of data or learning compressed representations. They consist of two main components: an encoder that transforms the input into a compressed representation called the latent space, and a decoder that reconstructs the original data from this compressed form.

The architecture of an autoencoder generally consists of input, hidden, and output layers, grouped into two components:

- Encoder: This part captures essential features of the input data and encodes it into a lower-dimensional representation. It acts as a feature extractor.

- Decoder: The decoder attempts to reconstruct the original input from the latent representation. Because the reconstruction must pass through this smaller representation, the network is pushed to keep critical features and ignore irrelevant information.
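The encoder and decoder roles described above can be sketched as two functions whose shapes mirror each other. This is a minimal, untrained illustration in plain Python: the dimensions, the random weights, and the helper names `make_matrix`, `matvec`, `encode`, and `decode` are assumptions made for the example, not part of any particular library.

```python
import random

def make_matrix(rows, cols, rng):
    """Small random weight matrix as a list of rows (hypothetical helper)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]

rng = random.Random(0)
INPUT_DIM, LATENT_DIM = 4, 2   # assumed sizes; the latent space is smaller

W_enc = make_matrix(LATENT_DIM, INPUT_DIM, rng)   # encoder weights: 4 -> 2
W_dec = make_matrix(INPUT_DIM, LATENT_DIM, rng)   # decoder weights: 2 -> 4

def encode(x):
    """Map an input to its lower-dimensional latent representation."""
    return matvec(W_enc, x)

def decode(z):
    """Map a latent representation back to input space."""
    return matvec(W_dec, z)

x = [1.0, 0.5, -0.5, 2.0]
z = encode(x)        # compressed (latent) representation
x_hat = decode(z)    # reconstruction, same size as the input

print(len(x), len(z), len(x_hat))  # 4 2 4
```

Because the weights here are random, `x_hat` will not resemble `x`; only training to reduce the reconstruction error makes the round trip meaningful.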

Applications and Significance

Autoencoders have become important in various applications:

- Anomaly Detection: They can detect outliers in data by learning normal behavior patterns. If the reconstruction error is higher than a predefined threshold, the data point is considered an anomaly.

- Denoising: Autoencoders can learn to remove noise from data by training on noisy inputs with the clean data as the reconstruction target.
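The thresholding rule used for anomaly detection can be sketched as follows. The `toy_reconstruct` function stands in for a trained autoencoder and is purely an assumption for illustration, as are the threshold value and the choice of mean squared error as the error measure.

```python
def reconstruction_error(x, x_hat):
    """Mean squared difference between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

def is_anomaly(x, reconstruct, threshold):
    """Flag x when its reconstruction error exceeds the threshold."""
    return reconstruction_error(x, reconstruct(x)) > threshold

# Stand-in for a trained autoencoder (an assumption for illustration):
# it has "learned" that normal points have roughly equal coordinates,
# so it reconstructs every input as its own mean repeated.
def toy_reconstruct(x):
    mean = sum(x) / len(x)
    return [mean] * len(x)

THRESHOLD = 0.1  # hypothetical; in practice chosen from errors on normal data

normal_point = [1.0, 1.1, 0.9]
odd_point = [1.0, 5.0, -3.0]

print(is_anomaly(normal_point, toy_reconstruct, THRESHOLD))  # False
print(is_anomaly(odd_point, toy_reconstruct, THRESHOLD))     # True
```

The normal point is reconstructed almost exactly, so its error stays under the threshold, while the odd point is reconstructed poorly and gets flagged.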

In summary, autoencoders represent a powerful tool in the deep learning toolbox, enabling efficient data processing, representation learning, and various applications across different domains.

Overview of Autoencoders

Chapter 1 of 3

Chapter Content

Autoencoders are used for unsupervised learning and dimensionality reduction.

Detailed Explanation

Autoencoders are a type of neural network designed to learn efficient representations of data. They do this by encoding the input data into a compressed form and then decoding it back to reconstruct the original input. This process allows them to capture the most important features of the data while discarding noise. In unsupervised learning, autoencoders find patterns in data without labeled outcomes, making them useful for exploratory data analysis and feature extraction.

Examples & Analogies

Think of an autoencoder as a skilled artist who compresses a detailed drawing into a simple sketch that captures the essence of the original artwork. When shown the sketch, the artist can recreate the detailed drawing. Similarly, autoencoders take input data, simplify it while retaining its essence, and can recreate a close version of the input.

Structure of Autoencoders

Chapter 2 of 3

Chapter Content

Autoencoders consist of two main components: Encoder and Decoder.

Detailed Explanation

The structure of autoencoders is divided into two main parts: the encoder and the decoder. The encoder processes the input data and maps it to a lower-dimensional space, which effectively compresses the data. In contrast, the decoder takes this compressed representation and attempts to reconstruct the input data from it. This two-part structure allows autoencoders to learn not just how to reduce data complexity but also how to generalize the information contained in the original input.
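To see how the two parts are learned together, here is a small sketch that trains a linear autoencoder with plain gradient descent on the reconstruction error. Everything specific in it is an assumption for illustration: the toy data, the single latent value, the absence of activation functions, and the learning rate and step count. Real autoencoders are usually nonlinear and built with a deep learning framework.

```python
# Toy data: 2-D points that all lie on the line x2 = 2 * x1, so one
# latent number is enough to describe each point.
data = [[t, 2.0 * t] for t in (-1.0, -0.5, 0.0, 0.5, 1.0)]

w = [0.1, 0.1]   # encoder weights: 2 inputs -> 1 latent value
v = [0.1, 0.1]   # decoder weights: 1 latent value -> 2 outputs

def encode(x):
    return w[0] * x[0] + w[1] * x[1]

def decode(z):
    return [v[0] * z, v[1] * z]

def mean_reconstruction_error(points):
    """Squared error summed over dimensions, averaged over points."""
    total = 0.0
    for x in points:
        x_hat = decode(encode(x))
        total += sum((h - a) ** 2 for h, a in zip(x_hat, x))
    return total / len(points)

initial_loss = mean_reconstruction_error(data)

LEARNING_RATE = 0.05   # hypothetical settings chosen for this toy problem
STEPS = 200

for _ in range(STEPS):
    grad_w = [0.0, 0.0]
    grad_v = [0.0, 0.0]
    for x in data:
        z = encode(x)
        residual = [v[i] * z - x[i] for i in range(2)]   # x_hat - x
        # Chain rule: error -> decoder output -> latent value -> encoder.
        dz = sum(2.0 * residual[i] * v[i] for i in range(2))
        for i in range(2):
            grad_v[i] += 2.0 * residual[i] * z / len(data)
            grad_w[i] += dz * x[i] / len(data)
    for i in range(2):
        w[i] -= LEARNING_RATE * grad_w[i]
        v[i] -= LEARNING_RATE * grad_v[i]

final_loss = mean_reconstruction_error(data)
print(f"before: {initial_loss:.4f}  after: {final_loss:.6f}")
```

The encoder and decoder weights are updated in the same loop from the same error signal, which is why neither part needs labels: the input itself is the training target.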

Examples & Analogies

Imagine a storage facility where items are stored in boxes. The encoder is like the person who packs and labels these boxes in a way that maximizes space and minimizes clutter. The decoder is the same person who, when asked for an item, can find and unpack it efficiently, so that what comes out of the box still resembles the original item even though it was stored more compactly.

Applications of Autoencoders

Chapter 3 of 3

Chapter Content

Applications include anomaly detection and denoising.

Detailed Explanation

Autoencoders have several practical applications. One major application is anomaly detection, where they learn the patterns of normal data during training. When they encounter new data, they can identify anomalies if the reconstruction error is high, indicating that the new data doesn't conform to the learned patterns. Another application is denoising, where autoencoders are trained on noisy inputs and learn to filter out noise to reconstruct the clean version of the data, which is especially useful in image processing.
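For the denoising case, the key detail is how the training pairs are built: the network receives a corrupted input but is scored against the clean original. A minimal sketch of that data preparation is shown below. The Gaussian noise model, the noise level, and the helper name `add_gaussian_noise` are assumptions for illustration.

```python
import random

def add_gaussian_noise(x, sigma, rng):
    """Return a noisy copy of x; the original list is left unchanged."""
    return [value + rng.gauss(0.0, sigma) for value in x]

rng = random.Random(42)   # fixed seed so the sketch is repeatable
NOISE_STD = 0.1           # hypothetical noise level

clean_samples = [
    [0.0, 0.5, 1.0, 0.5],
    [1.0, 0.5, 0.0, 0.5],
]

# Each training pair is (noisy input, clean target): the network sees the
# corrupted version, and its output is compared with the original.
training_pairs = [
    (add_gaussian_noise(x, NOISE_STD, rng), x) for x in clean_samples
]

for noisy, clean in training_pairs:
    print([round(n, 2) for n in noisy], "->", clean)
```

Training then proceeds as for an ordinary autoencoder, except that the reconstruction error is measured between the output and the clean target rather than the noisy input.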

Examples & Analogies

Consider an autoencoder used in fraud detection for a bank. It learns what normal transactions look like over time. If a transaction occurs that is significantly different from the norm (an anomaly), the bank can flag it for review. Similarly, think of an autoencoder applied in noise reduction for photographs; it filters out unwanted noise, similar to how a musician might use sound editing software to remove background chatter from a recording, ensuring clarity in the final output.

Key Concepts

- Autoencoder: A neural network for unsupervised learning that compresses data into a latent space and reconstructs it.

- Encoder: Compresses the input into a lower-dimensional representation.

- Decoder: Reconstructs the original input from the encoded representation.

- Reconstruction Error: Used to evaluate the quality of the reconstruction by measuring the difference between input and output.
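The section does not fix a specific formula for the reconstruction error. One common choice, assumed here for illustration, is the mean squared error between an input $x$ with $n$ components and its reconstruction $\hat{x}$, where $f$ denotes the encoder and $g$ the decoder (both symbols are introduced for this example):

```latex
\mathcal{L}(x, \hat{x}) = \frac{1}{n} \sum_{i=1}^{n} \left( x_i - \hat{x}_i \right)^2,
\qquad \hat{x} = g\big(f(x)\big)
```

Training adjusts the parameters of $f$ and $g$ to make this quantity small on average over the training data.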

Examples & Applications

A common example of an autoencoder application is in image compression, where an image is reduced in size while retaining its important features.

Autoencoders can also be used for detecting fraud in transactions: after learning to reconstruct normal transaction patterns, they flag transactions that they reconstruct poorly.

Memory Aids

Rhymes

Encode to compress, decode to express, autoencoders truly are the best.

Stories

Imagine a character who learns to pack their bags light for a trip. They compress what they need into a small suitcase (encoder) and later unpack it (decoder) to get everything needed for their adventure.

Memory Tools

E-D: Remember the order, Encoder first, then Decoder!

Acronyms

AE: The ‘A’ stands for Auto, the ‘E’ for Encoder, the heart of the process.

Glossary

- Autoencoder

A type of neural network used to learn efficient representations of data, consisting of an encoder and a decoder.

- Encoder

The component of an autoencoder that compresses the input data into a lower-dimensional representation.

- Decoder

The component of an autoencoder that reconstructs the original input from the compressed representation.

- Latent Space

The lower-dimensional representation of data produced by the encoder in an autoencoder.

- Reconstruction Error

The difference between the original input and its reconstructed output, used to measure the performance of the autoencoder.
