Strategic Memory Management and Robust Device Drivers in RTOS Environments

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Static Memory Allocation

Let's start with static memory allocation. Can anyone tell me what it is?

Static memory is allocated at compile-time, right?

Correct! Static allocation means we define our memory needs upfront. Now, what are the advantages?

It’s predictable, and there's no runtime allocation overhead.

Exactly! Now, while static allocation avoids fragmentation, can you think of any downsides?

It can be inflexible because we need to know the maximum memory at compile-time.

Good point! Let's summarize—static memory allocation is deterministic and avoids fragmentation but lacks flexibility. Remember: 'Safe and sure is static.'

Dynamic Memory Allocation

Next, let’s talk about dynamic memory allocation. Who can describe this method?

Dynamic allocation happens at runtime from a heap, using functions like malloc.

That's right! What’s an advantage of dynamic allocation?

It's flexible, allowing us to allocate memory as needed.

Exactly! But what are the significant drawbacks of dynamic allocation?

It can lead to fragmentation and unpredictable allocation times.

Absolutely! Always remember: 'Dynamic allocation is flexible but can be a fragmenting foe.'

Memory Pools

Now let’s explore memory pools. What do you think a memory pool is?

I think it's a mix of static and dynamic memory, allowing predefined fixed-size blocks.

Exactly! It allocates memory from pre-defined blocks, reducing fragmentation. What are the benefits?

Faster and more deterministic allocation since it manages fixed blocks.

Yes! But what about the cons?

It can cause internal fragmentation since blocks are of a fixed size.

Well done! Summarizing this, remember: 'Memory pools provide speed and control but can waste a little space.'

Memory Protection Units

Let's shift to Memory Protection Units. Who can explain their purpose?

They protect memory spaces from unauthorized access, preventing crashes.

That's correct! Why is protection critical in RTOS?

It ensures that critical tasks can't be corrupted by buggy code.

Exactly! Remember this: 'MPUs guard tasks, ensuring safe processes last.'

Device Drivers

Lastly, let’s cover device drivers. What functions do they serve?

They connect the RTOS to hardware, handling low-level functions.

Correct! What are some critical roles device drivers play in RTOS?

They manage data transfer and handle interrupts for peripherals.

Well done! And why is abstraction important in this context?

It simplifies application programming by hiding hardware complexity.

Exactly! To wrap up, remember: 'Device drivers bridge software and hardware, keeping systems in order.'

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

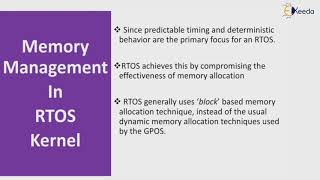

Efficient memory management and robust device drivers are vital for ensuring performance and stability in RTOS environments, where resource constraints are common. This section discusses various memory allocation strategies and the crucial function of device drivers in interfacing application software with hardware.

Detailed

In embedded systems utilizing Real-Time Operating Systems (RTOS), effective memory management and robust device drivers are critical for performance and reliability. Given the constraints of memory in such systems, strategic memory management involves approaches like static memory allocation, dynamic memory allocation, and memory pools to optimize available resources. Static allocation offers predictability and avoids fragmentation by allocating memory at compile-time. In contrast, dynamic allocation provides flexibility in memory usage at runtime but poses challenges such as fragmentation and non-determinism. Memory pools combine aspects of both static and dynamic methods by pre-allocating fixed-size blocks for frequent use. Additionally, Memory Protection Units (MPUs) enhance system robustness by safeguarding against unauthorized memory access, ensuring the integrity of critical tasks. The section further discusses the role of device drivers as the bridge between application software and hardware peripherals, emphasizing their functions in hardware abstraction, interrupt management, and data transfer operations, often utilizing RTOS synchronization mechanisms for safe, concurrent access to hardware resources.

6.5.1 Strategic Memory Management within an RTOS Context

Chapter Content

Embedded systems often operate with severely limited Random Access Memory (RAM) and Flash memory. Therefore, how memory is managed becomes a critical design decision affecting system stability, performance, and predictability.

- Static Memory Allocation (Compile-Time Allocation):

- Concept: All necessary memory for tasks (their TCBs and stacks), RTOS objects (queues, semaphores, mutexes), and application buffers is allocated and fixed at compile time. Memory regions are defined in the linker script or as global/static variables, and their sizes are known and immutable before the program even begins execution.

- Advantages:

- Highly Predictable: No runtime overhead for memory allocation or deallocation. Allocation time is effectively zero.

- No Fragmentation: The dreaded problem of memory fragmentation (where usable memory is broken into small, unusable chunks) simply does not occur, as memory blocks are pre-assigned.

- Robustness: Significantly reduces the risk of memory-related bugs such as memory leaks (forgetting to free allocated memory) or "use-after-free" errors (accessing memory that has already been deallocated), which are notoriously difficult to debug in dynamic systems.

- Determinism: Since allocation is compile-time, memory operations are deterministic.

- Disadvantages:

- Less Flexible: Requires precise knowledge of maximum memory needs for all tasks and objects upfront. Overestimating can waste valuable RAM; underestimating leads to system failure.

- Limited Dynamic Behavior: Cannot easily adapt to changing memory requirements at runtime (e.g., creating tasks dynamically).

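- Typical Use Cases: Highly recommended for hard real-time and safety-critical systems where absolute predictability and avoidance of runtime memory issues are paramount. Many smaller RTOSes provide options that encourage or simplify static allocation. FreeRTOS, for example, offers static creation functions such as xTaskCreateStatic() (enabled with configSUPPORT_STATIC_ALLOCATION), and its simplest heap scheme, heap_1.c, never frees memory, so it behaves much like static allocation once start-up is complete.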
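The idea of fixing every buffer at compile time can be sketched in plain C. This is a minimal illustration rather than any particular RTOS's API: the task names, the sizes, and the 4 KiB RAM budget are all assumptions chosen for the example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical sizes; a real project derives them from worst-case analysis. */
#define SENSOR_STACK_WORDS 128u
#define LOGGER_STACK_WORDS 256u
#define RX_BUFFER_BYTES    64u
#define RAM_BUDGET_BYTES   4096u   /* assumed RAM budget for this module */

/* All storage is reserved by the compiler and linker; nothing is requested at run time. */
static uint32_t sensor_stack[SENSOR_STACK_WORDS];
static uint32_t logger_stack[LOGGER_STACK_WORDS];
static uint8_t  rx_buffer[RX_BUFFER_BYTES];

/* The footprint is a compile-time constant, so the build itself can enforce the budget. */
#define STATIC_FOOTPRINT_BYTES \
    (sizeof(sensor_stack) + sizeof(logger_stack) + sizeof(rx_buffer))

static_assert(STATIC_FOOTPRINT_BYTES <= RAM_BUDGET_BYTES,
              "static allocations exceed the RAM budget");

/* Illustrative table tying each task to its pre-assigned stack. */
typedef struct {
    const char *name;
    uint32_t   *stack;
    size_t      stack_words;
} task_storage_t;

static const task_storage_t task_table[] = {
    { "sensor", sensor_stack, SENSOR_STACK_WORDS },
    { "logger", logger_stack, LOGGER_STACK_WORDS },
};

/* Returns the total number of bytes reserved statically by this module. */
size_t static_footprint_bytes(void)
{
    size_t total = sizeof(rx_buffer);
    for (size_t i = 0; i < sizeof(task_table) / sizeof(task_table[0]); i++) {
        total += task_table[i].stack_words * sizeof(uint32_t);
    }
    return total;
}

/* Accessor for the statically allocated receive buffer. */
uint8_t *rx_buffer_storage(void)
{
    return rx_buffer;
}
```

Because the sizes are constants, an over-budget configuration fails at build time instead of at run time, which is the predictability benefit described above.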

Detailed Explanation

This section discusses the importance of strategic memory management in embedded systems that have limited memory resources. It introduces static memory allocation, which allocates all necessary memory at compile time. This method is very predictable and avoids the memory fragmentation and allocation bugs that can occur with dynamic memory allocation. However, it lacks flexibility: memory requirements must be known beforehand, which limits its adaptability to changing conditions.

Examples & Analogies

Think of static memory allocation like planning a dinner party where you prepare all the food ahead of time, knowing exactly how many guests will attend. You measure the ingredients and lock them in before cooking, ensuring you have the right amount and type of food without the risk of shortages. If you were to cook dynamically, guessing how much each guest would eat as they arrive, you might end up with either too much waste or not enough food (like dynamic memory allocation issues).

Dynamic Memory Allocation (Heap Allocation at Runtime)

Chapter Content

- Dynamic Memory Allocation (Heap Allocation at Runtime):

- Concept: Memory is allocated and deallocated during program execution from a general-purpose memory pool known as the heap (analogous to using malloc() and free() in standard C programming).

- Advantages:

- High Flexibility: Adapts easily to varying and unpredictable memory requirements throughout the system's runtime.

- Efficient Usage: Memory is allocated only when needed and can be returned to the pool when no longer required, potentially leading to better overall memory utilization compared to over-provisioning with static allocation.

- Disadvantages (Significant for RTOS):

- Non-Deterministic: The time taken by malloc() and free() can vary significantly with the allocator's algorithm and the current state of the heap (for example, how long a free list must be searched), making worst-case execution time hard to bound.

- Memory Fragmentation: Over extended periods, repeated allocations and deallocations of different-sized blocks can lead to the heap becoming fragmented into many small, unusable chunks, even if the total available memory is theoretically sufficient for a larger allocation. This can cause subsequent malloc() calls to fail.

- Memory Leaks: A common programming error where a program requests memory but fails to free it after use. Over time, this gradually consumes the heap, leading to system failure.

- Race Conditions on Heap Management: The malloc/free functions operate on shared heap data structures. If they can be called from multiple tasks concurrently, the heap manager must protect them with a lock or critical section (for example a mutex, or by briefly suspending the scheduler), introducing potential blocking and overhead.

- Typical Use Cases: Generally used with extreme caution in RTOS applications, primarily for non-critical, infrequent allocations where predictability is less of a concern. Many RTOSes provide specialized, simpler heap managers that are more optimized and slightly more predictable than typical general-purpose OS heap implementations.
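As one illustration of a "simpler heap manager", the sketch below shows an allocate-only bump allocator, similar in spirit to FreeRTOS's heap_1 scheme but not taken from it. The names `bump_alloc` and `bump_free_bytes`, the 256-byte heap, and the 8-byte alignment are assumptions for the example. It is not thread-safe; on a real RTOS the call would sit inside a critical section.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BUMP_HEAP_BYTES 256u
#define BUMP_ALIGN      8u     /* assumed alignment requirement */

static _Alignas(8) uint8_t bump_heap[BUMP_HEAP_BYTES];
static size_t bump_next = 0;   /* offset of the first unused byte */

/* Allocates `bytes` from the heap in constant time; memory is never freed,
 * so the heap cannot fragment. Returns NULL when the request does not fit. */
void *bump_alloc(size_t bytes)
{
    /* Round the request up so that every block starts on an aligned address. */
    size_t rounded = (bytes + (BUMP_ALIGN - 1u)) & ~(size_t)(BUMP_ALIGN - 1u);

    if (bytes == 0u || rounded < bytes || rounded > BUMP_HEAP_BYTES - bump_next) {
        return NULL;
    }
    void *block = &bump_heap[bump_next];
    bump_next += rounded;
    return block;
}

/* Returns how many bytes are still available. */
size_t bump_free_bytes(void)
{
    return BUMP_HEAP_BYTES - bump_next;
}
```

The trade-off is explicit: allocation time is constant and fragmentation is impossible, but memory can never be returned, so this style only suits objects created once at start-up.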

Detailed Explanation

This section explains dynamic memory allocation, where memory is allocated from a pool (the heap) at runtime. While this approach provides flexibility for fluctuating memory needs, it brings unpredictable allocation times, potential fragmentation, and the risk of memory leaks if allocated memory is not properly freed. Developers need to be cautious when using this method in RTOS environments because of this inherent timing unpredictability.

Examples & Analogies

Imagine dynamic memory allocation like a restaurant that prepares food on demand (using a kitchen supply room as its heap). If a large order comes in, and they don't have enough ingredients ready (like not enough memory), they have to scramble to prepare the food, which can lead to delays and mistakes. If they keep making small batches as orders come in without organizing supplies properly, they may run out of ingredients unexpectedly or have waste (fragmentation) when they don't use what they have efficiently.

Memory Pools (Fixed-Size Block Allocation)

Chapter Content

- Memory Pools (Fixed-Size Block Allocation):

- Concept: A hybrid memory management strategy that combines aspects of both static and dynamic allocation. Instead of a single, amorphous heap, the system pre-allocates one or more large blocks of memory (the "pools") at compile time. Each pool is then internally subdivided into many smaller, identical, fixed-size blocks. When a task requests memory, it is given one of these pre-sized blocks from a pool.

- Advantages:

- Faster and More Deterministic: Allocation and deallocation operations are very quick and predictable, as they primarily involve simply managing a linked list of free blocks within the pool.

- No External Fragmentation: Because all blocks within a given pool are of the same size, the classic problem of external fragmentation (where memory is broken into unusable small pieces) is eliminated.

- Easier Debugging: Memory errors are often confined to a specific pool.

- Disadvantages:

- Internal Fragmentation: If a task needs less memory than the block size of the pool it allocates from, the unused remainder of that block is wasted (internal fragmentation).

- Fixed Size Limitations: Can only allocate blocks of predefined sizes. Requires multiple pools if different fixed sizes are needed.

- Less Flexible: Cannot handle arbitrary-sized memory requests.

- Typical Use Cases: Very common in RTOS design for allocating frequently used, fixed-size objects like messages transferred via queues, control block structures, or specific data buffers. This offers a good balance of flexibility, performance, and predictability.
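The free-list mechanism described above can be sketched as follows. This is a minimal, single-threaded illustration; the names `pool_init`, `pool_alloc`, and `pool_free`, the 32-byte block size, and the four-block pool are assumptions for the example, and a real RTOS pool would add locking.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define POOL_BLOCK_BYTES 32u
#define POOL_BLOCK_COUNT 4u

/* While a block is free, its first bytes hold the link to the next free block. */
typedef union pool_block {
    union pool_block *next;
    uint8_t payload[POOL_BLOCK_BYTES];
} pool_block_t;

static pool_block_t  pool_storage[POOL_BLOCK_COUNT];  /* reserved at compile time */
static pool_block_t *pool_free_list = NULL;

/* Threads every block onto the free list. Call once before first use. */
void pool_init(void)
{
    pool_free_list = NULL;
    for (size_t i = 0; i < POOL_BLOCK_COUNT; i++) {
        pool_storage[i].next = pool_free_list;
        pool_free_list = &pool_storage[i];
    }
}

/* Pops one block from the free list in constant time; NULL if the pool is empty. */
void *pool_alloc(void)
{
    pool_block_t *block = pool_free_list;
    if (block != NULL) {
        pool_free_list = block->next;
    }
    return block;
}

/* Pushes a block back onto the free list in constant time. */
void pool_free(void *ptr)
{
    pool_block_t *block = ptr;
    assert(block >= &pool_storage[0] && block < &pool_storage[POOL_BLOCK_COUNT]);
    block->next = pool_free_list;
    pool_free_list = block;
}
```

Both operations touch only the head of the list, which is why their cost does not depend on how many blocks are in use. A request for 5 bytes still consumes a full 32-byte block, which is the internal fragmentation mentioned above.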

Detailed Explanation

This section details memory pools, a strategy combining features of static and dynamic memory allocation. Instead of using a single heap, memory pools consist of pre-allocated regions subdivided into fixed-size blocks, ensuring quicker allocations and no external fragmentation. However, a request that needs less memory than the fixed block size wastes the remainder of the block, a downside known as internal fragmentation.

Examples & Analogies

Think of memory pools like a bakery that bakes a fixed number of identical pies in advance. When customers order, they are handed a pre-made pie immediately instead of waiting for one to be baked (fast, predictable allocation). A customer who only wants a single slice still receives a whole pie, so the rest goes uneaten (internal fragmentation), and a customer who wants a different size can only be served if the bakery has also prepared a tray of that size in advance (a separate pool).

Memory Protection Hardware (MMU / MPU): Hardware-Enforced Safety Guards

Chapter Content

- Memory Protection Hardware (MMU / MPU):

- Purpose: The primary goal of memory protection hardware is to prevent tasks or applications from accidentally (or maliciously) accessing memory regions that they are not authorized to use. This is a crucial feature for enhancing the robustness, stability, and security of an RTOS-based system, especially where critical data or code must be isolated.

- Memory Management Unit (MMU): (Typically found in more powerful embedded processors, especially those capable of running complex OSes like embedded Linux, e.g., ARM Cortex-A series).

- Full Virtual Memory: Provides a sophisticated layer of abstraction, translating the virtual addresses used by applications into physical addresses. This enables features such as per-process address spaces, paging, and demand paging.

- Hardware-Enforced Protection: Defines granular access permissions (e.g., read-only, read/write, execute, no access) for memory pages or segments. If a task attempts an unauthorized memory access (e.g., writing to a read-only area, executing code from a data area), the MMU raises a hardware exception (for example a page fault or data abort), which the OS can then handle, often by terminating the offending process (reported as a "segmentation fault" on Unix-like systems).

- Use Cases: Necessary for multi-process operating systems where strong isolation between processes is required, or for complex systems requiring virtual memory features.

- Memory Protection Unit (MPU): (More commonly found in microcontrollers with an RTOS, e.g., ARM Cortex-M series).

- Simpler Protection: A less complex hardware unit compared to an MMU. It does not provide full virtual memory but focuses on hardware-enforced memory access control.

- Regional Protection: An MPU allows the definition of a limited number of distinct memory regions (e.g., typically 8 to 16 configurable regions). Each region has a defined base address, size, and most importantly, specific access permissions.

- Access Permissions: For each configured region, you can specify permissions like:

- Read-Only (RO)

- Read/Write (RW)

- Execute (X), No-Execute (NX)

- Privileged vs. Unprivileged Access (e.g., kernel access vs. user task access).

- Use Cases in RTOS:

- Task Isolation: Preventing a buggy task from corrupting the memory (stack or data) of another task or the RTOS kernel itself.

- Kernel Protection: Marking the RTOS kernel's code and data memory as privileged access only, preventing user tasks from inadvertently modifying it.

- Stack Overflow Detection: Placing a small no-access guard region at the limit of each task's stack (the lowest address, for a stack that grows downward). If the stack overflows into this region, the access triggers an MPU fault, which the RTOS can catch and handle instead of letting the overflow silently corrupt adjacent memory.

- Peripheral Security: Restricting access to specific peripheral registers to only the necessary driver task.
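The region-and-permission model can be illustrated with a small software simulation. This is not real MPU register programming, which is device-specific; it only mimics the decision an MPU makes in hardware. The memory map, the `region_t` type, and the `access_allowed` function are hypothetical, and the model simply rejects any address outside every region, which simplifies what real hardware does.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { ACCESS_READ, ACCESS_WRITE, ACCESS_EXECUTE } access_t;

typedef struct {
    uint32_t base;             /* first address of the region */
    uint32_t size;             /* region length in bytes */
    bool     readable;
    bool     writable;
    bool     executable;
    bool     privileged_only;  /* true: only kernel-mode code may access */
} region_t;

/* Hypothetical memory map, for illustration only. */
static const region_t regions[] = {
    { 0x08000000u, 0x00010000u, true,  false, true,  false }, /* flash: read-only, executable */
    { 0x20000000u, 0x00001000u, true,  true,  false, true  }, /* kernel data: privileged only */
    { 0x20001000u, 0x00000020u, false, false, false, false }, /* stack guard: no access */
    { 0x20001020u, 0x000003E0u, true,  true,  false, false }, /* task stack: read/write, no-execute */
};

/* Returns true if the access would be permitted, false if it would fault. */
bool access_allowed(uint32_t addr, access_t kind, bool privileged)
{
    for (size_t i = 0; i < sizeof(regions) / sizeof(regions[0]); i++) {
        const region_t *r = &regions[i];
        if (addr < r->base || addr - r->base >= r->size) {
            continue;  /* address is outside this region */
        }
        if (r->privileged_only && !privileged) {
            return false;
        }
        switch (kind) {
        case ACCESS_READ:    return r->readable;
        case ACCESS_WRITE:   return r->writable;
        case ACCESS_EXECUTE: return r->executable;
        default:             return false;
        }
    }
    return false;  /* no region matched: treated as a fault in this model */
}
```

In this map the task stack grows downward from 0x20001400, so a write just below 0x20001020 lands in the guard region and is rejected, which is the stack overflow detection case from the list above.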

Detailed Explanation

This section describes how Memory Protection Units (MPUs) and Memory Management Units (MMUs) enhance the safety and security of an RTOS by enforcing access control to memory regions. MPUs are simpler and define a small number of memory regions with specific permissions, whereas MMUs provide full virtual memory capabilities. Both are crucial for isolating tasks from one another and safeguarding critical system components.

Examples & Analogies

Imagine an office building with restricted access areas. An MMU would be like a sophisticated keycard system that dynamically loads permissions for various rooms based on who you are. An MPU would be a simpler locked door system, where only those with the right key or badge can enter specific areas. Both protect sensitive information (data and code) from unauthorized access and ensure that employees (tasks) can't disrupt each other’s work.

6.5.2 Device Drivers in an RTOS Environment: The Hardware-Software Interface

Chapter Content

- Device Drivers in an RTOS Environment: The Hardware-Software Interface:

- Fundamental Role:

- Hardware Abstraction: Drivers abstract away the low-level complexities of directly manipulating hardware registers and bitfields. They provide a high-level, standardized Application Programming Interface (API) to application tasks (e.g., a simple UART_send_byte(char byte) instead of complex register writes). This promotes modularity and makes application code portable across different hardware platforms (as long as a driver exists).

- Interrupt Management: Drivers are responsible for registering and managing the specific Interrupt Service Routines (ISRs) associated with their peripheral, configuring interrupt priorities, and enabling/disabling interrupts.

- Data Transfer Management: They handle the nuances of moving data between the peripheral and system memory, whether through direct CPU access, Direct Memory Access (DMA), or other specialized mechanisms.

- Key RTOS Integration Points for Device Drivers:

- Synchronization Primitives: Device drivers almost invariably utilize RTOS synchronization primitives to ensure safe, concurrent access to the physical peripheral.

- Mutexes/Binary Semaphores: If a peripheral can only be accessed by one task at a time (e.g., a shared I2C bus, a single UART), the driver will use a mutex or binary semaphore to enforce mutual exclusion. Any task wanting to use the peripheral must first acquire the mutex.

- Counting Semaphores/Queues: For peripherals that buffer data (e.g., incoming UART data), the driver's ISR might increment a counting semaphore (signaling "data available") or put data into a message queue. The application task then waits on the semaphore or receives from the queue.

- Inter-Task Communication (ITC): Drivers frequently use ITC mechanisms for deferred interrupt processing. An ISR, after quickly handling the immediate hardware event (the "top half"), might put data into a message queue or set an event flag to signal a dedicated application task (the "bottom half") to perform the more complex data processing.

- Task Context: While ISRs handle the initial, immediate response from the hardware, any complex or potentially blocking operations (e.g., lengthy data processing, waiting for a peripheral to complete a multi-step sequence) are typically offloaded to a dedicated driver task that runs in standard RTOS task context, allowing it to safely use blocking RTOS APIs and be managed by the scheduler.

- Time Management: Drivers may utilize RTOS software timers for implementing timeouts (e.g., waiting for a peripheral response within a certain time) or for scheduling periodic maintenance tasks (e.g., polling a sensor at regular intervals if interrupt-driven is not feasible).
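The ISR-to-task hand-off described above ("top half" and "bottom half") is often built on a ring buffer. The sketch below is a host-runnable model, not code for a specific UART: `uart_rx_isr` stands in for the interrupt handler, `uart_read_byte` for the task-side read, and the 8-byte ring size is an assumption. It relies on there being exactly one producer and one consumer on a single core; a multi-core target would also need memory barriers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define RX_RING_SIZE 8u   /* must be a power of two for the index masking below */

static volatile uint8_t  rx_ring[RX_RING_SIZE];
static volatile uint32_t rx_head = 0;  /* advanced only by the ISR */
static volatile uint32_t rx_tail = 0;  /* advanced only by the task */

/* Top half: called from the (hypothetical) UART receive interrupt.
 * Does the minimum work: store the byte and return. */
bool uart_rx_isr(uint8_t byte)
{
    if (rx_head - rx_tail == RX_RING_SIZE) {
        return false;  /* ring is full, so the byte is dropped */
    }
    rx_ring[rx_head & (RX_RING_SIZE - 1u)] = byte;
    rx_head++;
    /* A real driver would now give a semaphore or notify the driver task. */
    return true;
}

/* Bottom half: called from task context. Returns false when no data is waiting;
 * a real driver would block on the semaphore instead of returning. */
bool uart_read_byte(uint8_t *out)
{
    if (rx_head == rx_tail) {
        return false;
    }
    *out = rx_ring[rx_tail & (RX_RING_SIZE - 1u)];
    rx_tail++;
    return true;
}
```

Each index has a single writer, so the two sides never modify the same variable, and the ISR stays short because all further processing happens in the task.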

Detailed Explanation

This section details the role of device drivers in an RTOS, emphasizing how they abstract hardware complexity, manage interrupts, and facilitate data transfers between peripherals and memory. It stresses the importance of synchronization primitives, such as mutexes and semaphores, in ensuring safe access to shared resources and highlights the integration of task contexts and RTOS features for efficient driver operation.
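Hardware abstraction can be sketched with a small table of function pointers. Everything here is hypothetical: the `uart_driver_t` interface, the `fake_uart_t` stand-in that records bytes in a buffer instead of writing a hardware register, and the `uart_send_string` helper are illustrations, not any vendor's API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Generic interface that application code is written against. */
typedef struct {
    int  (*send_byte)(void *ctx, uint8_t byte);  /* returns 0 on success */
    void  *ctx;                                  /* driver-private state */
} uart_driver_t;

/* Fake "hardware" used here in place of real registers. */
typedef struct {
    uint8_t tx_log[16];
    size_t  tx_count;
} fake_uart_t;

/* Driver implementation for the fake device. */
static int fake_uart_send_byte(void *ctx, uint8_t byte)
{
    fake_uart_t *hw = ctx;
    if (hw->tx_count >= sizeof(hw->tx_log)) {
        return -1;  /* transmit storage is full */
    }
    hw->tx_log[hw->tx_count++] = byte;
    return 0;
}

/* Application-level helper: it knows only the interface, not the hardware.
 * Returns the number of bytes sent, or -1 if the driver reports an error. */
int uart_send_string(const uart_driver_t *drv, const char *s)
{
    int sent = 0;
    while (*s != '\0') {
        if (drv->send_byte(drv->ctx, (uint8_t)*s++) != 0) {
            return -1;
        }
        sent++;
    }
    return sent;
}
```

Porting to different hardware then means supplying another `send_byte` implementation, while `uart_send_string` and the code that calls it stay unchanged.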

Examples & Analogies

Think of device drivers as translators between two languages: your application software and the hardware. Just like a translator makes sure that a visitor can communicate effectively, regardless of the language spoken in the country they are visiting, drivers convert high-level commands from software into low-level instructions that hardware can understand. For instance, when you request to print a document, the printer driver translates your print command into a specific language the printer can understand, managing the whole interaction in the background.

Key Concepts

- Static Memory Allocation: Predictable and fixed memory allocation done at compile time.

- Dynamic Memory Allocation: Flexible allocation done at runtime, but poses risks.

- Memory Pool: Pre-allocated fixed-size memory blocks for efficient allocation.

- Memory Protection Units: Enhance system stability through controlled memory access.

- Device Drivers: Interfaces between application software and hardware, managing data transfer and interrupts.

Examples & Applications

Static allocation can be used for fixed-sized buffers in tasks where maximum size is known.

Dynamic allocation may be applied in an application that requires variable-sized data structures.

Memory pools can allocate message buffers for inter-task communication quickly.

Memory Protection Units are used in medical devices to prevent unauthorized access to critical memory regions.

Device drivers for UART allow applications to send and receive data without knowing the underlying hardware details.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

In embedded systems, memory's a dime; static for safety, dynamic at runtime.

Stories

Imagine a library where all books must be known before opening; that's static memory. But in a café, we grab just what's needed, that's dynamic memory, flexible and quick!

Memory Tools

For memory management, think 'SMD' - Static is for safety, Memory Pools are for speed, Dynamic is for need!

Acronyms

Remember 'DPM' - Dynamic for flexibility, Pools for speed, and Memory Protection for safety.

Glossary

- Static Memory Allocation

Memory allocation that occurs at compile-time, ensuring predictability and no fragmentation.

- Dynamic Memory Allocation

Memory allocation that occurs at runtime, allowing flexible usage but posing risks like fragmentation and leaks.

- Memory Pool

A system where fixed-size blocks of memory are pre-allocated to optimize allocation speed and determinism.

- Memory Protection Unit (MPU)

Hardware that provides memory access control, enhancing system stability and preventing unauthorized access.

- Device Drivers

Software components that interface between the RTOS and hardware peripherals, managing data transfer and interrupts.
