Convergence of LMS

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to LMS Algorithm Convergence

Today, we’re diving into the convergence of the LMS algorithm. Can anyone tell me what convergence means in this context?

I think it means how quickly the algorithm finds a stable solution.

Exactly! Convergence refers to how quickly and reliably the LMS algorithm adjusts its filter coefficients toward the values that minimize the mean square error. Now, what do you think influences this convergence?

Is it related to the step-size parameter, μ?

Great point! The step-size parameter μ is crucial. If it’s too high, the algorithm might diverge. Can anyone suggest why that might happen?

Maybe it causes the updates to be too aggressive, overshooting the optimal values?

Right! It leads to instability. Conversely, if μ is too small, what happens?

The convergence will be very slow, making it inefficient.

Exactly! So, finding the optimal value for μ is key to effective adaptation in LMS.

Balancing Step-Size Parameter

Now, let’s talk about how we might choose an optimal value for μ. How could someone go about it?

I think they could try different values and see which one stabilizes the output.

Good approach! This empirical method is common. What else could be used to set μ?

Maybe theoretical analysis based on the input signals?

Correct! Theoretical analysis, for example a stability bound based on the power of the input signal, can guide the initial value of μ. What are some risks of a poorly chosen μ?

It could lead to either slow processing or, worse, instability.

Exactly right! Remember, in adaptive filtering, finding that sweet spot for μ can optimize performance.

Importance of LMS Algorithm in Adaptive Filtering

Finally, let’s recap why understanding the convergence of the LMS algorithm is vital in adaptive filtering.

Because without proper convergence, the filter can’t adapt effectively to changing signals.

Exactly! LMS is popular for its simplicity and efficiency, but we must manage μ wisely. Can anyone think of real-world applications for this knowledge?

It’s used in noise cancellation systems, right?

Yes! Adaptive noise cancellation is a prime example, where maintaining quick and stable convergence is crucial. Any other applications?

What about in communications, like echo cancellation?

Absolutely! These applications highlight the importance of effective adaptive filtering, of which understanding LMS convergence is fundamental.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

The convergence of the LMS algorithm is critically dependent on the step-size parameter, μ. A large μ can lead to instability and divergence, while a small μ results in slow convergence. Understanding this balance is key to effective application of the LMS algorithm in adaptive filtering.

Detailed

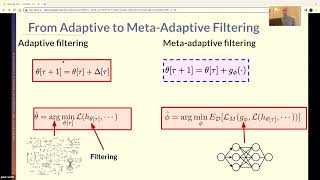

Convergence of LMS

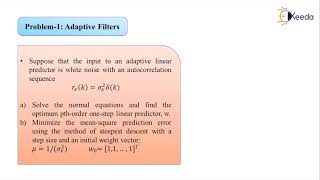

The convergence of the Least Mean Squares (LMS) algorithm is governed primarily by the step-size parameter, μ, which sets how far the filter coefficients move in response to each error sample. If μ is too large, the adaptation becomes unstable and the coefficients can oscillate or diverge instead of settling on a solution. If μ is too small, the algorithm still converges, but so slowly that it may be impractical.
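For reference, one common textbook form of the LMS update and its stability condition is sketched below. The notation is an assumption, since this section does not define it: w(n) is the coefficient vector, x(n) the vector of the N most recent input samples, d(n) the desired signal, e(n) the error, and λ_max the largest eigenvalue of the input autocorrelation matrix R = E[x(n)xᵀ(n)]. Some texts fold a factor of 2 into μ, which halves the bounds.

```latex
\begin{aligned}
e(n) &= d(n) - \mathbf{w}^{T}(n)\,\mathbf{x}(n) \\
\mathbf{w}(n+1) &= \mathbf{w}(n) + \mu\, e(n)\,\mathbf{x}(n) \\
0 &< \mu < \frac{2}{\lambda_{\max}}
  \quad\text{(convergence in the mean)} \\
0 &< \mu < \frac{2}{N\,E\!\left[x^{2}(n)\right]}
  \quad\text{(more conservative, since } \lambda_{\max} \le \operatorname{tr}(\mathbf{R}) = N\,E[x^{2}(n)]\text{)}
\end{aligned}
```

The second bound is usually the more practical one, because it needs only an estimate of the input power rather than the eigenvalues of R.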

To achieve optimal performance, the step-size parameter needs to strike a delicate balance. The optimal value is often derived using experimental methods or theoretical analyses, taking into account the specific characteristics of the signal and noise in the application. This section emphasizes the simplicity and computational efficiency of the LMS algorithm while also pointing to the careful consideration required in practical implementations to ensure that it converges effectively.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Effect of Step-Size Parameter

Chapter 1 of 3

Chapter Content

The convergence of the LMS algorithm depends on the step-size parameter μ. If μ is too large, the algorithm may become unstable and fail to converge. If μ is too small, convergence will be slow.

Detailed Explanation

This section discusses the role of the step-size parameter (μ) in the LMS algorithm. The step size dictates how quickly the algorithm adapts the filter coefficients based on the error. If μ is set too high, each coefficient update overshoots, so the algorithm oscillates or diverges rather than settling on a solution. If μ is set too low, the coefficients change very slowly, so convergence takes a long time and the filter is slow to track changes in the signal.
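The effect of the step size can be seen in a small experiment. The sketch below is illustrative only and makes several assumptions that are not in the lesson: a hypothetical 3-tap "unknown" system `h`, white Gaussian input with unit variance, no measurement noise, the update w ← w + μ·e·x, and a hypothetical helper name `run_lms`. It reports how far the adapted coefficients are from `h` after a fixed number of samples.

```python
import random


def run_lms(mu, n_samples=500, seed=0):
    """Identify a 3-tap FIR system with LMS.

    Returns (misalignment, diverged), where misalignment is the Euclidean
    distance between the adapted coefficients and the true ones.
    """
    rng = random.Random(seed)
    h = [0.5, -0.3, 0.2]           # hypothetical system to be identified
    w = [0.0] * len(h)             # adaptive filter coefficients
    x_buf = [0.0] * len(h)         # most recent input samples, newest first
    mis = sum(hi * hi for hi in h) ** 0.5
    for _ in range(n_samples):
        x_buf = [rng.gauss(0.0, 1.0)] + x_buf[:-1]
        d = sum(hi * xi for hi, xi in zip(h, x_buf))   # desired signal
        y = sum(wi * xi for wi, xi in zip(w, x_buf))   # filter output
        e = d - y                                      # error signal
        w = [wi + mu * e * xi for wi, xi in zip(w, x_buf)]
        mis = sum((wi - hi) ** 2 for wi, hi in zip(w, h)) ** 0.5
        if mis > 1e6:              # stop once the coefficients have blown up
            return mis, True
    return mis, False


if __name__ == "__main__":
    # Small, moderate, and too-large step sizes (values chosen for illustration).
    for mu in (0.001, 0.05, 3.0):
        mis, diverged = run_lms(mu)
        print(f"mu={mu}: misalignment={mis:.3g}, diverged={diverged}")
```

With these assumed settings, the smallest step size is still far from the solution when the run ends, the moderate one settles close to `h`, and the largest one is stopped early because the coefficients grow without bound.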

Examples & Analogies

Think of the step-size parameter like the gas pedal in a car. If you press the pedal too hard (large μ), the car may skid or lose control (unstable convergence). If you barely press it (small μ), the car moves forward very slowly (slow convergence). The optimal driving speed (ideal μ) ensures you reach your destination smoothly and efficiently.

Choosing the Optimal μ

Chapter 2 of 3

Chapter Content

The optimal value of μ is often chosen experimentally or based on theoretical analysis.

Detailed Explanation

Finding the right value for the step size (μ) is crucial for the performance of the LMS algorithm. Because a value that is too high causes instability and a value that is too low causes slow convergence, practitioners often determine μ through experimentation. They might start with a small value, observe how quickly the algorithm adapts, and adjust it until they find a balance that provides good convergence speed without sacrificing stability.
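As a rough illustration of how theoretical analysis can supply a starting point for that experimentation, the sketch below estimates the input power and derives a conservative upper limit of 2 / (N · power) for μ, then takes a fraction of it as the initial value. The function names and the default `fraction=0.1` are hypothetical choices, and the bound assumes the update w ← w + μ·e·x; it is a starting guess to be tuned, not a guarantee of good performance.

```python
def input_power(x):
    """Average power (mean of squared samples) of the input signal."""
    if not x:
        raise ValueError("input signal is empty")
    return sum(v * v for v in x) / len(x)


def step_size_upper_bound(x, n_taps):
    """Conservative upper limit on mu: 2 / (n_taps * input power)."""
    power = input_power(x)
    if power == 0.0:
        raise ValueError("input signal has zero power")
    return 2.0 / (n_taps * power)


def initial_step_size(x, n_taps, fraction=0.1):
    """Starting value for mu as a fraction of the conservative bound."""
    return fraction * step_size_upper_bound(x, n_taps)
```

For example, a unit-power input and a 4-tap filter give an upper limit of 0.5, so `initial_step_size` would suggest starting near 0.05 and adjusting from there.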

Examples & Analogies

Consider baking a cake as an analogy. The amount of sugar in the recipe is like the step-size parameter: too much sugar (large μ) will result in a cake that's overly sweet and might collapse (unstable), while too little sugar (small μ) will create a bland cake (slow convergence). Finding the right balance is key to making a delicious cake.

Advantages of the LMS Algorithm

Chapter 3 of 3

Chapter Content

The LMS algorithm is known for its simplicity and low computational cost, and it converges reasonably quickly when μ is well chosen, making it a popular choice for adaptive filtering tasks.

Detailed Explanation

The LMS algorithm stands out in the field of adaptive filtering due to its straightforward implementation and efficiency. Its simplicity means that it requires fewer resources to operate, making it appealing for real-time applications where computational power is limited. Additionally, it provides sufficiently rapid convergence under appropriate conditions, allowing it to be used effectively in various applications like noise cancellation, echo suppression, and system identification.
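To make the low cost concrete, here is a minimal sketch of a single LMS iteration, assuming the update w ← w + μ·e·x and a hypothetical function name `lms_step`. For a filter with N coefficients it needs roughly 2N + 1 multiplications per sample, with no matrix operations.

```python
def lms_step(w, x, d, mu):
    """One LMS iteration.

    w  : current filter coefficients (length N)
    x  : the N most recent input samples, aligned with w
    d  : desired output for this sample
    mu : step size
    Returns (updated coefficients, error for this sample).
    """
    y = sum(wi * xi for wi, xi in zip(w, x))        # N multiplications
    e = d - y                                       # error signal
    g = mu * e                                      # 1 multiplication
    w_new = [wi + g * xi for wi, xi in zip(w, x)]   # N multiplications
    return w_new, e
```

The per-sample work grows only linearly with the filter length, which is why this kind of update suits real-time use on limited hardware.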

Examples & Analogies

Imagine using a simple toolset, like a screwdriver and hammer, to fix varied things around your house. It's easy to use, well-known, and always gets the job done quickly compared to a more complicated set of tools that might work better but takes longer to figure out. The LMS algorithm is like that efficient toolset in adaptive filtering.

Key Concepts

- Convergence of LMS: Refers to how quickly the LMS algorithm stabilizes its filter coefficients to minimize error.

- Step-size Parameter (μ): A crucial factor that influences the stability and speed of convergence in the LMS algorithm.

- Mean Square Error: The average of the squared differences between predicted and actual values, crucial for assessing filter performance.

Examples & Applications

If the LMS algorithm is used in a noise cancellation system, proper convergence ensures that the system can quickly adapt to ambient noise changes.

In echo cancellation systems, the algorithm must converge rapidly to effectively remove echo from communication signals.
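The noise cancellation case can be sketched as follows. Everything specific here is an assumption made for illustration: a sine wave as the wanted signal, white Gaussian noise as the reference input, a hypothetical 2-tap path `g` by which the noise leaks into the primary input, μ = 0.01, and the function name `cancel_noise`. The LMS error signal serves as the cleaned output.

```python
import math
import random


def cancel_noise(n_samples=4000, mu=0.01, seed=1):
    """Adaptive noise cancellation with a 2-tap LMS filter.

    Returns (final coefficients, residual noise power over the last
    1000 samples), where residual noise is cleaned output minus signal.
    """
    rng = random.Random(seed)
    g = [0.8, 0.4]            # hypothetical path from noise source to primary input
    w = [0.0, 0.0]            # adaptive filter coefficients
    x_buf = [0.0, 0.0]        # reference noise samples, newest first
    residual = []
    for n in range(n_samples):
        s = math.sin(2 * math.pi * 0.01 * n)                 # wanted signal
        x_buf = [rng.gauss(0.0, 1.0)] + x_buf[:-1]           # reference noise
        d = s + sum(gi * xi for gi, xi in zip(g, x_buf))     # primary = signal + noise
        e = d - sum(wi * xi for wi, xi in zip(w, x_buf))     # cleaned output
        w = [wi + mu * e * xi for wi, xi in zip(w, x_buf)]   # LMS update
        residual.append(e - s)                               # noise left in the output
    tail = residual[-1000:]
    return w, sum(r * r for r in tail) / len(tail)
```

In this setup the noise in the primary input has power of about 0.8, so a residual power well below that after adaptation indicates that the filter has converged toward `g` and is removing most of the noise.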

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

For convergence smooth and fast, choose μ that’s just right, not too vast!

Stories

Imagine a train on tracks; if it’s too fast, it derails; if too slow, it’ll never arrive! The step-size parameter keeps the train on course.

Memory Tools

Use 'MUST' to remember: M = Minimum, U = Utility, S = Stability, T = Time; ensure the LMS is versatile and convergent.

Acronyms

Remember 'STABLE':

S = Step-size

T = Tight

A = And

B = Balanced

L = Leads

E = Efficiency.

Glossary

- LMS Algorithm

An adaptive filtering algorithm that minimizes the mean square error by iteratively adjusting filter coefficients.

- Step-size parameter (μ)

A critical parameter in the LMS algorithm that affects the speed and stability of convergence.

- Convergence

The process by which an algorithm adjusts its coefficients to stabilize and accurately represent the desired output.

- Mean Square Error (MSE)

A measure of the average of the squares of the errors, used to evaluate the performance of adaptive filters.
