LMS Algorithm: Update Rule

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Understanding the LMS Update Rule

Today, we are diving into the LMS algorithm's update rule. This is critical for how adaptive filters learn from input signals. Does anyone know how the filter coefficients are updated?

Isn't it based on the error between the desired and predicted outputs?

Exactly! We measure that error as $e[n] = d[n] - \hat{y}[n]$. When we update the weights, we adjust them based on this error. The update equation is $w[n+1] = w[n] + \mu e[n] x[n]$. Who can tell me what $\mu$ represents?

It’s the step-size parameter, right? It controls how fast the filter learns.

Correct! The step-size parameter governs the adaptation rate, and choosing it wisely is really important for the filter’s stability. If we set it too high, the weight updates overshoot and the filter can become unstable!

What happens if $\mu$ is too small?

Good question! A very small $\mu$ means slow convergence: the filter will take longer to adapt to changes. Finding a balance is key!

In brief, today's session focused on how the LMS algorithm updates the filter coefficients using the error signal and the importance of the step-size for stability. Great participation!

Applications of the LMS Algorithm

Alright, now let’s shift gears to the applications of the LMS algorithm. What do you think makes this algorithm popular?

I’ve heard it is used in noise cancellation systems?

Absolutely! Adaptive filters using the LMS algorithm are commonly used in noise cancellation applications, helping to filter out unwanted noise from signals. Can anyone suggest another application?

How about in speech recognition?

Correct! Speech processing systems use LMS to adaptively enhance the clarity of spoken commands. It’s all about making the most out of signal processing in dynamic environments. Now, what challenges might we face when implementing the LMS algorithm?

I think the choice of the step-size is a big challenge.

Exactly! It's a balancing act between stability and adaptation speed. Summarizing today, we covered the applications of the LMS algorithm, particularly in noise cancellation and speech recognition, as well as the challenges associated with its implementation.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

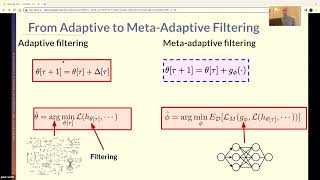

This section introduces the update rule of the LMS (Least Mean Squares) algorithm, which iteratively adjusts filter coefficients based on the error signal to enhance prediction accuracy. Key components include the step-size parameter and the stability of convergence.

Detailed Summary

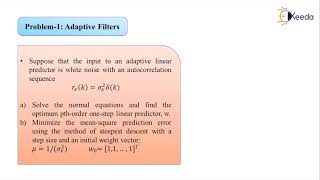

The Least Mean Squares (LMS) algorithm is an adaptive filtering technique widely used for minimizing the mean square error (MSE) between a desired output and the filter output. In this section, we specifically focus on the update rule that governs how the filter coefficients are adjusted over time to ensure accurate predictions.

Update Rule

The fundamental equation for updating the filter coefficients in the LMS algorithm is given by:

$$w[n+1] = w[n] + \mu e[n] x[n]$$

Where:

- $w[n]$ represents the vector of filter coefficients at time $n$.

- $\mu$ is the step-size parameter determining the adaptation rate.

- $e[n] = d[n] - \hat{y}[n]$ is the error signal, derived from the desired output $d[n]$ and the predicted output $\hat{y}[n]$.

- $x[n]$ is the input vector at time $n$, holding the most recent input samples (one per filter coefficient).
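To make the symbols concrete, here is a minimal Python sketch of a single update. It assumes real-valued signals, treats $x[n]$ as a list of recent input samples with one entry per coefficient, and computes the predicted output as the inner product of the weights and that list. The function name `lms_update` and the numbers are illustrative only.

```python
def lms_update(w, x, d, mu):
    """Apply one LMS update and return (new_weights, error)."""
    # Predicted output: inner product of the current weights and the input vector.
    y_hat = sum(wi * xi for wi, xi in zip(w, x))
    # Error between the desired output and the prediction.
    e = d - y_hat
    # Move each weight along its input sample, scaled by step size and error.
    w_new = [wi + mu * e * xi for wi, xi in zip(w, x)]
    return w_new, e


# Illustrative values: zero initial weights, two-sample input, desired output 1.
w, e = lms_update(w=[0.0, 0.0], x=[1.0, 2.0], d=1.0, mu=0.1)
print(w, e)
```

Starting from zero weights the prediction is 0, so the error equals the desired output (1.0), and each weight moves by $\mu \cdot e[n]$ times its own input sample, giving weights of about 0.1 and 0.2.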

The choice of the step-size parameter $\mu$ is crucial as it influences the stability and convergence rate of the LMS algorithm. A small step-size enables stable convergence, but at the cost of slower adaptation speed, while a larger step-size can lead to instability.
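As a rough guide to what "small" means here, a standard result from adaptive filter theory (supplementary to this lesson, and assuming real-valued, stationary input) is that the weights converge in the mean when

$$0 < \mu < \frac{2}{\lambda_{\max}}$$

where $\lambda_{\max}$ is the largest eigenvalue of the input autocorrelation matrix $R = E\{x[n]\,x^T[n]\}$. Since $\lambda_{\max}$ is rarely known in practice, a more conservative bound that is easier to estimate is often used for a filter with $N$ coefficients and average input power $\sigma_x^2$:

$$0 < \mu < \frac{2}{N \sigma_x^2}$$

The symbols $\lambda_{\max}$, $R$, $N$, and $\sigma_x^2$ are introduced here for illustration and do not appear elsewhere in this section.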

In essence, this section underlines the importance of the update rule in achieving effective adaptive filtering, allowing for real-time adjustment of filter parameters based on incoming signals.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

LMS Update Equation

Chapter 1 of 2

Chapter Content

The LMS algorithm updates the filter coefficients w[n] using the following equation:

w[n+1] = w[n] + μe[n]x[n]

Where:

- w[n] is the vector of filter coefficients at time n.

- μ is the step-size parameter, controlling the rate of adaptation.

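- e[n] = d[n] - ŷ[n] is the error signal, with d[n] as the desired output and ŷ[n] as the predicted output.

- x[n] is the input vector at time n, holding the most recent input samples (one per filter coefficient).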

Detailed Explanation

In the LMS algorithm, the update of the filter coefficients is crucial for adapting to changing signals. The equation w[n+1] = w[n] + μe[n]x[n] describes how each coefficient is updated. Here, w[n] represents the current state of the filter's coefficients, and e[n] is the error, which tells us how far our prediction was from the desired output d[n]. The term μ, known as the step-size parameter, determines how large a change we make to the coefficients for a given error and input signal. A well-chosen μ helps the algorithm adapt effectively without overshooting or oscillating around the solution.
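The update can be applied sample by sample over a whole signal. The sketch below is one possible way to do that, not a reference implementation: it uses LMS to identify a hypothetical three-coefficient system (`true_w`) from noise-free data. The function name `lms_identify`, the test signal, and the value of μ are all assumptions chosen for illustration.

```python
import random


def lms_identify(x, d, num_taps, mu):
    """Run LMS over a whole signal; return final weights and the error history."""
    w = [0.0] * num_taps
    errors = []
    for n in range(len(x)):
        # Input vector: x[n], x[n-1], ..., zero-padded before the signal starts.
        x_vec = [x[n - k] if n - k >= 0 else 0.0 for k in range(num_taps)]
        y_hat = sum(wi * xi for wi, xi in zip(w, x_vec))    # predicted output
        e = d[n] - y_hat                                    # error signal
        w = [wi + mu * e * xi for wi, xi in zip(w, x_vec)]  # LMS update
        errors.append(e)
    return w, errors


random.seed(0)
true_w = [0.5, -0.3, 0.2]  # hypothetical "unknown" system to be learned
x = [random.gauss(0.0, 1.0) for _ in range(2000)]
# Desired output: the input passed through the hypothetical system.
d = [
    sum(true_w[k] * (x[n - k] if n - k >= 0 else 0.0) for k in range(3))
    for n in range(len(x))
]

w, errors = lms_identify(x, d, num_taps=3, mu=0.05)
print(w)
```

Because the desired output here contains no measurement noise, the learned weights settle very close to `true_w` and the error shrinks toward zero. With noisy data the weights would instead fluctuate around the best solution.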

Examples & Analogies

Think of adjusting the volume on a radio. If you turn the volume up too quickly (like a large μ), you might overshoot the right level and make it too loud. Conversely, if you turn it up too slowly (like a small μ), it might take forever to get to a comfortable level. The LMS algorithm aims to find the right pace to adjust the volume so you can listen comfortably without feedback loops or distortions.

Significance of Step-Size Parameter

Chapter 2 of 2

Chapter Content

The parameter μ should be chosen small enough to ensure stable convergence but large enough to allow the filter to adapt quickly.

Detailed Explanation

Selecting the right value for the step-size parameter μ is critical in the LMS algorithm because it impacts how the filter learns over time. If μ is too large, the algorithm can oscillate wildly, potentially diverging instead of converging to an optimal solution. If it's too small, the adaptation process will be sluggish, leading to a long learning time. Therefore, finding a balance is essential, and researchers often test different values to find the most effective one for a given situation.
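The trade-off is easiest to see in a deliberately simple case. The sketch below assumes a one-coefficient filter and an input that alternates between +1 and -1, so the input power is exactly 1. Under those assumptions the distance between the weight and its target is multiplied by (1 - μ) at every step, which means the filter converges only when 0 < μ < 2. The function name `final_weight_error` and the three μ values are illustrative.

```python
def final_weight_error(mu, num_steps=50, true_gain=2.0):
    """Return |w - true_gain| after running a one-coefficient LMS filter."""
    w = 0.0
    for n in range(num_steps):
        x = 1.0 if n % 2 == 0 else -1.0  # unit-power input
        d = true_gain * x                # desired output
        e = d - w * x                    # error signal
        w = w + mu * e * x               # LMS update
    return abs(w - true_gain)


# Small, moderate, and too-large step sizes.
for mu in (0.01, 0.5, 2.5):
    print(mu, final_weight_error(mu))
```

With μ = 0.01 the weight is still far from its target after 50 steps, with μ = 0.5 it has essentially arrived, and with μ = 2.5 the error grows at every step and the filter diverges.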

Examples & Analogies

Imagine you're trying to walk on a tightrope. If you take big, bold steps (akin to a large μ), you might lose your balance and fall. However, if you take tiny, cautious steps (similar to a small μ), you'll move forward very slowly. The best approach is to find a pace that allows you to maintain balance while also progressing forward effectively.

Key Concepts

- LMS Algorithm: A method for adaptive filtering to minimize the mean square error.

- Filter Coefficients: Adjustable parameters that define the filter's behavior.

- Step-Size Parameter: A critical value that influences the speed and stability of adaptation.

- Error Signal: A key component in updating filter coefficients.

Examples & Applications

Using the LMS algorithm in noise cancellation applications, such as improving sound quality in headphones.

Implementing LMS in speech recognition systems to enhance clarity by learning from previous voice inputs.
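The noise cancellation case can be sketched in a few lines. This is a toy setup under stated assumptions, not a description of any real headphone design: the clean signal is a sine tone, a separate reference sensor picks up white noise, and that noise reaches the primary input through a hypothetical two-coefficient path (0.8 and -0.4). The filter learns to predict the noise component from the reference, and the error signal becomes the cleaned output.

```python
import math
import random

random.seed(1)
N = 5000
mu = 0.01
num_taps = 2

# Hypothetical signals: a clean tone and a white-noise reference.
s = [math.sin(2 * math.pi * 0.01 * n) for n in range(N)]
v = [random.gauss(0.0, 1.0) for _ in range(N)]
# Primary input: the tone plus noise that arrived through the assumed path.
d = [s[n] + 0.8 * v[n] - 0.4 * (v[n - 1] if n > 0 else 0.0) for n in range(N)]

w = [0.0] * num_taps
cleaned = []
for n in range(N):
    x_vec = [v[n - k] if n - k >= 0 else 0.0 for k in range(num_taps)]
    y_hat = sum(wi * xi for wi, xi in zip(w, x_vec))    # estimated noise in d[n]
    e = d[n] - y_hat                                    # error = cleaned output
    w = [wi + mu * e * xi for wi, xi in zip(w, x_vec)]  # LMS update
    cleaned.append(e)


def mean_square(seq):
    """Average power of a sequence."""
    return sum(a * a for a in seq) / len(seq)


# Compare the remaining noise power over the last 1000 samples.
noise_before = mean_square([d[n] - s[n] for n in range(N - 1000, N)])
noise_after = mean_square([cleaned[n] - s[n] for n in range(N - 1000, N)])
print(noise_before, noise_after)
```

Here the error signal is not driven to zero. It converges toward the clean tone, because the tone is uncorrelated with the reference noise and so cannot be predicted from it.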

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

To make the filters adapt with grace, use mean squares to find their place.

Stories

Imagine a chef adjusting a recipe based on tasting the dish. Just as the chef refines the ingredients to achieve the perfect flavor, the LMS algorithm refines its coefficients to minimize error in predictive tasks.

Memory Tools

Remember: 'Step Up' means careful choice of μ for stability in LMS.

Acronyms

LMS: Learn, Minimize, Stabilize (the focus of the algorithm).

Glossary

- LMS Algorithm

A method used in adaptive filtering to minimize the mean square error between the desired output and the filter output.

- Filter Coefficients

Parameters in an adaptive filter that are adjusted to optimize performance.

- Step-Size Parameter (μ)

A value that controls the rate at which filter coefficients are updated.

- Error Signal (e[n])

The difference between the desired output and the predicted output, used to update the filter coefficients.
