Measures of Dispersion (Optional)

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Measures of Dispersion

Today, we're diving into measures of dispersion, which help us understand how varied our data is! Can anyone tell me why knowing about dispersion is essential?

Perhaps to see how much data points differ from the average?

Exactly! When we know how spread out our data is, it gives us a clearer picture of the dataset as a whole. Let's start with the simplest measure: the range. Does anyone know how to calculate it?

I think you take the highest value and subtract the lowest value?

Right! So, if our dataset is 2, 4, 6, 8, the range would be 8 - 2 = 6. Remember the mnemonic 'RHS' for Range = Highest - Smallest.
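The rule from this exchange can be sketched in a few lines of Python. The function name `data_range` is an illustrative choice, not something the lesson defines.

```python
def data_range(values):
    """Return the range: highest value minus lowest value."""
    if not values:
        raise ValueError("the range of an empty dataset is undefined")
    return max(values) - min(values)


# Dataset from the lesson
print(data_range([2, 4, 6, 8]))  # 8 - 2 = 6
```

Only the largest and smallest values enter the calculation, so reordering or changing the middle values leaves the result unchanged.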

Understanding Standard Deviation

Now let's talk about standard deviation. Who can explain what that measures?

Isn't it about how far each number is from the mean?

Yes! More precisely, SD is the square root of the average squared distance of the data points from the mean, so you can read it as a typical distance from the mean. The formula may look a bit daunting, but it gives us a fuller picture than the range alone. To help remember it, think of 'distance from home!'

So, it’s better for datasets with lots of values?

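Correct! Because it uses every value rather than just the two extremes, it usually gives a steadier picture than the range, though extreme values still affect it. Let's summarize: range looks only at the extremes, while standard deviation considers all the data.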
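For reference, the formula mentioned in this exchange is commonly written as follows for a full population of N values x_1, ..., x_N with mean μ. The lesson does not say whether it means the population or the sample version, so the population form here is an assumption. The sample version divides by N - 1 instead of N.

```latex
\mu = \frac{1}{N}\sum_{i=1}^{N} x_i,
\qquad
\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2}
```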

Practical Application of Measures of Dispersion

Let's apply what we've learned with an example. Suppose we have test scores: 58, 79, 85, 91, and 76. Who can help me calculate the range and standard deviation?

The range is 91 - 58, which equals 33!

That's right! Now, to calculate standard deviation, we first find the mean. Anyone want to give that a shot?

The mean is (58 + 79 + 85 + 91 + 76) / 5, right? That's 389 / 5, so it's 77.8.

Perfect! Now we can use that to calculate the deviation of each score from the mean. It’s all about practice!
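The remaining steps for the test scores can be worked through in Python. This is a minimal sketch: the variable names are illustrative, and since the lesson does not say whether it wants the population or the sample standard deviation, both are shown.

```python
import statistics

scores = [58, 79, 85, 91, 76]

mean = statistics.mean(scores)             # 389 / 5 = 77.8
deviations = [x - mean for x in scores]    # signed distance of each score from the mean
squared = [d ** 2 for d in deviations]     # squaring removes the signs

population_sd = (sum(squared) / len(scores)) ** 0.5    # divide by N
sample_sd = (sum(squared) / (len(scores) - 1)) ** 0.5  # divide by N - 1

print(f"mean = {mean}")                          # mean = 77.8
print(f"population SD = {population_sd:.2f}")    # population SD = 11.16
print(f"sample SD = {sample_sd:.2f}")            # sample SD = 12.48
```

The deviations themselves sum to zero (up to floating-point rounding), which is why they are squared before averaging.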

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

Measures of dispersion describe how spread out the values in a data set are. Key measures include the range, which is the difference between the highest and lowest values, and the standard deviation, which measures the typical distance of the data points from the mean.

Detailed

Measures of Dispersion

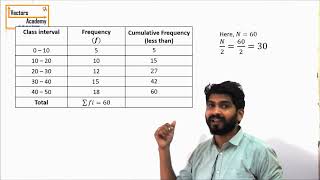

Measures of dispersion quantify the degree to which data points differ from each other. Understanding dispersion is crucial because it adds context to measures of central tendency (like mean, median, and mode) by showing how much the data varies. The two measures covered in this section are:

- Range: The simplest measure, calculated as the difference between the maximum and minimum values in the dataset. It provides a quick gauge of how wide the data is spread but can be heavily influenced by outliers.

- Standard Deviation (SD): A more sophisticated measure that calculates how spread out the numbers in a dataset are, relative to the mean. It considers all data points rather than only the extremes, making it a reliable measure of variability.
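The difference between the two measures can be illustrated with a short Python sketch. Both datasets below are hypothetical (they do not come from the lesson) and were chosen so that they share the same extremes but differ in the middle values.

```python
import statistics

# Hypothetical datasets with identical minimum and maximum
clustered = [0, 5, 5, 5, 10]    # most values sit at the mean
polarized = [0, 0, 5, 10, 10]   # most values sit at the extremes

range_clustered = max(clustered) - min(clustered)
range_polarized = max(polarized) - min(polarized)

sd_clustered = statistics.pstdev(clustered)   # population standard deviation
sd_polarized = statistics.pstdev(polarized)

print(range_clustered, range_polarized)                  # 10 10
print(round(sd_clustered, 2), round(sd_polarized, 2))    # 3.16 4.47
```

The range cannot tell these datasets apart, while the standard deviation is larger for the one whose values sit farther from the mean.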

Range

Chapter Content

The range is the difference between the highest and lowest values in a dataset.

Detailed Explanation

The range is one of the simplest measures of dispersion. To find the range, you take the highest value in your dataset and subtract the lowest value from it. This gives you a single value which represents the span of the data. A larger range indicates a wider spread of data points, while a smaller range suggests that the data points are more clustered together.

Examples & Analogies

Consider the temperatures recorded in a week: 70°F, 75°F, 72°F, 68°F, and 74°F. The highest temperature is 75°F and the lowest is 68°F. The range would be 75 - 68 = 7°F. This range tells you that over the week, the temperatures varied by 7 degrees, giving you an idea of the fluctuation in weather.

Standard Deviation

Chapter Content

The standard deviation measures the amount of variation or dispersion of a set of values.

Detailed Explanation

Standard deviation is a more advanced measure of dispersion that quantifies how much the values in a dataset typically deviate from the mean (average) of the dataset. A low standard deviation means that the data points tend to be very close to the mean, while a high standard deviation indicates that the data points are spread out over a wider range. To calculate it, you find the mean of the dataset, subtract the mean from each value, square each of those differences, and average the squared differences to get the variance. The standard deviation is the square root of that variance.
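The calculation can be sketched from scratch in Python. The function name `population_sd` is illustrative, and dividing by the number of values (the population form) is an assumption, since the lesson does not distinguish population from sample standard deviation. The data are the weekly temperatures from the range example.

```python
import math


def population_sd(values):
    """Population standard deviation, computed step by step."""
    n = len(values)
    if n == 0:
        raise ValueError("need at least one value")
    mean = sum(values) / n                             # step 1: mean
    squared_devs = [(x - mean) ** 2 for x in values]   # step 2: squared deviations from the mean
    variance = sum(squared_devs) / n                   # step 3: variance (average squared deviation)
    return math.sqrt(variance)                         # step 4: square root of the variance


temperatures = [70, 75, 72, 68, 74]  # °F, from the range example
print(round(population_sd(temperatures), 2))  # 2.56
```

Python's standard library offers the same calculation as `statistics.pstdev`, with `statistics.stdev` for the sample version.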

Examples & Analogies

Think of students’ heights in a class. If all students are around the same height, their heights will have a low standard deviation. But if in a different class, some students are very tall and others are very short, the heights would have a higher standard deviation. Hence, understanding these heights' variability can help in forming sports teams or organizing physical activities.

Key Concepts

- Range: Difference between highest and lowest data values.

- Standard Deviation: Typical distance of the data points from the mean (the square root of the average squared deviation), providing insight into variability.

Examples & Applications

In a dataset of ages: 10, 12, 14, 26, the range is 26 - 10 = 16, while the standard deviation tells us how closely the ages cluster around the mean age of 15.5.

For a set of temperatures recorded over a week: 20, 22, 24, 18, the range is 24 - 18 = 6, and the standard deviation helps in understanding if temperatures fluctuate a lot or stay relatively consistent.
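Both examples can be checked with Python's standard `statistics` module. This sketch uses the population standard deviation (`pstdev`), which is an assumption because the lesson does not specify a version; the dictionary layout is an illustrative choice.

```python
import statistics

datasets = {
    "ages": [10, 12, 14, 26],
    "temperatures": [20, 22, 24, 18],
}

summary = {}
for name, values in datasets.items():
    summary[name] = {
        "range": max(values) - min(values),
        "mean": statistics.mean(values),
        "sd": round(statistics.pstdev(values), 2),  # use statistics.stdev for the sample version
    }
    print(name, summary[name])
```

The ages come out with a range of 16 and a standard deviation of about 6.22, and the temperatures with a range of 6 and a standard deviation of about 2.24, so the temperatures are the more tightly clustered of the two datasets.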

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

To find the range, take the top and the base, subtract and you'll find the space.

Stories

Imagine a race with sprinters. The range shows the difference between the fastest and slowest, while standard deviation reveals how close the runners generally are.

Memory Tools

Use RHL: Range = Highest - Lowest, and for Standard Deviation, think SD for Spread Distance.

Acronyms

SD for Standard Deviation = Spread Deviation!

Glossary

- Range

The difference between the highest and lowest values in a dataset.

- Standard Deviation (SD)

A measure of the typical distance of data points from the mean, calculated as the square root of the variance.

- Mean

The average value of a dataset, calculated by summing all values and dividing by the count of values.
