Process Synchronization in Real-Time Systems

Interactive Audio Lesson

A student-teacher conversation explaining the topic in a relatable way.

Introduction to Process Synchronization


Teacher: Welcome everyone! Today, we're diving into process synchronization in real-time systems. Can anyone tell me why synchronization is crucial in these systems?

Student: To ensure that tasks that share resources can work together without conflicts?

Teacher: Exactly! Synchronization prevents race conditions and data inconsistency. In real-time systems, the synchronization mechanisms themselves must also be designed so that they never cause deadlocks. Let's make sure we remember that as we go on.

Student: What do you mean by race conditions?

Teacher: Great question! A race condition occurs when multiple tasks access shared data at the same time, at least one of them modifies it, and the outcome depends on the exact order in which they happen to run. The results are unpredictable, so it's crucial to ensure that only one task accesses the shared resource at a time.

Student: So, how do we manage this access?

Teacher: We use synchronization primitives, like mutexes and semaphores. Let's keep exploring this topic!

Critical Section Problem


Teacher: Now, let's talk about the critical section problem. Can anyone tell me what a critical section is?

Student: It's a portion of code where tasks access shared resources directly?

Teacher: Correct! And why is it important to control access to this section?

Student: To prevent race conditions and ensure consistency?

Teacher: Absolutely! Only one task should be inside the critical section at a time. To enforce this, we need synchronization mechanisms like mutexes. Does anyone know how a mutex works?

Student: It locks the resource for the current task until it's finished?

Teacher: Exactly right! The task that locks the mutex owns it and must unlock it when it leaves the critical section; any other task that tries to lock it waits until then. Let's keep these key points in mind as we continue.

Synchronization Primitives


Teacher: Next, let's discuss synchronization primitives in more detail. Who can name a few?

Student: I remember mutexes and semaphores. Are there others?

Teacher: Yes! Semaphores come in two kinds, binary and counting, and there are also event flags. Each has its own use cases. Can anyone think of when you'd use a counting semaphore?

Student: For managing access to a pool of resources, like threads or buffers?

Teacher: Exactly! Counting semaphores are perfect for that. Remember, a counting semaphore lets up to N tasks each hold one of N resource instances at once, whereas a mutex allows only a single holder. Let's keep this in mind!

Introduction & Overview

Quick Overview

Process synchronization in real-time systems is crucial for the coordinated execution of tasks that share resources. This section outlines synchronization mechanisms like mutexes and semaphores, addresses the critical section problem, and discusses the priority inversion issue, providing a foundation for reliable and predictable system behavior.


Detailed Summary

Process synchronization is essential in real-time systems to ensure that tasks sharing common resources execute correctly without conflicts. This section introduces the critical issues that arise from concurrent task execution, including race conditions, data inconsistency, and deadlocks, together with the concepts and synchronization primitives used to address them:

- Critical Section Problem: A critical section is a region of code where shared resources are accessed. Only one task may execute in a critical section at any time, which prevents inconsistency and makes each access to the shared data effectively atomic.

- Mutex (Mutual Exclusion): A locking mechanism that ensures only one task holds the lock at any given time, preventing concurrent access.

- Binary Semaphore: Similar to a mutex but does not track ownership.

- Counting Semaphore: Manages access to resources limited in count, like buffers.

- Event Flags: Useful for signaling between tasks.

- Message Queues: Facilitate data sharing and synchronization among tasks.

- Spinlocks: A busy-wait lock that keeps a waiting task spinning on the CPU; it is used less frequently in real-time systems because this wastes processor time.

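- Priority Inversion: A phenomenon where a low-priority task holds a resource needed by a high-priority task, delaying the latter. The delay can grow without bound if medium-priority tasks preempt the low-priority task while it holds the resource. Solutions include priority inheritance, which temporarily raises the low-priority task's priority to that of the highest-priority task waiting on the resource, so it can finish and release the resource sooner.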

- Real-Time Operating System (RTOS) Synchronization APIs: Different RTOS implementations provide their own functions for managing synchronization, for example xSemaphoreTake()/xSemaphoreGive() in FreeRTOS, k_mutex_lock()/k_sem_take() in Zephyr, and semTake()/semGive() in VxWorks.
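The priority-inheritance rule can be sketched as a toy bookkeeping model in Python. This is not an RTOS implementation: `Task`, `InheritanceMutex`, the convention that a larger number means a higher priority, and the simplification that each task holds at most one mutex are all assumptions made for illustration. The model only tracks priorities; it does not schedule real threads.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Task:
    name: str
    base_priority: int  # assumption: a larger number means a higher priority
    effective_priority: int = field(init=False)

    def __post_init__(self) -> None:
        self.effective_priority = self.base_priority


class InheritanceMutex:
    """Toy mutex that applies priority inheritance (bookkeeping only, no threads)."""

    def __init__(self) -> None:
        self.owner: Optional[Task] = None
        self.waiters: List[Task] = []

    def lock(self, task: Task) -> bool:
        """Return True if `task` now owns the mutex, False if it has to block."""
        if self.owner is None:
            self.owner = task
            return True
        self.waiters.append(task)
        # Priority inheritance: boost the owner to the blocked task's priority.
        if task.effective_priority > self.owner.effective_priority:
            self.owner.effective_priority = task.effective_priority
        return False

    def unlock(self) -> Optional[Task]:
        """Release the mutex and hand it to the highest-priority waiter, if any."""
        if self.owner is None:
            raise RuntimeError("unlock() called on an unlocked mutex")
        # Simplification: the owner holds no other mutex, so it returns to its base priority.
        self.owner.effective_priority = self.owner.base_priority
        if not self.waiters:
            self.owner = None
            return None
        next_owner = max(self.waiters, key=lambda t: t.effective_priority)
        self.waiters.remove(next_owner)
        self.owner = next_owner
        # Tasks that are still waiting keep boosting the new owner.
        for waiter in self.waiters:
            if waiter.effective_priority > next_owner.effective_priority:
                next_owner.effective_priority = waiter.effective_priority
        return next_owner


low, medium, high = Task("low", 1), Task("medium", 2), Task("high", 3)
mutex = InheritanceMutex()
mutex.lock(low)                # low-priority task takes the shared resource
mutex.lock(high)               # high-priority task blocks on it
print(low.effective_priority)  # → 3
print(mutex.unlock().name)     # → high
print(low.effective_priority)  # → 1
```

While the high-priority task is blocked, the low-priority owner runs at priority 3, so the medium-priority task (priority 2) can no longer preempt it. On unlock, the owner drops back to its base priority and the lock passes to the waiting high-priority task.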

This section emphasizes that efficient, predictable synchronization mechanisms are key to system reliability and to the development of robust real-time applications.


Audio Book


Introduction to Process Synchronization

Chapter 1 of 4


Chapter Content

Process synchronization ensures coordinated execution of tasks in real-time systems where multiple tasks share common resources.

● In a real-time environment, synchronization mechanisms must be fast, predictable, and free from deadlocks.

● Synchronization is essential to prevent race conditions and data inconsistency, and it must be designed to keep priority inversion bounded.

Detailed Explanation

This segment introduces process synchronization: the mechanisms that let multiple tasks work together correctly when they use shared resources, such as memory or hardware. In real-time environments, these mechanisms must operate quickly and predictably and must not cause deadlocks, which would halt progress. Well-designed synchronization also prevents common issues such as race conditions (where multiple tasks modify shared data at the same time and the result depends on their timing), data inconsistency (where shared data is left in a partially updated or contradictory state), and unbounded priority inversion (where a lower-priority task blocks a higher-priority one).
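One common way to keep blocking predictable is a bounded wait. RTOS blocking calls typically accept a timeout (FreeRTOS's xSemaphoreTake(), for instance, takes a maximum number of ticks to wait). A bounded wait does not remove the cause of a deadlock, but it lets a task notice the problem and recover instead of hanging forever. The sketch below shows the idea with Python's `threading.Lock.acquire(timeout=...)`. Python threads give no real-time guarantees, and `try_use_resource`, the `holder` task, and the 50 ms timeout are hypothetical names and values chosen for illustration.

```python
import threading
import time

resource_lock = threading.Lock()


def try_use_resource(timeout_s: float) -> bool:
    """Enter the critical section, waiting at most timeout_s seconds for the lock."""
    if not resource_lock.acquire(timeout=timeout_s):
        return False  # lock still busy: the caller can log an error or take a fallback path
    try:
        time.sleep(0.001)  # stand-in for work on the shared resource
        return True
    finally:
        resource_lock.release()


holder_ready = threading.Event()
let_go = threading.Event()


def holder() -> None:
    """Hypothetical task that keeps the lock until it is told to release it."""
    with resource_lock:
        holder_ready.set()
        let_go.wait()


t = threading.Thread(target=holder)
t.start()
holder_ready.wait()               # make sure the lock is held before we try
first = try_use_resource(0.05)    # gives up after 50 ms instead of blocking forever
let_go.set()
t.join()
second = try_use_resource(0.05)   # the lock is free again
print(first, second)              # → False True
```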

Examples & Analogies

Imagine a busy restaurant kitchen where several chefs are preparing different dishes but share the same stove and refrigerator. If they do not coordinate their usage of these shared resources, the chefs may clash, leading to burnt food or forgotten ingredients, similar to how processes clash without proper synchronization.

Need for Synchronization

Chapter 2 of 4


Chapter Content

Tasks in real-time systems often:

● Share I/O devices, memory buffers, or global variables

● Execute concurrently on multi-core processors

● Access critical sections of code that must not be interrupted

Without synchronization:

● Race conditions may occur

● Inconsistent states may arise

● Deadlocks or starvation may block system functions.

Detailed Explanation

This segment explains why synchronization is necessary in real-time systems. Tasks often share resources such as input/output devices, memory buffers, and global variables, and they access them in critical sections of code. When these tasks run concurrently without coordination, significant problems can follow: race conditions happen when multiple tasks change shared data at the same time without safeguards; inconsistent states arise when data is left partially or improperly modified, producing unpredictable outcomes; and deadlocks (tasks waiting on each other forever) or starvation (a task repeatedly denied a resource) can stall parts of the system, or the entire system, because tasks wait indefinitely for resources held by others.
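A race condition is easiest to see by replaying one bad interleaving step by step. The deterministic sketch below splits `counter += 1` into a read step and a write step for two hypothetical tasks, A and B, and replays a chosen schedule. The function `run_interleaved` and its schedule format are illustrative assumptions, not a real scheduler.

```python
from typing import Dict, List, Optional


def run_interleaved(schedule: List[str]) -> int:
    """Replay a fixed interleaving of two tasks that each do `counter += 1` once.

    Each increment is split into a read step and a write step, which is where an
    unprotected read-modify-write can be interrupted. `schedule` lists which task
    ("A" or "B") performs its next step.
    """
    shared = {"counter": 0}
    local: Dict[str, Optional[int]] = {"A": None, "B": None}  # each task's private copy
    step = {"A": 0, "B": 0}  # 0 = read next, 1 = write next, 2 = done
    for task in schedule:
        if step[task] == 0:
            local[task] = shared["counter"]        # read the shared value
            step[task] = 1
        elif step[task] == 1:
            shared["counter"] = local[task] + 1    # modify the copy and write it back
            step[task] = 2
    return shared["counter"]


print(run_interleaved(["A", "A", "B", "B"]))  # → 2
print(run_interleaved(["A", "B", "A", "B"]))  # → 1
```

The first schedule lets A finish before B starts, so both increments count. The second is the lost-update race: both tasks read 0 before either writes, so the final value is 1 even though two increments ran. Making the read and the write one indivisible critical section rules that interleaving out.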

Examples & Analogies

Think of a traffic intersection without signals or signs. Cars coming from different directions (tasks) might reach the intersection simultaneously and attempt to go through, leading to accidents (race conditions) or gridlock (deadlocks). A well-coordinated traffic system ensures smooth passage and reduces confusion.

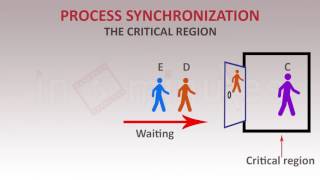

Critical Section Problem

Chapter 3 of 4


Chapter Content

A critical section is a code segment where shared resources are accessed.

To prevent problems:

● Only one task should access the critical section at a time.

● Entry and exit must be atomic and deterministic.

Detailed Explanation

Here, the critical section problem is introduced: how to control the portion of code that accesses shared resources. Only one task may be inside a critical section at any given time, so that the data it is working on cannot be changed by another task midway, which could cause errors. In addition, entering and exiting the critical section must each happen as a single, uninterruptible step (atomic), and must behave the same way with a bounded, predictable cost every time (deterministic).
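A minimal sketch of a mutex-guarded critical section, using Python's `threading.Lock` as a stand-in for an RTOS mutex (it offers no priority inheritance and no real-time timing guarantees). In FreeRTOS the entry and exit steps would be xSemaphoreTake() and xSemaphoreGive() on a mutex; here the `with` statement performs both, and the release happens even if the critical section raises an exception. The worker and iteration counts are arbitrary choices.

```python
import threading

counter = 0
counter_lock = threading.Lock()  # plays the role of the RTOS mutex


def worker(iterations: int) -> None:
    global counter
    for _ in range(iterations):
        with counter_lock:   # entry: acquire the mutex, blocking while another task holds it
            counter += 1     # critical section: read-modify-write of shared data
        # exit: leaving the with-block releases the mutex


threads = [threading.Thread(target=worker, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # → 40000
```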

Examples & Analogies

Imagine a single-user bathroom in a busy office. Only one person can use it at a time (exclusive access), and locking or unlocking the door happens in one quick, reliable motion that nobody can interrupt halfway (atomic and deterministic). If two people tried to use it at the same time, chaos would ensue!

Synchronization Primitives

Chapter 4 of 4


Chapter Content

Synchronization mechanisms and their descriptions:

● Mutex (Mutual Exclusion): Only one task can hold the lock; prevents concurrent access.

● Binary Semaphore: Similar to a mutex, but does not track ownership.

● Counting Semaphore: Allows access to a limited number of instances (e.g., buffers).

● Event Flags: Signal multiple tasks on specific conditions.

● Message Queues: Used for both data sharing and synchronization.

● Spinlocks: Busy-wait locks for SMP systems (used rarely in RTOS).

Detailed Explanation

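This segment lists the mechanisms used for synchronization, known as synchronization primitives. A mutex ensures that only one task can access a resource at a time, and the task that locks it owns it. A binary semaphore provides similar one-at-a-time control but does not record which task took it, so any task (or interrupt handler) may give it back; this makes it a natural signaling tool. Counting semaphores allow up to a specified number of tasks to use a pool of resource instances concurrently. Event flags notify one or more tasks when the conditions they are waiting for become true. Message queues pass data between tasks and synchronize them at the same time, since a receiver can block until a message arrives. Lastly, spinlocks make a waiting task repeatedly check the lock in a loop (busy-waiting); because this wastes CPU time, real-time systems use them rarely, mostly for very short critical sections on multi-core (SMP) processors.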
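Python's standard library has locks, semaphores, a single-flag `threading.Event`, and `queue.Queue` for messages, but no multi-bit event-flag group. The sketch below builds one on `threading.Condition` to show how a task can wait for any or all of several conditions. The `EventFlags` class and the `SENSOR_READY`/`BUFFER_FULL` bit names are hypothetical. RTOSes provide this natively (FreeRTOS calls them event groups, with xEventGroupSetBits() and xEventGroupWaitBits()), usually with extra options, such as clearing bits on exit, that this sketch leaves out.

```python
import threading
from typing import Optional


class EventFlags:
    """Minimal event-flag group: a bitmask that tasks can set, clear, and wait on."""

    def __init__(self) -> None:
        self._bits = 0
        self._cond = threading.Condition()

    def set(self, mask: int) -> None:
        with self._cond:
            self._bits |= mask
            self._cond.notify_all()  # wake all waiters; each re-checks its own condition

    def clear(self, mask: int) -> None:
        with self._cond:
            self._bits &= ~mask

    def wait(self, mask: int, wait_all: bool, timeout: Optional[float] = None) -> int:
        """Block until any (or all) bits in mask are set; return those bits, or 0 on timeout."""

        def condition_met() -> bool:
            hit = self._bits & mask
            return hit == mask if wait_all else hit != 0

        with self._cond:
            if not self._cond.wait_for(condition_met, timeout):
                return 0
            return self._bits & mask


SENSOR_READY = 0x1  # hypothetical flag bits
BUFFER_FULL = 0x2

flags = EventFlags()
seen = []


def waiting_task() -> None:
    # Wake only when BOTH conditions hold.
    seen.append(flags.wait(SENSOR_READY | BUFFER_FULL, wait_all=True, timeout=2.0))


t = threading.Thread(target=waiting_task)
t.start()
flags.set(SENSOR_READY)  # one bit alone does not satisfy the wait
flags.set(BUFFER_FULL)   # both bits set: the waiting task wakes
t.join()
print(seen)  # → [3]
```

Waking every waiter with `notify_all()` and letting each re-check its own mask keeps the sketch simple; it is correct because `wait_for()` re-evaluates the condition after every wake-up.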

Examples & Analogies

Think of the different locks on a cabinet where certain items are stored. A mutex is like a padlock that only one person can use at a time; a binary semaphore is similar but doesn’t care who has the key. Counting semaphores are like an occupancy limit for a room, allowing a set number of people inside. Event flags are like a signal that tells you when an elevator arrives, while message queues are like passing notes between friends in class, ensuring they receive information when it is available.

Key Concepts

- Process Synchronization: The coordination of concurrent processes to ensure safe shared resource usage.

- Critical Section: A part of code accessing shared resources, requiring controlled access.

- Mutex: A mechanism that provides mutual exclusion to prevent concurrent access.

- Binary Semaphore: A signaling tool that does not keep track of ownership.

- Counting Semaphore: Manages access for multiple tasks to a limited number of resources.

Examples & Applications

Using a mutex in FreeRTOS to protect critical sections of code, ensuring only one task can manipulate shared data.

Implementing a counting semaphore to manage a shared buffer that can only hold a fixed number of items.
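The second application, a shared buffer with a fixed capacity, is the classic bounded-buffer pattern: one counting semaphore counts free slots, another counts filled slots, and a mutex protects the buffer itself. The sketch uses Python's `threading.Semaphore` and `threading.Lock` as stand-ins for RTOS primitives; `CAPACITY`, `produce`, `consume`, and the single-producer/single-consumer setup are assumptions for illustration. In practice an RTOS message queue, or Python's `queue.Queue`, provides the same blocking behavior ready-made.

```python
import threading
from collections import deque

CAPACITY = 3  # hypothetical buffer size

buffer = deque()
free_slots = threading.Semaphore(CAPACITY)  # counting semaphore: free slots left
filled_slots = threading.Semaphore(0)       # counting semaphore: items ready to consume
buffer_lock = threading.Lock()              # mutex: protects the deque itself
peak = 0                                    # largest number of items ever buffered


def produce(item: int) -> None:
    global peak
    free_slots.acquire()        # blocks while the buffer is full
    with buffer_lock:
        buffer.append(item)
        peak = max(peak, len(buffer))
    filled_slots.release()      # one more item available


def consume() -> int:
    filled_slots.acquire()      # blocks while the buffer is empty
    with buffer_lock:
        item = buffer.popleft()
    free_slots.release()        # one more slot free
    return item


received = []


def consumer_task(n: int) -> None:
    for _ in range(n):
        received.append(consume())


c = threading.Thread(target=consumer_task, args=(10,))
c.start()
for i in range(10):
    produce(i)
c.join()
print(received)          # → [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
print(peak <= CAPACITY)  # → True
```

The two semaphores do the waiting (a full buffer blocks the producer, an empty one blocks the consumer), while the mutex is held only for the brief append or pop, which keeps the critical section short.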

Memory Aids


Rhymes

In the race to share, don’t be a fool, use a mutex to keep your data cool.

Stories

Imagine a busy office where only one person can use the printer at a time. If two people try to print together, chaos ensues! The office manager (mutex) makes sure only one person uses the printer (critical section) at any time.

Memory Tools

For remembering synchronization primitives: M-B-C-E-M stands for Mutex, Binary Semaphore, Counting Semaphore, Event Flags, Message Queues.

Acronyms

To remember the key problems: R-D-P (Race conditions, Deadlocks, Priority inversion).


Glossary

- Mutex

A mutual exclusion mechanism that allows only one task to hold a lock, preventing concurrent access to shared resources.

- Binary Semaphore

A signaling mechanism similar to a mutex that does not track ownership; it is commonly used to synchronize one task with another.

- Counting Semaphore

A semaphore that permits access to a given number of resources, allowing coordination among multiple tasks.

- Priority Inversion

A scenario in which a lower-priority task holds a resource needed by a higher-priority task, causing delays.

- Critical Section

A segment of code that accesses shared resources, requiring controlled access to avoid race conditions.
