Sensor Fusion Techniques


Introduction to Sensor Fusion


Today, let's explore sensor fusion techniques. Can anyone explain why we might need to combine data from multiple sensors in a robot?

To get more accurate information about the environment!

Exactly! By leveraging different sensors, we can enhance the reliability and accuracy of our measurements. This is particularly important in robotics where precision is key.

What types of sensor fusion are there?

Great question! We have complementary, redundant, and cooperative fusion techniques. Complementary fusion uses different sensors to cover each other's limitations, while redundant fusion increases reliability by utilizing multiple sensors of the same type.

So, what about cooperative fusion?

Cooperative fusion is where sensors work together to extract new information. It's like a team effort among sensors to get a fuller picture.

To summarize: sensor fusion helps improve data quality through various techniques. Has everyone got that?

Algorithms in Sensor Fusion


Now let's move on to the algorithms used in sensor fusion. Who can name one algorithm?

The Kalman filter!

Absolutely! The Kalman filter combines a model-based prediction with noisy measurements to produce an optimal estimate, at least when the system is linear and the noise is Gaussian. It's widely used in robotics for state estimation.

What if the system is non-linear?

Good point! In such cases, we use the Extended Kalman Filter, which adapts the Kalman filter to non-linear systems by linearizing the model around the current estimate.

I've also heard of Bayesian networks. How do they fit in?

Bayesian networks are probabilistic models that represent variables and their conditional dependencies. They help us integrate data from multiple sensors and make better decisions from the available information, which is especially useful when the environment is uncertain.

To wrap up this session: we've learned about Kalman filters, both standard and extended, and Bayesian networks. Why are these algorithms important in robotics?

Real-World Application of Sensor Fusion


Let's discuss real-world applications of sensor fusion. Who can give an example where sensor fusion might be critical?

Self-driving cars use sensor fusion!

Exactly! They integrate data from various sensors like LiDAR, cameras, and radar to navigate safely. Can anyone think of another example?

Drones! They use sensor fusion for stable flight.

Spot on! Drones combine data from GPS, IMUs, and cameras for precise navigation and control. Sensor fusion is vital in scenarios where environmental awareness is crucial.

So, without sensor fusion, robots would struggle to interpret their surroundings effectively?

That's right. Sensor fusion enhances the robot's ability to operate autonomously, making it safer and more efficient. Remember these concepts as they play a vital role in robotics.

Introduction & Overview


Standard

In robotic systems, sensor fusion is critical for improving data accuracy and reliability. Fusion methods (complementary, redundant, and cooperative), combined with algorithms such as Kalman filters and Bayesian networks, make it possible to use multiple sensor inputs effectively in dynamic environments.

Detailed

Sensor Fusion Techniques

Sensor fusion techniques are essential in robotic systems, providing enhanced accuracy and reliability by integrating data from multiple sensors. By leveraging the strengths of different sensors, robots can make better-informed decisions based on a more thorough understanding of their environment. The main types of fusion include:

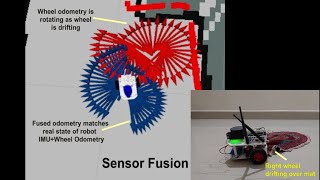

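- Complementary Fusion: This method uses different types of sensors whose strengths compensate for each other's weaknesses. For example, a gyroscope tracks fast rotations smoothly but drifts over time, while an accelerometer provides a noisy but drift-free tilt reference from gravity; combining the two yields a more reliable orientation estimate than either sensor alone.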

- Redundant Fusion: Here, multiple sensors of the same type are combined to improve reliability. If one sensor fails or provides noisy data, the others can maintain system integrity.

- Cooperative Fusion: In this approach, multiple sensors work together, sharing information to extract insights that no individual sensor could provide. For example, two cameras viewing the same scene from different positions can recover depth through stereo vision.
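The accelerometer-plus-gyroscope pairing described under complementary fusion is often implemented as a complementary filter. The Python sketch below is a minimal illustration, not the course's own method: the function names, the blending weight `alpha`, and the `atan2(ax, az)` axis convention are all assumptions. It estimates a single tilt angle by trusting the gyroscope over short time scales and the accelerometer over long ones.

```python
import math

def accel_tilt(ax: float, az: float) -> float:
    # Tilt (pitch) implied by the gravity vector; only valid while the sensor
    # is not otherwise accelerating. The axis convention here is an assumption.
    return math.atan2(ax, az)

def complementary_filter(gyro_rates, accels, dt, alpha=0.98, initial_angle=0.0):
    """Blend gyro integration (smooth but drifting) with accelerometer tilt (noisy but drift-free)."""
    angle = initial_angle
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        gyro_angle = angle + rate * dt   # short term: integrate the gyro rate
        acc_angle = accel_tilt(ax, az)   # long term: anchor to gravity
        angle = alpha * gyro_angle + (1.0 - alpha) * acc_angle
        estimates.append(angle)
    return estimates

# Stationary sensor tilted 0.1 rad, gyro bias 0.01 rad/s, sampled at 100 Hz for 10 s.
true_angle = 0.1
gyro = [0.01] * 1000
acc = [(9.81 * math.sin(true_angle), 9.81 * math.cos(true_angle))] * 1000
est = complementary_filter(gyro, acc, dt=0.01)
print(round(est[-1], 3))
```

With these numbers the estimate settles near 0.105 rad. The small offset from the true 0.1 rad comes from the gyro bias, whereas integrating the biased gyro alone would have accumulated 0.1 rad of drift over the same 10 s. Raising `alpha` smooths the output but lets more of the gyro bias through.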

The section also highlights key algorithms used in sensor fusion:

- Kalman Filter: This algorithm recursively combines predictions from a system model with noisy measurements. When the system is linear and the noise is Gaussian, it yields the optimal (minimum-variance) estimate of the system's state.

- Extended Kalman Filter (EKF): An adaptation of the Kalman filter for non-linear systems, which linearizes the system and measurement models around the current estimate at each step.

- Bayesian Networks: These probabilistic models enable the integration of data from multiple sensors to improve decision-making processes.
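To make the predict-correct structure of the Kalman filter concrete, here is a minimal one-dimensional sketch in Python. It assumes a random-walk state model (the quantity being measured is roughly constant) and Gaussian noise; the class name and parameters are illustrative, not taken from any particular library.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ScalarKalman:
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process-noise variance: how far the true value may wander per step
    r: float  # default measurement-noise variance

    def predict(self) -> None:
        # Random-walk model (assumed): the state is expected to stay put,
        # but our uncertainty about it grows by q each step.
        self.p += self.q

    def update(self, z: float, r: Optional[float] = None) -> float:
        r = self.r if r is None else r   # a specific sensor's noise can override the default
        k = self.p / (self.p + r)        # Kalman gain: how much to trust the new measurement
        self.x += k * (z - self.x)       # correct the estimate by the weighted innovation
        self.p *= 1.0 - k                # uncertainty shrinks after every update
        return self.x

kf = ScalarKalman(x=0.0, p=1.0, q=1e-5, r=0.25)
for z in [1.2, 0.9, 1.1, 0.95, 1.05]:
    kf.predict()
    kf.update(z)
print(kf.x, kf.p)
```

Calling `update` once per sensor, each with its own noise variance `r`, is one simple way to fuse several sensors that measure the same quantity: a noisier sensor (larger `r`) gets a smaller gain and therefore less influence.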

Overall, sensor fusion is a critical element in enhancing the capability of robotic systems, ensuring they can interpret environments accurately while responding effectively.


What is Sensor Fusion?


Chapter Content

Sensor fusion combines data from multiple sensors to provide more reliable and accurate information.

Detailed Explanation

Sensor fusion is the process of integrating signals from different sensors to obtain more comprehensive and accurate data about an environment or object. Instead of relying on a single sensor, sensor fusion allows a robotic system to pull together insights from various sensors, thereby increasing the overall reliability of the data being processed.
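A worked example helps show why combining sensors can increase accuracy. Suppose two independent, unbiased sensors measure the same distance with known noise variances. Weighting each reading by the inverse of its variance gives the minimum-variance combination, and the fused variance is smaller than either input variance. The Python sketch below assumes exactly those conditions; the function name, sensor names, and numbers are hypothetical.

```python
def fuse(z1: float, var1: float, z2: float, var2: float) -> tuple[float, float]:
    """Inverse-variance weighted fusion of two independent, unbiased readings."""
    w1, w2 = 1.0 / var1, 1.0 / var2   # less noisy readings get larger weights
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)       # always smaller than both var1 and var2
    return fused, fused_var

# Hypothetical readings: ultrasonic 2.10 m (variance 0.04 m^2), lidar 2.00 m (variance 0.01 m^2).
estimate, variance = fuse(2.10, 0.04, 2.00, 0.01)
print(estimate, variance)
```

Here the fused estimate is about 2.02 m with variance 0.008 m², lower than the better sensor's 0.01 m². The result leans toward the lidar because it is the less noisy sensor.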

Examples & Analogies

Think of a person trying to determine the weather. If they only look out the window, they might see that it is cloudy and assume it will rain. However, if they check a weather app and listen to the radio, they will get a more complete picture—perhaps it’s going to be cloudy but there’s no rain expected. Similarly, sensor fusion helps robots to gain a more complete understanding by combining different pieces of information.

Types of Sensor Fusion


Chapter Content

8.6.1 Types of Fusion:

• Complementary: Different sensors complement each other (e.g., accelerometer + gyroscope)

• Redundant: Multiple sensors of the same type increase reliability

• Cooperative: Sensors work in coordination to extract new information.
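Redundant fusion can be as simple as a vote among identical sensors. This Python sketch assumes three or more sensors measuring the same quantity, represents a sensor dropout as `None`, and uses a median-based consistency check with a hypothetical `max_deviation` threshold. It is one common scheme among many, not a prescribed algorithm.

```python
import statistics

def redundant_fuse(readings, max_deviation):
    """Fuse readings from identical sensors, ignoring dropouts and outliers."""
    valid = [r for r in readings if r is not None]   # None models a sensor that returned nothing
    if not valid:
        raise ValueError("no sensor readings available")
    # median_low always returns one of the readings, so at least one passes the check below.
    ref = statistics.median_low(valid)
    # Keep only readings that agree with the reference; a single faulty sensor is outvoted.
    consistent = [r for r in valid if abs(r - ref) <= max_deviation]
    return statistics.mean(consistent), len(consistent)

print(redundant_fuse([20.1, 19.9, 85.0], max_deviation=1.0))  # faulty third sensor is ignored
print(redundant_fuse([20.1, None, 20.3], max_deviation=1.0))  # one sensor dropped out
```

With three sensors, one faulty reading is outvoted by the two that agree, and a sensor that stops reporting simply drops out of the average.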

Detailed Explanation

There are three primary types of sensor fusion. 1) Complementary fusion occurs when different sensors bring distinct strengths to the table, enabling them to support each other; for example, an accelerometer and a gyroscope are often used together in devices for motion tracking. 2) Redundant fusion involves using multiple sensors of the same type, which enhances reliability as the failure of one sensor does not compromise the entire system. 3) Cooperative fusion occurs when sensors work together strategically to provide new insights or data that would not be possible for a single sensor to capture alone.
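Cooperative fusion is easiest to see with two bearing-only sensors (for example, cameras or direction-finding antennas that report only an angle to a target). Neither sensor alone can tell how far away the target is, but intersecting their two bearing lines yields its position. The 2-D geometry below is a minimal sketch under that assumption; the function name and coordinate conventions (angles in radians, measured from the x-axis) are illustrative.

```python
import math

def triangulate(p1, theta1, p2, theta2):
    """Intersect two bearing rays from known sensor positions p1 and p2."""
    (x1, y1), (x2, y2) = p1, p2
    d1 = (math.cos(theta1), math.sin(theta1))   # unit direction seen by sensor 1
    d2 = (math.cos(theta2), math.sin(theta2))   # unit direction seen by sensor 2
    # Solve p1 + t1*d1 = p2 + t2*d2 for t1 using 2-D cross products.
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(denom) < 1e-9:
        raise ValueError("bearings are parallel; the target position cannot be recovered")
    t1 = ((x2 - x1) * d2[1] - (y2 - y1) * d2[0]) / denom
    return (x1 + t1 * d1[0], y1 + t1 * d1[1])

# Sensors at (0, 0) and (4, 0) both sight a target located at (2, 2).
print(triangulate((0.0, 0.0), math.atan2(2, 2), (4.0, 0.0), math.atan2(2, -2)))
```

When the two bearings are nearly parallel the intersection becomes ill-conditioned, which is one reason to space cooperating sensors well apart.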

Examples & Analogies

Imagine working as a journalist reporting a story. To capture the full picture, you might use a combination of interviews (different 'sensors' giving you human insights), official records (another type of 'sensor' providing factual data), and social media analysis (a different approach altogether). Each method complements the others by providing unique information. In robotics, this is similar to using a combination of various sensors to create a detailed understanding of the environment.

Sensor Fusion Algorithms


Chapter Content

8.6.2 Algorithms:

• Kalman Filter: Combines noisy measurements into an optimal estimate (for linear systems with Gaussian noise)

• Extended Kalman Filter (EKF): For non-linear systems

• Bayesian Networks: Probabilistic model for multi-sensor integration.
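The EKF's key step is linearizing a non-linear model around the current estimate. The Python sketch below assumes a hypothetical setup: a robot moving along a straight line (position x) measures its straight-line range to a beacon mounted a distance d off that line, so the measurement h(x) = sqrt(x² + d²) is non-linear in x. Each update uses the derivative H = x / sqrt(x² + d²) in place of the constant measurement matrix of the linear Kalman filter. The class and parameter names are illustrative.

```python
import math

class RangeOnlyEKF:
    """Scalar EKF (hypothetical setup): estimate position x from the range to a beacon offset by d."""

    def __init__(self, x0: float, p0: float, q: float, r: float, beacon_offset: float):
        self.x, self.p = x0, p0    # state estimate and its variance
        self.q, self.r = q, r      # process and range-measurement noise variances
        self.d = beacon_offset     # perpendicular distance from the line to the beacon

    def h(self, x: float) -> float:
        # Non-linear measurement model: straight-line distance to the beacon.
        return math.hypot(x, self.d)

    def predict(self) -> None:
        # Random-walk motion model (linear), so only the variance changes.
        self.p += self.q

    def update(self, z: float) -> float:
        H = self.x / self.h(self.x)          # Jacobian dh/dx at the current estimate
        s = H * self.p * H + self.r          # innovation variance
        k = self.p * H / s                   # Kalman gain
        self.x += k * (z - self.h(self.x))   # the innovation uses the full non-linear h
        self.p *= 1.0 - k * H
        return self.x

# True position x = 3 with d = 4, so the noise-free range is 5.
ekf = RangeOnlyEKF(x0=2.0, p0=4.0, q=1e-4, r=0.01, beacon_offset=4.0)
for _ in range(50):
    ekf.predict()
    ekf.update(5.0)
print(ekf.x)
```

Because x and -x produce the same range, an initial guess on the wrong side of the beacon converges to the mirror-image position. This dependence on the linearization point is a known limitation of the EKF.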

Detailed Explanation

Sensor fusion relies heavily on algorithms to combine data effectively. The Kalman Filter is a commonly used algorithm that works in a predict-correct cycle: it predicts the next state from a model of the system, then corrects that prediction with noisy measurements, weighting each by its uncertainty. For linear systems with Gaussian noise the result is the optimal (minimum-variance) estimate, which makes it particularly useful when precise tracking is needed. The Extended Kalman Filter (EKF) applies the same idea to non-linear systems by linearizing the models around the current estimate, allowing more complex fusion tasks. Bayesian Networks take a probabilistic approach that captures the uncertainty and dependencies among different sensor inputs, enabling integrated decision-making.
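As a small illustration of Bayesian multi-sensor integration, consider a two-layer network in which a hidden variable Obstacle is the parent of each sensor's detect/no-detect output, so the sensors are conditionally independent given Obstacle. Under that assumption the posterior is proportional to the prior times each sensor's likelihood. The sensor names and probabilities below are made-up values, and this naive-Bayes structure is only the simplest kind of Bayesian network.

```python
def posterior_obstacle(prior, likelihoods, observations):
    """
    P(obstacle | sensor outputs) for a network Obstacle -> each sensor.
    likelihoods[name] = (P(detect | obstacle), P(detect | no obstacle))
    observations[name] = True if that sensor reported a detection
    """
    p_yes, p_no = prior, 1.0 - prior
    for name, detected in observations.items():
        hit_if_yes, hit_if_no = likelihoods[name]
        # Conditional independence given Obstacle lets us multiply the likelihoods.
        p_yes *= hit_if_yes if detected else 1.0 - hit_if_yes
        p_no *= hit_if_no if detected else 1.0 - hit_if_no
    return p_yes / (p_yes + p_no)   # normalize over the two hypotheses

sensors = {"camera": (0.9, 0.2), "ultrasonic": (0.8, 0.1)}   # hypothetical sensor models
print(posterior_obstacle(0.1, sensors, {"camera": True}))
print(posterior_obstacle(0.1, sensors, {"camera": True, "ultrasonic": True}))
print(posterior_obstacle(0.1, sensors, {"camera": True, "ultrasonic": False}))
```

With these numbers, a camera detection alone raises the probability of an obstacle from 0.1 to about 0.33. When the ultrasonic sensor also detects it, the posterior reaches 0.8; if the ultrasonic sensor disagrees, it falls back to 0.1.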

Examples & Analogies

Consider how you make decisions based on various bits of information. If a friend tells you there is a traffic jam ahead but another friend says it's clear, you weigh both pieces of information while knowing both might not be 100% accurate. You might choose a different route based on your combined understanding of their inputs. In robotics, algorithms like the Kalman Filter work in a similar way to integrate various sensor readings to make the best decision possible based on available data.

Key Concepts

- Sensor Fusion: The integration of data from multiple sensors to improve accuracy and reliability.

- Kalman Filter: An algorithm that combines noisy measurements for state estimation.

- Complementary Fusion: Different sensors aid one another to provide more reliable data.

- Redundant Fusion: Multiple identical sensors to ensure system reliability.

- Cooperative Fusion: Collaboration among different sensors to extract new information.

Examples & Applications

In self-driving cars, sensor fusion combines inputs from cameras, LiDAR, and radar to navigate safely.

Drones use sensor fusion to stabilize flight, integrating data from IMUs, GPS, and cameras.
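The drone example can be sketched as a two-state Kalman filter along a single axis: IMU acceleration drives the prediction of position and velocity at a high rate, and occasional GPS position fixes correct the accumulated drift. This is a minimal sketch under assumed conditions (one axis, known noise variances, hand-written 2×2 matrix algebra, illustrative initial uncertainty); the class and method names are hypothetical, and it is not a production flight estimator.

```python
class GpsImuKalman:
    """One-axis position/velocity filter (hypothetical): IMU acceleration predicts, GPS position corrects."""

    def __init__(self, accel_var: float, gps_var: float):
        self.x = [0.0, 0.0]                    # state: [position (m), velocity (m/s)]
        self.P = [[100.0, 0.0], [0.0, 10.0]]   # assumed large initial uncertainty
        self.accel_var = accel_var             # IMU acceleration noise variance
        self.gps_var = gps_var                 # GPS position noise variance

    def predict(self, accel: float, dt: float) -> None:
        p, v = self.x
        # Constant-acceleration kinematics over one IMU sample.
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        (p00, p01), (p10, p11) = self.P
        # P <- F P F^T + Q, with F = [[1, dt], [0, 1]] and Q = accel_var * g g^T, g = [dt^2/2, dt].
        g0, g1, q = 0.5 * dt * dt, dt, self.accel_var
        self.P = [
            [p00 + dt * (p01 + p10) + dt * dt * p11 + q * g0 * g0, p01 + dt * p11 + q * g0 * g1],
            [p10 + dt * p11 + q * g1 * g0, p11 + q * g1 * g1],
        ]

    def update_gps(self, z: float) -> None:
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.gps_var                 # innovation variance (GPS observes position only)
        k0, k1 = p00 / s, p10 / s              # Kalman gain for position and velocity
        innovation = z - self.x[0]
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P with H = [1, 0]
        self.P = [
            [(1 - k0) * p00, (1 - k0) * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]

# Simulated drone flying at a constant 2 m/s: IMU at 100 Hz, GPS once per second.
f = GpsImuKalman(accel_var=0.01, gps_var=1.0)
for step in range(1, 1001):
    f.predict(accel=0.0, dt=0.01)
    if step % 100 == 0:
        f.update_gps(2.0 * step * 0.01)
print(f.x)
```

Although GPS never measures velocity directly in this sketch, the filter infers it from the position-velocity correlation that the prediction step builds into `P`.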

Memory Aids


Rhymes

Sensors unite day and night, to measure and combine what's right.

Stories

Two friends, a thermometer and a barometer, teamed up to create accurate weather forecasts, showing how different sensors can work collaboratively.

Memory Tools

Remember 'CRC' for the three fusion types (Complementary, Redundant, Cooperative), then add the two algorithm families: Kalman and Bayesian.

Acronyms

Use 'KEB' to remember key algorithms

Kalman

Extended Kalman

Bayesian.


Glossary

- Sensor Fusion

The process of integrating data from multiple sensors to improve overall system accuracy.

- Complementary Fusion

Method where different sensors are used to balance each other's limitations.

- Redundant Fusion

Utilizing multiple sensors of the same type to increase system reliability.

- Cooperative Fusion

A scenario where sensors collaborate, allowing for new insights and information extraction.

- Kalman Filter

An algorithm used to estimate state variables over time by combining noisy measurements.

- Bayesian Networks

Probabilistic models that represent a set of variables and their conditional dependencies, used in sensor fusion.
