Cache Access Principles

Enroll to start learning

You’ve not yet enrolled in this course. Please enroll for free to listen to audio lessons, classroom podcasts and take practice test.

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Locality of Reference

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Today, we'll start with the concept of locality of reference. Can anyone tell me what we mean by locality in computing?

Is it about how programs access memory?

Exactly, locality of reference describes how data access patterns tend to cluster. We have two types: spatial locality and temporal locality. Can someone explain spatial locality?

I think spatial locality means that if you access one memory address, nearby addresses are likely to be accessed soon.

Perfect! Caches utilize this by pre-loading nearby data. And what about temporal locality?

That’s when the same memory locations are accessed repeatedly over a short period!

That's right! Caches keep recently accessed data to exploit this locality. Remember the acronym **SLT** for Spatial and Temporal Locality.

So, SLT reminds us that both types of locality help improve cache efficiency?

Yes! Let's move on to cache mapping techniques.

Cache Mapping Techniques

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Now, regarding cache mapping techniques, we have three types: direct-mapped, associative, and set-associative. Who can summarize what a direct-mapped cache is?

In a direct-mapped cache, each memory address can only go to one specific cache line.

Correct. This makes access simple, but it can create conflicts. Can anyone think of a disadvantage of this method?

Yeah, if multiple addresses map to the same line, that can cause cache misses, right?

Exactly! Now, what about associative caches?

Associative caches allow any memory address to be put in any cache line, reducing conflicts.

Great! Can anyone summarize the set-associative approach?

Set-associative combines elements of both. It divides the cache into sets, and there are multiple lines per set. A given address can map to any line in its set.

Excellent! This balance is essential for optimizing cache performance as it minimizes the chance of conflicts. Let’s now discuss replacement policies.

Replacement Policies

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

When a cache is full, we need to decide which data to evict, and that's where replacement policies come in. Can anyone name one?

Least Recently Used (LRU)?

Correct! LRU evicts the least recently accessed item. Why is that beneficial?

Because it's likely that data we haven't used recently won't be needed soon.

Exactly! What about FIFO?

First-In, First-Out, right? It just removes the oldest item.

Good! And what's a downside of FIFO?

It doesn’t consider how recently an item was used, so sometimes we could evict something that's still useful.

Correct, and what about Random Replacement?

It's completely random, which isn't efficient but sometimes helps unpredictable access patterns.

Great discussion! Remember the mnemonic **LRU, FIFO, Random** to recall these replacement policies. Let’s summarize today’s session.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

This section explores the fundamental principles of cache access, specifically focusing on locality of reference, cache mapping techniques like direct-mapped, associative, and set-associative caches, and replacement policies that dictate which data to evict when the cache is full.

Detailed

Cache Access Principles

Cache access principles define how data is efficiently retrieved and stored in cache memory to optimize performance. Key concepts include:

Locality of Reference

Locality of reference is critical in caching, which consists of two types:

1. Spatial Locality: This principle states that data locations that are accessed are often close to each other. Caches utilize this by loading contiguous blocks of data into memory, reducing future access time.

2. Temporal Locality: This principle highlights that recently accessed data is likely to be accessed again shortly. Caches leverage this behavior by retaining recently accessed data, thus speeding up future requests.

Cache Mapping Techniques

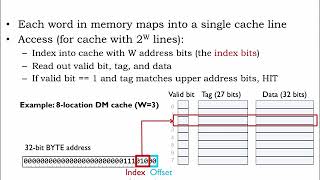

- Direct-Mapped Cache: Each memory address maps to one specific cache line, which simplifies access but can lead to cache conflicts when multiple addresses map to the same line.

- Associative Cache: Here, data can be stored in any cache line, reducing conflicts at the cost of increased complexity in management.

- Set-Associative Cache: A hybrid approach where the cache is divided into sets, and each address maps to any line within its assigned set, striking a balance between conflict reduction and management complexity.

Replacement Policies

Replacement policies determine which data to evict when new data needs to be loaded into a full cache. Common techniques include:

- Least Recently Used (LRU): Evicts the least recently accessed line, based on the assumption it will not be needed soon.

- First-In, First-Out (FIFO): Evicts the oldest line regardless of access frequency, which can lead to inefficiencies.

- Random Replacement: Randomly selects a line for eviction without considering usage patterns.

These principles and techniques are essential for optimizing cache performance and enhancing overall system efficiency.

Youtube Videos

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Locality of Reference

Chapter 1 of 3

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Cache access is governed by several principles, including locality of reference and cache mapping strategies, which determine how data is stored and retrieved.

Locality of Reference:

- Spatial Locality: Refers to accessing data locations that are close to each other. Caches exploit this by loading contiguous blocks of data.

- Temporal Locality: Refers to the tendency to access the same memory locations repeatedly in a short time. Caches take advantage of this by keeping recently accessed data in cache.

Detailed Explanation

Locality of reference is a fundamental concept in cache design that helps improve the efficiency of memory accesses.

- Spatial Locality: This principle states that when a particular memory location is accessed, it is likely that nearby memory locations will be accessed soon after. Caches utilize this by fetching not just the requested data but also the adjacent data, storing them together. For example, when a program accesses an array, if it accesses the first element, it will likely access the second element soon after.

- Temporal Locality: This principle indicates that if a memory location is accessed, it is probable that the same location will be accessed again in the near future. Caches keep recently used data easily accessible to speed up this process. For instance, if a function frequently retrieves the same variable, the cache retains this variable for quicker access.

Examples & Analogies

Imagine you are studying in a library. If you frequently read books that are next to each other on the shelf (spatial locality), it makes sense for you to keep those books on your desk to quickly refer to them again (temporal locality) rather than returning them to the shelf each time.

Cache Mapping Techniques

Chapter 2 of 3

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Cache Mapping Techniques:

- Direct-Mapped Cache: Each memory address maps to exactly one cache line. This is the simplest cache mapping technique but can lead to cache conflicts.

- Associative Cache: A cache line can store data from any address. This reduces cache conflicts but is more complex.

- Set-Associative Cache: A compromise between direct-mapped and fully associative caches. The cache is divided into sets, and each memory address can map to any cache line within a set.

Detailed Explanation

Cache mapping techniques are methods used to determine how data is stored in the cache from the main memory.

- Direct-Mapped Cache: This is the simplest form of caching where a specific memory address can be mapped to only one particular cache line. Though straightforward, this technique can experience 'cache conflicts' if multiple memory addresses try to map to the same cache slot, leading to inefficient cache usage.

- Associative Cache: This technique allows any memory address to be stored in any cache line, enhancing flexibility and reducing the chances of cache conflicts. However, it introduces complexity in determining where to store and manage the data.

- Set-Associative Cache: This method takes a middle ground between the first two. It divides the cache into sets, and a memory address can map into any line within a designated set. This balances flexibility and simplicity, optimizing cache performance while avoiding conflicts.

Examples & Analogies

Think of a library where direct-mapped is like having a shelf designated for specific genres, causing overcrowding if too many books of the same genre are donated. Associative is akin to mixing all genres on one big shelf, while set-associative is like having several smaller genre shelves but allowing books to shift among them based on their popularity.

Replacement Policies

Chapter 3 of 3

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Replacement Policies:

- Least Recently Used (LRU): Evicts the cache line that has been accessed the least recently.

- First-In, First-Out (FIFO): Evicts the oldest cache line, regardless of usage.

- Random Replacement: Randomly selects a cache line to evict.

Detailed Explanation

Replacement policies are rules that determine which data to remove from the cache when there is no space to store new data.

- Least Recently Used (LRU): This policy keeps track of how often data is accessed and evicts the cache line that hasn't been accessed for the longest time, assuming it is less likely to be needed soon.

- First-In, First-Out (FIFO): This method simply removes the oldest data that entered the cache first, without regard to how often or recently it has been accessed.

- Random Replacement: As the name suggests, this policy randomly selects a cache line to evict, which can be a simple but less effective approach since it doesn’t consider usage patterns.

Examples & Analogies

Consider a group of people at a food buffet. LRU would mean that the last person to take their food (or the food they took) will be the one to leave when someone new arrives. FIFO means whoever arrived first exits the line to make room for someone else, regardless of how hungry they still are. Random replacement is akin to a random person being asked to leave without any reasoning, which could be chaotic!

Key Concepts

-

Locality of Reference: The principle that memory accesses tend to cluster both spatially and temporally, enhancing cache design.

-

Spatial Locality: The concept that accessing one memory address likely indicates access to nearby addresses.

-

Temporal Locality: The tendency for recently accessed data to be accessed again shortly.

-

Direct-Mapped Cache: A cache where one address maps to a unique cache line, leading to potential conflicts.

-

Associative Cache: Allows any line to be filled by any address, reducing resistance to conflicts.

-

Set-Associative Cache: Combines concepts from direct-mapped and associative caches to optimize performance by organizing into sets.

-

Replacement Policy: A rule governing which cache line to evict, essential for maintaining cache efficiency.

Examples & Applications

In a direct-mapped cache, if memory address 5 maps to cache line 2, any subsequent access that maps to line 2 could result in a conflict if it's also accessing address 8.

Spatial locality can be observed when loading data arrays in sequential order, as accessing the first element often leads to accessing the adjacent elements.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

When memory tasks abound, nearby data can be found; for every time you reload, the closest neighbors assist the code.

Stories

Imagine a librarian who knows that books on similar topics are often borrowed together, so she keeps them on the same shelf. This is like spatial locality in a cache.

Memory Tools

Remember SLT for Spatial and Temporal Locality, focusing on proximity and recent reuse of data.

Acronyms

Use **DAS** to remember Direct-Mapped, Associative, Set-Associative caches.

Flash Cards

Glossary

- Locality of Reference

The principle that data access patterns exhibit spatial and temporal proximity, influencing cache design and effectiveness.

- Spatial Locality

The tendency to access nearby memory locations, leading caches to load blocks of contiguous data.

- Temporal Locality

The tendency to access the same memory locations repeatedly within a short period.

- DirectMapped Cache

Cache configuration where each memory address maps to a single unique cache line.

- Associative Cache

Cache configuration where any memory address can be stored in any cache line, reducing conflicts.

- SetAssociative Cache

Cache structure that divides lines into sets, allowing multiple lines per set, thus balancing access and management.

- Replacement Policy

Strategy for determining which cache lines to evict when new data needs to be loaded into the cache.

- Least Recently Used (LRU)

Replacement policy that evicts the least recently accessed data, under the assumption it is less likely to be needed.

- FirstIn, FirstOut (FIFO)

Replacement strategy that evicts the oldest item in the cache, regardless of recent access patterns.

- Random Replacement

Eviction method that randomly selects a cache line to remove regardless of usage.

Reference links

Supplementary resources to enhance your learning experience.