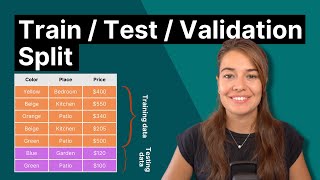

Data Splitting Techniques

Enroll to start learning

You’ve not yet enrolled in this course. Please enroll for free to listen to audio lessons, classroom podcasts and take practice test.

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Data Splitting Techniques

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Today, we'll explore various data splitting techniques. Why do you think it's important to split our data?

To test how well our model performs on new data?

Exactly! Splitting data helps us evaluate the model's generalization capabilities. Let's start with the simplest method called Hold-Out Validation.

How do we decide the ratio for splitting?

Common ratios are 70:30 or 80:20, depending on the dataset size. Remember, though, it can lead to high variance due to differing splits. We need to be cautious about that!

K-Fold Cross-Validation

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Now, let's discuss K-Fold Cross-Validation. Who can tell me what this method involves?

It splits the data into K parts and trains on K-1, testing on the remaining fold?

Great! And why do we average the scores across all folds?

To get a more reliable estimate of how the model will perform in general?

Correct! It stabilizes our performance measurements. Typically, K is set to 5 or 10.

Stratified K-Fold Cross-Validation

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Next, let's talk about Stratified K-Fold. How does it differ from standard K-Fold?

It keeps the proportions of classes the same in each fold?

Exactly! This is crucial when dealing with imbalanced datasets. Can someone think of a scenario where this would be essential?

In medical research, if one disease is rarer than another?

Exactly! Ensuring that each fold reflects the true class distributions helps in maintaining model integrity.

Leave-One-Out Cross-Validation and Nested Cross-Validation

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Now, let's go into Leave-One-Out Cross-Validation or LOOCV. Can anyone tell me the benefit and drawback?

It has very low bias but is computationally expensive?

Correct! It's ideal when you have limited data but requires significantly more resources. Now, what about Nested Cross-Validation?

It evaluates models and tunes hyperparameters?

Exactly! This method helps prevent data leakage, which is something we must always watch out for.

Conclusion and Summary

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

So, in summary, what are the key data splitting techniques we've discussed today?

Hold-Out Validation, K-Fold, Stratified K-Fold, LOOCV, and Nested Cross-Validation!

Excellent! Remember the pros and cons of each and the contexts where they're best used. This knowledge is crucial for model performance evaluation.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

This section discusses various data splitting techniques, including Hold-Out Validation, K-Fold Cross-Validation, Stratified K-Fold, Leave-One-Out Cross-Validation (LOOCV), and Nested Cross-Validation. Each method is evaluated for its advantages and disadvantages concerning bias and computational cost.

Detailed

Data Splitting Techniques

In machine learning, data splitting techniques are crucial for assessing how well a model can generalize to unseen data. These strategies help avoid pitfalls like overfitting and underfitting during model evaluation. The following are the main types of data splitting techniques:

- Hold-Out Validation: This is the simplest method, where the dataset is split into a training set and a test set. Common ratios for this split are 70:30 or 80:20. While this technique is fast and straightforward, it has high variance because the performance can change dramatically depending on the specific split used.

- K-Fold Cross-Validation: This method involves dividing the data into 'k' different parts (folds). The model is trained on k-1 folds and tested on the remaining fold. This process is repeated for each fold, and the average score is then computed, providing a more reliable estimate of model performance. Typical values for k are 5 or 10.

- Stratified K-Fold Cross-Validation: Similar to K-Fold, this technique ensures that each fold retains the same proportion of classes as the original dataset, which is particularly important for imbalanced classification.

- Leave-One-Out Cross-Validation (LOOCV): This is an extreme case of K-Fold where the number of folds equals the number of data points. While it offers very low bias, the computational cost is very high because it trains a model for each data point in the dataset.

- Nested Cross-Validation: This approach utilizes two loops: an outer loop for model evaluation and an inner loop for hyperparameter tuning. It helps prevent data leakage during model selection and is beneficial for creating robust models.

Each of these techniques has its own pros and cons, and selecting the right one depends on the dataset size and problem context.

Youtube Videos

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Hold-Out Validation

Chapter 1 of 5

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Hold-Out Validation

- Train-Test Split: Common ratio: 70:30 or 80:20

- Pros: Simple, fast

- Cons: High variance depending on the split

Detailed Explanation

Hold-Out Validation involves dividing your dataset into two parts: the training set and the test set. Common ratios for this split are 70% of the data for training and 30% for testing, or 80% for training and 20% for testing. The main advantages of this method are its simplicity and speed; it’s quick to implement. However, one of its major downsides is that the model’s performance can vary significantly depending on how the data was split, which means single tests might not give a reliable performance estimate.

Examples & Analogies

Think of Hold-Out Validation like trying out a new recipe by cooking just one portion to see if it tastes good. The first time you try it, the results could vary based on how you prepared it and the ingredients you used. If the dish turns out great, it doesn’t guarantee it’ll taste amazing every time, just like a model’s performance can differ based on how data is split.

K-Fold Cross-Validation

Chapter 2 of 5

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

K-Fold Cross-Validation

- Split data into k parts (folds)

- Each fold used once as test; remaining as train

- Average score across folds gives robust estimate

- Typical values: k = 5 or 10

Detailed Explanation

K-Fold Cross-Validation enhances the evaluation process by dividing the dataset into 'k' equally sized portions or folds. Here’s how it works: for each iteration, one fold is used as the test set while the remaining 'k-1' folds are used for training. This process is repeated 'k' times, with each fold serving as the test set exactly once. The results (performance scores) are then averaged to provide a more reliable estimation of the model’s performance. Commonly, 'k' is set to 5 or 10.

Examples & Analogies

Imagine you’re studying for a big exam and you take your ten chapters of notes and work through one chapter at a time, testing yourself on it after studying while reviewing the rest of the material beforehand. This method allows you to gauge your understanding from multiple perspectives, getting a more well-rounded view of your knowledge, much like how K-Fold gives a comprehensive performance measure.

Stratified K-Fold Cross-Validation

Chapter 3 of 5

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Stratified K-Fold Cross-Validation

- Ensures each fold has the same proportion of classes as the original dataset

- Important for imbalanced classification

Detailed Explanation

Stratified K-Fold Cross-Validation is a variation of K-Fold Cross-Validation that maintains the same proportion of different classes within each fold. This technique is particularly important when dealing with imbalanced datasets, where some classes have significantly more instances than others. By ensuring that each fold represents the overall distribution of classes in the dataset, stratification helps to prevent bias that can distort model evaluation.

Examples & Analogies

Consider a bag of mixed candies where red candies are fewer than blue ones. If you randomly take out samples to taste (test your candy), you might end up with mostly blue ones, missing out on the taste of reds. Conversely, if you take equal samples of both colors every time you taste, you get a better understanding of the overall candy mix, akin to how stratification maintains class distribution in each fold.

Leave-One-Out Cross-Validation (LOOCV)

Chapter 4 of 5

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Leave-One-Out Cross-Validation (LOOCV)

- n folds where n = number of data points

- Pros: Very low bias

- Cons: Very high computational cost

Detailed Explanation

Leave-One-Out Cross-Validation (LOOCV) is the most intensive form of cross-validation. In LOOCV, the dataset has 'n' folds where 'n' equals the total number of data points. This means that each instance (data point) in the dataset will be tested while using all the other data points for training. The primary benefit of LOOCV is that it reduces bias significantly, yielding a very accurate estimate of model generalization. However, because it requires training the model 'n' times, it can be computationally expensive and time-consuming, especially with large datasets.

Examples & Analogies

Think of LOOCV as a teacher grading student presentations. Instead of giving each student feedback based on a few classmates, the teacher listens to each student separately, while keeping notes on all others for their feedback. This way, every student gets a fair assessment, ensuring that evaluation is comprehensive, but it takes much longer than just a few presentations.

Nested Cross-Validation

Chapter 5 of 5

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Nested Cross-Validation

- Outer loop for model evaluation

- Inner loop for hyperparameter tuning

- Prevents data leakage during model selection

Detailed Explanation

Nested Cross-Validation is an advanced technique that involves two levels of cross-validation: an outer loop for evaluating model performance and an inner loop for tuning hyperparameters. This method provides a robust framework for model selection and hyperparameter optimization while preventing data leakage. In this approach, during each iteration of the outer loop, the folds are separated for testing, while in the inner loop, hyperparameters are tuned solely based on the training set from the outer loop, ensuring that test data is never used inappropriately for training.

Examples & Analogies

Imagine preparing your dishes in a cooking competition where you have a practice round (inner loop) to perfect a recipe before presenting it to the judges (outer loop). Each time you cook, you refine your recipe based solely on the practice round outcomes. This way, you can ensure the judges only taste the best version of your dish, replicating how nested cross-validation keeps the training and testing datasets separate to prevent any unfair advantages.

Key Concepts

-

Hold-Out Validation: A simple data splitting method with high variance.

-

K-Fold Cross-Validation: A robust model evaluation technique that averages performance across multiple train-test splits.

-

Stratified K-Fold: Maintains the proportion of classes in each fold to prevent bias.

-

Leave-One-Out Cross-Validation (LOOCV): Minimizes bias with high computational costs, training on nearly all data points.

-

Nested Cross-Validation: Uses separate loops for model validation and parameter tuning to avoid data leakage.

Examples & Applications

Hold-Out Validation is beneficial for rapidly evaluating model performance but may lead to inaccurate assessments due to its dependence on the particular data split.

In an imbalanced dataset, Stratified K-Fold ensures that all classes are appropriately represented in each fold during cross-validation.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

Cross-validate with K, let errors fade away! Each fold will hold the game, keeping bias to the same.

Stories

Once in a data kingdom, the wise ML wizard decided to split his data into folds. Some folds became knights testing each other, ensuring fairness and balance in their quests to conquer unseen data.

Memory Tools

Remember the acronym HKN for Hold-Out, K-Fold, and Nested Cross-Validation!

Acronyms

FOLD - Fitting Overlooked Learning Dilemmas paths helpful for every student.

Flash Cards

Glossary

- HoldOut Validation

A technique that splits data into training and testing sets, commonly with a ratio of 70:30 or 80:20.

- KFold CrossValidation

A method that divides the dataset into k parts, using each part once as a test set while training on the remaining parts.

- Stratified KFold

A variation of K-Fold Cross-Validation that maintains the same class distribution in each fold as in the full dataset.

- LeaveOneOut CrossValidation (LOOCV)

A special case of K-Fold where n equals the number of observations, training on all but one instance each time.

- Nested CrossValidation

A method that uses two cross-validation loops: one for model evaluation and the other for hyperparameter tuning.

Reference links

Supplementary resources to enhance your learning experience.