Regression Metrics

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Mean Squared Error (MSE)

Today, we're focusing on one of the key regression metrics: Mean Squared Error, or MSE. Can anyone define what it is?

Isn't it the average of the squared differences between the predicted values and the actual values?

Exactly! The formula for MSE is MSE = Σ(y - ŷ)² / n. Because each error is squared before averaging, larger errors are weighted more heavily. Why do you think this might be important?

Well, if larger errors are more critical, we’d want to catch those in our evaluation.

Right! As a memory aid, think of MSE as a **M**easurement of **S**quared **E**rrors (the M formally stands for Mean). The squaring is what makes it sensitive to large errors and outliers.

What are some limitations of using MSE?

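Great question! Because the errors are squared, a few outliers can dominate MSE, so it may not reflect typical performance on noisy data. It is also expressed in squared units of the target, which makes it harder to interpret directly.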

When should we use MSE over other metrics?

Use MSE when larger errors matter more, such as in financial forecasting. Let’s summarize: MSE gives more weight to larger discrepancies between predicted and actual values.

Root Mean Squared Error (RMSE)

Now let's discuss RMSE. Can anyone tell me how it relates to MSE?

I think it’s the square root of MSE?

That's correct! RMSE = √MSE. One primary advantage of RMSE is that it's in the same units as the target variable, which can be very helpful for interpretation. Why do you think this is useful?

It makes it easier to understand how far off our predictions are in real terms.

Exactly! We can think of RMSE as the typical size of the error around our predictions, measured in the target's own units. A memory aid: the **R**oot undoes the **S**quare, which puts the error back in context.

Are there scenarios where RMSE is better than MSE?

Definitely! RMSE and MSE always rank models the same way, so prefer RMSE when you need to report the error magnitude in the target's units while still penalizing large errors heavily.

So, RMSE is like a 'real-world' understanding of errors?

Exactly! It translates the abstract concept of errors into practical terms. To summarize, RMSE is crucial for evaluating how close predictions are in measurable terms.

Mean Absolute Error (MAE)

Next, let’s discuss the Mean Absolute Error or MAE. Who can define it?

MAE is the average of the absolute differences between predicted and actual values, right?

Correct! The formula is MAE = Σ|y - ŷ| / n. One key advantage of MAE is that it is more robust to outliers than MSE. Why is that beneficial?

Because it gives a clearer picture of the prediction errors without being skewed by extreme values?

Exactly! For MAE, think of **M**ean **A**bsolute **E**rror: it captures the magnitude of the errors with far less sensitivity to outliers than MSE. Can anyone think of situations where MAE would be preferable?

Maybe in cases with heavy noise or when we'd like to keep things simple?

Right! MAE is easier to derive meaning from, especially in everyday terms. To recap, MAE provides a less distorted sense of error, making it ideal for straightforward use cases.
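To make the outlier discussion concrete, here is a minimal Python sketch. The data values and the helper names `mse` and `mae` are made up for illustration and are not part of the lesson; the same predictions are scored against a clean set of actual values and against a set containing one outlier.

```python
def mse(actual, predicted):
    """Mean Squared Error: average of the squared differences."""
    return sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted)) / len(actual)


def mae(actual, predicted):
    """Mean Absolute Error: average of the absolute differences."""
    return sum(abs(y - y_hat) for y, y_hat in zip(actual, predicted)) / len(actual)


# Hypothetical data: identical predictions, two sets of actual values.
predicted = [9, 13, 12, 18]
actual_clean = [10, 12, 14, 16]    # errors: 1, -1, 2, -2
actual_outlier = [10, 12, 14, 36]  # the last error becomes 18

# How much each metric grows once the outlier is present.
mae_growth = mae(actual_outlier, predicted) / mae(actual_clean, predicted)
mse_growth = mse(actual_outlier, predicted) / mse(actual_clean, predicted)

print(f"MAE: {mae(actual_clean, predicted)} -> {mae(actual_outlier, predicted)}")
print(f"MSE: {mse(actual_clean, predicted)} -> {mse(actual_outlier, predicted)}")
print(f"MAE grew {mae_growth:.1f}x, MSE grew {mse_growth:.1f}x")
```

With these numbers a single outlier raises MAE from 1.5 to 5.5 (under 4 times) but raises MSE from 2.5 to 82.5 (33 times), which is the sense in which MAE is the more robust of the two.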

R² Score

Finally, let’s look at the R² Score. What does it represent?

It gives the proportion of variance explained by the model, right?

Correct! The formula is R² = 1 - [Σ(y - ŷ)² / Σ(y - ȳ)²]. A higher R² indicates a better fit. What are the implications of a low R² score?

It might suggest that the model isn't capturing the underlying trends well.

Exactly! It may indicate the need for more features, or for reconsidering the modeling approach. As a memory aid, think of R² as how much of the variance the model captures: it's all about the fit! What’s one critical caveat when using R²?

Sometimes it can be misleading if the model is overly complex?

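Spot on! It can give a false sense of accuracy if the model is simply memorizing the data instead of learning it. R² measured on the training data never goes down when you add more features to a least-squares linear regression, so check it on held-out data as well. To summarize, R² is a powerful metric but requires careful interpretation.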
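The memorization caveat can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the data, the `r2_score` helper, and the deliberately naive `memorizer` model, which is a lookup table of the training pairs that falls back to the training mean for inputs it has not seen.

```python
def r2_score(actual, predicted):
    """R² = 1 - (residual sum of squares / total sum of squares)."""
    mean_y = sum(actual) / len(actual)
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in actual)
    return 1 - ss_res / ss_tot


# Hypothetical training data, roughly y = 2x with a little noise.
x_train = [1, 2, 3, 4, 5, 6]
y_train = [2.1, 3.9, 6.2, 7.8, 10.1, 11.9]

# Hypothetical held-out data with inputs the model has never seen.
x_test = [1.5, 2.5, 3.5, 4.5]
y_test = [3.0, 5.0, 7.0, 9.0]

# A "memorizing" model: it stores every training pair and, for unseen
# inputs, can only fall back to the mean of the training targets.
lookup = dict(zip(x_train, y_train))
fallback = sum(y_train) / len(y_train)


def memorizer(x):
    return lookup.get(x, fallback)


train_r2 = r2_score(y_train, [memorizer(x) for x in x_train])
test_r2 = r2_score(y_test, [memorizer(x) for x in x_test])

print(f"train R²: {train_r2:.2f}")
print(f"test R²:  {test_r2:.2f}")
```

The training R² is a perfect 1.0 while the held-out R² is below zero (about -0.2 here), so a high R² on the data a model was fitted to says little on its own about how well it generalizes.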

Introduction & Overview

Standard

This section covers four essential metrics for evaluating regression models: Mean Squared Error (MSE), Root Mean Squared Error (RMSE), Mean Absolute Error (MAE), and the R² Score. Each serves a specific purpose and helps assess how accurate and reliable a model is in a given context.

Detailed

Regression Metrics in Machine Learning

Evaluating the performance of regression models is crucial to understanding how effective and reliable they are. This section covers four principal metrics used for regression evaluation:

- Mean Squared Error (MSE): Defined as the average of the squared errors, MSE weights larger errors more heavily, which makes it sensitive to outliers. Its formula is given by:

\[ MSE = \frac{\Sigma(y - \hat{y})^2}{n} \]

This makes it suitable for situations where large errors are especially undesirable.

- Root Mean Squared Error (RMSE): This metric is simply the square root of the MSE, converting the error back into the units of the target variable, which makes interpretation easier. Its formula is:

\[ RMSE = \sqrt{MSE} \]

Being expressed in the same units as the output variable is a key advantage of RMSE.

- Mean Absolute Error (MAE): MAE assesses the average magnitude of errors in a set of predictions, without considering their direction. It is less sensitive to outliers compared to MSE and RMSE. The formula is:

\[ MAE = \frac{\Sigma |y - \hat{y}|}{n} \]

MAE provides an easily interpretable measure of predictive accuracy.

- R² Score (Coefficient of Determination): This metric indicates how well the independent variables explain the variability of the dependent variable. It is at most 1, with higher values indicating a better fit; it is 0 for a model that always predicts the mean and can be negative for a model that fits worse than that. The formula is:

\[ R^2 = 1 - \frac{\Sigma (y - \hat{y})^2}{\Sigma (y - \bar{y})^2} \]

Each of these metrics plays a complementary role in assessing model performance, enabling practitioners to choose the most appropriate one based on the specific context and needs of their data.
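The four definitions above can be sketched in plain Python as follows. This is a minimal illustration, not a reference implementation: the function name `regression_metrics` and the sample values are assumptions introduced here, and MAE is computed as an average (divided by n), matching its definition as a mean.

```python
import math


def regression_metrics(actual, predicted):
    """Return MSE, RMSE, MAE, and R² for two equal-length sequences."""
    n = len(actual)
    errors = [y - y_hat for y, y_hat in zip(actual, predicted)]

    mse = sum(e ** 2 for e in errors) / n
    rmse = math.sqrt(mse)
    mae = sum(abs(e) for e in errors) / n

    mean_y = sum(actual) / n
    ss_res = sum(e ** 2 for e in errors)             # residual sum of squares
    ss_tot = sum((y - mean_y) ** 2 for y in actual)  # total sum of squares
    r2 = 1 - ss_res / ss_tot  # undefined if all actual values are identical

    return {"mse": mse, "rmse": rmse, "mae": mae, "r2": r2}


# Made-up values, for illustration only.
actual = [3.0, -0.5, 2.0, 7.0]
predicted = [2.5, 0.0, 2.0, 8.0]

metrics = regression_metrics(actual, predicted)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In practice, a library such as scikit-learn provides tested equivalents (for example `mean_squared_error`, `mean_absolute_error`, and `r2_score` in `sklearn.metrics`); the hand-written version is only meant to show how each formula maps to code.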

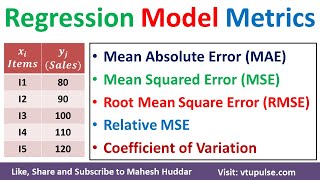

Mean Squared Error (MSE)

Chapter Content

MSE (Mean Squared Error)

Σ(y - ŷ)² / n

Penalizes larger errors more

Detailed Explanation

Mean Squared Error (MSE) measures the average of the squares of the errors, which are the differences between predicted values (ŷ) and actual values (y). It effectively emphasizes larger errors by squaring them, making it useful in situations where these larger deviations are more problematic. The formula involves summing the squared errors and dividing by the number of observations (n). This gives a single scalar value that indicates the model's error magnitude.

Examples & Analogies

Consider a teacher grading several students' tests. If a student scores 95 instead of 100, that's an error of 5 points. However, if another student scores 60 instead of 100, the error is 40 points. By squaring these errors, the teacher can see that the student with the lower score has a much larger deviation from the expected outcome, emphasizing the need to address this larger error.
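As a worked version of the grading analogy, and assuming for illustration that these two scores are the only observations, the squared errors and the resulting MSE are:

\[ (100 - 95)^2 = 25, \qquad (100 - 60)^2 = 1600, \qquad MSE = \frac{25 + 1600}{2} = 812.5 \]

The second error is 8 times larger than the first but contributes 64 times as much to the sum, which is how squaring emphasizes large deviations.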

Root Mean Squared Error (RMSE)

Chapter Content

RMSE (Root MSE)

√MSE

In same units as target

Detailed Explanation

Root Mean Squared Error (RMSE) is simply the square root of the Mean Squared Error (MSE). This conversion is beneficial because it brings the error measurement back to the same unit as the target variable, making it easier to interpret. While MSE gives us a value that emphasizes larger errors, RMSE makes that value relatable to the scale of the original data.

Examples & Analogies

Imagine you are tracking the speed of cars on a road. If your predictions have an RMSE of 5 miles per hour, they are typically off by about 5 miles per hour (RMSE weights large misses more heavily than a plain average of the errors would). When you communicate this to non-technical stakeholders, saying 'our speed prediction errors are about 5 miles per hour' is clearer than saying 'our MSE value is 25', because 25 is in squared units (miles per hour squared) and is not directly interpretable.
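A short Python sketch of the speed example follows. The speeds are hypothetical and were chosen so that the MSE comes out to exactly 25; the comments track the units of each quantity.

```python
import math

# Hypothetical speeds in miles per hour (mph), for illustration only.
actual_mph = [60, 55, 70, 65]
predicted_mph = [61, 62, 69, 58]

# Per-car errors in mph: -1, -7, 1, 7
errors = [y - y_hat for y, y_hat in zip(actual_mph, predicted_mph)]

mse = sum(e ** 2 for e in errors) / len(errors)   # units: mph squared
rmse = math.sqrt(mse)                             # units: mph
mae = sum(abs(e) for e in errors) / len(errors)   # units: mph

print(f"MSE:  {mse} mph^2")
print(f"RMSE: {rmse} mph")
print(f"MAE:  {mae} mph")
```

Here the MSE is 25 (in mph squared) and the RMSE is 5 mph, while the plain average of the absolute errors (MAE) is 4 mph. RMSE is never smaller than MAE on the same data, because the two 7 mph misses count for more once squared.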

Mean Absolute Error (MAE)

Chapter Content

MAE (Mean Absolute Error)

Σ |y - ŷ| / n

Use MAE for easily interpretable errors

Detailed Explanation

Mean Absolute Error (MAE) calculates the average of the absolute differences between predicted values (ŷ) and actual values (y). Unlike MSE, which squares errors, MAE takes only their absolute values, making it a more straightforward measurement of average error that does not emphasize larger errors disproportionately. This is especially useful when each error should count in direct proportion to its size, so that an error twice as large is simply twice as bad.

Examples & Analogies

Think of a delivery service measuring how far off their predicted delivery times are. If one delivery is 30 minutes late and another is 5 minutes late, MAE counts both deviations at face value (30 and 5 minutes, for an average of 17.5 minutes) without squaring them, which gives a clear picture of performance across all deliveries.

R² Score (Coefficient of Determination)

Chapter Content

R² Score (Coefficient of Determination)

1 - [Σ(y - ŷ)² / Σ(y - ȳ)²]

Proportion of variance explained

Detailed Explanation

The R² score, or Coefficient of Determination, quantifies how well the independent variables in a regression model explain the variability of the dependent variable. A value of 1 indicates that the model explains all of the variability, and 0 indicates that it explains none, doing no better than always predicting the mean ȳ. R² usually falls between 0 and 1, but it can be negative when the model fits worse than that baseline, for example on held-out data. The formula compares the sum of squared differences between actual and predicted values to the sum of squared differences between the actual values and their mean. A higher R² score indicates a better fit of the model.

Examples & Analogies

Imagine trying to predict a person's monthly spending based on their income and lifestyle. If your model yields an R² score of 0.9, it suggests that 90% of the variation in spending is explained by the factors in your model, which gives a high level of confidence in your predictive abilities. Conversely, an R² score of 0.2 would indicate that your model is failing to capture important factors influencing spending, thus leading to less reliable predictions.
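To see how R² behaves across good, baseline, and poor predictions, here is a minimal Python sketch. The `r2_score` helper and all values are assumptions made for illustration; the three prediction lists represent a close fit, a model that always predicts the mean, and a model that gets the trend backwards.

```python
def r2_score(actual, predicted):
    """R² = 1 - (residual sum of squares / total sum of squares)."""
    mean_y = sum(actual) / len(actual)
    ss_res = sum((y - y_hat) ** 2 for y, y_hat in zip(actual, predicted))
    ss_tot = sum((y - mean_y) ** 2 for y in actual)
    return 1 - ss_res / ss_tot


# Made-up target values, for illustration only.
actual = [1, 2, 3, 4, 5]

close_fit = [1.1, 1.9, 3.2, 3.9, 5.1]  # tracks the data closely
mean_only = [3, 3, 3, 3, 3]            # always predicts the mean of actual
reversed_trend = [5, 4, 3, 2, 1]       # gets the direction wrong

r2_close = r2_score(actual, close_fit)
r2_mean = r2_score(actual, mean_only)
r2_reversed = r2_score(actual, reversed_trend)

print(f"close fit:      {r2_close:.3f}")
print(f"mean only:      {r2_mean:.3f}")
print(f"reversed trend: {r2_reversed:.3f}")
```

The close fit scores about 0.992, the mean-only baseline scores exactly 0, and the reversed trend scores -3, showing that R² has an upper bound of 1 but no lower bound.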

Key Concepts

- Mean Squared Error (MSE): A metric that averages the squared differences between predictions and actuals, sensitive to outliers.

- Root Mean Squared Error (RMSE): Provides an interpretable scale by returning to the same unit as the target variable, useful for real-world applications.

- Mean Absolute Error (MAE): An average of absolute errors that is less affected by outliers than MSE, providing a robust measure of accuracy.

- R² Score: Indicates how well independent variables explain variance in the dependent variable. It is at most 1, and it is negative when the model fits worse than always predicting the mean.

Examples & Applications

If a regression model predicts housing prices, MSE captures how far the predictions are from actual market prices, and a few badly mispriced homes can dominate the score.

In temperature prediction, a model with a low RMSE makes predictions that are typically close to the observed temperatures, with the error expressed in degrees.

Memory Aids

Rhymes

In regression land, errors abound, MSE's squares make the big ones found.

Stories

Once upon a time in a data kingdom, the predictive models faced a test. The king, MSE, valued every large mistake, while RMSE wore shoes of clarity that fit just right!

Memory Tools

Remember MSE → Measurement of Squared Errors; MAE → Magnitude of Absolute Errors. They help track what errs.

Acronyms

For R², think 'R' for 'Rate' of explained variance and '²' for the square of understanding.

Glossary

- Mean Squared Error (MSE)

The average of the squared differences between predicted and actual values.

- Root Mean Squared Error (RMSE)

The square root of the Mean Squared Error, expressed in the same units as the target variable.

- Mean Absolute Error (MAE)

The average of the absolute differences between predictions and actual values, less sensitive to outliers.

- R² Score

A statistical measure indicating the proportion of variance explained by the independent variables in the model.
