Importance of Model Evaluation

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Estimating Generalization Performance

Today, we're going to discuss the importance of model evaluation, starting with generalization performance. Can anyone tell me why we need to evaluate how well a model performs on unseen data?

So that we know if it's actually going to work in the real world, right?

Exactly! It's vital for a model's success. When we only test on training data, we can miss problems that only show up in practical applications. That's why we always emphasize testing on new data.

What happens if a model looks good on training data but fails on new data?

Great question! That scenario is known as overfitting. The model learns the training data too well, including noise, which doesn't help it generalize. Thus, evaluation helps us avoid such pitfalls. Remember: 'Generalize to Survive!'
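A minimal sketch of the gap between training and unseen-data performance, using only the Python standard library. The toy dataset, the 20% label-noise rate, and the helper names (`make_data`, `predict_1nn`, `accuracy`) are assumptions made for illustration. A 1-nearest-neighbour model is used because it memorizes its training set, noise included.

```python
import random

def make_data(n, rng, noise=0.2):
    """Toy data: label is 1 when x > 0.5, then flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < noise:
            label = 1 - label  # simulate label noise
        data.append((x, label))
    return data

def predict_1nn(train, x):
    """Return the label of the single closest training point (pure memorization)."""
    nearest = min(train, key=lambda point: abs(point[0] - x))
    return nearest[1]

def accuracy(train, data):
    """Fraction of points in `data` that the 1-NN model built on `train` labels correctly."""
    correct = sum(predict_1nn(train, x) == y for x, y in data)
    return correct / len(data)

rng = random.Random(0)
train_set = make_data(200, rng)
test_set = make_data(200, rng)

train_acc = accuracy(train_set, train_set)  # scored on data the model has already seen
test_acc = accuracy(train_set, test_set)    # scored on held-out data

print(f"train accuracy: {train_acc:.2f}")
print(f"test accuracy:  {test_acc:.2f}")
```

The training score looks perfect because every point is its own nearest neighbour. Only the held-out score says anything about how the model might behave on new data.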

Comparison of Models and Hyperparameter Tuning

Let’s move on to comparing models and tuning hyperparameters. Why do you think this is important?

Because we need the best-performing model, right?

Exactly! Through evaluation, we can determine which model performs best based on specified metrics. This ensures that we're making informed choices and can optimize model performance effectively.

How do we know what to adjust in hyperparameters?

That's where techniques like cross-validation come into play. They help us systematically test different configurations and measure their performance using the evaluation metrics. Remember: 'Model Performance First, Adjust Second!'
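One way to picture cross-validation for hyperparameter tuning is the sketch below, which scores several values of `k` for a k-nearest-neighbour classifier with 5-fold cross-validation and keeps the best. The toy data, the candidate values, and the helper names are assumptions chosen for illustration, and everything is written with the standard library rather than a specific ML framework.

```python
import random

def make_data(n, rng, noise=0.2):
    """Toy data: label is 1 when x > 0.5, then flipped with probability `noise`."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < noise:
            label = 1 - label
        data.append((x, label))
    return data

def predict_knn(train, x, k):
    """Majority vote among the k training points closest to x (k should be odd)."""
    neighbours = sorted(train, key=lambda point: abs(point[0] - x))[:k]
    votes = sum(label for _, label in neighbours)
    return int(votes * 2 > k)

def cross_val_accuracy(data, k_neighbours, n_folds=5):
    """Mean validation accuracy over n_folds train/validation splits."""
    fold_scores = []
    for fold in range(n_folds):
        # Every n_folds-th point is held out; the rest is used for training.
        val = data[fold::n_folds]
        train = [p for i, p in enumerate(data) if i % n_folds != fold]
        correct = sum(predict_knn(train, x, k_neighbours) == y for x, y in val)
        fold_scores.append(correct / len(val))
    return sum(fold_scores) / n_folds

rng = random.Random(0)
data = make_data(200, rng)

candidates = [1, 5, 15, 31]  # hypothetical hyperparameter grid
scores = {k: cross_val_accuracy(data, k) for k in candidates}
best_k = max(scores, key=scores.get)

for k, score in scores.items():
    print(f"k={k:>2}: mean CV accuracy = {score:.3f}")
print("selected k:", best_k)
```

Each candidate is judged only on folds it was not trained on, so the selected value reflects estimated performance on unseen data rather than fit to the training set.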

Avoiding Overfitting and Underfitting

Another crucial reason for model evaluation is to avoid overfitting and underfitting. Can someone explain what these terms mean?

Overfitting is when a model learns the details too well, and underfitting is when it doesn't learn enough?

Spot on! Evaluating models helps us identify and rectify these problems, leading to a well-balanced model that captures necessary patterns without being too complex.

What techniques can we use to mitigate these issues?

Good question! Regularization helps control overfitting by penalizing unnecessary complexity, and cross-validation helps detect it by scoring the model on data held out from training. Just remember: 'Balance is Key!'
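As a rough illustration of regularization, the sketch below fits a one-feature linear model `y ≈ w * x` with an L2 (ridge) penalty. The data points and the penalty values are made up, and `ridge_slope` is a hypothetical helper. The closed form comes from minimizing `sum((y - w*x)^2) + lam * w^2` with respect to `w`.

```python
def ridge_slope(xs, ys, lam):
    """Closed-form slope minimizing sum((y - w*x)**2) + lam * w**2 (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

# Made-up training data, roughly following y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

for lam in [0.0, 1.0, 10.0, 100.0]:
    print(f"lambda={lam:>5}: slope = {ridge_slope(xs, ys, lam):.3f}")
```

A larger penalty pulls the weight toward zero, which limits how closely the model can chase the training data. Too large a penalty pushes the model toward underfitting, so in practice the penalty strength is itself tuned on validation data.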

Meeting Business KPIs

Finally, let's discuss the importance of aligning model evaluations with business goals. Why does this matter?

I think it's to ensure the model is effective for the company’s needs?

Exactly! A model that performs well in technical terms but doesn't meet KPIs can still be a failure for the business. Ideally, we want models that are not only statistically sound but also bring value to the organization. Remember: 'Business Needs First, Tech Strength Second!'
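The sketch below shows how a technical metric and a business metric can disagree. The labels, the two sets of predictions, and the per-error costs (`cost_fp`, `cost_fn`) are all hypothetical. In a real project the costs would come from the business, not from the data scientist.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = positive)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, fp, fn, tn

def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    return (tp + tn) / (tp + fp + fn + tn)

def business_cost(y_true, y_pred, cost_fp=10.0, cost_fn=500.0):
    """Total cost of errors under hypothetical per-error costs."""
    _, fp, fn, _ = confusion_counts(y_true, y_pred)
    return fp * cost_fp + fn * cost_fn

y_true  = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_a = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]  # always predicts "negative"
model_b = [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]  # catches both positives, raises 3 false alarms

for name, preds in [("A", model_a), ("B", model_b)]:
    print(f"model {name}: accuracy = {accuracy(y_true, preds):.2f}, "
          f"cost = {business_cost(y_true, preds):.0f}")
```

Under these assumed costs, model A has the higher accuracy but the higher cost, because each missed positive is far more expensive than a false alarm. Which model is "better" depends on the KPI being optimized.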

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

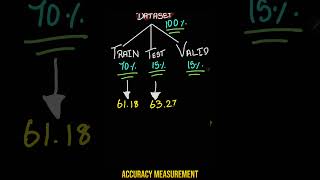

Model evaluation is a crucial step in the machine learning lifecycle, allowing data scientists to assess generalization performance, compare models, and ensure their applicability to business objectives. Proper evaluation techniques help detect and correct model issues such as overfitting and underfitting.

Detailed

Importance of Model Evaluation

Model evaluation is an integral component in the development of machine learning models. It serves multiple essential purposes:

- Estimating Generalization Performance: Evaluation helps to determine how well a model performs on unseen data, which is vital for ensuring its effectiveness in real-world scenarios.

- Comparing Models and Tuning Hyperparameters: It provides a means to compare various models and optimize their parameters, enhancing overall performance.

- Avoiding Overfitting and Underfitting: Effective evaluation identifies whether a model is too complex (overfitting) or too simplistic (underfitting), allowing for necessary adjustments.

- Meeting Business KPIs: Ensuring that a model aligns with specific business key performance indicators (KPIs) or goals is critical for its successful deployment.

In conclusion, understanding the importance of model evaluation fosters a more reliable machine learning process, ultimately leading to the development of robust and generalizable models.

Purpose of Model Evaluation

Chapter Content

- To estimate generalization performance.

- To compare models and tune hyperparameters.

- To avoid overfitting or underfitting.

- To ensure the model meets business KPIs or goals.

Detailed Explanation

Model evaluation serves several critical purposes in the machine learning process. First, it allows us to estimate how well a model will perform on unseen data, which is called generalization performance. This is important because a model that performs well on training data might not perform well in the real world.

Second, model evaluation is crucial for comparing different models or configurations. By evaluating multiple models, we can identify which model performs best and also tune hyperparameters to optimize performance further. Hyperparameters are settings that control the learning process, and their optimization can lead to better outcomes.

Next, evaluation helps us avoid common problems such as overfitting and underfitting. Overfitting occurs when a model learns too much from the training data, including noise and outliers, which hampers its ability to generalize. On the other hand, underfitting happens when a model is too simplistic to capture the data trends effectively. Both conditions can lead to poor model performance.
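A common way to spot these two problems is to compare error on the training data with error on held-out validation data. The sketch below encodes that comparison as a rule of thumb. The function name `diagnose_fit` and both thresholds are hypothetical, and sensible values depend heavily on the task.

```python
def diagnose_fit(train_error, val_error, high_error=0.20, max_gap=0.05):
    """Rough heuristic; `high_error` and `max_gap` are illustrative thresholds only."""
    if train_error > high_error:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if val_error - train_error > max_gap:
        # Training data is fit well, but performance drops on held-out data.
        return "overfitting"
    return "reasonable fit"

print(diagnose_fit(train_error=0.30, val_error=0.32))  # high error on both sets
print(diagnose_fit(train_error=0.02, val_error=0.25))  # large train/validation gap
print(diagnose_fit(train_error=0.08, val_error=0.10))  # low error, small gap
```

High error on both sets suggests the model is too simple. Low training error with a large gap to validation error suggests it has memorized noise.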

Lastly, effective model evaluation checks that the model aligns with business key performance indicators (KPIs) or goals. This alignment helps confirm that the model is not only technically sound but also adds value to the business objectives it aims to serve.

Examples & Analogies

Imagine a chef perfecting a new recipe. The chef tastes the dish first (model training) but might find it tastes different when served to guests (real-world application). Model evaluation is like asking guests for feedback – it helps the chef adjust ingredients (hyperparameters) and ensure the dish meets diner expectations (business KPIs). Just like a chef needs to balance flavors without oversalting (overfitting) or making the dish bland (underfitting), data scientists strive for that perfect model balance.

Key Concepts

- Generalization Performance: The model's effectiveness on unseen data.

- Overfitting: A scenario where the model is too complex, capturing noise.

- Underfitting: A situation where the model is too simplistic.

- KPI Alignment: Ensuring the model meets business objectives.

Examples & Applications

A medical diagnosis model that predicts accurately on its training data but fails with real patients has likely overfit.

A model predicting sales trends might underfit if it consistently misses large fluctuations in data.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

Evaluate, don’t be late, to a model’s true fate!

Stories

Imagine a chef who only practices on the same dish. He thinks he's great until judging day arrives, and he fails to impress because he has not tested his dish with different flavors. Always evaluate!

Memory Tools

Remember the four actions: Estimate generalization, Compare models, Avoid overfitting and underfitting, and Ensure KPIs are met.

Acronyms

G.O.A.L: Generalization, Overfitting, Avoid Underfitting, Link to KPIs.

Glossary

- Generalization Performance

The ability of a model to perform well on unseen data.

- Overfitting

When a model learns the training data too well, capturing noise along with patterns, leading to poor performance on new data.

- Underfitting

When a model is too simplistic to capture underlying patterns in the data, resulting in high bias.

- KPIs (Key Performance Indicators)

Metrics used to evaluate the success of a model against business goals.
