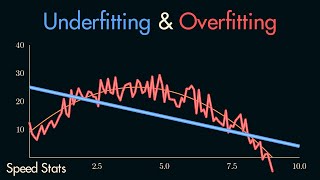

Underfitting

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Understanding Underfitting

Welcome everyone! Today, we'll be discussing underfitting. Can anyone tell me what they think underfitting means?

Is it when the model doesn't learn enough from the data?

Exactly! Underfitting occurs when a model is too simplistic to capture the underlying patterns in the data; such a model has high bias. Can you think of a scenario where this might happen?

If I use a straight line to model something that has curves, right?

Great example! A linear model trying to fit a nonlinear relationship is a classic case of underfitting.

Remember, high bias leads to underfitting. Let's summarize: an underfit model performs poorly on both the training and validation sets.

Symptoms and Causes

Now, let's talk about the symptoms of underfitting. What do you think happens to the accuracy of a model that's underfitting?

It would be low on both the training and test sets, right?

Exactly! That's a clear sign of underfitting. And what do you think might cause this?

Using a simpler model than what's needed?

Yes! Using an overly simple model is a major cause. Another is insufficient feature engineering. Let’s remember: more complex models can often capture patterns that simpler ones miss.

Solutions to Underfitting

Now that we understand what underfitting is, let's explore some solutions. What might we do to improve a model that is underfitting?

We could try a more complex model?

Correct! Another strategy could be to enhance feature engineering. What does that mean in practice?

Adding more features or using polynomial features?

Absolutely! Increasing model capacity can also mean tuning hyperparameters, for example weakening regularization. Remember, our goal is to find a balance where the model neither underfits nor overfits.

Real-World Implications

So, let’s wrap this up with the real-world implications of underfitting. Why is it crucial to address underfitting in real projects?

Because if our model fails to predict accurately, we might make bad business decisions?

Exactly! Poor performance can lead to negative outcomes in business contexts and affect trust in model predictions. Ensuring models are appropriately complex can mitigate these risks.

So, it affects everything from predictions to overall model credibility!

Well said! Always remember, understanding underfitting and addressing it is vital for model success.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

This section discusses underfitting in machine learning models, highlighting its causes, identification methods, and solutions. It explains how underfitting leads to high bias and emphasizes the importance of selecting appropriate model complexity and enhancing feature engineering.

Detailed

Underfitting in Machine Learning

Underfitting is a scenario in which a machine learning model is too simplistic to accurately represent the complexity of the underlying data. This results in a model that fails to learn from the training data, leading to poor performance on both training and unseen datasets.
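To make the "poor on both training and unseen data" symptom concrete, here is a minimal sketch using NumPy only. The data are hypothetical (a parabola plus Gaussian noise), the 150/50 hold-out split is an arbitrary choice, and the helper `mse` is defined here for illustration. A straight line is fitted to the curve, and both errors are compared with the noise variance, which is roughly the best error any model could reach on this data.

```python
import numpy as np


def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return float(np.mean((y_true - y_pred) ** 2))


# Hypothetical synthetic data: y = x^2 plus Gaussian noise (std 0.5).
rng = np.random.default_rng(0)
x = rng.uniform(-3, 3, size=200)
y = x ** 2 + rng.normal(0, 0.5, size=200)

# Simple hold-out split: first 150 points for training, last 50 for validation.
x_train, x_val = x[:150], x[150:]
y_train, y_val = y[:150], y[150:]

# A straight line (degree-1 polynomial) is too simple for a parabola.
coeffs = np.polyfit(x_train, y_train, deg=1)
train_err = mse(y_train, np.polyval(coeffs, x_train))
val_err = mse(y_val, np.polyval(coeffs, x_val))

# The noise variance is the lowest MSE any model could expect on this data.
noise_floor = 0.5 ** 2
print(f"train MSE = {train_err:.2f}, validation MSE = {val_err:.2f}, "
      f"noise floor = {noise_floor:.2f}")
```

Both errors land far above the noise floor and typically close to each other. High error on the training set itself, rather than a large train/validation gap, is what points to underfitting.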

Key Points:

- Definition: Underfitting is characterized by a model that cannot capture the underlying trends of the data, usually because it is too simple; such a model has high bias.

- Symptoms: The primary symptom of underfitting is poor performance, in terms of accuracy or any chosen metric, on both the training and validation datasets.

- Causes:
  - Choosing a model that is too simple (e.g., a linear model for nonlinear data).
  - Insufficient feature engineering or ignoring relevant features.
  - Insufficient training time (e.g., too few epochs in neural networks).

- Solutions:
  - Use a more complex model or algorithm that can represent the structure in the data.
  - Enhance feature engineering by adding polynomial or interaction terms to better capture relationships in the data.
  - Increase model capacity by tuning hyperparameters (e.g., weaker regularization, deeper trees, or more training epochs).
  - Gather more informative features. Adding more rows of the same features rarely fixes underfitting, because the model already fails to fit the data it has; more examples mainly help against overfitting.
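One of the solutions above is increasing model capacity by tuning hyperparameters. Regularization strength is a common example. The minimal sketch below assumes ridge regression, written out in closed form with NumPy rather than taken from any particular library, on hypothetical data generated from a known linear rule. A very large penalty `alpha` shrinks the coefficients toward zero and produces an underfit model; lowering it restores capacity.

```python
import numpy as np


def fit_ridge(X, y, alpha):
    """Closed-form ridge regression: w = (X^T X + alpha * I)^(-1) X^T y.

    Written out by hand for illustration; no intercept is fitted because the
    synthetic data below are generated without one."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)


def mse(y_true, y_pred):
    """Mean squared error between targets and predictions."""
    return float(np.mean((y_true - y_pred) ** 2))


# Hypothetical data: three features, a known linear rule, small noise.
rng = np.random.default_rng(1)
X = rng.normal(size=(200, 3))
true_w = np.array([2.0, -1.0, 0.5])
y = X @ true_w + rng.normal(0, 0.1, size=200)

X_train, X_val = X[:150], X[150:]
y_train, y_val = y[:150], y[150:]

# Sweep the regularization strength from very strong to very weak.
results = {}
for alpha in [1e4, 1e2, 1.0, 1e-2]:
    w = fit_ridge(X_train, y_train, alpha)
    results[alpha] = (mse(y_train, X_train @ w), mse(y_val, X_val @ w))
    print(f"alpha={alpha:g}: train MSE={results[alpha][0]:.3f}, "
          f"val MSE={results[alpha][1]:.3f}")
```

With the strongest penalty, both training and validation errors are high, which is the underfitting pattern. In practice `alpha` would be chosen on validation data, since pushing it too low can tip a model toward overfitting instead.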

Understanding and addressing underfitting is critical as it directly impacts the generalizability and effectiveness of machine learning models.

Audio Book

Definition of Underfitting

Chapter 1 of 2

Chapter Content

• Model fails to capture underlying patterns.

Detailed Explanation

Underfitting occurs when a machine learning model is too simple to learn from the training data. The model does not grasp the underlying relationships within the data, so it performs poorly on both the training and test datasets. In short, the model fails to identify the patterns and trends in the data, and its predictions are inadequate.
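Because underfitting shows up as poor performance on the training data itself, a rough rule of thumb can separate it from overfitting by comparing both errors with an acceptable error level. The sketch below is a hypothetical helper, `diagnose`, not a standard API; `target_err` is an assumed input (for example a baseline or a business requirement), and real diagnosis usually also looks at learning curves.

```python
def diagnose(train_err: float, val_err: float, target_err: float) -> str:
    """Rough rule of thumb for reading train/validation error.

    target_err is an assumed acceptable error level (e.g. a baseline or
    business requirement); it is a hypothetical input, not a standard value.
    """
    if train_err > target_err:
        # The model cannot even fit the data it was trained on: high bias.
        return "underfitting (high bias)"
    if val_err > target_err:
        # Fits the training data but fails to generalize: high variance.
        return "overfitting (high variance)"
    return "acceptable fit"


print(diagnose(train_err=0.30, val_err=0.32, target_err=0.10))  # underfitting (high bias)
print(diagnose(train_err=0.02, val_err=0.25, target_err=0.10))  # overfitting (high variance)
print(diagnose(train_err=0.05, val_err=0.08, target_err=0.10))  # acceptable fit
```

The training error is checked first on purpose: if the model cannot fit the data it has already seen, validation performance cannot be expected to be any better.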

Examples & Analogies

Imagine a student who only studies a few basic topics in math and skips understanding the foundational theories and problem-solving techniques. When faced with a complex math problem that requires applying multiple concepts, the student struggles because they haven't grasped the essential patterns and principles of mathematics.

Causes of Underfitting

Chapter 2 of 2

Chapter Content

• Overly simple models or weak features; consider more complex models or better feature engineering.

Detailed Explanation

Underfitting can often be traced back to overly simplistic models or insufficient feature engineering. A simplistic model lacks the parameters or flexibility needed to capture the intricacies of the data. Likewise, if the input features are not representative or informative enough to reveal the trend in the data, the model will likely underfit. Using a more expressive model and improving the quality of input features can therefore help address underfitting.

Examples & Analogies

Think of it like trying to use a toy hammer to build a complex piece of furniture. The toy hammer represents a basic model: you can tap the nails in, but not with the precision or strength a sturdy build needs. A proper hammer (a more complex model) along with better materials (improved features) would be necessary to create a well-constructed piece of furniture.

Key Concepts

- Underfitting: A failure to learn from the training data due to an overly simplistic model.

- High Bias: The error source behind underfitting; it indicates the model cannot capture the data's complexity.

- Feature Engineering: Creating or refining relevant features to enhance predictive power.

Examples & Applications

Using a linear regression model on the raw input alone to fit data that follow a polynomial relationship is a classic example of underfitting.
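The same least-squares fit stops underfitting once it is given polynomial features, because the model remains linear in its parameters but can now bend. The minimal NumPy sketch below assumes hypothetical data following the cubic y = x^3 - x plus noise; `poly_features` and `fit_and_score` are illustrative helpers, not library functions.

```python
import numpy as np


def poly_features(x, degree):
    """Design matrix with columns [1, x, x^2, ..., x^degree]."""
    return np.vander(x, N=degree + 1, increasing=True)


def fit_and_score(x_tr, y_tr, x_va, y_va, degree):
    """Least-squares fit on polynomial features; returns (train MSE, val MSE)."""
    A_tr = poly_features(x_tr, degree)
    w, *_ = np.linalg.lstsq(A_tr, y_tr, rcond=None)
    train_mse = float(np.mean((y_tr - A_tr @ w) ** 2))
    val_mse = float(np.mean((y_va - poly_features(x_va, degree) @ w) ** 2))
    return train_mse, val_mse


# Hypothetical data following a cubic curve y = x^3 - x plus noise.
rng = np.random.default_rng(2)
x = rng.uniform(-2, 2, size=200)
y = x ** 3 - x + rng.normal(0, 0.3, size=200)
x_tr, x_va, y_tr, y_va = x[:150], x[150:], y[:150], y[150:]

line = fit_and_score(x_tr, y_tr, x_va, y_va, degree=1)   # raw input only
cubic = fit_and_score(x_tr, y_tr, x_va, y_va, degree=3)  # adds x^2 and x^3
print(f"degree 1: train={line[0]:.3f}, val={line[1]:.3f}")
print(f"degree 3: train={cubic[0]:.3f}, val={cubic[1]:.3f}")
```

Both errors drop sharply once the squared and cubed terms are available. The degree here matches the generating curve only because the data are synthetic; on real data the degree is a hyperparameter to pick on validation error.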

An application that tries to predict housing prices with only one feature, like the size of the house, is likely to underfit because it ignores other important factors.
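The housing example can be simulated to show that a missing feature causes underfitting even when the model type is appropriate. Everything below is hypothetical: the "housing" data are synthetic, and the location score and all coefficients are invented for illustration. The same ordinary least-squares model is fitted with size alone and with size plus location.

```python
import numpy as np


def fit_ols(X, y):
    """Ordinary least squares with an intercept column prepended."""
    A = np.column_stack([np.ones(len(X)), X])
    w, *_ = np.linalg.lstsq(A, y, rcond=None)
    return w


def predict(w, X):
    """Apply OLS weights (intercept first) to a feature matrix."""
    return np.column_stack([np.ones(len(X)), X]) @ w


def holdout_mse(X, y, n_train):
    """Fit on the first n_train rows and return MSE on the remaining rows."""
    w = fit_ols(X[:n_train], y[:n_train])
    return float(np.mean((y[n_train:] - predict(w, X[n_train:])) ** 2))


# Entirely synthetic "housing" data (hypothetical units and coefficients):
# price depends on both size and a location score.
rng = np.random.default_rng(3)
n = 300
size = rng.uniform(50, 250, n)       # floor area
location = rng.uniform(0, 10, n)     # location quality score
price = 2000 * size + 40000 * location + rng.normal(0, 5000, n)

size_only = holdout_mse(size[:, None], price, n_train=200)
both = holdout_mse(np.column_stack([size, location]), price, n_train=200)
print(f"validation RMSE, size only: {size_only ** 0.5:,.0f}")
print(f"validation RMSE, size + location: {both ** 0.5:,.0f}")
```

The size-only model is not wrong in form. It simply never sees the variable that explains much of the price, so its error stays high no matter how it is trained.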

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

Underfitting's a plight, with models too light; they miss the data's true sight.

Stories

Once in a village, there was a baker whose old oven couldn't bake bread properly; his bread always came out doughy because the oven could not produce the heat the dough required. This represents underfitting: the old oven symbolizes a model too simple for the task!

Memory Tools

Remember 'Simple Models Can Fail' (SMCF) when you're thinking of underfitting.

Acronyms

Use the acronym UBI:

- U: Underfitting

- B: Bias

- I: Insufficient features to learn!

Glossary

- Underfitting

A modeling error that occurs when a model is too simple to capture the underlying patterns of the data.

- High Bias

A type of error due to overly simplistic assumptions in the learning algorithm, leading to underfitting.

- Feature Engineering

The process of using domain knowledge to select, modify, or create features to improve model performance.
