Optimization Opportunities at Different Design Levels

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Optimization at the Algorithm Level

Today we are going to explore how optimization starts at the algorithm level. Can anyone tell me why the choice of algorithm significantly impacts performance?

I think if we choose an inefficient algorithm, the hardware will take longer to compute, right?

Exactly, great point! An efficient algorithm can reduce the computational complexity. For example, using a Fast Fourier Transform instead of a direct Discrete Fourier Transform can drastically improve speed. As a memory aid, think of the acronym FCT - 'Fast Complexity Transformation'.

Can you explain how we can reduce redundant calculations?

Sure! By using common sub-expression elimination, we can avoid recalculating values that we already know. This way, we minimize unnecessary operations and memory access, which can take a lot of time.

So, if we make our algorithms efficient, we can run our hardware more effectively?

Absolutely! In summary, selecting the right algorithm not only affects performance but also impacts power consumption and size.
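
The common sub-expression elimination mentioned in the conversation can be illustrated with a minimal sketch. The function names and the arithmetic are hypothetical, chosen only to show the idea: the first version computes `a + b` twice, while the second computes it once and reuses the result. In hardware terms this would mean one adder (or one adder cycle) fewer.

```python
def without_cse(a, b, c):
    # (a + b) appears twice, so the addition is performed twice.
    x = (a + b) * c
    y = (a + b) - c
    return x, y


def with_cse(a, b, c):
    # The shared sub-expression is computed once and reused.
    t = a + b
    x = t * c
    y = t - c
    return x, y


# Both versions produce the same results; only the amount of work differs.
print(without_cse(2, 3, 4), with_cse(2, 3, 4))
```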

Optimizing the FSMD

Now let's discuss finite state machines with datapath (FSMDs) and how they act as the blueprint for our single-purpose processor (SPP). Why do you think optimizing the FSMD is crucial?

Maybe because it helps in defining control sequences and data paths more efficiently?

Correct! Optimizing the FSMD includes techniques like state merging. If we have states that perform identical operations, we can merge them, leading to fewer flip-flops and simpler control logic.

What do you mean by re-timing?

Re-timing involves moving registers across combinational logic to shorten the longest paths. This change can increase the clock frequency, allowing our processor to run faster. Remember the phrase 'Register Relay - Reduce the delay!'

That’s a neat way to remember it! So, optimizing FSMD helps improve both performance and efficiency?

Exactly! The key takeaway is that state merging shrinks the controller, while re-timing shortens the critical path, and both lead to more efficient hardware designs.

Datapath Optimization Techniques

Let’s dive into datapath optimization. Does anyone remember what resource-sharing means in this context?

Is it about using the same hardware for different operations to save space?

Yes, that's right! By using one adder for multiple calculations at different times, we can cut down on area and potentially power consumption. This is known as time-multiplexing, and you can remember it with the acronym STAMP - 'Share To Alleviate Maximum Power!'

And what about register allocation?

Great question! If we know two variables are never live at the same time, we can store both in the same physical register. This saves on resources. Always think of it as 'Allocate, Don't Duplicate!'

All these methods sound effective for reducing complexity!

Exactly! Proper management of the datapath can lead to optimizations that ultimately improve overall system efficiency.
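
The register allocation idea from the conversation can be sketched as a greedy interval assignment, in the spirit of the left-edge approach used in high-level synthesis. This is only an illustration under stated assumptions: the function name, the variable names, and the half-open lifetime convention (a value is live from cycle `start` up to, but not including, cycle `end`) are all hypothetical.

```python
def allocate_registers(lifetimes):
    """Map each variable to a register index.

    lifetimes: dict of name -> (start, end); the value is live in
    cycles start .. end-1 (assumed half-open interval).
    Variables whose lifetimes do not overlap may share a register.
    """
    free_at = []      # free_at[r] = first cycle in which register r is free
    assignment = {}
    # Process variables in order of the cycle in which they become live.
    for name, (start, end) in sorted(lifetimes.items(), key=lambda kv: kv[1][0]):
        for r, cycle in enumerate(free_at):
            if cycle <= start:            # register r is free again: reuse it
                free_at[r] = end
                assignment[name] = r
                break
        else:                             # no free register: allocate a new one
            free_at.append(end)
            assignment[name] = len(free_at) - 1
    return assignment


lifetimes = {"a": (0, 2), "b": (1, 3), "c": (2, 4), "d": (3, 5)}
print(allocate_registers(lifetimes))  # four variables fit in two registers
```

In this example `a` and `c` share one register and `b` and `d` share another, because each pair is never live in the same cycle.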

Controller Optimization Guidelines

Next, we’ll look at controller optimization. Can anyone explain why minimizing states is so beneficial?

Fewer states lead to a simplified design and less logic, right?

Definitely! This improvement reduces the complexity of next-state and output logic. Techniques like the Partitioning Algorithm help significantly in finding equivalent states. Remember the catchphrase 'Less is More – Trim States for High Score!'

What about encoding states, how does that help?

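Good question! The choice of encoding affects both the state register and the surrounding logic. One-hot encoding, for example, uses one flip-flop per state, which usually simplifies the next-state and output logic and reduces the depth of logic paths, at the cost of more flip-flops than a binary encoding. Think of it as 'Go Hot or Go Home!'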

So optimizing controllers can have a big impact on the overall efficiency?

Absolutely! Each of these strategies contributes to better system performance, and knowing them helps in designing more efficient processors.
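
To make the encoding trade-off concrete, here is a small sketch comparing the number of state flip-flops needed by a minimal binary encoding and by one-hot encoding. The helper names and the four example state names are hypothetical.

```python
def flip_flops_needed(num_states):
    # Binary encoding needs ceil(log2(num_states)) bits (at least 1);
    # one-hot encoding needs one flip-flop per state.
    binary = max(1, (num_states - 1).bit_length())
    return {"binary": binary, "one_hot": num_states}


def one_hot_codes(states):
    # Exactly one bit is set in each code, so decoding "am I in state S?"
    # is a single-bit test rather than a multi-bit comparison.
    n = len(states)
    return {s: format(1 << i, f"0{n}b") for i, s in enumerate(states)}


print(flip_flops_needed(5))
print(one_hot_codes(["IDLE", "LOAD", "COMPUTE", "DONE"]))
```

For five states the binary encoding needs 3 flip-flops and one-hot needs 5; the one-hot version spends more flip-flops in exchange for shallower decoding logic.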

Power Optimization Techniques

Finally, let's talk about power optimization techniques. Why is power reduction critical in embedded systems?

Because many embedded devices run on batteries, and saving power extends their life!

Exactly! Techniques like clock gating can substantially reduce dynamic power by switching off the clock to components that are idle. An easy way to recall it – 'Only Run When You Need to Have Fun!'

How does voltage and frequency scaling help?

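Good question! By dynamically adjusting voltage and frequency to match the workload, we can substantially lower power use whenever full performance is not needed. Because dynamic power scales roughly with the square of the supply voltage, even a modest voltage reduction pays off. This technique is called DVFS, which stands for 'Dynamic Voltage and Frequency Scaling'.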

That’s a very effective method!

Indeed! Lastly, consider integrating low-power methodologies during design for maximum impact in embedded systems!
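
The effect of these techniques can be estimated with the standard first-order model for CMOS dynamic power, P = α · C · V² · f, where α is the switching activity, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below applies that model; the numeric values are illustrative assumptions, not measurements from any real device.

```python
def dynamic_power(alpha, capacitance, voltage, frequency):
    # First-order CMOS dynamic power model: P = alpha * C * V^2 * f
    return alpha * capacitance * voltage ** 2 * frequency


# Assumed example values: activity 0.2, 1 nF switched capacitance, 1.0 V, 100 MHz.
nominal = dynamic_power(0.2, 1e-9, 1.0, 100e6)

# DVFS: scale both voltage and frequency to 80% of nominal.
scaled = dynamic_power(0.2, 1e-9, 0.8, 80e6)

# Clock gating: model idle blocks as reduced switching activity (0.2 -> 0.05).
gated = dynamic_power(0.05, 1e-9, 1.0, 100e6)

print(f"DVFS power ratio:  {scaled / nominal:.3f}")
print(f"Gated power ratio: {gated / nominal:.3f}")
```

Under this model, lowering voltage and frequency together by 20% roughly halves dynamic power, since 0.8² × 0.8 ≈ 0.51. Static (leakage) power is not covered by this formula.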

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

The section discusses how optimization can occur at multiple levels of system design, from algorithm selection to hardware implementation. By examining trade-offs among performance, power consumption, area, and cost, it sheds light on strategies to enhance the efficiency of single-purpose processors (SPPs), ensuring high performance for dedicated tasks.

Detailed

Optimization Opportunities at Different Design Levels

Overview

This section delves into the significance of optimization across various design levels in the context of embedded systems and single-purpose processors (SPPs). It highlights that optimization is not merely an afterthought but an essential part of the design process, influencing key metrics such as performance, power consumption, and area.

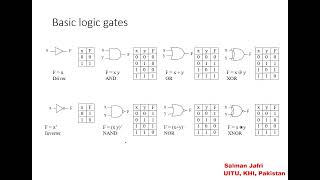

Key Optimization Levels

- Algorithm Level: Optimization starts with the choice of algorithm. An efficient algorithm can substantially improve performance and save power, and algorithmic refinement can improve efficiency further.

  - Minimizing memory accesses and redundant calculations is crucial in this context.

- Finite State Machine with Datapath (FSMD): At this architectural level, optimizations include state merging and re-timing.

  - State merging simplifies the design by combining equivalent states, which reduces the number of states and the associated logic.

  - Re-timing can raise the maximum clock frequency by repositioning registers to shorten the critical path.

- Datapath Optimization: Resource management is key here, involving techniques such as resource sharing and register allocation. Time-multiplexing can significantly lower area requirements, though it adds multiplexers and control complexity.

- Controller Optimization: Refining control logic is vital for sequencing and performance. Reducing the number of states in the FSM, using efficient state encoding methods, and minimizing logic depth all contribute to enhanced controller performance.

- Power Optimization: Given the critical need for energy efficiency in embedded systems, techniques like clock gating and voltage scaling are essential for reducing power consumption. Because dynamic power grows with switching activity, reducing unnecessary signal toggling also has a major impact.

Conclusion

The prioritization of optimization at various design levels maximizes the overall effectiveness of embedded systems, ensuring they meet performance targets while being power-efficient and cost-effective.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

High-Level Impact of Optimizing the Original Program/Algorithm

Chapter 1 of 3

Chapter Content

2.4.2.1 Optimizing the Original Program/Algorithm: High-Level Impact

Principle: The most significant gains in performance, power, and area often come from selecting or developing a fundamentally more efficient algorithm. For large enough inputs, a clever algorithm typically outperforms a brute-force one even when the brute-force version runs on faster hardware.

Techniques:

- Algorithmic Refinement/Selection: Research and choose algorithms with lower computational complexity (e.g., O(N log N) instead of O(N^2)). For example, use the Fast Fourier Transform (FFT) instead of a direct Discrete Fourier Transform (DFT) computation for signal processing.

- Reducing Redundant Computations: Identify and eliminate calculations whose results are already known or can be reused. Use common subexpression elimination.

- Minimizing Memory Accesses: Memory access is slow and power-hungry. Design algorithms that minimize reads and writes to memory, emphasizing data locality.

- Data Type Optimization: Use the smallest possible bit-widths for variables that still maintain the required precision. This directly reduces the size of registers, adders, multipliers, and interconnects in the datapath. For example, if an 8-bit value is sufficient, don't use a 32-bit register.

- Parallelism Exposure: Structure the algorithm to expose maximum inherent parallelism. This is critical for mapping to parallel hardware architectures in SPPs.

- Example: For our GCD, Euclid's algorithm is efficient. An inefficient GCD algorithm (e.g., testing every candidate divisor from min(A,B) down to 1) would need far more iterations and would therefore lead to much slower, and likely larger, hardware.
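
The GCD example above can be made concrete by counting iterations. The sketch below uses a modulo-based form of Euclid's algorithm; this is an assumption for illustration, and the GCD datapath used elsewhere in the chapter may instead use repeated subtraction. Function names are hypothetical, and both functions assume positive integer inputs.

```python
def gcd_euclid(a, b):
    # Euclid's algorithm: replace (a, b) with (b, a mod b) until b is 0.
    steps = 0
    while b != 0:
        a, b = b, a % b
        steps += 1
    return a, steps


def gcd_brute_force(a, b):
    # Try every candidate divisor from min(a, b) down to 1.
    steps = 0
    for d in range(min(a, b), 0, -1):
        steps += 1
        if a % d == 0 and b % d == 0:
            return d, steps
    return max(a, b), steps  # only reached if one input is 0


print(gcd_euclid(1071, 462))       # (21, 3)
print(gcd_brute_force(1071, 462))  # (21, 442)
```

Both functions return 21, but the brute-force version needs 442 iterations where Euclid's needs 3. In a single-purpose processor each iteration corresponds to clock cycles, so the gap translates directly into execution time and energy.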

Detailed Explanation

In this chunk, we focus on optimizing the original program or algorithm to achieve better performance in embedded systems. The principle is straightforward: choosing or developing a more efficient algorithm can lead to significant improvements in power consumption, performance, and area. For instance, instead of a straightforward method that takes longer or requires more power, one can use the Fast Fourier Transform, which is faster because it reduces the number of calculations. We can also refine algorithms to remove unnecessary computations so that they run faster and use less memory. A real-life analogy is choosing the fastest route for a trip instead of driving without a clear plan: it saves time and fuel, just as an optimized algorithm saves processing time and power.
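
As a rough illustration of the FFT point, the sketch below computes the same transform two ways: a direct DFT, which performs on the order of N² complex multiplications, and a recursive radix-2 FFT, which performs on the order of N log N. The function names are hypothetical, and the FFT shown assumes the input length is a power of two.

```python
import cmath


def dft_direct(x):
    # Direct evaluation of the DFT definition: N multiplications per output, N outputs.
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def fft_radix2(x):
    # Recursive radix-2 decimation-in-time FFT (length must be a power of two).
    n = len(x)
    if n == 1:
        return list(x)
    even = fft_radix2(x[0::2])
    odd = fft_radix2(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


signal = [1, 2, 3, 4, 0, 0, 0, 0]
direct = dft_direct(signal)
fast = fft_radix2(signal)
print(max(abs(a - b) for a, b in zip(direct, fast)))  # tiny rounding difference
```

For N = 1024 the direct form needs about 1,048,576 complex multiplications, while a radix-2 FFT needs about 5,120 (N/2 · log2 N), which is why the choice matters so much for dedicated hardware.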

Examples & Analogies

Imagine trying to organize a crowded event. If you call each person one by one to notify them of a change, it will take forever. This is akin to a brute-force algorithm. If you instead send everyone a single group message, you are using a more efficient approach. Efficient algorithms are like that group message: they cut time and effort dramatically compared with calling each person individually.

Architectural Refinements of the FSMD

Chapter 2 of 3

Chapter Content

2.4.2.2 Optimizing the FSMD: Architectural Refinements

State Merging/Reduction:

- Concept: If two or more states in your FSMD perform identical sets of operations on the datapath and have identical transitions for all possible input conditions, they are considered equivalent.

- Benefit: Merging equivalent states reduces the total number of states in the FSM, which means fewer flip-flops for the state register and simpler next-state and output logic, leading to smaller area and potentially faster operation.

- Methods: Formal state minimization algorithms (e.g., implication table method, partitioning algorithm) can systematically identify equivalent states.
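
The partitioning approach named above can be sketched as iterative partition refinement: start by grouping states that perform the same datapath operations, then keep splitting groups whose members transition into different groups. This is a minimal sketch for a Moore-style machine, not a full treatment; the function name, the state names, and the example machine are hypothetical.

```python
def find_equivalent_states(outputs, transitions, inputs):
    """Return a dict state -> block id; states with the same id are equivalent.

    outputs:     state -> operations performed in that state
    transitions: (state, input) -> next state
    inputs:      list of all input conditions
    """
    # Initial partition: states are grouped by what they do.
    block_of = dict(outputs)
    while True:
        # A state's signature is its own block plus the blocks of its successors.
        signature = {
            s: (block_of[s], tuple(block_of[transitions[(s, i)]] for i in inputs))
            for s in outputs
        }
        ids = {}
        refined = {s: ids.setdefault(signature[s], len(ids)) for s in outputs}
        # Refinement only ever splits blocks, so an unchanged count means stable.
        if len(set(refined.values())) == len(set(block_of.values())):
            return refined
        block_of = refined


outputs = {"A": "load", "B": "add", "C": "add", "D": "done"}
transitions = {
    ("A", 0): "B", ("A", 1): "C",
    ("B", 0): "D", ("B", 1): "A",
    ("C", 0): "D", ("C", 1): "A",
    ("D", 0): "D", ("D", 1): "D",
}
print(find_equivalent_states(outputs, transitions, [0, 1]))
```

In this example `B` and `C` end up in the same block, so they can be merged, reducing the machine from four states to three.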

Re-timing (or Register Balancing):

- Concept: This technique involves moving registers across combinational logic blocks to reduce the critical path delay, thereby allowing for a higher clock frequency.

- Benefit: Improves maximum clock frequency (performance).
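
A simplified model shows why moving a register helps. Assume a chain of combinational blocks with known delays and a single intermediate register; the clock period must cover the longest register-to-register segment. The function name and the delay values are illustrative assumptions, and real re-timing must also preserve the circuit's behavior on every path, which this chain model ignores.

```python
def min_clock_period(block_delays, register_after):
    """Longest register-to-register delay in a chain of combinational blocks.

    block_delays:   delay (ns) of each block, in order
    register_after: set of block indices that are followed by a register
                    (the last block is always followed by one)
    """
    longest = 0.0
    current = 0.0
    for i, delay in enumerate(block_delays):
        current += delay
        if i in register_after or i == len(block_delays) - 1:
            longest = max(longest, current)
            current = 0.0
    return longest


delays = [2.0, 3.0, 4.0, 1.0]             # assumed block delays in ns
before = min_clock_period(delays, {0})    # segments: 2 | 3+4+1
after = min_clock_period(delays, {1})     # segments: 2+3 | 4+1
print(before, after)                      # 8.0 5.0
```

Moving the register one block later balances the two segments, cutting the minimum clock period from 8 ns to 5 ns (125 MHz to 200 MHz) without adding any hardware.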

Detailed Explanation

This chunk deals with optimizing the Finite State Machine with Datapath (FSMD). Important strategies include state merging and re-timing. State merging involves identifying states within the FSMD that perform the same operations and have the same transitions, and reducing them to a single state. This decreases the total number of states in the FSM, which makes the design simpler and potentially faster. Re-timing moves registers through the design so that the longest delay path a signal must traverse within one clock cycle becomes shorter. In essence, it is like spacing the rest stops evenly along a long road so that no single stretch is too long to cover in one go. By applying these refinements, designers can create more efficient systems that operate within tighter performance constraints.

Examples & Analogies

Consider an airplane's flight path management. If two planes are taking very similar routes and can be coordinated as one at certain points of their journey, that would save time, fuel, and air traffic complexities—this is like merging states. Similarly, think about traffic signals that monitor the flow of cars on busy streets. If they are spaced too far apart and cars have to stop frequently, it takes longer for everyone to get through. By adjusting the signals or re-timing them so that cars can pass through more continuously, the overall traffic flows better in a similar way to re-timing register paths in an FSMD.

Resource Management in Datapaths

Chapter 3 of 3

Chapter Content

2.4.2.3 Optimizing the Datapath: Resource Management

Resource Sharing (Time-Multiplexing):

- Concept: If an algorithm performs the same operation (e.g., addition) in different states or at different times, instead of dedicating a separate adder for each instance, a single adder can be shared among them.

- Benefit: Reduces area (fewer functional units) and potentially power (only one unit is active at a time).
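
The trade-off behind resource sharing can be sketched by summing four values in two ways. The function names and the unit and cycle counts are modeling assumptions: the dedicated version is assumed to chain three adders within one clock cycle, while the shared version reuses one adder across three states with a multiplexer selecting the operand.

```python
def sum_with_dedicated_adders(a, b, c, d):
    # Three separate adders, assumed to complete within a single cycle.
    result = (a + b) + (c + d)
    return result, {"adders": 3, "cycles": 1}


def sum_with_shared_adder(a, b, c, d):
    # One adder reused in three consecutive states (time-multiplexing).
    acc = a
    cycles = 0
    for operand in (b, c, d):   # a multiplexer selects the operand for each state
        acc = acc + operand     # the same adder is used every time
        cycles += 1
    return acc, {"adders": 1, "cycles": cycles}


print(sum_with_dedicated_adders(1, 2, 3, 4))
print(sum_with_shared_adder(1, 2, 3, 4))
```

Both versions compute the same sum. Sharing saves two adders but takes more cycles and requires an input multiplexer plus control logic, so it pays off mainly when the shared unit is large relative to the multiplexers.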

Pipelining:

- Concept: Dividing a long combinational operation into smaller stages, with registers placed between each stage.

- Benefit: Dramatically increases throughput, allowing higher data rates, at the cost of extra pipeline registers and a few cycles of added latency.
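
A back-of-the-envelope model illustrates the throughput gain. It assumes the combinational logic splits into perfectly balanced stages, each stage adds a fixed register overhead, and items enter the pipeline every cycle without stalls. The function name and the numbers are illustrative assumptions.

```python
def total_time_ns(num_items, logic_delay_ns, num_stages, register_overhead_ns):
    # Clock period: one stage of logic plus register overhead.
    period = logic_delay_ns / num_stages + register_overhead_ns
    # The first item needs num_stages cycles; each later item finishes one cycle after.
    cycles = num_stages + num_items - 1
    return cycles * period


unpipelined = total_time_ns(100, 20.0, 1, 1.0)   # 100 cycles of 21 ns
pipelined = total_time_ns(100, 20.0, 4, 1.0)     # 103 cycles of 6 ns
print(unpipelined, pipelined)                    # 2100.0 618.0
```

With four stages, 100 items finish in 618 ns instead of 2100 ns, a speedup of about 3.4 rather than 4, because of the register overhead and the cycles needed to fill the pipeline.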

Detailed Explanation

This chunk focuses on optimization techniques specific to datapath design in embedded systems. Resource sharing or time-multiplexing enables the use of a single functional unit (like an adder) for multiple operations during different cycles, which conserves both area and power. Pipeline design further enhances efficiency by segmenting tasks into smaller, manageable stages, which allows for multiple operations to process simultaneously. Think of this as an assembly line in a factory; instead of one worker assembling the entire product from start to finish, the product moves through different stations where each worker handles just part of the task, speeding up the overall manufacturing process.

Examples & Analogies

Imagine you have a group of friends working on a project. If every friend tries to do every part of the project from start to finish without dividing tasks, they will take a long time to finish. However, if one friend handles the planning, another does the research, and another focuses on presentation, the project completes much faster because each is focusing on a specific segment. This division of labor is akin to pipelining in a datapath—the more efficiently the work is divided, the quicker the overall project is done.

Key Concepts

- Optimization at Algorithm Level: The choice of an efficient algorithm greatly influences performance.

- Finite State Machine with Datapath (FSMD): Critical for establishing control flow and optimizing resource usage.

- Datapath Optimization: Techniques for resource management can enhance performance and reduce size.

- Controller Optimization: Efficient control logic significantly impacts the overall design effectiveness.

- Power Optimization: Essential in embedded systems for reducing energy consumption.

Examples & Applications

Using the Fast Fourier Transform instead of a direct Discrete Fourier Transform computation to improve computational speed.

Sharing an adder in the datapath for various operations to save area and power.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

For algorithms that run great, choose wisely and don’t hesitate, efficiency will elevate, making speed and power resonate.

Stories

Once upon a time, an embedded system struggled under the weight of a slow algorithm. When it found a better way, it transformed into a powerful device that won competitions and delighted users everywhere!

Memory Tools

Remember the acronym FCT for Fast Complexity Transformation in algorithm selection!

Acronyms

STAMP - Share To Alleviate Maximum Power, a reminder for resource sharing in datapath optimization.

Glossary

- Finite State Machine (FSM)

A computational model consisting of a finite number of states, transitions between those states, and actions associated with the states.

- Algorithm Complexity

A measure of how the time or space an algorithm requires grows as a function of the input size.

- Resource Sharing

The practice of utilizing the same hardware resources for different tasks to minimize area and costs.

- State Encoding

The method of representing states in a finite state machine using binary codes.

- Clock Gating

A power-saving technique that disables clock signals to inactive components.
