Design Considerations for Achieving Parallelism in AI Applications

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Hardware Selection for Parallel Processing

Today, we are going to talk about hardware selection in parallel processing systems for AI applications. Why do you think selecting the right hardware is important?

I think it affects the performance, right? Different types of tasks need different hardware.

Exactly! For example, GPUs are great for tasks that involve matrix calculations. Can anyone tell me what a TPU specializes in?

TPUs are designed specifically for deep learning tasks!

Correct! And FPGAs allow for custom logic, which is useful in edge computing. Just remember: GPUs (originally built for graphics) for matrix math, TPUs for training deep learning models, FPGAs for flexibility. Let’s remember this with the acronym 'GTF' - Graphics, Training, Flexible.

Got it! GTF for hardware!

Great! Let’s move on to the next key concept: memory architecture.

Memory Architecture and Data Movement

Now let's discuss memory architecture. What do you think are the advantages of shared memory systems?

They let all processors access the same memory, which is faster!

Exactly, but they can also create contention. So, what about distributed memory systems? Why might you choose that?

Each processor has its own memory, so there's less contention, but communication between processors can take longer.

Very astute! This balance is crucial for performance. To help us remember the pros and cons, we can use the mnemonic: 'SHARE for Shared Memory - Speed and Hum, At the Risk of Excess' and 'DICE for Distributed - Delay In Communication Easily'.

Nice! I can use those!

Great! Now let’s move on to load balancing.

Load Balancing and Task Scheduling

Load balancing is essential in parallel processing. Why do we need to prevent some processors from being overloaded?

If some are overloaded, they'll slow down the whole system!

Exactly! We want all processors to be working effectively. Can anyone tell me the difference between static and dynamic load balancing?

Static load balancing does not change during processing, while dynamic can adjust based on current loads!

Correct! Remember the acronym 'BALANCE' - Balance Always Leads to Accurate, New Calculative Efficiency - for effective load management.

That’s catchy!

Glad you like it! Next, let's discuss scalability.

Scalability

Scalability is critical for handling increases in data loads. What does horizontal scaling involve?

Adding more nodes to a system!

Great! And what about vertical scaling?

It’s upgrading individual units like adding more cores or RAM!

Exactly! Remember this with 'H for Horizontal - Hug more nodes!' and 'V for Vertical – Value added to units'.

Those are fun!

Now, let’s summarize the key points from today's sessions.

Recap and Key Points Summary

To recap, we've discussed hardware selection, memory architecture, load balancing, and scalability in parallel processing for AI. Do you remember 'GTF', 'SHARE/DICE', 'BALANCE', and our scaling acronyms?

Yes! Each acronym has specific tips for remembering key aspects!

I like how you used those to tie everything together!

Excellent! With these concepts, you're well on your way to understanding how to design effective parallel systems for AI applications!

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

The section covers critical aspects of designing parallel processing systems for AI, including hardware selection, memory architecture, load balancing, and scalability, explaining their impact on performance and efficiency in executing AI tasks.

Detailed

In designing effective parallel processing systems for AI applications, several fundamental considerations must be accounted for to maximize performance and efficiency. These considerations include:

- Hardware Selection: Choosing the right hardware such as GPUs, TPUs, or FPGAs determines the system's capability to handle specific AI workloads efficiently. GPUs excel in handling matrix operations, while TPUs are optimized for deep learning tasks, and FPGAs offer customizable processing for various applications.

- Memory Architecture and Data Movement: Effective management of how data is stored and moved between processing units and memory is critical to minimizing performance bottlenecks. Options such as shared and distributed memory systems can significantly affect the efficiency of parallel computations.

- Load Balancing and Task Scheduling: Distributing tasks evenly across processors, so that none are overloaded while others sit idle, improves resource utilization. Dynamic load balancing and efficient task scheduling are essential practices for achieving this.

- Scalability: Scalable systems can adjust to growing data and computational demands by allowing additional resources (horizontal or vertical scaling) to be incorporated without performance degradation. This is vital as AI models become more complex and data-intensive.

These foundational elements of parallel processing design are crucial in leveraging the full potential of AI technologies and ensuring that applications can meet the evolving demands of data handling and processing.

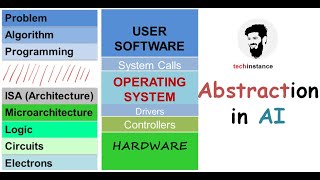

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Hardware Selection

Chapter 1 of 4

Chapter Content

The hardware used in parallel processing systems plays a significant role in their performance:

- GPUs: Graphics Processing Units are designed for parallel processing and are highly effective for AI workloads that involve matrix operations, convolutions, and other data-heavy tasks.

- TPUs: Tensor Processing Units, developed by Google, are specifically designed for AI tasks, particularly deep learning. TPUs are optimized for high throughput and low-latency processing, enabling faster training and inference.

- FPGAs: Field-Programmable Gate Arrays offer flexible parallelism by allowing custom logic to be programmed for specific AI tasks. FPGAs are used in applications where low latency and high performance are critical, such as edge computing.

Detailed Explanation

In parallel processing systems, choosing the right hardware is crucial for optimal performance. GPUs, TPUs, and FPGAs each serve different roles:

1. GPUs are powerful for handling numerous tasks at once, making them ideal for operations like matrix calculations in AI applications. They excel in environments where multiple calculations are needed simultaneously.

2. TPUs, specifically engineered for AI tasks, help in training deep learning models faster by handling high volumes of data with minimal delay.

3. FPGAs provide flexibility, as they can be tailored to specific AI needs, particularly useful in scenarios where quick processing and low latency are essential, like real-time image processing in drones.
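To make the idea of "many calculations at once" concrete, here is a minimal sketch of a matrix multiplication split into independent row tasks. The function names `row_times_matrix` and `parallel_matmul` are illustrative assumptions, not part of any library. Python threads are used only to show the structure of the work; in CPython they will not speed up this arithmetic, whereas a GPU or TPU would run thousands of such independent units in hardware.

```python
from concurrent.futures import ThreadPoolExecutor


def row_times_matrix(row, matrix):
    """Compute one output row. Each row is an independent unit of work."""
    inner = len(matrix)
    cols = len(matrix[0])
    return [sum(row[k] * matrix[k][j] for k in range(inner)) for j in range(cols)]


def parallel_matmul(a, b, max_workers=4):
    """Multiply a (n x m) by b (m x p), submitting one task per output row."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so rows come back in the right place.
        return list(pool.map(lambda row: row_times_matrix(row, b), a))


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(parallel_matmul(a, b))  # [[19, 22], [43, 50]]
```

No output row depends on any other, which is why this kind of workload maps so well onto hardware with many parallel compute units.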

Examples & Analogies

Imagine needing to build a house. You wouldn't just want one type of tool (like a hammer); instead, you'd use various tools for different tasks (screwdrivers, saws, etc.). Similarly, in AI processing, having the right hardware tools (GPUs for speed, TPUs for specific tasks, and FPGAs for flexibility) helps achieve the best results.

Memory Architecture and Data Movement

Chapter 2 of 4

Chapter Content

Efficient memory access and data movement are essential for high-performance parallel processing. Data must be transferred between processing units and memory in a way that minimizes bottlenecks and latency. Memory architectures like shared memory and distributed memory affect how efficiently parallel systems can communicate.

- Shared Memory: In shared-memory systems, all processing units access the same memory, which can reduce communication time but may introduce contention.

- Distributed Memory: In distributed-memory systems, each processing unit has its own local memory, and communication between units must be managed through interconnects, which can introduce latency.

Detailed Explanation

Memory architecture plays a key role in how quickly data can be accessed and processed by different parts of a system. There are two main types:

1. Shared Memory systems allow all processors to tap into a common memory pool, which can speed up communication for tasks needing quick data exchange. However, it can also lead to 'traffic jams' if multiple processors try to access the same data simultaneously.

2. Distributed Memory systems assign individual memory to each processor. While this can avoid traffic jams, it introduces delays that occur when data needs to be communicated between processors, which could slow down processing times.
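The two models can be sketched side by side on a single machine. This is an illustration under stated assumptions, not a real distributed system: threads stand in for processors, a lock models contention on shared memory, and a `queue.Queue` (named `mailbox` here, a hypothetical name) stands in for the interconnect.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """All workers update one shared total; the lock serializes access."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        for value in chunk:
            with lock:  # only one worker may touch the shared total at a time
                total += value

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def distributed_memory_sum(chunks):
    """Each worker sums its private data, then sends one message with the result."""
    mailbox = queue.Queue()  # stands in for the interconnect between units

    def worker(chunk):
        local_total = sum(chunk)  # no contention: the data is private
        mailbox.put(local_total)  # explicit communication step

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(mailbox.get() for _ in chunks)


chunks = [list(range(i, i + 25)) for i in range(0, 100, 25)]
print(shared_memory_sum(chunks))       # 4950
print(distributed_memory_sum(chunks))  # 4950
```

Both versions produce the same answer. The shared version pays for a lock acquisition on every update, while the distributed version pays for one message per worker.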

Examples & Analogies

Think of a library as shared memory where multiple readers can access the same book simultaneously, but if too many want to read it at once, it can become chaotic. Now consider individual books in different homes (distributed memory). Each person can read at their own pace, but if they want to share knowledge, they need to talk and send messages back and forth, which takes extra time.

Load Balancing and Task Scheduling

Chapter 3 of 4

Chapter Content

Load balancing is essential to ensure that computational resources are used efficiently. In parallel processing systems, tasks must be distributed evenly across processing units to prevent some units from being underutilized while others are overloaded.

- Dynamic Load Balancing: This approach adjusts the workload based on the current load of each processing unit, ensuring efficient resource utilization.

- Task Scheduling: Efficient task scheduling ensures that tasks are assigned to the appropriate processing units at the right time, minimizing idle time and ensuring that the system can process data as quickly as possible.
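A small simulation can show why dynamic balancing helps when task costs are uneven. The sketch below is a simplified model rather than a production scheduler: `static_makespan` fixes a round-robin assignment up front, while `dynamic_makespan` hands each task to whichever worker becomes free first. The task costs and function names are made-up assumptions for illustration.

```python
import heapq


def static_makespan(task_costs, num_workers):
    """Round-robin assignment decided before execution starts."""
    loads = [0] * num_workers
    for i, cost in enumerate(task_costs):
        loads[i % num_workers] += cost
    return max(loads)  # the slowest worker determines the finish time


def dynamic_makespan(task_costs, num_workers):
    """Each task goes to whichever worker becomes free first."""
    # Heap of (time at which the worker becomes free, worker id).
    free_at = [(0, w) for w in range(num_workers)]
    heapq.heapify(free_at)
    for cost in task_costs:
        t, w = heapq.heappop(free_at)
        heapq.heappush(free_at, (t + cost, w))
    return max(t for t, _ in free_at)


costs = [8, 1, 8, 1, 8, 1, 1, 1]  # hypothetical task durations
print(static_makespan(costs, 2))   # 25: one worker receives every long task
print(dynamic_makespan(costs, 2))  # 17: long tasks are spread across workers
```

With these costs, the static split leaves one worker with 25 units of work and the other with 4, while the dynamic policy finishes in 17.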

Detailed Explanation

Load balancing and task scheduling help make sure that all parts of a system work together smoothly:

1. Load Balancing takes into account the current activity level of each processing unit and redistributes tasks to ensure all units are busy but not overwhelmed—like evenly dividing cake pieces among guests so that everyone enjoys it at the same time.

2. Task Scheduling refers to deciding which task goes to which processor and when, reducing waiting time and maximizing workflow efficiency. It's like organizing a team project where tasks are assigned based on each person's strengths to streamline the overall progress.
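One common way to realize this in practice is a shared work queue: idle workers pull the next task themselves, so the decision of which task goes to which worker, and when, is made at run time. The following is a minimal sketch using Python's standard library; `run_work_queue` is an illustrative name, and real schedulers add priorities, error handling, and affinity rules that are omitted here.

```python
import queue
import threading


def run_work_queue(tasks, num_workers):
    """Workers pull the next task whenever they are idle; results keep task order."""
    pending = queue.Queue()
    for index, task in enumerate(tasks):
        pending.put((index, task))

    results = [None] * len(tasks)

    def worker():
        while True:
            try:
                index, task = pending.get_nowait()
            except queue.Empty:
                return  # nothing left to schedule
            results[index] = task()  # each index is written by exactly one worker

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results


tasks = [lambda n=n: n * n for n in range(6)]
print(run_work_queue(tasks, num_workers=3))  # [0, 1, 4, 9, 16, 25]
```

Because a worker only takes a new task after finishing its previous one, a slow task never blocks the tasks queued behind it from being picked up by someone else.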

Examples & Analogies

Imagine a restaurant kitchen. If one chef (processing unit) has too many orders at once, while another is idle, service suffers. A good manager (load balancer) assigns orders based on capacity, ensuring swift service. Additionally, scheduling meal prep in advance allows for smooth service—no waiting, everything is ready when diners arrive!

Scalability

Chapter 4 of 4

Chapter Content

Scalability is critical for parallel processing systems, especially as AI models grow in size and complexity. A scalable system can add more processing units or memory to handle increased data and computational demands without compromising performance.

- Horizontal Scaling: This involves adding more nodes to a distributed system to increase computational power.

- Vertical Scaling: Vertical scaling involves upgrading individual processing units (e.g., using GPUs with more cores or adding more memory to a system).

Detailed Explanation

Scalability is about how well a system can grow and manage increased workload without losing efficiency. Two main forms include:

1. Horizontal Scaling adds more machines to a system to handle more tasks simultaneously. It's like expanding a factory by building more assembly lines.

2. Vertical Scaling upgrades existing machines to perform better without changing the overall number of machines. It’s like upgrading a delivery truck to have a larger capacity or better engine for faster deliveries.
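A back-of-the-envelope model helps compare the two strategies. The sketch below assumes perfectly divisible work and no communication or coordination overhead, which real systems never fully achieve, so treat the numbers as an upper bound on the benefit. The function `completion_time` and all figures are hypothetical.

```python
def completion_time(total_work, nodes, throughput_per_node):
    """Idealized time to finish: total work divided by aggregate throughput."""
    if nodes < 1 or throughput_per_node <= 0:
        raise ValueError("need at least one node with positive throughput")
    return total_work / (nodes * throughput_per_node)


baseline = completion_time(1200, nodes=2, throughput_per_node=10)
horizontal = completion_time(1200, nodes=4, throughput_per_node=10)  # more nodes
vertical = completion_time(1200, nodes=2, throughput_per_node=20)    # faster nodes

print(baseline, horizontal, vertical)  # 60.0 30.0 30.0
```

In this idealized model, doubling the node count and doubling per-node throughput are equivalent. In practice they differ: horizontal scaling adds communication between nodes, while vertical scaling is limited by how far a single machine can be upgraded.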

Examples & Analogies

Consider a city growing in population. Horizontal scaling relates to building more roads or streets to manage increased traffic, while vertical scaling would mean adding more lanes to existing roads to handle more vehicles efficiently. Both strategies aim to improve traffic flow, just like a scalable system aims to manage more data and tasks efficiently.

Key Concepts

- Hardware Selection: Choosing the right computing hardware is crucial for optimizing performance based on specific AI tasks.

- Memory Architecture: Structuring data storage effectively can greatly influence processing speed and efficiency.

- Load Balancing: Distributing workloads evenly prevents overloading certain processors while others remain underutilized.

- Scalability: Systems must be able to grow to accommodate increasing data and workload demands.

Examples & Applications

Using a GPU for deep learning tasks involving matrix calculations where many operations can happen simultaneously.

Employing dynamic load balancing to adjust workloads based on the real-time performance metrics of each processor.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

When building a system to run AI quick, remember GTF for every task pick!

Stories

Imagine a fair where each food stall has a line. Some lines move more slowly, but if customers are spread evenly across the stalls, the wait stays short for everyone!

Memory Tools

DICE for Distributed Memory - Delay In Communication Easily.

Acronyms

BALANCE - Balance Always Leads to Accurate, New Calculative Efficiency.

Glossary

- Hardware Selection

The process of choosing appropriate computing hardware, such as GPUs, TPUs, or FPGAs, to execute specific types of AI tasks effectively.

- Memory Architecture

The structure and organization of data storage in a computing system that impacts data access speeds and processing performance.

- Load Balancing

The technique of distributing workloads across multiple processing units to achieve optimal resource utilization and prevent overload on any single unit.

- Scalability

The capability of a system to grow and manage increased data loads by adding resources without compromising performance.
