Power Consumption

Interactive Audio Lesson

A student-teacher conversation explaining the topic in a relatable way.

Understanding Power Consumption

Today, we will explore power consumption, particularly in parallel processing systems like GPUs and TPUs. Can anyone tell me why power consumption is important in these systems?

It’s important because it affects the efficiency and operational costs of running AI applications.

Exactly! High power consumption can make running large-scale AI tasks very costly. Now, why might this be a bigger challenge in edge AI applications?

Because those devices have limited power sources, like batteries.

Great observation! Energy efficiency becomes crucial in these scenarios. Let’s remember this with the acronym E.E., for 'Energy Efficiency.' It’s vital for optimizing both performance and power use.

So, we need to find ways to manage our power consumption effectively?

Exactly! To summarize, we need to balance performance demands against efficient power use. We'll revisit this concept as we move forward!

Challenges with High-Performance Hardware

Let’s talk more about the power-consumption challenges that high-performance hardware, like GPUs and TPUs, presents. Who can explain why this hardware is so power-hungry?

They handle a lot of computations at once, which takes a lot of energy.

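Correct. The massive parallelism they achieve comes at a cost. There’s a principle called Amdahl's Law, which says that the speedup from adding processors is limited by the part of a program that cannot be parallelized, so each additional processor brings a smaller gain. What do you think this means for our power management?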

It means sometimes it's not worth adding more processors if the power consumption outweighs the gains from added speed.

Exactly right! This further complicates power management: we aim for energy savings without losing too much performance. Remember, maximize efficiency without compromising performance!
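The trade-off in this exchange can be made concrete with Amdahl's Law, S(N) = 1 / ((1 − p) + p/N), where p is the parallelizable fraction of the work. The sketch below pairs it with a deliberately simple, hypothetical energy model in which every device draws full power for the whole run. The 95% parallel fraction, 100 s single-device runtime, and 250 W per device are illustrative assumptions, not measurements.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's Law: speedup on n processors when a fraction p of the work parallelizes."""
    return 1.0 / ((1.0 - p) + p / n)


def total_energy_joules(p: float, n: int, serial_time_s: float, watts_per_device: float) -> float:
    """Hypothetical model: all n devices draw full power for the whole (shortened) run."""
    runtime_s = serial_time_s / amdahl_speedup(p, n)
    return n * watts_per_device * runtime_s


if __name__ == "__main__":
    P = 0.95    # assumed parallelizable fraction of the workload
    T1 = 100.0  # assumed runtime on one device, in seconds
    W = 250.0   # assumed power draw per device, in watts
    for n in (1, 2, 4, 8, 16, 32, 64):
        s = amdahl_speedup(P, n)
        e = total_energy_joules(P, n, T1, W)
        print(f"N={n:3d}  speedup={s:5.2f}x  energy={e / 1000:6.1f} kJ")
```

Under these assumptions the speedup can never exceed 1/(1 − p) = 20×, while energy grows linearly with N (in this model it equals W·T1·(N(1 − p) + p)), which is the diminishing-returns point raised in the dialogue.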

Strategies for Optimizing Power Use

Now that we understand the challenges, let’s explore some strategies for optimizing power use in these systems. Can anyone propose an approach?

We could use dynamic scaling to adjust power based on the workload.

Good idea! Dynamic scaling is a powerful method. By adjusting computational resources, such as clock speed, voltage, or the number of active processors, to match real-time demand, we avoid spending energy on capacity we don't need. Can anyone think of another strategy?

Using more energy-efficient AI algorithms or models could help too.

Exactly! Designing algorithms that require fewer resources is a critical factor in managing power. Remember to think about both hardware choices and algorithmic efficiency when considering power consumption!
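One widely used form of dynamic scaling is dynamic voltage and frequency scaling (DVFS): when demand drops, the processor lowers its clock frequency and supply voltage. Because dynamic (switching) power is roughly P ≈ C·V²·f, lowering voltage and frequency together reduces power faster than it reduces throughput. The sketch below is a hypothetical governor, not any real driver's policy; the operating points, the capacitance constant, and the rule "pick the slowest state that keeps utilization under 80%" are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PState:
    """One hypothetical performance state: clock frequency and supply voltage."""
    freq_ghz: float
    volts: float


# Illustrative operating points, ordered from slowest to fastest (assumed values).
PSTATES = [PState(0.8, 0.70), PState(1.2, 0.80), PState(1.6, 0.90), PState(2.0, 1.00)]
C_EFF = 20.0  # assumed effective switched capacitance, in arbitrary units


def dynamic_power(state: PState) -> float:
    """Dynamic power model P = C * V^2 * f (arbitrary units)."""
    return C_EFF * state.volts ** 2 * state.freq_ghz


def choose_pstate(demand_gops: float, ops_per_cycle: float = 1.0, target_util: float = 0.8) -> PState:
    """Pick the slowest state whose capacity keeps utilization at or below target_util."""
    for state in PSTATES:
        capacity_gops = state.freq_ghz * ops_per_cycle
        if demand_gops <= target_util * capacity_gops:
            return state
    return PSTATES[-1]  # demand exceeds every level: run at the fastest state


if __name__ == "__main__":
    for demand in (0.3, 1.0, 1.5):
        s = choose_pstate(demand)
        print(f"demand={demand} GOPS -> {s.freq_ghz} GHz @ {s.volts} V, power={dynamic_power(s):.2f}")
```

With these assumed numbers, a light load of 0.3 GOPS runs at 0.8 GHz and draws about a fifth of the power of the top state (7.84 vs. 40 units).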

Impact of Power Consumption on AI Advancements

Let’s discuss the wider implications of power consumption. How might it affect the future of AI development?

If we can't solve the power issue, it might slow down the development of new AI technologies.

That’s a crucial point! Sustainability in technology is more important than ever. As developers, we need to be conscientious about our power usage while pushing AI forward. To wrap up today, think about how solutions in power efficiency can pave the way for future innovations.

Introduction & Overview

Standard

The section addresses the issue of power consumption in parallel processing systems, including GPUs and TPUs used in AI applications. It emphasizes the need for energy efficiency and the challenges associated with maintaining high performance while minimizing energy use.

Detailed

Power Consumption in Parallel Processing Systems

Power consumption is a critical challenge when implementing parallel processing systems, especially those powered by high-performance hardware like GPUs (Graphics Processing Units) and TPUs (Tensor Processing Units). As these technologies are designed for heavy computational loads in AI applications, they inherently consume significant amounts of power. This section explores the complexities of managing power consumption within the context of AI, emphasizing the effort required to balance energy efficiency with performance demands. In scenarios such as edge AI applications, where devices are constrained by available power, implementing strategies to optimize energy use without sacrificing computational efficacy becomes crucial.

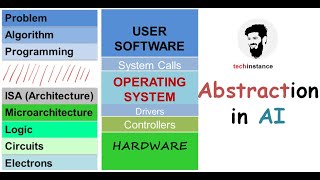

Youtube Videos

Audio Book

Significant Power Consumption

Chapter 1 of 2

Chapter Content

Parallel processing systems, particularly those using high-performance hardware like GPUs and TPUs, can consume significant amounts of power.

Detailed Explanation

This passage highlights that systems designed for parallel processing, such as those using Graphics Processing Units (GPUs) and Tensor Processing Units (TPUs), can draw a lot of power. The reason is that these hardware units perform many calculations at the same time (in parallel), keeping far more circuitry active at once than a traditional processor does, so their power draw is correspondingly higher. The key takeaway is that more powerful systems tend to draw more power.
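It helps to separate power (watts, the rate of energy use) from energy (joules, which for a constant draw is watts × seconds). A device that draws more power can still use less total energy if it finishes the job sooner. The wattages and runtimes below are made-up illustrations, not benchmark results.

```python
def energy_joules(power_watts: float, runtime_s: float) -> float:
    """For a constant power draw, energy is power multiplied by time: E = P * t."""
    return power_watts * runtime_s


# Hypothetical workload on two devices (illustrative numbers only).
cpu_energy = energy_joules(power_watts=100.0, runtime_s=600.0)  # slower, lower power
gpu_energy = energy_joules(power_watts=300.0, runtime_s=60.0)   # faster, higher power

print(f"CPU: {cpu_energy / 1000:.1f} kJ, GPU: {gpu_energy / 1000:.1f} kJ")  # CPU: 60.0 kJ, GPU: 18.0 kJ
```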

Examples & Analogies

Imagine a large vehicle, like a bus or a truck, that needs more fuel than a small car because it carries more passengers or cargo. Similarly, powerful processing units need more 'fuel' (electricity) to carry out complex computations quickly. Just as you'd expect a big vehicle to have higher fuel needs, we expect high-performance AI processing units to demand more energy.

Challenge of Energy Efficiency

Chapter 2 of 2

Chapter Content

Ensuring energy efficiency while maintaining high performance is a challenge, especially in edge AI applications with power constraints.

Detailed Explanation

This passage conveys the difficulty of balancing energy consumption with high performance in AI systems. In edge AI applications in particular, where devices are often limited by battery life and must operate without a constant power supply, finding solutions that use less power but still perform well becomes crucial. The goal is to reduce energy use while preserving as much computational capability as possible.

Examples & Analogies

Consider a smartphone that wants to run powerful applications but only has a small battery. It must use its battery wisely to prolong usage time while still delivering good performance. If the phone drains the battery too quickly due to heavy processing tasks, it won’t be useful for everyday activities. Similarly, AI systems at the edge face this balancing act between power and performance.
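The battery side of this balancing act is simple arithmetic: ideal runtime ≈ battery energy (watt-hours) ÷ average power draw (watts). The sketch assumes a hypothetical 15 Wh battery and an AI accelerator that is busy only part of the time (its duty cycle); the 5 W active and 0.5 W idle figures are illustrative, and real devices also lose capacity to conversion losses, aging, and other components.

```python
def average_power_w(active_w: float, idle_w: float, duty_cycle: float) -> float:
    """Average draw when the accelerator is busy a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_w + (1.0 - duty_cycle) * idle_w


def runtime_hours(battery_wh: float, avg_power_w: float) -> float:
    """Ideal battery runtime, ignoring conversion losses and battery aging."""
    return battery_wh / avg_power_w


BATTERY_WH = 15.0  # assumed smartphone-class battery capacity

for duty in (1.0, 0.25, 0.05):
    p = average_power_w(active_w=5.0, idle_w=0.5, duty_cycle=duty)
    print(f"duty={duty:.2f}: avg {p:.3f} W -> {runtime_hours(BATTERY_WH, p):.1f} h")
```

Under these assumptions, running the accelerator flat out empties the battery in 3 hours, while keeping it busy only 5% of the time stretches runtime past 20 hours.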

Key Concepts

- Power Consumption: The total energy used by processing units during operations.
- Energy Efficiency: The approach to maximize performance while minimizing energy waste.
- Amdahl's Law: A principle determining the limits of improvement through parallel processing.
- Dynamic Scaling: An adaptive approach to match resource allocation with workload demands.

Examples & Applications

A cloud data center that powers down or throttles idle GPUs during low-traffic hours to reduce energy costs illustrates effective energy management.
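The data-center example can be sketched as a simple autoscaling rule: keep only as many GPUs powered on as the current request rate needs, plus some headroom for spikes. The per-GPU throughput, wattage, headroom, fleet size, and hourly traffic profile below are all hypothetical, and the model assumes a powered-off GPU draws nothing.

```python
import math

GPU_CAPACITY_RPS = 100.0  # assumed requests/second one GPU can serve
GPU_ACTIVE_W = 300.0      # assumed power of a GPU that is switched on
HEADROOM = 1.2            # assumed 20% spare capacity for traffic spikes
FLEET_SIZE = 10           # GPUs available in total


def gpus_needed(request_rate_rps: float) -> int:
    """Smallest GPU count that serves the load with headroom, capped at the fleet size."""
    needed = math.ceil(request_rate_rps * HEADROOM / GPU_CAPACITY_RPS)
    return max(1, min(FLEET_SIZE, needed))


# Hypothetical request rate for each of 24 hours (low overnight, peak in the afternoon).
hourly_rps = [50] * 7 + [300] * 5 + [700] * 6 + [300] * 4 + [50] * 2

# Energy in kWh, assuming switched-off GPUs draw zero power.
static_kwh = FLEET_SIZE * GPU_ACTIVE_W * len(hourly_rps) / 1000
scaled_kwh = sum(gpus_needed(r) * GPU_ACTIVE_W for r in hourly_rps) / 1000
print(f"always-on: {static_kwh:.1f} kWh/day, scaled: {scaled_kwh:.1f} kWh/day")
```

With this made-up traffic profile, scaling the active fleet to demand uses 29.7 kWh per day instead of 72.0 kWh for keeping all ten GPUs on.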

Using algorithms designed for energy efficiency, such as pruning in deep learning, can promote sustainability in AI technology.
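Pruning removes weights that contribute little to a model's output, so fewer multiply-accumulate operations are needed; in a matrix-vector product, each nonzero weight corresponds to one multiply-accumulate. The sketch below shows unstructured magnitude pruning on a small weight matrix written as plain Python lists. The 50% sparsity level and the example weights are arbitrary, and whether zeroed weights actually save energy depends on hardware and software support for sparsity, which this sketch does not model.

```python
def magnitude_prune(weights: list[list[float]], sparsity: float) -> list[list[float]]:
    """Zero out (roughly) the `sparsity` fraction of weights with the smallest |value|.

    Ties at the threshold are all pruned, so slightly more than the target may be removed.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    flat = sorted(abs(w) for row in weights for w in row)
    k = int(len(flat) * sparsity)  # number of weights to remove
    if k == 0:
        return [row[:] for row in weights]
    threshold = flat[k - 1]  # largest magnitude that gets pruned
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def count_nonzero(weights: list[list[float]]) -> int:
    """Number of nonzero weights, i.e. multiply-accumulates in a matrix-vector product."""
    return sum(1 for row in weights for w in row if w != 0.0)


W = [[0.9, -0.05, 0.3, 0.01],
     [-0.7, 0.2, -0.02, 0.6]]
pruned = magnitude_prune(W, sparsity=0.5)
print(pruned)  # [[0.9, 0.0, 0.3, 0.0], [-0.7, 0.0, 0.0, 0.6]]
print(count_nonzero(W), "->", count_nonzero(pruned), "nonzero weights")  # 8 -> 4 nonzero weights
```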

Memory Aids

Rhymes

In processing power, let’s not waste, energy efficiency we must embrace.

Stories

Imagine a town where power is gold. Everyone must share to keep things bold. If one house wastes, the whole town suffers; energy balance makes the good life buffer.

Memory Tools

Remember 'E.E.A.D' for Energy Efficiency, Amdahl's Law, and Dynamic scaling to keep your system in command.

Acronyms

Use 'PEACE', standing for Power, Efficiency, Amdahl’s Law, Consumption, and Energy, to recall your power optimization strategies.

Glossary

- Power Consumption

The amount of energy consumed by a system during its operation, particularly relevant in high-performance computing applications.

- Energy Efficiency

The practice of using less energy to provide the same level of performance in computing systems.

- Amdahl's Law

A principle that states the potential speedup of a program using parallel processing is limited by the portion of the program that cannot be parallelized.
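In symbols, with p the fraction of the program that can be parallelized and N the number of processors, the speedup is:

```latex
S(N) = \frac{1}{(1 - p) + \frac{p}{N}}, \qquad \lim_{N \to \infty} S(N) = \frac{1}{1 - p}
```

For example, with p = 0.95 the speedup can never exceed 1/0.05 = 20×, no matter how many processors are added.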

- Dynamic Scaling

An approach where computational resources are adjusted dynamically based on current workloads to improve energy efficiency.
