Introduction to Parallel Processing Architectures for AI

Interactive Audio Lesson

A student-teacher conversation that explains the topic in a relatable way.

Overview of Parallel Processing

Today, we're diving into parallel processing, which is essential for AI. Can anyone tell me why handling large datasets requires parallel processing?

Because sequential processing would take too long to get through all that data!

Exactly! Parallel processing allows us to execute multiple tasks at the same time. This leads to faster training and inference of AI models. Let's break down the key architectures used in parallel processing.

What types of architectures are we talking about?

The two main classes we'll focus on are SIMD and MIMD. SIMD stands for Single Instruction, Multiple Data, while MIMD stands for Multiple Instruction, Multiple Data. Remember: SIMD applies the same instruction to many data points at once, while MIMD lets different processors run different instructions on different data simultaneously. This distinction is crucial!

Can you give us an example of SIMD?

Sure! In a neural network, matrix multiplications map well to SIMD because the same multiply-and-add operation is applied across many matrix elements. Any questions before we summarize?

Not from my side!

Great! To summarize, parallel processing allows simultaneous execution of tasks, crucial for AI's efficiency, with SIMD and MIMD being the primary models.
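To make the SIMD/MIMD distinction concrete, here is a minimal Python sketch of the two programming patterns. It is a conceptual model only, assuming nothing about real hardware: true SIMD happens in vector units or GPU lanes, and the list comprehension in `simd_style` merely models "one instruction, many data elements". The function names `simd_style` and `mimd_style` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def simd_style(instruction, data):
    """Model of SIMD: apply ONE instruction to every data element."""
    return [instruction(x) for x in data]

def mimd_style(tasks):
    """Model of MIMD: run DIFFERENT instructions on DIFFERENT data concurrently.

    `tasks` is a list of (function, argument) pairs; each pair is submitted
    to its own worker thread.
    """
    with ThreadPoolExecutor(max_workers=max(1, len(tasks))) as pool:
        futures = [pool.submit(fn, arg) for fn, arg in tasks]
        return [f.result() for f in futures]

# SIMD-style: the same "multiply by 2" step across a whole vector
print(simd_style(lambda x: 2 * x, [1, 2, 3, 4]))  # [2, 4, 6, 8]

# MIMD-style: unrelated operations on unrelated inputs, dispatched concurrently
print(mimd_style([(sum, [1, 2, 3]), (max, [7, 3, 9]), (str.upper, "lane")]))
# [6, 9, 'LANE']
```

The MIMD sketch uses threads only to show independent instruction streams; in CPython, CPU-bound speedups usually come from processes or native libraries rather than threads.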

Applications of Parallel Processing in AI

Now that we've covered the architectures, let’s discuss their applications in AI. Can anyone think of a situation where parallel processing is vital?

Training deep learning models requires a lot of computations, right?

Absolutely! Training deep neural networks involves adjusting weights through backpropagation, which is computationally intense. Using parallel processing, especially with GPUs, accelerates this process. Why do we prefer GPUs over CPUs?

Because GPUs are optimized for parallel tasks.

Exactly, they can handle thousands of operations concurrently. Beyond deep learning, what other applications can you think of?

How about real-time AI like autonomous vehicles?

Spot on! Real-time inference for tasks like image processing in self-driving cars or robotics requires quick processing, which parallel architectures provide. Let’s summarize: GPUs enable faster model training, and parallel processing supports applications from deep learning to real-time inference.
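One common way GPUs and multi-device setups speed up training is data parallelism: each worker computes gradients on its own slice of the batch, and the partial results are combined into one update. The sketch below assumes a toy one-parameter model `y_hat = w * x` with squared-error loss; the helper names are hypothetical, and the thread pool stands in for separate devices.

```python
from concurrent.futures import ThreadPoolExecutor

def shard_gradient(w, xs, ys):
    """Sum of per-example gradients of squared error for the model y_hat = w * x."""
    g = sum(2 * (w * x - y) * x for x, y in zip(xs, ys))
    return g, len(xs)

def data_parallel_gradient(w, xs, ys, num_workers):
    """Mean gradient over the batch, computed shard-by-shard on parallel workers."""
    size = -(-len(xs) // num_workers)  # ceiling division: examples per shard
    shards = [(xs[i:i + size], ys[i:i + size]) for i in range(0, len(xs), size)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        results = list(pool.map(lambda s: shard_gradient(w, s[0], s[1]), shards))
    # Combine partial results; this stands in for the cross-device gradient reduction
    total_g = sum(g for g, _ in results)
    total_n = sum(n for _, n in results)
    return total_g / total_n

xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by the true weight w = 2
w, lr = 0.0, 0.02
for _ in range(50):
    w -= lr * data_parallel_gradient(w, xs, ys, num_workers=2)
print(round(w, 4))  # 2.0
```

Because the shard sums are added before dividing by the total example count, the result matches the full-batch gradient regardless of how many workers are used.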

Design Considerations in Parallel Processing

Let’s explore what goes into designing these parallel processing systems. What do you think is the most important factor to consider?

Maybe the hardware selection?

Yes! Choosing the right hardware, such as GPUs or TPUs, greatly affects performance. Another factor is memory architecture. What can you tell me about shared vs. distributed memory?

In shared memory, all processors access the same memory, but in distributed memory, each has its own memory.

Correct! Shared-memory systems make communication between processors simpler and faster, while distributed-memory systems scale better to large numbers of processors. Can anyone remember how load balancing plays a role?

It ensures tasks are distributed evenly to prevent some processors from being overloaded.

Perfect! Good design choices can optimize efficiency and scalability. To summarize, effective design considers hardware selection, memory architecture, and load balancing to enable powerful AI systems.
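The effect of load balancing can be shown with a small scheduling sketch. It compares a static round-robin assignment with a greedy "largest task to the least-loaded worker" rule (longest-processing-time-first, one of several possible balancing strategies; it is chosen here only for illustration). The task costs are made-up numbers, and the slowest worker's total load (the makespan) determines when the whole job finishes.

```python
import heapq

def round_robin(task_costs, num_workers):
    """Static assignment: task i goes to worker i % num_workers, ignoring cost."""
    loads = [0] * num_workers
    for i, cost in enumerate(task_costs):
        loads[i % num_workers] += cost
    return loads

def greedy_balance(task_costs, num_workers):
    """Longest-processing-time-first: largest task goes to the least-loaded worker."""
    heap = [(0, w) for w in range(num_workers)]  # (current load, worker id)
    loads = [0] * num_workers
    for cost in sorted(task_costs, reverse=True):
        load, w = heapq.heappop(heap)
        loads[w] = load + cost
        heapq.heappush(heap, (loads[w], w))
    return loads

costs = [8, 1, 7, 1, 6, 1]  # hypothetical, deliberately uneven task sizes
print(max(round_robin(costs, 2)))     # 21: one worker gets all the big tasks
print(max(greedy_balance(costs, 2)))  # 13: much closer to the ideal 24 / 2 = 12
```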

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

Parallel processing architectures enable simultaneous execution of multiple computations, which is crucial for artificial intelligence applications that require processing vast amounts of data and performing extensive calculations. This section highlights the principles of parallel processing, its applications in AI, and design considerations necessary for effective implementation.

Detailed

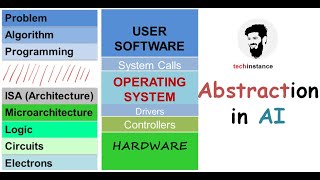

Parallel processing involves executing multiple computations or tasks simultaneously, a necessity for artificial intelligence (AI) due to the significant computational power required for tasks like training deep learning models and processing large datasets. This section delves into the underlying principles of parallel processing architectures, exploring classification models such as Single Instruction, Multiple Data (SIMD) and Multiple Instruction, Multiple Data (MIMD). Additionally, the applications of these architectures in AI systems are discussed, focusing on their critical roles in deep learning, large-scale data processing, and real-time inference.

The chapter also considers the design challenges that arise when implementing parallel processing systems, including hardware selection, memory architecture, load balancing, and scalability. Understanding these design principles is vital for creating efficient AI systems capable of handling complex computational demands.

Audio Book

Definition of Parallel Processing

Chapter Content

Parallel processing refers to the simultaneous execution of multiple computations or tasks.

Detailed Explanation

Parallel processing is a computing technique where many calculations or processes are carried out simultaneously instead of sequentially. This means that instead of performing one operation at a time, multiple operations can be done at once. This method is particularly beneficial in the context of artificial intelligence (AI), as it allows for efficient handling of large amounts of data.
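The difference between sequential and simultaneous execution can be seen with a minimal Python sketch. It assumes three independent tasks that each simply wait 0.2 seconds (a stand-in for real work); run one after another they take about 0.6 s, and run concurrently they typically finish in about 0.2 s. The names `task` and `names` are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def task(name, seconds=0.2):
    """Stand-in for an independent unit of work; here it simply waits."""
    time.sleep(seconds)
    return f"{name} done"

names = ["task A", "task B", "task C"]

start = time.perf_counter()
sequential = [task(n) for n in names]  # one after another
t_seq = time.perf_counter() - start

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=len(names)) as pool:
    parallel = list(pool.map(task, names))  # all three run at the same time
t_par = time.perf_counter() - start

print(sequential == parallel)  # True: same results, produced concurrently
print(f"sequential: {t_seq:.2f}s, parallel: {t_par:.2f}s")
```

Waiting tasks overlap well in threads; for CPU-heavy Python code, processes or native libraries are usually needed to get the same effect.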

Examples & Analogies

Think of parallel processing like a team of chefs in a kitchen. Instead of one chef preparing all the dishes one by one, the chefs divide the tasks among themselves. One chef might chop vegetables while another cooks pasta and a third bakes the bread, all at the same time. This team effort gets the meal ready much faster than if one chef did everything.

Importance of Parallel Processing for AI

Chapter Content

In the context of AI, parallel processing is critical for handling large datasets, training complex models, and speeding up inference.

Detailed Explanation

AI applications, especially those involving deep learning, require massive computational resources. They need to process vast datasets and perform numerous calculations simultaneously. Parallel processing helps to address these needs by distributing tasks across multiple processors, allowing for faster data processing, model training, and inference operations.
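How much faster distribution makes a job depends on how much of it can actually run in parallel. A standard way to estimate this is Amdahl's law, S(N) = 1 / ((1 - p) + p / N), where p is the parallelizable fraction of the work and N is the number of processors. The sketch below assumes that idealized model (no communication or synchronization overhead), so real speedups will be lower.

```python
def amdahl_speedup(parallel_fraction, num_processors):
    """Ideal speedup when only `parallel_fraction` of the work is spread over processors."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if num_processors < 1:
        raise ValueError("num_processors must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / num_processors)

# Even with 95% of the work parallelized, speedup stays below 1 / 0.05 = 20
for n in (1, 8, 64, 1024):
    print(n, round(amdahl_speedup(0.95, n), 2))
```

The serial 5% quickly dominates: adding processors helps a great deal at first, then yields diminishing returns.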

Examples & Analogies

Imagine you are a student working on a large project that involves research, writing, and presentation preparation. If you were to do everything alone, it would take a long time to finish. However, if you divided the project among several friends, one could research while another writes and another makes the presentation slides. Completing the project becomes much quicker, just as parallel processing accelerates AI tasks.

Role of Parallel Processing Architectures

Chapter Content

Parallel processing architectures, which use multiple processors or cores to perform computations in parallel, provide the necessary computational resources for efficient AI processing.

Detailed Explanation

Parallel processing architectures consist of systems designed to handle multiple processes at once. They use multiple computing units such as processors or cores, allowing for efficient processing of data. This architecture is particularly useful for AI tasks, where processing speed and capacity are crucial.
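A common first step in using a multi-core system is to split the data into one chunk per core and combine the partial results. The sketch below assumes one worker per core reported by `os.cpu_count()` (which may return `None`, hence the fallback); the helper names are hypothetical. Threads keep the example simple, but in CPython they do not run pure-Python arithmetic truly in parallel, so the sketch shows the decomposition rather than a real speedup.

```python
import os
from concurrent.futures import ThreadPoolExecutor

def split_evenly(data, parts):
    """Divide `data` into `parts` contiguous chunks whose sizes differ by at most one."""
    q, r = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + q + (1 if i < r else 0)
        chunks.append(data[start:end])
        start = end
    return chunks

def parallel_sum_of_squares(data, workers=None):
    # One worker per reported core; fall back to 1 if the count is unknown
    workers = workers or os.cpu_count() or 1
    chunks = split_evenly(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda c: sum(x * x for x in c), chunks))
    return sum(partials)  # combine per-core partial results

data = list(range(1_000))
print(parallel_sum_of_squares(data) == sum(x * x for x in data))  # True
```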

Examples & Analogies

Consider the architecture of a modern train station. If a train station has many platforms and trains (similar to processors), it can handle a large number of passengers (data) quickly and efficiently. If there were only one platform, passengers would have to wait in long lines, which is not efficient. In the same way, parallel processing architectures help manage large volumes of AI tasks effectively.

Overview of Chapter Content

Chapter Content

This chapter explores the principles of parallel processing architectures, their applications in AI circuits, and the design considerations and challenges in achieving parallelism for AI applications.

Detailed Explanation

The chapter will delve into the fundamental concepts of how parallel processing architectures work, how they are applied in artificial intelligence circuits, and the various design aspects that must be considered when developing systems that use parallel processing. It also discusses the challenges encountered when trying to implement parallelism in AI applications.

Examples & Analogies

Think of this chapter as a road map for a journey. It outlines the important mountains (principles), valleys (applications), and roadblocks (challenges) you will encounter along the way. Just as a road map guides a traveler, this chapter guides readers through the complex world of parallel processing in AI.

Key Concepts

- Parallel Processing: The ability to execute multiple computations at once, crucial for AI tasks.

- SIMD vs. MIMD: Two classes of architecture. SIMD applies the same instruction to many data elements, while MIMD lets different processors execute different instructions on different data.

- Applications in AI: Parallel processing is essential for deep learning, real-time inference, and handling large datasets.

Examples & Applications

In deep learning, neural network training relies on SIMD-style hardware to perform matrix multiplications rapidly.

For autonomous vehicles, a MIMD architecture allows processors to handle image recognition and navigation tasks simultaneously.
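The matrix-multiplication example can be sketched at the software level: every row of A is multiplied by B using the same routine, so the rows form independent work items that can be handed to parallel workers. This is a loose analogue of SIMD-style data parallelism, assuming plain Python lists as matrices; real accelerators perform the same idea in hardware lanes. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def row_times_matrix(row, B):
    """The single operation every worker runs: one row of A times matrix B."""
    cols = len(B[0])
    return [sum(row[k] * B[k][j] for k in range(len(B))) for j in range(cols)]

def parallel_matmul(A, B, workers=4):
    if len(A[0]) != len(B):
        raise ValueError("inner dimensions must match")
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Same operation, different data: each row of A is processed independently
        return list(pool.map(lambda row: row_times_matrix(row, B), A))

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(parallel_matmul(A, B))  # [[19, 22], [43, 50]]
```

`pool.map` returns results in input order, so the output rows line up with the rows of A.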

Memory Aids

Rhymes

Parallel tasks, side by side, Loads of data, we can ride.

Stories

Imagine a busy kitchen where multiple chefs are cooking various meals at once. Some chefs chop vegetables, while others boil pasta—this is like MIMD, as they each handle different tasks simultaneously.

Memory Tools

P.A.R.A.L.L.E.L - Parallelism Allows Rapid And Large Loads of computation for AI Efficiency and Learning.

Acronyms

SIMD: S (Single), I (Instruction), M (Multiple), D (Data)

Glossary

- Parallel Processing

The simultaneous execution of multiple computations or tasks.

- AI (Artificial Intelligence)

Simulation of human intelligence processes by machines, especially computer systems.

- SIMD (Single Instruction, Multiple Data)

An architecture that executes the same instruction on multiple data points.

- MIMD (Multiple Instruction, Multiple Data)

An architecture where different processors execute different instructions on different data.

- Deep Neural Networks (DNNs)

A type of neural network with multiple hidden layers between input and output, which lets it learn complex patterns.

- GPU (Graphics Processing Unit)

A hardware component optimized for parallel processing, particularly suited for AI applications.

- TPU (Tensor Processing Unit)

A custom hardware accelerator designed by Google to speed up machine learning workloads.
