Microarchitecture Factors Affecting Performance

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Superscalar Design

Today we're diving into superscalar design. This design allows a processor to execute more than one instruction during a single clock cycle. Can anyone explain how this might improve performance?

Could it mean that the processor can complete tasks faster by handling multiple instructions at once?

Exactly! This capability increases the overall throughput. Think of it like a highway with multiple lanes; more cars can travel at once. What’s another feature that helps performance?

Out-of-order execution? It sounds like it helps with efficiency by not having to order everything strictly.

Great point! Out-of-order execution improves throughput by using the processor's execution units more effectively. Instead of waiting its turn, an instruction can execute as soon as its input operands are ready and an execution unit is free, which reduces idle time.

So, while one instruction waits, others can still be processed?

Exactly, that keeps the pipeline full and efficient. Lastly, you can remember superscalar design with the acronym 'PER', which stands for 'Parallelism', 'Efficiency', and 'Resource utilization'.

Branch Prediction and Prefetching

Now, let's talk about branch prediction. It anticipates the direction of branches to minimize pipeline stalls. How does that sound?

So if it guesses wrong, it has to start over, right? That sounds costly!

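Mostly right! When a prediction is wrong, the processor has to discard the instructions it fetched down the wrong path and restart from the correct one, which wastes cycles. But when predictions are accurate, performance improves significantly. And what about instruction prefetching?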

Isn't prefetching about getting the next instructions before they are needed?

Exactly! By fetching instructions early, the processor minimizes delays. Pairing these techniques really boosts efficiency! Remember 'BIPP': 'Branch prediction and Instruction Prefetching for Performance'.

NEON SIMD Unit

Finally, let's discuss the NEON SIMD unit. Why do you think it's important for media and ML applications?

Because it can handle multiple data simultaneously, right?

Exactly! SIMD stands for Single Instruction, Multiple Data. This enhances operations like image processing by executing the same instruction across many data points at once.

Does that mean processing will be much faster for certain tasks?

Yes! This capability is especially useful in fields like multimedia and machine learning. Remember 'SEE': 'SIMD Enhances Efficiency'!

I’ll remember these concepts thanks to those memory aids!

Fantastic! Remembering these key features will help us understand Cortex-A performance better.

Introduction & Overview

The section highlights several key enhancements in Cortex-A microarchitecture, such as superscalar design, out-of-order execution, branch prediction, prefetching, and SIMD capabilities, and explains how each contributes to improved performance.

The performance of Cortex-A processors depends heavily on their microarchitectural features. The most important are:

Superscalar Design

This allows multiple instructions to be processed in a single clock cycle, improving the instruction throughput.

Out-of-Order Execution

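By executing instructions as soon as their operands and execution resources are available, rather than strictly in program order, this feature increases throughput and reduces idle time in the pipeline. (Not every Cortex-A core uses it: smaller cores such as the Cortex-A53 execute instructions in order.)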

Branch Prediction

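This predicts the outcome of branches before they are resolved, so the pipeline can keep fetching and executing along the predicted path instead of stalling. Only a misprediction forces the processor to discard that work and restart on the correct path.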

Instruction Prefetching

This technique is used to fetch instructions into the cache before they are needed for execution, thereby minimizing wait times resulting from cache misses.

NEON SIMD Unit

NEON is Arm's Advanced SIMD vector processing unit. It applies one instruction to several data elements packed into a 128-bit register, which accelerates media processing and machine learning workloads.

Overall, these features work together to improve the performance and efficiency of Cortex-A processors, making them suitable for a wide range of mobile computing applications.

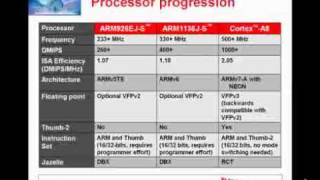

Audio Book

Superscalar Design

Chapter 1 of 5

Chapter Content

Superscalar design

Allows multiple instructions per cycle

Detailed Explanation

A superscalar design means that the processor can execute more than one instruction in a single clock cycle. This is accomplished by fetching, decoding, and issuing several instructions per cycle to multiple execution units within the processor. This feature enhances performance because the processor can work on more instructions at the same time, increasing the overall throughput of the system.
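The idea of issuing several instructions per cycle can be illustrated with a toy model. This is a minimal sketch under simplifying assumptions, not a model of any real Cortex-A pipeline: every instruction takes one cycle, at most `width` instructions issue per cycle and any mix of them can execute, and an instruction cannot issue in the same cycle as an older instruction that produces one of its source registers. The function `issue_cycles` and the register names are hypothetical.

```python
from typing import List, Tuple

# (destination register, source registers); names are hypothetical.
Instr = Tuple[str, Tuple[str, ...]]


def issue_cycles(program: List[Instr], width: int) -> int:
    """Cycles needed to issue `program` in order, up to `width` per cycle.

    Toy model: 1-cycle latency, any mix of instructions can execute together,
    and only read-after-write dependences are checked.
    """
    cycles = 0
    i = 0
    while i < len(program):
        cycles += 1
        written_this_cycle = set()  # results not available until next cycle
        issued = 0
        while i < len(program) and issued < width:
            dest, srcs = program[i]
            if any(src in written_this_cycle for src in srcs):
                break  # needs a result produced in this same cycle: wait
            written_this_cycle.add(dest)
            issued += 1
            i += 1
    return cycles


independent = [("r1", ("r0",)), ("r2", ("r0",)), ("r3", ("r0",)), ("r4", ("r0",))]
dependent = [("r1", ("r0",)), ("r2", ("r1",)), ("r3", ("r2",)), ("r4", ("r3",))]

print(issue_cycles(independent, width=1))  # 4
print(issue_cycles(independent, width=2))  # 2
print(issue_cycles(dependent, width=2))    # 4
```

Independent instructions benefit fully from the wider issue width, while a chain of dependent instructions gains nothing, which is why the real speedup from a superscalar design depends on how much instruction-level parallelism the code contains.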

Examples & Analogies

Think of a superscalar design like having multiple cash registers open at a grocery store. If only one register is open, customers have to wait in line one at a time. However, if multiple registers (execution units) are open, many customers can be processed at once, reducing wait time and speeding up the entire checkout process.

Out-of-Order Execution

Chapter 2 of 5

Chapter Content

Out-of-order execution

Increases throughput

Detailed Explanation

Out-of-order execution allows a processor to make use of instruction cycles that would otherwise be wasted. Instead of executing instructions in the order they appear, the processor can rearrange the execution sequence to make better use of available execution units, while still committing results in program order so the program behaves as if it ran sequentially. This leads to higher utilization of processor resources, allowing more instructions to be processed in a shorter amount of time, which increases overall performance.
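To see how reordering fills otherwise idle cycles, the following sketch compares strict in-order issue with a simple out-of-order scheduler on a single-issue machine. It is an illustrative toy model, assuming one instruction issues per cycle, fixed per-instruction latencies (the 4-cycle load latency is made up), and register renaming so that each register is written only once. The function names are hypothetical.

```python
# Each entry: (destination, sources, latency in cycles). Names and latencies are made up.
program = [
    ("r1", ("r0",), 4),  # load: long latency
    ("r2", ("r1",), 1),  # add that needs the load result
    ("r3", ("r0",), 1),  # independent multiply
    ("r4", ("r0",), 1),  # independent add
]


def in_order_finish(program):
    """Cycle at which the last result is ready when issuing strictly in order."""
    ready = {}      # register -> cycle its value becomes available
    next_issue = 0  # earliest cycle the next instruction may issue
    finish = 0
    for dest, srcs, latency in program:
        # Stall until every source is ready; younger instructions wait too.
        start = max([next_issue] + [ready.get(src, 0) for src in srcs])
        ready[dest] = start + latency
        finish = max(finish, start + latency)
        next_issue = start + 1
    return finish


def out_of_order_finish(program):
    """Same machine, but each cycle issues the oldest instruction whose sources are ready."""
    produced = {dest for dest, _, _ in program}
    ready = {}
    pending = list(range(len(program)))  # program order = oldest first
    cycle = 0
    finish = 0
    while pending:
        for idx in pending:
            dest, srcs, latency = program[idx]
            # Sources not written by this program (e.g. r0) are ready from the start.
            if all(src not in produced or ready.get(src, float("inf")) <= cycle
                   for src in srcs):
                ready[dest] = cycle + latency
                finish = max(finish, cycle + latency)
                pending.remove(idx)
                break  # single issue: at most one instruction per cycle
        cycle += 1
    return finish


print(in_order_finish(program))      # 7
print(out_of_order_finish(program))  # 5
```

In the in-order run, the dependent add blocks the two independent instructions behind it; the out-of-order scheduler issues them while the load is still in flight, so the last result is ready at cycle 5 instead of cycle 7.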

Examples & Analogies

Imagine a chef in a kitchen who cooks dishes in the order they are received. If one dish takes longer than expected, the chef could fall behind. However, if the chef can start preparing simpler dishes (like salads) while waiting for another dish to finish (like a roast), he completes more orders overall. This is similar to how out-of-order execution optimizes the workflow in a processor.

Branch Prediction

Chapter 3 of 5

Chapter Content

Branch prediction

Reduces pipeline stalls

Detailed Explanation

Branch prediction is a technique used in processors to guess the direction of branch instructions (decisions that can lead the program down different paths). Accurately predicting which path a branch will take allows the processor to continue processing instructions without waiting, thereby reducing stalls that can occur when the processor has to pause to determine which instructions to execute next.
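A classic textbook way to predict a single branch is a saturating counter. The sketch below is a simplified illustration, not the predictor used in any Cortex-A core (real predictors also use branch history and branch target buffers); it assumes one counter per branch that starts in the weakest "taken" state, and the function name is hypothetical.

```python
def counter_predictor_accuracy(outcomes, bits=2):
    """Fraction of branch outcomes predicted correctly by one saturating counter."""
    max_state = (1 << bits) - 1
    threshold = 1 << (bits - 1)  # states >= threshold predict "taken"
    state = threshold            # start in the weakest "taken" state
    correct = 0
    for taken in outcomes:
        if (state >= threshold) == taken:
            correct += 1
        # Move toward the actual outcome, saturating at both ends.
        state = min(state + 1, max_state) if taken else max(state - 1, 0)
    return correct / len(outcomes)


# A loop branch: taken 9 times, then falls through once; the loop runs 10 times.
loop_branch = ([True] * 9 + [False]) * 10
print(counter_predictor_accuracy(loop_branch, bits=1))  # 0.81
print(counter_predictor_accuracy(loop_branch, bits=2))  # 0.9
```

The 1-bit counter mispredicts both the loop exit and the first iteration of the next loop, while the 2-bit counter's hysteresis absorbs the single not-taken outcome, so only the exit is mispredicted.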

Examples & Analogies

Consider driving a car and reaching a fork in the road where one path leads to a store and the other leads home. If you can anticipate which direction you will turn based on your route, you can accelerate smoothly rather than hesitating at the fork. Just like a driver who makes a quick decision can continue traveling efficiently, processors that use effective branch prediction can avoid delays and improve speed.

Instruction Prefetching

Chapter 4 of 5

Chapter Content

Instruction prefetching

Minimizes cache miss delays

Detailed Explanation

Instruction prefetching is a technique where the processor anticipates which instructions it will need to execute next and fetches them into the cache before they are actually needed. This reduces the time the processor might spend waiting for instructions to be fetched from slower memory, thus minimizing delays and enhancing overall performance.
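Next-line prefetching is one simple form of instruction prefetching. The sketch assumes a tiny fully associative LRU instruction cache, a 64-byte line size, and prefetches that complete instantly; real prefetchers must issue requests early enough to hide memory latency, and Cortex-A cores may use different policies. `count_misses` is a hypothetical helper.

```python
from collections import OrderedDict

LINE_SIZE = 64  # bytes per cache line (assumed)


def count_misses(fetch_addresses, capacity_lines=4, next_line_prefetch=False):
    """Count instruction-cache misses for a sequence of fetch addresses."""
    cache = OrderedDict()  # line number -> None, least recently used first
    misses = 0

    def insert(line):
        cache[line] = None
        cache.move_to_end(line)
        if len(cache) > capacity_lines:
            cache.popitem(last=False)  # evict the least recently used line

    for addr in fetch_addresses:
        line = addr // LINE_SIZE
        if line in cache:
            cache.move_to_end(line)  # hit: refresh LRU position
        else:
            misses += 1              # demand miss: wait for slower memory
            insert(line)
        if next_line_prefetch and (line + 1) not in cache:
            insert(line + 1)         # assume the prefetch completes in time
    return misses


# 256 sequential 4-byte instructions spanning 16 cache lines.
straight_line_code = range(0, 1024, 4)
print(count_misses(straight_line_code))                           # 16
print(count_misses(straight_line_code, next_line_prefetch=True))  # 1
```

For straight-line code, fetching the next line ahead of time turns 16 demand misses into 1. Code with many taken branches benefits less from this simple policy, which is one reason prefetching works best alongside branch prediction.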

Examples & Analogies

Think of instruction prefetching like a reader flipping pages in a book. If a reader knows the storyline, they might anticipate what happens next and start turning the pages ahead of time. Similarly, in instruction prefetching, the processor 'turns the pages' ahead to access the necessary instructions quickly, saving time and maintaining a smooth flow of execution.

NEON SIMD Unit

Chapter 5 of 5

Chapter Content

NEON SIMD unit

Enables vector processing for media and ML apps

Detailed Explanation

The NEON SIMD (Single Instruction, Multiple Data) unit in Cortex-A cores is designed to enhance performance for applications that require processing of multiple data sets simultaneously, such as multimedia processing and machine learning. This means that the processor can execute a single instruction on multiple pieces of data at once, significantly speeding up tasks that involve operations on large datasets.
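The effect of SIMD can be shown by counting "instructions" in a pure-Python emulation of a 16-lane byte add. This is only an emulation: on real hardware the vector step corresponds to a NEON intrinsic such as `vaddq_u8` from `arm_neon.h`, which adds sixteen `uint8_t` lanes held in one 128-bit register, wrapping on overflow. The function names, and the assumption that both inputs have the same length, are illustrative.

```python
LANES = 16  # a 128-bit NEON register holds sixteen 8-bit lanes


def scalar_add_u8(a, b):
    """One add per element, wrapping like uint8_t arithmetic."""
    out, ops = [], 0
    for x, y in zip(a, b):
        out.append((x + y) & 0xFF)
        ops += 1
    return out, ops


def simd_add_u8(a, b):
    """One emulated vector add per 16-element chunk (modelled on vaddq_u8)."""
    out, ops = [], 0
    for i in range(0, len(a), LANES):
        # A final partial chunk is handled here for simplicity; real code
        # would typically finish with a scalar tail loop.
        chunk = [(x + y) & 0xFF for x, y in zip(a[i:i + LANES], b[i:i + LANES])]
        out.extend(chunk)
        ops += 1
    return out, ops


a = list(range(64))
b = [250] * 64
scalar_out, scalar_ops = scalar_add_u8(a, b)
vector_out, vector_ops = simd_add_u8(a, b)
print(scalar_ops, vector_ops)      # 64 4
print(scalar_out == vector_out)    # True
```

Processing 64 bytes takes 64 scalar additions but only 4 vector additions, and both produce the same results.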

Examples & Analogies

You can think of NEON SIMD like a factory worker operating a press that stamps the same pattern onto a whole row of products in one stroke. One action (a single instruction) is applied to several products (data elements) at once, so production is faster than stamping each product separately. This is how NEON speeds up complex tasks: a single core processes many data elements in parallel.

Key Concepts

- Superscalar Design: Enables multiple instructions per cycle for increased throughput.

- Out-of-Order Execution: Improves efficiency by executing instructions as soon as their operands and resources are available.

- Branch Prediction: Reduces pipeline stalls by predicting the direction of branches before they are resolved.

- Instruction Prefetching: Minimizes wait times by fetching instructions before they are needed.

- NEON SIMD Unit: Enables parallel (vector) processing for multimedia and ML applications.

Examples & Applications

In a single clock cycle, a superscalar processor could execute an addition and a multiplication at the same time, provided neither instruction depends on the other's result.

Branch prediction lets a processor keep executing the instructions after a branch instead of stalling until the branch condition is resolved.
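Why prediction accuracy matters can be made concrete with a common first-order model of effective cycles per instruction (CPI). All numbers below are hypothetical, chosen only for illustration, and are not measured Cortex-A figures: $f_{\text{br}}$ is the fraction of instructions that are branches, $m$ the misprediction rate, and $P$ the misprediction penalty in cycles.

```latex
\mathrm{CPI}_{\text{eff}} = \mathrm{CPI}_{\text{base}} + f_{\text{br}} \times m \times P

% Hypothetical values: CPI_base = 1.0, f_br = 0.2, P = 12
m = 0.05:\quad \mathrm{CPI}_{\text{eff}} = 1.0 + 0.2 \times 0.05 \times 12 = 1.12
m = 0.25:\quad \mathrm{CPI}_{\text{eff}} = 1.0 + 0.2 \times 0.25 \times 12 = 1.60
```

Under these assumptions, a less accurate predictor makes the same program run about 43% slower (1.60 / 1.12), even though nothing else in the pipeline changed.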

Memory Aids

Rhymes

In a pipeline, don't fall behind,

Stories

Imagine a busy restaurant kitchen where chefs can prepare multiple dishes at once (superscalar) and they switch to the next dish as ingredients are ready (out-of-order execution). They also guess which dish will be ordered most often (branch prediction) and chop veggies before they're needed (instruction prefetching).

Memory Tools

Remember 'BOSS': 'Branch prediction, Out-of-order execution, Superscalar for Speed!'

Acronyms

Use 'SPOON'

Superscalar

Prefetching

Out-Of-order execution (supplies both O's)

NEON SIMD for media and neural-network workloads

Glossary

- Superscalar Design

An architecture that allows multiple instructions to be executed in parallel during a single clock cycle.

- Out-of-Order Execution

A method that enables execution of instructions as resources are available rather than strictly in order.

- Branch Prediction

A technique that guesses the direction of branches to reduce pipeline stalls.

- Instruction Prefetching

Fetching instructions into cache before they are needed to minimize wait times due to cache misses.

- NEON SIMD Unit

An advanced vector processing unit that enhances processing capabilities for media and machine learning applications.
