Performance Metrics for Cortex-A Architectures

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Cortex-A Architectures

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Today we will explore Cortex-A architectures. Can anyone tell me what Cortex-A processors are typically used for?

They are used in mobile devices like smartphones and tablets.

Exactly! They offer a blend of performance and energy efficiency. They support both 32-bit and 64-bit architectures, which is crucial for modern applications. Let's remember the acronym PPA for Performance, Power Efficiency, and Area.

What does the balance in PPA mean for developers?

Great question! It means that developers must consider how much performance they need versus the power they can afford to use, especially in battery-powered devices.

So to recap, Cortex-A processors are most common in smartphones and tablets, focusing on high performance while maintaining energy efficiency.

Key Performance Metrics

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Next, let’s talk about how we evaluate the performance of these processors. Do any of you remember the key performance metrics?

Clock speed, CPI, and IPC?

Correct! Let’s break these down. Clock speed measures how fast the CPU processes instructions. What happens if the clock speed is increased?

The execution becomes faster, but it might use more power.

Exactly! Now, CPI tells us the average cycles needed per instruction. A lower CPI is better. Can anyone calculate execution time based on the formula: Execution Time = Instruction Count × CPI × Clock Cycle Time?

If I have 100 instructions, a CPI of 2, and a clock cycle time of 0.01 seconds, the execution time would be 100 × 2 × 0.01 = 2 seconds.

Perfect! Finally, IPC is about how many instructions are processed in one clock cycle. So a higher IPC signifies better throughput. Let’s summarize: we assess performance through clock speed, CPI, and IPC, and they relate closely to execution efficiency.

Microarchitecture Factors Affecting Performance

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Now, let's dive into the microarchitectural factors that impact performance. Who can name some features of Cortex-A cores?

Superscalar designs and out-of-order execution!

Absolutely! Superscalar design allows multiple instructions to be executed at once. This leads to increased performance. Remember that 'O' in OOE stands for Out-of-Order Execution, which helps maintain high throughput by allowing the CPU to schedule instructions based on availability rather than strict order.

What about those pipeline stalls?

Good point! Branch prediction helps reduce those stalls significantly. Can anyone explain how instruction prefetching helps?

It minimizes cache misses by fetching instructions before they're needed.

Exactly. Finally, features like the NEON SIMD unit enhance performance for multimedia applications. To sum up today's lesson, microarchitecture factors like superscalar design and out-of-order execution are crucial for maximizing performance.

Cache and Memory Hierarchy

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Next, let’s discuss cache and memory hierarchy. Why do you think cache size matters?

A larger cache means faster access to instructions and data?

Right! The L1 cache can be between 16 to 64 KB, providing the fastest access. What about the L2 cache?

It’s shared among cores and larger, between 256 KB to 2 MB!

Exactly that! And what is L3 cache for?

It's optional and shared by all cores in higher-end chips?

Exactly! Cache hit rates directly impact memory latency, and a high cache hit rate means fewer delays. Let’s summarize: Cache size and design play crucial roles in enhancing the performance of Cortex-A architectures.

Benchmarking and Performance Comparisons

🔒 Unlock Audio Lesson

Sign up and enroll to listen to this audio lesson

Finally, let's look at benchmarking performance. Who can name some benchmarking tools for evaluating Cortex-A performance?

Like CoreMark and Geekbench?

Correct! CoreMark assesses embedded core performance, while Geekbench gauges overall CPU handling of integers, floats, and cryptography tasks. How do these tools help us?

They help us compare different architectures and their performance in real scenarios.

Absolutely! And let's compare the Cortex-A cores. For instance, the Cortex-A57 runs up to 2.0 GHz with a focus on high performance, while the Cortex-A53 may run at 1.5 GHz, emphasizing energy efficiency. Summarizing, benchmarking tools allow us to evaluate and compare the performance of various Cortex-A cores effectively.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

In this section, we explore the Cortex-A family of ARM-based processors, focusing on key performance metrics such as clock speed, CPI, IPC, and power efficiency. The influence of microarchitecture features, cache design, and benchmarking methods are also discussed, along with comparisons of different Cortex-A cores and their real-world performance considerations.

Detailed

Performance Metrics for Cortex-A Architectures

Introduction to Cortex-A Architectures

Cortex-A processors are ARM-based architectures optimized for high performance and energy efficiency, primarily used in mobile and embedded systems. They support 32-bit and 64-bit architectures, striking a balance between performance, power efficiency, and area (PPA).

Key Performance Metrics

Several metrics are vital for assessing Cortex-A processor performance:

- Clock Speed (GHz): The frequency at which instructions are executed; higher speeds increase execution but also power consumption.

- CPI (Cycles Per Instruction): Represents the average number of cycles required per instruction, determined by the formula:

Execution Time = Instruction Count × CPI × Clock Cycle Time

Lower CPI values indicate better performance.

- IPC (Instructions Per Cycle): Measures the number of instructions executed in a cycle; higher IPC signifies better utilization of resources.

Microarchitecture Factors Affecting Performance

Cortex-A cores adopt architectural enhancements, including:

- Superscalar design - enables executing multiple instructions per cycle.

- Out-of-order execution - improves throughput.

- Branch prediction - minimizes stalls.

- Instruction prefetching - reduces cache miss delays.

- NEON SIMD unit - supports vector processing for multimedia applications.

Cache and Memory Hierarchy

Cache size plays a crucial role in performance:

- L1 Cache (I+D): 16–64 KB (fast access for instructions and data).

- L2 Cache: 256 KB–2 MB (shared, faster than RAM).

- L3 Cache: (optional, shared by all cores for higher-end chips).

Cache hit rates are directly related to memory latency and execution speed.

Power Efficiency and Performance per Watt

Cortex-A designs frequently employ dynamic voltage and frequency scaling (DVFS) for power management, and optimized pipeline stages help reduce energy use.

Benchmarking Cortex-A Performance

Key benchmarking tools evaluate various performance metrics across areas such as embedded core performance, general CPU tasks, workload simulation, and mobile system performance, assessing throughput, memory performance, and multi-threading efficiency.

Performance Comparisons of Cortex-A Cores

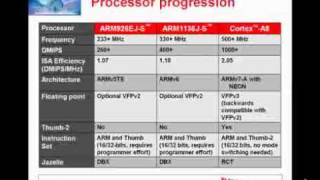

A comparison of various Cortex-A cores highlight their architectures, maximum frequencies, and key features:

- Cortex-A53: ~1.5 GHz - energy-efficient.

- Cortex-A57: ~2.0 GHz - high-performance design.

- Cortex-A75: ~2.6 GHz - optimized IPC.

- Cortex-A78: ~3.0 GHz - flagship mobile.

- Cortex-A510: ~2.0 GHz - efficiency-oriented.

Factors Influencing Real-World Performance

Real-world performance affected by:

- Type of workload (e.g., multimedia vs. compute).

- Thermal throttling - impacts sustained performance.

- OS and scheduler - influences core utilization.

- Compiler optimization - enhances performance and efficiency.

Summary of Key Concepts

Cortex-A evaluation utilizes metrics like clock speed, CPI, IPC, and power efficiency. Microarchitecture features enhance performance, while cache design and hierarchy are crucial for sustainable operation.

Youtube Videos

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to Cortex-A Architectures

Chapter 1 of 7

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Cortex-A processors are a family of ARM-based processors designed for high-performance, energy-efficient computing in mobile, embedded, and IoT systems.

- Widely used in smartphones, tablets, and Linux-based embedded systems.

- Support both 32-bit (ARMv7-A) and 64-bit (ARMv8-A/ARMv9-A) architectures.

- Balance performance, power efficiency, and area (PPA).

Detailed Explanation

Cortex-A processors are specialized chips made by ARM. They excel in tasks requiring both speed and low power consumption, making them perfect for mobile devices like smartphones and tablets. These processors support two types of computing architectures: 32-bit and 64-bit, allowing them to run a wide range of applications. The design aim is to balance three important factors: performance (how fast they work), power efficiency (how much energy they use), and area (the physical space the chip occupies).

Examples & Analogies

Think of Cortex-A processors like a highly skilled chef working in a small kitchen (the chip). The chef is quick (high-performance), uses energy-efficient appliances (power efficiency), and organizes the kitchen well (area), allowing them to create delicious meals (applications) efficiently.

Key Performance Metrics

Chapter 2 of 7

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Evaluating Cortex-A processor performance involves analyzing several core metrics:

1. Clock Speed (GHz)

- Frequency at which the processor executes instructions.

- Higher clock speed → faster instruction execution, but may increase power usage.

2. CPI – Cycles Per Instruction

- Average number of clock cycles needed per instruction.

- Formula:

Execution Time = Instruction Count × CPI × Clock Cycle Time

- Lower CPI indicates better performance.

3. Instructions Per Cycle (IPC)

- Number of instructions completed in one clock cycle.

- Higher IPC shows better utilization of execution units.

Detailed Explanation

To assess how well Cortex-A processors perform, we look at several metrics. First is the Clock Speed, measured in GHz, which tells us how quickly a processor can execute instructions. While a higher clock speed can mean faster task execution, it can also lead to higher power consumption. Next, we have CPI, or Cycles Per Instruction, which represents the average number of clock cycles that the processor needs to execute a single instruction. There's a formula to calculate the execution time based on instruction count, CPI, and clock cycle time. Finally, IPC, or Instructions Per Cycle, indicates how many instructions can be processed in one clock cycle; a higher IPC means the processor is making better use of its capabilities.

Examples & Analogies

Consider a factory as a way to understand these metrics. The clock speed is like the speed of the conveyor belt moving products; faster speeds mean more products can be made in a given time. CPI represents the efficiency of workers (machine cycles needed for each product), where fewer cycles mean better efficiency. IPC is how many products (instructions) workers can complete at once; more workers (higher IPC) working together lead to faster throughput.

Microarchitecture Factors Affecting Performance

Chapter 3 of 7

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Cortex-A cores include various architectural enhancements:

- Superscalar design: Allows multiple instructions per cycle.

- Out-of-order execution: Increases throughput.

- Branch prediction: Reduces pipeline stalls.

- Instruction prefetching: Minimizes cache miss delays.

- NEON SIMD unit: Enables vector processing for media and ML apps.

Detailed Explanation

The performance of Cortex-A processors is significantly influenced by their microarchitecture features. The Superscalar design permits multiple instructions to be processed simultaneously, which boosts performance. Out-of-order execution allows instructions to be run as resources become available, rather than strictly in the order they appear, enhancing throughput. Branch prediction guesses which way a branch will go in the code before this decision is known, helping avoid delays. Instruction prefetching fetches instructions ahead of time to prevent delays caused by cache misses. Finally, the NEON SIMD unit lets the processor handle multiple data points in a single instruction for tasks like media processing and machine learning, further enhancing performance.

Examples & Analogies

Imagine a busy restaurant staff. The Superscalar design is like having multiple chefs who each handle different parts of a meal at the same time. Out-of-order execution is similar to a chef preparing ingredients whenever they are ready rather than in a fixed order. Branch prediction can be likened to staff anticipating what the next order will be, preparing in advance to reduce waiting times. Instruction prefetching is like having ingredients ready ahead of time, so there's no delay when it's time to cook. Finally, NEON SIMD functions like an efficient assembly line, where one person handles multiple tasks simultaneously.

Cache and Memory Hierarchy

Chapter 4 of 7

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Efficient cache design greatly enhances Cortex-A performance:

Cache Size Role

- L1 (I + D): 16–64 KB each. Fast access to instructions/data.

- L2 Cache: 256 KB–2 MB. Shared among cores (faster than RAM).

- L3 Cache: Optional. Shared by all cores (in higher-end chips).

- Cache hit rates directly influence memory latency and execution speed.

Detailed Explanation

The cache and memory hierarchy is critical for performance in Cortex-A architectures. The L1 cache, divided into instruction and data caches, is very fast but has limited capacity (16-64 KB). It provides quick access to the most frequently used data and instructions. The L2 cache, which is larger (256 KB–2 MB), is shared among the cores and supplies data to the L1 cache when needed, offering faster access than RAM. Some processors also feature an L3 cache, which is even larger and shared among all cores in higher-end chips. It's important to maintain high cache hit rates, meaning that the processor successfully finds the data it needs in the cache rather than going to slower memory, which influences overall execution speed.

Examples & Analogies

Think of cache memory like a restaurant pantry. The L1 cache is the small section right next to the kitchen where the most frequently used ingredients are kept for quick access. L2 cache is like a larger pantry that stores more ingredients, shared by the entire kitchen team, allowing chefs to quickly retrieve items that may not fit in the immediate area. An optional L3 cache can be seen as a bulk storage room for less frequently accessed goods. Just as chefs want to maximize the frequency of grabbing ingredients from the pantry (cache hit rates), processors aim to minimize delays by having the necessary data readily available in the caches.

Power Efficiency and Performance per Watt

Chapter 5 of 7

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

ARM Cortex-A designs are optimized for performance per watt:

- Dynamic voltage and frequency scaling (DVFS) adjusts power consumption dynamically.

- Efficient pipeline stages and simplified instructions reduce energy use.

- Crucial for battery-powered devices and thermally constrained systems.

Detailed Explanation

Cortex-A processors are engineered to provide the best performance per watt, which is essential in battery-powered devices like smartphones. Dynamic Voltage and Frequency Scaling (DVFS) allows the processor to adjust its voltage and frequency based on current needs, reducing power consumption when full performance isn’t necessary. This is coupled with efficient pipeline stages that optimize how instructions are executed and simplified instructions that reduce the complexity and energy required to execute tasks. This focus on power efficiency is crucial in environments where heat and battery life are concerns.

Examples & Analogies

Consider a car that adjusts its speed based on the road conditions. Just like how the car will slow down to save gas in less demanding situations, DVFS enables processors to throttle back their power usage when full speed isn't necessary. Similarly, an efficient pipeline is like an optimized engine design that reduces fuel consumption while maximizing output. This ensures devices can last longer on a charge, like a car going further without needing a refill.

Benchmarking Cortex-A Performance

Chapter 6 of 7

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Common tools and metrics:

Benchmark Focus Area

- CoreMark: Embedded core performance.

- Geekbench: General CPU (integer, float, crypto).

- SPEC CPU: Workload simulation, compute-intensive apps.

- AnTuTu: Mobile system performance.

Metrics Evaluated:

- Integer and floating-point throughput.

- Memory and I/O performance.

- Multi-threaded vs. single-threaded efficiency.

Detailed Explanation

To measure the performance of Cortex-A processors, several benchmarking tools and metrics are used. CoreMark focuses on the performance of embedded cores and is often used for efficiency in those contexts. Geekbench offers a more general view by assessing a range of CPU capabilities, including integer and floating-point performance. SPEC CPU focuses on simulating real-world workloads, especially for compute-intensive applications. AnTuTu evaluates mobile system performance across various metrics. These benchmarks help assess aspects like throughput for different types of data, performance of memory and input/output operations, and how well a processor performs under multi-threading versus single-threading scenarios.

Examples & Analogies

Benchmarking a processor is like testing a car's mileage and performance in different conditions. Just as a car can be assessed on its speed, fuel efficiency, and ability to handle various terrains, Cortex-A processors are evaluated based on distinct performance metrics relevant to their intended uses. Each benchmarking tool highlights different strengths and weaknesses, similar to how different tests reveal various aspects of a car's capabilities.

Factors Influencing Real-World Performance

Chapter 7 of 7

🔒 Unlock Audio Chapter

Sign up and enroll to access the full audio experience

Chapter Content

Considering various factors that affect real-world performance:

- Workload Type: Multimedia, ML, or OS tasks affect utilization.

- Thermal throttling: Sustained performance depends on heat dissipation.

- Operating System and Scheduler: Core utilization depends on task assignment.

- Compiler optimization: Efficient binaries improve pipeline and cache behavior.

Detailed Explanation

Real-world performance of Cortex-A processors is impacted by various factors beyond just technical specifications. The type of workload—whether it’s multimedia (video processing), machine learning tasks, or operating system functions—can influence how efficiently the processor is utilized. Thermal throttling can occur when the processor generates too much heat and slows down to prevent damage, thus affecting sustaining performance. The operating system and its scheduler also play a crucial role in how tasks are assigned to cores, impacting core utilization. Lastly, compiler optimizations that produce efficient binaries can enhance pipeline processing and cache behavior, improving overall execution.

Examples & Analogies

Think of running a marathon versus sprinting. The type of race (workload type) can determine how much energy you need to use. If it's hot (thermal throttling), you might need to slow down to avoid overheating. In the same way, the coach (operating system) decides where to send runners (assign tasks) and how well they're trained (compiler optimization) makes all the difference in how well they perform.

Key Concepts

-

Cortex-A Processors: Family of ARM processors for high-performance applications such as mobile devices.

-

Key Performance Metrics: Includes clock speed, CPI, and IPC that measure processors' operational efficiency.

-

Microarchitecture Features: Enhanced mechanisms like out-of-order execution and superscalar designs enhance performance.

-

Cache and Memory Hierarchy: The design and size of cache memory significantly affect overall performance.

-

Power Efficiency: Essential in mobile contexts, considering dynamic voltage and frequency scaling.

Examples & Applications

Cortex-A53: A processor best suited for devices requiring low power usage but maintaining reasonable performance.

Cortex-A78: Known for superior IPC, making it ideal for flagship mobile devices that require robust processing power.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

For Cortex-A processors, swift and bright, clock speed and CPI make performance light.

Stories

Imagine Cortex-A as a team of runners, where each runner represents a CPU instruction. The faster they run (higher clock speed), the more laps (instructions) can be completed, but too many laps can make them tired (higher power usage).

Memory Tools

Remember 'CIP' - Clock speed, Instructions processed (IPC), and CPI. These are key metrics for evaluating Cortex-A performance!

Acronyms

PPA - Performance, Power Efficiency, Area! Keep this in mind when thinking about Cortex-A architectures.

Flash Cards

Glossary

- CortexA

A family of ARM-based processors designed for high-performance, energy-efficient computing.

- CPI

Cycles Per Instruction; the average number of clock cycles needed per instruction.

- IPC

Instructions Per Cycle; the number of instructions completed in one clock cycle, indicating resource utilization.

- Superscalar Design

An architectural feature allowing multiple instructions to be executed simultaneously in a single clock cycle.

- OutofOrder Execution

A method of instruction scheduling that allows instructions to be processed in an order different from their original order to enhance throughput.

- Branch Prediction

A technique used to predict the direction of branch instructions to reduce delays in instruction pipeline.

- NEON SIMD

A Single Instruction Multiple Data (SIMD) architecture extension for ARM processors, optimizing multimedia and machine learning applications.

- DVFS

Dynamic Voltage and Frequency Scaling; a power management technique that adjusts the voltage and frequency according to workload requirements.

- Cache Hit Rate

The percentage of memory access requests that are successfully served by the cache.

Reference links

Supplementary resources to enhance your learning experience.