Designing Memory Hierarchy

A student-teacher conversation explaining the topic in a relatable way.

Memory Hierarchy Levels

Today's class is about memory hierarchy. Can anyone tell me what levels of memory hierarchy you know?

I think there is cache and main memory.

Great! So we have registers, cache, main memory, and secondary storage. Let’s break them down. Registers are the fastest. Can anyone explain why?

Because they are inside the CPU itself, right?

Exactly! Now, what about cache? How does it help improve performance?

It stores frequently accessed data to speed up access times.

Yes! Cache reduces the time it takes to access data from main memory. Remember: 'Cache saves time, makes performance shine!'

And what about main memory?

Good question! Main memory stores the data and programs that the CPU is currently using. It’s slower than cache but much larger.

In summary, the levels of memory hierarchy are critical for determining a system’s overall performance. We have registers as the fastest, followed by cache, main memory, and secondary storage.

Cache Design

Let’s dive into cache design. Can anyone tell me what cache aims to achieve?

To reduce latency?

Correct! Reducing latency is the key goal, so the cache itself must be fast to access. What factors affect cache performance?

The size of the cache and the speed of accessing it?

Yes! Additionally, organizations like direct-mapped, fully associative, and set-associative determine where the cache may place a given block of data. Can anyone think of a way to remember these types?

We could use an acronym, like 'DFS' for Direct-mapped, Fully associative, Set-associative.

Wonderful! Let’s summarize: Effective cache design reduces access latency by choosing an appropriate size and an effective mapping technique.
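To make the three mapping schemes concrete, here is a minimal sketch of how a cache might split an address into a tag, a set index, and a block offset. The function name `split_address` and the sizes (64-byte blocks, 64 sets) are illustrative assumptions, not values from the lesson. Real hardware typically uses power-of-two sizes so that these fields are plain bit slices.

```python
def split_address(addr: int, block_size: int = 64, num_sets: int = 64):
    """Split an address into (tag, set_index, block_offset).

    block_size and num_sets are hypothetical example values.
    """
    offset = addr % block_size          # byte position inside the block
    block_number = addr // block_size   # which memory block the byte lives in
    set_index = block_number % num_sets # which cache set the block maps to
    tag = block_number // num_sets      # identifies the block within that set
    return tag, set_index, offset


if __name__ == "__main__":
    print(split_address(0x12345))              # set-associative or direct-mapped
    print(split_address(0x12345, num_sets=1))  # fully associative: a single set
```

A direct-mapped cache is the special case with one block per set, and a fully associative cache is the special case with a single set, so the same split covers all three organizations.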

Virtual Memory and MMUs

Now, let’s talk about virtual memory. Who can explain its importance?

It helps with running larger programs than the physical memory can hold?

Exactly! It allows the system to use disk space as a backing store for data that does not fit in RAM. How does the MMU play a role in this?

The MMU translates virtual addresses to physical addresses.

Yes! MMUs are essential for managing how virtual memory works. Remember the phrase: 'MMUs Map Memory Use.' Can anyone summarize the key roles of virtual memory and MMUs?

Virtual memory allows us to run larger applications, while MMUs handle the translation of addresses.

Perfect! This session reinforces that virtual memory and MMUs are crucial to managing and optimizing performance in computer systems.

Introduction & Overview

Standard

The section elaborates on the various levels of memory hierarchy, including registers, cache, main memory, and secondary storage. It covers cache and virtual memory design as well as the role of Memory Management Units (MMUs) in translating addresses, emphasizing their collective importance in optimizing speed and minimizing latency.

Detailed

Designing Memory Hierarchy

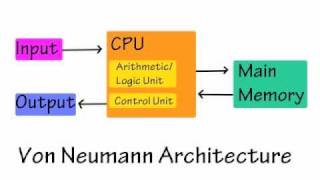

The design of memory hierarchy plays a significant role in the overall performance of computer systems. It consists of several levels:

1. Registers: The fastest type of memory, located within the CPU, providing quick access to frequently used data.

2. Cache: A volatile memory, smaller and faster than main memory, that stores copies of frequently accessed data to reduce latency.

3. Main Memory: Also known as RAM, this is the primary storage used by the CPU to hold data and instructions that are actively in use.

4. Secondary Storage: Non-volatile storage such as HDDs and SSDs used for long-term data storage.

The section then covers cache design, where the aim is to minimize access latency, which can significantly affect system performance. It also discusses virtual memory design, showing how disk space can serve as an extension of RAM. Finally, it highlights Memory Management Units (MMUs), which translate virtual addresses into physical addresses so that large programs and extensive data sets can run smoothly.

Memory Hierarchy Levels

Chapter 1 of 4

Chapter Content

Registers, cache, main memory, and secondary storage

Detailed Explanation

The memory hierarchy in a computer system is a structured way of organizing memory that helps optimize performance. At the top, we have registers, the fastest type of memory, which the CPU uses for immediate data access. Below that is cache memory, which is smaller and faster than main memory and temporarily holds frequently accessed data to speed up processing. Main memory (also known as RAM) holds data that is actively being used by the system but is slower than cache. Finally, secondary storage, such as hard drives or SSDs, is used for long-term data storage and has much higher latency than main memory. The key point is that by organizing memory in this way, systems can balance speed and cost, ensuring that the most frequently needed data is the quickest to access.
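One common way to quantify this balance is the average memory access time: the hit time of the fast level plus the miss rate multiplied by the penalty of going to the slower level. The sketch below assumes a single cache level in front of main memory. The function name and all latency figures are hypothetical, chosen only to show the shape of the calculation.

```python
def average_access_time(hit_time: float, miss_rate: float, miss_penalty: float) -> float:
    """Return hit_time + miss_rate * miss_penalty (all times in the same unit)."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


if __name__ == "__main__":
    # Hypothetical numbers: 1 ns for a cache hit, 100 ns extra on a miss.
    for miss_rate in (0.01, 0.05, 0.20):
        amat = average_access_time(1.0, miss_rate, 100.0)
        print(f"miss rate {miss_rate:.0%}: average access time {amat:.1f} ns")
```

Under these assumed numbers, even a small miss rate dominates the average, which is why keeping the most frequently needed data in the fastest level matters so much.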

Examples & Analogies

Think of the memory hierarchy like a library. If you need a book immediately, you would go to the front desk where the librarian (registers) keeps copies of the most popular books. If that book is checked out, you might find another copy on a nearby shelf (cache). If you need a general book, you would search through the main sections of the library (main memory). Lastly, for rare or old books, you might check the archives (secondary storage), which takes longer to access but contains information that is still important.

Cache Design

Chapter 2 of 4

Chapter Content

How to design caches to optimize speed and minimize latency

Detailed Explanation

Cache design is critical in ensuring that the CPU can access data quickly without having to rely on slower main memory. Effective cache design includes choosing the right size, associativity, and replacement policy. Size refers to how much data the cache can hold; larger caches can store more data but may also be slower to access. Associativity describes how many locations in the cache a given block of memory may occupy: higher associativity gives each block more candidate locations, which reduces the chance of a cache miss (when data isn’t found in the cache) caused by blocks competing for the same location. The replacement policy dictates which block to evict when a new one must be brought in and no free location is available. The goal of these design choices is to reduce latency, the delay between the CPU requesting data and receiving it.
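The interplay of size, associativity, and replacement policy can be explored with a toy simulator. The sketch below is an illustration under stated assumptions, not a model of any real processor: the class name `SetAssociativeCache`, the 64-byte block size, and the choice of least-recently-used (LRU) replacement are all assumptions, since the text does not name a specific policy.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy cache: num_sets sets, each holding up to `ways` blocks, LRU eviction."""

    def __init__(self, num_sets: int, ways: int, block_size: int = 64):
        self.num_sets = num_sets
        self.ways = ways
        self.block_size = block_size
        # One OrderedDict per set; keys are tags, least recently used first.
        self.sets = [OrderedDict() for _ in range(num_sets)]
        self.hits = 0
        self.misses = 0

    def access(self, addr: int) -> bool:
        """Access an address; return True on a hit, False on a miss."""
        block = addr // self.block_size
        index = block % self.num_sets
        tag = block // self.num_sets
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)      # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        if len(lines) >= self.ways:
            lines.popitem(last=False)   # evict the least recently used block
        lines[tag] = True
        return False


def count_misses(num_sets: int, ways: int, trace) -> int:
    """Run an address trace through a fresh cache and return the miss count."""
    cache = SetAssociativeCache(num_sets, ways)
    for addr in trace:
        cache.access(addr)
    return cache.misses


if __name__ == "__main__":
    # Two addresses that collide in a direct-mapped cache with 8 sets.
    trace = [0, 512] * 5
    print("direct-mapped (8 sets x 1 way):", count_misses(8, 1, trace), "misses")
    print("2-way (4 sets x 2 ways):      ", count_misses(4, 2, trace), "misses")
```

Both configurations hold eight blocks in total. With this trace the direct-mapped cache misses on all 10 accesses because the two blocks keep evicting each other, while the 2-way cache misses only on the first 2.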

Examples & Analogies

Consider a chef in a busy restaurant kitchen. If all the ingredients are put away in a pantry (main memory), it takes longer for the chef to prepare a dish. To speed things up, the chef keeps frequently used ingredients (cache) on the countertop where they’re easily accessible. The size of the countertop can be thought of as the cache size—the larger it is, the more ingredients that can be kept handy, but if it’s too large, it can become cluttered and take time to access various items. The way ingredients are organized also matters: keeping them grouped (associativity) allows the chef to quickly find what they need without searching through unrelated items.

Virtual Memory Design

Chapter 3 of 4

Chapter Content

The role of virtual memory in managing large programs and data sets

Detailed Explanation

Virtual memory is a crucial technology that allows a computer to compensate for physical memory shortages by temporarily transferring data from RAM to disk storage. This gives the illusion that the system has more memory than it physically does, so larger applications, and several applications at once, can run without exhausting RAM. When a program accesses data that is part of its virtual memory but not currently in RAM, the system retrieves it from disk storage, which is much slower than accessing RAM. This management involves keeping track of which parts of memory are in use and ensuring that data is swapped in and out efficiently.
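The swapping described above can be sketched as a small simulation that counts how often a needed page is missing from RAM. This is a simplified illustration: the function name `count_page_faults`, the page reference sequence, and the use of least-recently-used (LRU) replacement are assumptions made for the example, and real operating systems use more elaborate policies.

```python
from collections import OrderedDict


def count_page_faults(reference_string, num_frames: int) -> int:
    """Simulate demand paging with LRU replacement; return the page-fault count."""
    frames = OrderedDict()  # pages resident in RAM, least recently used first
    faults = 0
    for page in reference_string:
        if page in frames:
            frames.move_to_end(page)    # page is in RAM: just note the recent use
            continue
        faults += 1                     # page is not in RAM: fetch it from disk
        if len(frames) >= num_frames:
            frames.popitem(last=False)  # swap out the least recently used page
        frames[page] = True
    return faults


if __name__ == "__main__":
    refs = [1, 2, 3, 1, 4, 2, 5, 1, 2, 3]  # hypothetical page accesses
    for frames in (3, 5):
        print(f"{frames} frames: {count_page_faults(refs, frames)} page faults")
```

With this reference sequence, 3 frames of RAM produce 8 page faults, while 5 frames produce only 5 (one per distinct page). Fewer frames mean more trips to slow disk storage.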

Examples & Analogies

Imagine a bookshelf that can only hold a certain number of books (RAM). When you want to read a large number of books (programs), you can keep only a few on the shelf while others are stored in boxes (disk storage) elsewhere. When you want to read a book that’s not on the shelf, you can take that book out of the box and put another book back into storage. This way, even if the shelf is full, you can still read all the books you need, just by swapping them in and out as necessary.

Memory Management Units (MMUs)

Chapter 4 of 4

Chapter Content

Translating virtual addresses to physical addresses

Detailed Explanation

Memory Management Units (MMUs) are specialized hardware components that handle the translation of virtual addresses (used by programs) to physical addresses (used by the hardware) in memory. When a program wants to access data, it uses a virtual address that doesn’t correspond directly to a physical location in RAM. The MMU translates this address to the actual physical address in memory, allowing the CPU to retrieve the correct data. This process also enables features like memory protection, ensuring different programs do not interfere with each other’s memory space, which contributes to system stability and security.
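The translation step can be sketched in software as a lookup in a page table. The sketch below assumes a single-level page table stored as a dictionary and a 4096-byte page size. The names `translate` and `PageFault` are hypothetical, and a real MMU performs this lookup in hardware rather than in code like this.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


class PageFault(Exception):
    """Raised when a virtual page has no mapping in the page table."""


def translate(page_table: dict, virtual_addr: int) -> int:
    """Translate a virtual address to a physical address.

    page_table maps virtual page numbers to physical frame numbers.
    """
    vpn, offset = divmod(virtual_addr, PAGE_SIZE)
    if vpn not in page_table:
        raise PageFault(f"no mapping for virtual page {vpn}")
    frame = page_table[vpn]
    return frame * PAGE_SIZE + offset   # the offset within the page is unchanged


if __name__ == "__main__":
    # Two programs with separate page tables (made-up mappings).
    program_a = {0: 5, 1: 2}
    program_b = {0: 9}
    print(hex(translate(program_a, 0x1234)))  # virtual page 1 -> frame 2
    print(hex(translate(program_b, 0x0010)))  # virtual page 0 -> frame 9
```

Because each program has its own table, the same virtual address can map to different physical frames, and an address with no mapping is rejected instead of reaching another program's memory. This is the basis of the memory protection mentioned above.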

Examples & Analogies

Think of the MMU as a translator at an international airport. Passengers (programs) have tickets written in their own languages (virtual addresses), but the airport system (physical memory) only recognizes tickets in the local language. The translator (MMU) helps convert the tickets so that each passenger can successfully navigate to their gate (the correct location in memory) without confusion, ensuring a smooth and safe travel experience.

Key Concepts

- Memory Hierarchy: The structured arrangement of different types of memory from fastest to slowest.

- Cache Design: The methodology in which cache memory is structured to optimize speed and reduce latency.

- Virtual Memory: A memory management capability that allows the execution of programs that may not entirely fit in physical memory.

- Memory Management Units: Hardware components that manage the mapping of virtual addresses to physical addresses.

Examples & Applications

The memory hierarchy can be visualized as a pyramid with registers at the top, followed by cache and main memory, and secondary storage at the bottom.

In a real-world application, a computer running a large software suite may use virtual memory to extend RAM capabilities by utilizing part of the hard drive.

Memory Aids

Rhymes

Cache saves time, makes performance shine!

Stories

Imagine a library where the fastest readers keep a small collection of books (registers) within reach, while others must go to a nearby room (cache), the main stacks (main memory), or even another building (secondary storage) to find what they need.

Memory Tools

Remember: 'RCM' for Registers, Cache, Main Memory, in order from fastest to slowest.

Acronyms

Use 'RCMS' for Registers, Cache, Main, Secondary when thinking about memory hierarchy from fastest to slowest.

Glossary

- Registers

The fastest type of memory, located inside the CPU.

- Cache

A volatile memory, smaller and faster than main memory, that temporarily holds copies of frequently used data.

- Main Memory

The primary storage (RAM) used by the CPU for active data and instructions.

- Secondary Storage

Non-volatile storage such as hard drives or SSDs for long-term data retention.

- Virtual Memory

A technique that uses disk space to extend physical memory, so that programs larger than RAM can run.

- Memory Management Unit (MMU)

Hardware that translates virtual addresses into physical addresses for the CPU.
