Multithreading - 9

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Multithreading

Teacher: Today we're discussing multithreading, which allows multiple threads to run simultaneously in a program. Can anyone guess why this might be important?

Student: Is it to make programs run faster?

Teacher: Exactly! It improves CPU utilization by performing tasks concurrently. Remember the acronym 'CUP' - for 'Concurrent Use of Processor'.

Student: So, what's the main difference between a thread and a process?

Teacher: Great question! Threads share the same memory space within a process, while processes are independent and have their own memory space. Think of threads as workers in the same office, while processes are separate offices.

Student: I see! So that makes communication between threads easier, right?

Teacher: Exactly, but it also poses risks of data corruption without proper synchronization. We'll dive into that soon.

Multithreading Models

Teacher: Now let's explore different multithreading models. What’s the many-to-one model?

Student: That sounds like many user threads mapped to a single kernel thread?

Teacher: Correct! This model is simple, but because everything runs on one kernel thread it cannot take advantage of multiple cores. Can anyone explain the one-to-one model?

Student: It maps each user thread to a kernel thread, maximizing the use of multiple cores!

Teacher: Exactly! And the many-to-many model multiplexes many user threads over multiple kernel threads, which adds flexibility. Remember, 'Flexibility Brings Efficiency'!

Thread Creation and Management

Teacher: Let's discuss thread management. Who can tell me how threads are created?

Student: I think they are created using API calls like pthread_create?

Teacher: Correct! After creation, they need scheduling. What strategies do we have?

Student: Preemptive and cooperative scheduling!

Teacher: Good job! Preemptive scheduling allows the OS to interrupt threads, while cooperative scheduling requires threads to yield control voluntarily. Always remember 'PP-CY' - for 'Preemptive before Cooperative Yield!'

Thread Synchronization

Teacher: We're moving on to synchronization. Why is it crucial in multithreaded environments?

Student: To avoid race conditions, right?

Teacher: Exactly! Race conditions can lead to unpredictable behavior. Can anyone explain what a mutex does?

Student: A mutex ensures only one thread accesses a resource at a time!

Teacher: Great! Mutex stands for 'Mutual Exclusion'. It's a way to protect shared data!

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

This section covers the fundamental concepts of multithreading, including its definition, models, thread creation and management, synchronization methods, and its distinctions from multiprocessing. Additionally, it explores challenges faced in multithreading and modern architectural support.

Detailed

Multithreading

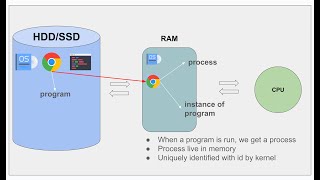

Multithreading is a programming technique that enables concurrent execution of multiple threads within a single process. Each thread shares the process resources but operates independently, allowing for efficient CPU utilization. The purpose of multithreading is to enhance application responsiveness and improve resource management.

Multithreading Models

Multithreading can be modeled in various ways, including:

1. Many-to-One: Multiple user threads are mapped to a single kernel thread, which limits processor utilization.

2. One-to-One: Each user thread corresponds to a kernel thread, maximizing multi-core system efficiency.

3. Many-to-Many: Allows multiple user threads to be managed by multiple kernel threads, enhancing workload balance.

4. Hybrid Model: Combines features of the one-to-one and many-to-many models for improved scalability and resource management.

Thread Creation and Management

Threads can be created and managed by the operating system with various techniques:

- Creation: Typically via API calls like pthread_create in POSIX or CreateThread in Windows.

- Scheduling: Managed through preemptive or cooperative strategies to optimize CPU time allocation.

- Termination: Ensuring resource deallocation after thread completion.

Thread Synchronization

It is essential to synchronize threads to avoid race conditions and ensure data coherence. Mechanisms include:

- Mutexes: Ensure exclusive access to shared resources.

- Semaphores: Signal and control access to shared resources.

- Monitors and Condition Variables: Facilitate higher-level synchronization constructs requiring certain conditions before execution.
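The monitor idea from the last item can be sketched in a few lines. This is a minimal illustration, assuming Python's standard threading module; the Mailbox class and its put/get methods are hypothetical names chosen for the example, not part of any library. The condition variable lets a consumer sleep until a producer signals that an item is available.

```python
import threading

class Mailbox:
    """Monitor-style class (hypothetical): one condition guards 'items'."""

    def __init__(self):
        self._cond = threading.Condition()  # a condition variable with its own lock
        self._items = []

    def put(self, item):
        with self._cond:                    # take the lock
            self._items.append(item)
            self._cond.notify()             # wake one waiting consumer, if any

    def get(self):
        with self._cond:
            while not self._items:          # re-check the condition after every wakeup
                self._cond.wait()           # releases the lock while sleeping
            return self._items.pop(0)

box = Mailbox()
received = []

def consume_three():
    for _ in range(3):
        received.append(box.get())

consumer = threading.Thread(target=consume_three)
consumer.start()
for n in (1, 2, 3):
    box.put(n)
consumer.join()
print(received)  # [1, 2, 3]
```

The while loop around wait() is the usual pattern: a woken thread checks the condition again before proceeding, rather than assuming it holds.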

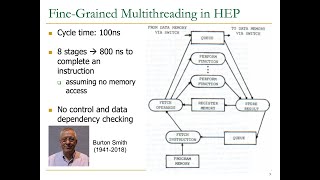

Multithreading in Modern Architectures

With advancements like simultaneous multithreading (SMT) and multicore processors, modern systems leverage multithreading through enhanced parallel execution, improving computational throughput and performance.

Multithreading vs. Multiprocessing

While multithreading lets tasks share one memory space, which makes communication cheap but requires synchronization, multiprocessing gives each task its own independent memory, trading higher creation and communication overhead for stronger isolation.

Challenges in Multithreading

Key challenges include thread contention, scalability issues, and the complexity of debugging multithreaded applications.

Thread Pools and Work Queues

Efficient management techniques like thread pools (groups of pre-allocated threads) and work queues enhance task execution without the overhead of constant thread creation and destruction.
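A work queue served by a fixed set of threads can be sketched as follows. This is one possible arrangement, assuming Python's standard queue and threading modules; the worker function, the pool size of 3, and the None sentinel are illustrative choices rather than a prescribed design.

```python
import queue
import threading

tasks = queue.Queue()            # the work queue
results = []
results_lock = threading.Lock()  # protects the shared results list

def worker():
    while True:
        item = tasks.get()       # blocks until work is available
        if item is None:         # sentinel: no more work for this worker
            tasks.task_done()
            break
        with results_lock:
            results.append(item * item)
        tasks.task_done()

# Create the pool once; the same three threads serve all ten tasks.
pool = [threading.Thread(target=worker) for _ in range(3)]
for t in pool:
    t.start()

for n in range(10):
    tasks.put(n)
for _ in pool:
    tasks.put(None)              # one sentinel per worker

tasks.join()                     # wait until every queued item is marked done
for t in pool:
    t.join()

print(sorted(results))
```

Results are sorted before printing because the order in which workers finish is not fixed.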

Audio Book

Introduction to Multithreading

Chapter 1 of 4

Chapter Content

Multithreading is a technique that allows a single processor or multiple processors to run multiple threads concurrently. Each thread is an independent unit of execution that can perform a part of a task, and multithreading enables efficient use of CPU resources.

Definition of Multithreading

The concurrent execution of more than one sequential task, or thread, within a program. Threads share the same process resources but have their own execution paths.
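The two halves of this definition, shared process resources and separate execution paths, can be seen in a small sketch. It assumes Python's standard threading module; the worker function and the shared_results dictionary are hypothetical names for the example.

```python
import threading

shared_results = {}  # one dictionary, visible to every thread in the process

def worker(name, n):
    total = sum(range(n))         # 'total' is local to this thread's own call stack
    shared_results[name] = total  # each thread writes under its own distinct key

threads = [
    threading.Thread(target=worker, args=(f"t{i}", i * 10))
    for i in range(3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(shared_results)
```

Each thread runs worker with its own arguments and local variables, yet all three write into the same dictionary. Here every thread uses a different key, so no two threads update the same entry.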

Purpose of Multithreading

Multithreading improves the efficiency of programs by making better use of CPU resources and enabling tasks to be completed concurrently rather than sequentially.

Advantages

Increased CPU utilization, better responsiveness in interactive applications, and more efficient resource management.

Detailed Explanation

Multithreading allows a computer to perform multiple tasks seemingly at the same time. Instead of waiting for one task to finish before starting another, the computer can manage many threads simultaneously, improving overall efficiency.

- Definition: Multithreading is about having many threads running at the same time, where a thread is the smallest sequence of programmed instructions that can be scheduled independently. These threads share the same resources and memory space, but each maintains its own execution path.

- Purpose: The primary goal of multithreading is to enhance program performance by utilizing the CPU more effectively. When several threads work at once, tasks can complete sooner because the CPU spends less time idle: while one thread waits, for example on I/O, another can run.

- Advantages: Benefits include better CPU usage, quicker response times in applications (especially important for user interfaces), and improved management of resources, all leading to faster completion of tasks and better experiences for users.

Examples & Analogies

Think of an efficient chef in a kitchen. Instead of cooking one dish completely before starting another, the chef manages several pots on the stove at once. While waiting for water to boil, they chop vegetables, and while the soup simmers, they prepare dessert. Each dish is like a thread running concurrently, and the chef effectively uses their time and resources to serve the meal faster.

Multithreading Models

Chapter 2 of 4

Chapter Content

There are different models of multithreading based on how threads are managed and executed.

Single Threading

In a single-threaded model, only one task is executed at a time, with no concurrency. All tasks are executed in sequence.

Multithreading Models:

- Many-to-One: Multiple user-level threads are mapped to a single kernel thread. This model is simple but cannot fully utilize multiple processors.

- One-to-One: Each user-level thread is mapped to a single kernel thread. This model allows full utilization of multi-core systems, as each thread can be executed independently on different cores.

- Many-to-Many: Multiple user threads are mapped to multiple kernel threads. This model provides flexibility by balancing the workload across multiple processors and threads.

- Hybrid Model: Combines features from the one-to-one and many-to-many models, allowing for greater scalability and resource management.

Detailed Explanation

Multithreading can be implemented in several ways, which affects how well threads can interact and utilize the computer’s resources:

- Single Threading: Only one task is performed at a time, making it straightforward but inefficient compared to multithreading.

- Many-to-One: In this model, many user threads are associated with one kernel thread. This simplicity makes it easy to manage, but the system cannot leverage the capabilities of multiple processors effectively, leading to reduced performance in multi-core environments.

- One-to-One: This mapping allows each thread to operate independently, making better use of advanced multi-core processors as they can run on different cores simultaneously, enhancing performance significantly.

- Many-to-Many: Here, multiple user threads can be mapped to multiple kernel threads, enabling better load balancing and resource customization depending on system capabilities.

- Hybrid Model: This combines the best features of the previous models, enhancing scalability and allowing for more efficient resource management across various use cases.

Examples & Analogies

Imagine an assembly line at a factory:

- With single threading (single task), only one product is made at a time.

- Many-to-One is like having multiple workers doing the same job but handing off to a single supervisor.

- One-to-One resembles a line where every worker does their job separately and independently, increasing total production.

- The Many-to-Many model is like having several stations where the jobs of many workers can be balanced among multiple supervisors, improving efficiency and flow.

Thread Creation and Management

Chapter 3 of 4

Chapter Content

Threads need to be created, managed, and synchronized during execution. The operating system (OS) plays a crucial role in managing these tasks.

Thread Creation

Threads are created by the OS at the request of a running program. A new thread can be created using API calls such as pthread_create (in POSIX systems) or CreateThread (in Windows).
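As a rough illustration of the create, run, and wait cycle, here is a minimal sketch using Python's standard threading module, which wraps the platform's native thread API. The greet function and the names passed to it are hypothetical.

```python
import threading

def greet(name, out):
    # Body of the new thread: runs concurrently with the caller.
    out.append(f"hello from {name}")

messages = []
t = threading.Thread(target=greet, args=("worker-1", messages), name="worker-1")
t.start()  # ask the runtime to create the thread and begin running greet
t.join()   # block the caller until the thread has finished

print(messages)  # ['hello from worker-1']
```

The same three steps (describe the work, start the thread, wait for it) appear in pthread_create with pthread_join, although the function signatures differ.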

Thread Scheduling

The OS scheduler determines which thread runs at any given time, managing the CPU time allocated to each thread. Scheduling strategies include:

- Preemptive Scheduling: The OS can interrupt a running thread to allocate time to another thread.

- Cooperative Scheduling: Threads voluntarily yield control to the OS or to other threads.
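Cooperative scheduling can be imitated in a few lines without any OS threads. The sketch below uses Python generators as stand-ins for threads: each task runs until it reaches a yield, at which point it voluntarily hands control back to a simple round-robin loop. The task and run_round_robin names are hypothetical, and this is a toy model of the idea, not how an OS scheduler is implemented.

```python
from collections import deque

def task(name, steps, log):
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # voluntarily hand control back to the scheduler

def run_round_robin(tasks):
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run the task until its next yield
            ready.append(current)  # it may have more work: back of the queue
        except StopIteration:
            pass                   # the task finished, so drop it

log = []
run_round_robin([task("A", 2, log), task("B", 3, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

If a task never reached a yield, the loop would never regain control, which is exactly the weakness of cooperative scheduling. A preemptive scheduler avoids this by interrupting tasks on its own schedule.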

Thread Termination

When a thread finishes execution, it must be properly terminated so that the resources it holds, such as its stack memory, are released. A thread ends either by completing its task or by explicitly calling a termination function.
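One common way to end a long-running thread cleanly is to ask it to stop and then wait for it. The sketch below assumes Python's standard threading module; the poller function, the stop_requested flag, and the timing values are illustrative choices.

```python
import threading
import time

stop_requested = threading.Event()  # shared flag the worker checks regularly
ticks = []

def poller():
    while not stop_requested.is_set():
        ticks.append(time.monotonic())
        stop_requested.wait(0.01)   # sleep briefly, but wake early if asked to stop

poller_thread = threading.Thread(target=poller)
poller_thread.start()

time.sleep(0.05)        # let the worker run for a moment
stop_requested.set()    # explicit request to terminate
poller_thread.join()    # wait until the thread has actually finished

print(poller_thread.is_alive())  # False
```

The thread ends by returning from its function after noticing the flag, so any cleanup code it contains still runs. The join call is what tells the caller that the thread is truly finished.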

Detailed Explanation

Managing threads involves several key actions to ensure that they function effectively:

- Thread Creation: A thread is typically initiated by the OS or the main application using specific functions, like pthread_create in POSIX systems such as Linux or CreateThread in Windows.

- Thread Scheduling: The OS has a scheduler that decides which threads will run and in what order. There are two main types of scheduling:

- Preemptive Scheduling: The OS can pause a thread to give another one time to run, helping ensure that all threads get the CPU time they need.

- Cooperative Scheduling: Threads wait for each other to yield control voluntarily, which can be less efficient since one thread could hog the CPU if it doesn’t yield.

- Thread Termination: When a thread's work is finished, it needs to terminate properly to release system resources. This can happen naturally when the task completes or through an explicit command to end its execution.

Examples & Analogies

Consider a restaurant kitchen as an analogy for thread management:

- Thread Creation: A chef assigns tasks to sous-chefs (threads) to handle various duties (making appetizers, main courses, etc.)

- Thread Scheduling: The head chef decides who cooks and when (like the OS scheduler). Sometimes they might interrupt a sous-chef to ask them to help on another dish.

- Thread Termination: Once a sous-chef is done cooking their dish, they must clean up (release resources) and signal that they are finished.

Thread Synchronization

Chapter 4 of 4

Chapter Content

In multithreaded programs, threads often need to communicate or share data. Synchronization ensures that shared resources are accessed in a controlled manner to avoid race conditions and data corruption.

Race Condition

A situation where the outcome of a program depends on the timing of thread execution, leading to unpredictable behavior.
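Real race conditions are intermittent, which makes them hard to demonstrate. The sketch below, assuming Python's standard threading module, uses a barrier purely as a teaching device to force the unlucky interleaving every time: both threads read the shared counter before either writes it back. The state dictionary and unsafe_increment function are hypothetical names.

```python
import threading

state = {"counter": 0}
both_have_read = threading.Barrier(2)  # demo only: forces the bad interleaving

def unsafe_increment():
    seen = state["counter"]       # step 1: read the shared value
    both_have_read.wait()         # hold here until both threads have read
    state["counter"] = seen + 1   # step 2: write back a result based on a stale read

threads = [threading.Thread(target=unsafe_increment) for _ in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(state["counter"])  # 1, not 2: one increment was lost
```

Without the barrier the same lost update can still happen, only rarely and unpredictably, which is what makes such bugs difficult to find.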

Critical Section

A section of code that accesses shared data and must be executed by only one thread at a time to keep that data consistent.

Synchronization Mechanisms:

- Mutexes (Mutual Exclusion): A mutex is a locking mechanism that ensures only one thread can access a shared resource at a time.

- Semaphores: A signaling mechanism used to control access to shared resources by multiple threads. Semaphores are often used for managing limited resources like database connections or threads.

- Monitors: A higher-level synchronization construct that combines a mutex with condition variables, allowing threads to wait for certain conditions before proceeding.

- Condition Variables: Used in conjunction with mutexes to allow threads to wait for certain conditions to be met before resuming execution.
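The simplest of these mechanisms, the mutex, can be sketched as follows. This assumes Python's standard threading module, where the mutex is called Lock; the tally dictionary, the add_many function, and the thread and iteration counts are illustrative.

```python
import threading

tally = {"value": 0}
tally_lock = threading.Lock()  # the mutex guarding 'tally'

def add_many(n):
    for _ in range(n):
        with tally_lock:       # acquire; released automatically on leaving the block
            tally["value"] += 1  # critical section: one thread at a time

threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(tally["value"])  # 40000
```

Every read-modify-write of the shared value happens while the lock is held, so no update can be lost regardless of how the threads are interleaved.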

Deadlock

A situation where two or more threads are blocked forever because each is waiting on a resource held by another. Deadlock prevention and detection mechanisms are essential in multithreaded systems.
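One widely used prevention technique is to make every thread acquire locks in the same global order, so a circular wait cannot form. The sketch below assumes Python's standard threading module; the Account class, the transfer function, and the rule of ordering by account_id are hypothetical choices for the example.

```python
import threading

class Account:
    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src, dst, amount):
    # Always lock the account with the smaller id first, whichever way
    # the money flows, so two opposite transfers cannot wait on each other.
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount

def repeat(src, dst, amount, times):
    for _ in range(times):
        transfer(src, dst, amount)

acct_a = Account(1, 1000)
acct_b = Account(2, 1000)
t1 = threading.Thread(target=repeat, args=(acct_a, acct_b, 1, 500))
t2 = threading.Thread(target=repeat, args=(acct_b, acct_a, 2, 200))
t1.start()
t2.start()
t1.join()
t2.join()

print(acct_a.balance, acct_b.balance)  # 900 1100
```

If each transfer instead locked src first and dst second, the two threads could each hold one lock while waiting for the other, which is the deadlock described above.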

Detailed Explanation

When multiple threads operate, the need for synchronization arises to maintain data integrity, as shared resources are at risk of conflicting accesses:

- Race Condition: This occurs when the output of a program is affected by the sequence or timing of thread execution, leading to unpredictable results and bugs.

- Critical Section: Specific parts of the code (the critical section) must be accessed by only one thread at a time to ensure that shared resources are not simultaneously modified, which helps preserve data consistency.

- Synchronization Mechanisms: Several tools help manage this:

- Mutexes provide locking mechanisms that prevent multiple threads from accessing a resource simultaneously.

- Semaphores control access to shared resources and help manage thread interactions.

- Monitors offer a higher-level option, combining mutexes with condition variables to manage how threads wait for actions to complete.

- Condition Variables allow threads to pause until specific criteria are met, facilitating controlled access to resources.

- Deadlock: This dangerous situation happens when threads are stuck indefinitely waiting for each other to release resources. Managing and detecting deadlocks is crucial for system stability.
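Where a mutex admits one thread at a time, a counting semaphore admits up to a fixed number. The sketch below, assuming Python's standard threading module, limits six threads to two simultaneous "connections". The names use_connection, MAX_CONNECTIONS, and stats are hypothetical, and the short sleep stands in for real work.

```python
import threading
import time

MAX_CONNECTIONS = 2
slots = threading.Semaphore(MAX_CONNECTIONS)  # two permits available
stats_lock = threading.Lock()                 # protects the bookkeeping below
stats = {"active": 0, "peak": 0}

def use_connection():
    with slots:                    # blocks while both permits are taken
        with stats_lock:
            stats["active"] += 1
            stats["peak"] = max(stats["peak"], stats["active"])
        time.sleep(0.01)           # stand-in for real work on the connection
        with stats_lock:
            stats["active"] -= 1

threads = [threading.Thread(target=use_connection) for _ in range(6)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(stats["peak"] <= MAX_CONNECTIONS)  # True
```

The recorded peak never exceeds the number of permits, which is the guarantee a semaphore provides for a limited resource.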

Examples & Analogies

Imagine a library as a metaphor:

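- Race Condition: If two people try to check out the last copy of a book at the same time and nothing coordinates them, who ends up with it depends purely on timing, and the records may even show both as having borrowed it.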

- Critical Section: The desk where borrowing happens is like a critical section; only one librarian can service a patron at a time.

- Mutex: When the librarian serves a patron, the desk is locked (mutex) for that process.

- Semaphore: If there are multiple book copies, a semaphore helps track how many are available.

- Deadlock: If two librarians require the signature of the other person before completing lending, they'll end up waiting forever without finishing any transaction.

Key Concepts

- Multithreading: A technique allowing multiple threads to run concurrently within a single process.

- Thread Creation: Involves creating threads through API calls such as pthread_create or CreateThread, with the operating system managing the resulting threads.

- Thread Synchronization: Techniques that ensure safe access to shared resources in a multithreaded environment.

- Thread Pool: A pre-defined pool of threads that can be reused for executing tasks, reducing overhead.

Examples & Applications

Web servers that handle multiple requests simultaneously use multithreading to improve response times.

A video processing application that divides the work across multiple threads to speed up processing results.
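The divide-the-work pattern in the second example can be sketched with a ready-made thread pool. This assumes Python's standard concurrent.futures module; process_chunk is a hypothetical stand-in for real per-chunk work such as processing a group of video frames, and the chunk size of 250 is arbitrary.

```python
from concurrent.futures import ThreadPoolExecutor

def process_chunk(chunk):
    # Placeholder for real work on one slice of the input.
    return sum(x * x for x in chunk)

data = list(range(1000))
chunks = [data[i:i + 250] for i in range(0, len(data), 250)]  # four slices

with ThreadPoolExecutor(max_workers=4) as executor:
    # map hands each chunk to a pooled thread and returns results in input order
    partial_sums = list(executor.map(process_chunk, chunks))

total = sum(partial_sums)
print(total)
```

The sketch shows the structure rather than a speedup: in standard CPython builds the global interpreter lock limits gains for CPU-bound pure-Python code, so threads help most when the per-chunk work waits on I/O or runs in native libraries.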

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

Threads that run in a tidy space, keep our programs at a fast pace!

Stories

Imagine a busy restaurant kitchen where multiple chefs (threads) prepare different dishes (tasks) on the same counter (memory). Proper coordination is key to ensure no dish clashes, just like synchronization in multithreading.

Memory Tools

'MST - Mutex, Semaphore, Thread Pool' helps to remember key multithreading constructs: two synchronization primitives (mutex and semaphore) and one thread management technique (thread pool).

Acronyms

To remember thread models: 'MOM & MOMH' - for 'Many-to-One, One-to-One, Many-to-Many, Hybrid'.

Glossary

- Multithreading

The concurrent execution of multiple threads within a single program, sharing the same process resources.

- Thread

An independent unit of execution within a program.

- Race Condition

A scenario where the outcome of a program is affected by the timing of thread execution.

- Mutex

A locking mechanism that ensures exclusive access to a shared resource.

- Semaphore

A signaling mechanism to control access to shared resources by multiple threads.

- Thread Pool

A set of pre-created threads available to execute tasks, reducing the overhead of thread creation.

- Thread Scheduling

The method by which an operating system decides which thread to run at a given time.

- Preemptive Scheduling

A scheduling strategy where the OS can interrupt running threads to allocate CPU time to others.

- Cooperative Scheduling

A scheduling strategy where a thread voluntarily relinquishes control of the CPU.

- Critical Section

Code sections that must not be executed by more than one thread at a time.
