Scalability

Understanding Scalability

Today, we will discuss scalability in multithreaded applications. To start, what do you think scalability means in the context of multithreading?

I think it means how well a program can handle more threads without slowing down.

Exactly! Scalability is about maintaining performance as we increase the number of threads. It’s crucial for applications expected to handle variable workloads.

But what kind of issues can affect scalability?

Great question! Common issues include lock contention and the overhead of context switching between threads. Both can degrade performance.

So, are there ways to improve scalability in our programs?

Yes, adopting efficient thread management practices and ensuring proper synchronization can greatly enhance scalability. Let's remember the acronym 'SCALE' for the key principles: Synchronization, Contention management, Allocation strategies, Load balancing, and Efficiency.

Challenges of Scalability

Now, let’s take a look at some specific challenges associated with scalability. Who can name one?

I think lock contention is one?

Correct! Lock contention occurs when threads are competing for the same locked resource, which can hinder performance. Any others?

What about context switching? That sounds like it could slow things down too.

Absolutely! Context switching is the process of storing and restoring the state of a thread, and too many switches can introduce significant overhead.

How do we know whether our application is scalable enough?

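Monitoring and profiling tools can help you analyze performance as you increase thread counts. Throughput and response time are the key indicators to watch: if they stop improving, or get worse, as threads are added, the application has hit a scalability limit.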
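One way to act on this advice is a small harness that runs the same batch of work at several thread counts and records throughput. The following is a minimal sketch, not a substitute for a real profiler: `measure_throughput` and `simulated_request` are hypothetical names, and the workload is assumed to be a short sleep standing in for I/O-bound work.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def simulated_request(_):
    """Hypothetical stand-in for an I/O-bound task (assumption: about 2 ms of waiting)."""
    time.sleep(0.002)
    return 1


def measure_throughput(task, n_tasks, thread_counts):
    """Run n_tasks calls of task at each thread count; return tasks per second for each."""
    results = {}
    for n_threads in thread_counts:
        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=n_threads) as pool:
            completed = sum(pool.map(task, range(n_tasks)))
        elapsed = time.perf_counter() - start
        assert completed == n_tasks  # every task must have finished
        results[n_threads] = n_tasks / elapsed
    return results


if __name__ == "__main__":
    for n, tput in measure_throughput(simulated_request, 200, [1, 2, 4, 8]).items():
        print(f"{n} threads: {tput:.0f} tasks/s")
```

If throughput flattens or drops as the thread count rises, that suggests contention or switching overhead is starting to dominate.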

Enhancing Scalability

Finally, let’s talk about strategies to enhance scalability. What are some methods you think we could apply?

We could minimize lock contention by using finer-grained locks?

Exactly! Finer-grained locks let multiple threads access different sections of the same resource at the same time, instead of queuing behind a single lock. Any other strategies?
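As an illustration of finer-grained locking, the sketch below splits one counter table into several "stripes", each with its own lock, so threads updating keys in different stripes do not block each other. `StripedCounter` and `run_demo` are hypothetical names chosen for this example, and the stripe count of 16 is an arbitrary assumption.

```python
import threading


class StripedCounter:
    """Per-key counts, with each group of keys guarded by its own lock."""

    def __init__(self, n_stripes=16):
        self._locks = [threading.Lock() for _ in range(n_stripes)]
        self._counts = [dict() for _ in range(n_stripes)]

    def _stripe(self, key):
        # Map a key to the stripe (and lock) responsible for it.
        return hash(key) % len(self._locks)

    def increment(self, key):
        i = self._stripe(key)
        with self._locks[i]:  # only threads touching this stripe wait here
            self._counts[i][key] = self._counts[i].get(key, 0) + 1

    def get(self, key):
        i = self._stripe(key)
        with self._locks[i]:
            return self._counts[i].get(key, 0)


def run_demo(n_threads=8, increments=1000):
    """Start n_threads workers that spread their updates over four keys."""
    counter = StripedCounter()

    def worker(key):
        for _ in range(increments):
            counter.increment(key)

    threads = [
        threading.Thread(target=worker, args=(f"key-{t % 4}",))
        for t in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter
```

The trade-off is extra bookkeeping: operations that span several stripes (for example, a grand total) would need to take more than one lock.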

What about load balancing? Can that help too?

Right! Proper load balancing can distribute tasks more evenly across threads, enhancing the overall efficiency and responsiveness of the application.
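One common way to balance load is to let workers pull tasks from a shared queue, so a thread that finishes early simply takes the next task. The sketch below assumes all tasks are known up front; `process_all` is a hypothetical name and `sum(range(n))` is a placeholder for real work whose cost varies per task.

```python
import queue
import threading


def process_all(tasks, n_workers=4):
    """Workers pull from one shared queue, so idle workers pick up remaining tasks."""
    work = queue.Queue()
    for task in tasks:
        work.put(task)  # all tasks are enqueued before any worker starts

    results = []
    results_lock = threading.Lock()
    handled = [0] * n_workers  # tasks per worker; each slot is written by one thread only

    def worker(worker_id):
        while True:
            try:
                n = work.get_nowait()
            except queue.Empty:
                return  # queue drained, nothing left to do
            value = sum(range(n))  # placeholder task: cost grows with n
            with results_lock:
                results.append((n, value))
            handled[worker_id] += 1

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, handled
```

The `handled` list shows how many tasks each worker took; with uneven task sizes, the split is decided at run time rather than fixed in advance.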

So, we have to continually assess and adapt our strategies for better scalability?

Exactly. Scalability is not just a feature; it's an ongoing effort in the development of efficient applications. Remember, effective scalability can be the difference between success and failure in performance-intensive applications.

Introduction & Overview

Standard

This section discusses scalability as one of the key challenges in multithreaded programming. As the number of threads increases, managing synchronization and resource allocation becomes more complex, which may lead to performance bottlenecks.

Detailed

Scalability in Multithreading

Scalability, a crucial aspect of multithreading, is defined as the capability of a program to maintain performance levels and efficiency as the number of threads increases. In the context of multithreaded applications, scalability concerns emerge due to factors such as lock contention, synchronization complexities, and overhead caused by context switching.

As more threads are created, the likelihood of contention for shared resources increases, which can slow down execution time and decrease responsiveness. Efficient scalability is vital for applications expected to perform under varying loads, and understanding how to manage threads effectively can help developers design systems that scale smoothly with the demand. Ultimately, scalability can significantly impact the success of applications in high-demand environments.

Scalability Challenges

Chapter 1 of 2

Chapter Content

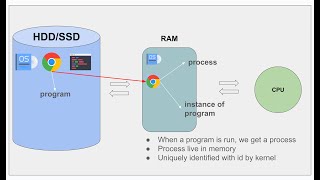

As the number of threads increases, managing synchronization, data sharing, and task allocation becomes more complex.

Detailed Explanation

Scalability refers to the ability of a software system to handle a growing amount of work or its potential to accommodate growth. When we increase the number of threads in a multithreaded program, several challenges arise. Primarily, the program must effectively manage how threads synchronize with each other (to avoid conflicts over shared resources), how they share data, and how tasks are allocated among them. As you introduce more threads, the complexity of ensuring that they work together without issues increases significantly.
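To make the synchronization point concrete, here is a minimal sketch of threads sharing one piece of data safely. `SharedCounter` and `count_in_parallel` are hypothetical names for this illustration; the lock ensures that each read-modify-write of the shared value happens as one step.

```python
import threading


class SharedCounter:
    """A counter whose updates are serialized by a lock."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def add(self, amount):
        with self._lock:  # only one thread at a time may update the value
            self._value += amount

    def value(self):
        with self._lock:
            return self._value


def count_in_parallel(n_threads, per_thread):
    """Have n_threads threads each add 1 to a shared counter per_thread times."""
    counter = SharedCounter()

    def worker():
        for _ in range(per_thread):
            counter.add(1)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value()
```

The result is correct for any thread count, but every `add` call goes through the same lock, which is exactly the kind of shared point that becomes harder to manage as threads are added.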

Examples & Analogies

Think of a bakery run by a single baker. If orders (tasks) increase and no more bakers (threads) are hired, the lone baker becomes overwhelmed. Hiring more bakers helps, but only if they are coordinated: without a system for sharing the oven and tracking who handles which order, they get in each other's way and mix up orders. A multithreaded application has to manage its threads in the same way as the workload grows.

Limitations of Scalability

Chapter 2 of 2

Chapter Content

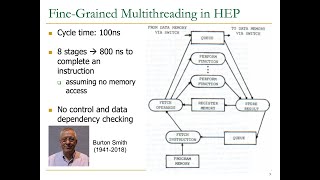

A program’s scalability is limited by factors such as lock contention and the overhead of context switching.

Detailed Explanation

Lock contention occurs when multiple threads try to acquire the same lock at the same time. Because a lock grants access to a shared resource to one thread at a time, the other threads are forced to wait, which creates a performance bottleneck and slows the program down. Additionally, context switching (saving the state of a running thread so that it can be resumed later, and restoring the state of the next thread to run) adds overhead to the system. Excessive context switching wastes CPU time, because the operating system spends more time switching between threads than executing their tasks.
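The effect of lock usage on contention can be sketched by counting how often a lock is acquired under two designs. Both functions below are hypothetical illustrations that compute the same total: the first takes the shared lock once per item, the second lets each thread sum its own chunk without a lock and takes the lock only once to merge.

```python
import threading


def _run(worker, chunks):
    """Start one thread per chunk and wait for all of them."""
    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def sum_with_shared_lock(chunks):
    """Every item goes through the shared lock: many chances to contend."""
    total = 0
    acquisitions = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total, acquisitions
        for x in chunk:
            with lock:
                total += x
                acquisitions += 1

    _run(worker, chunks)
    return total, acquisitions


def sum_with_local_totals(chunks):
    """Each thread sums privately, then takes the lock once to merge."""
    total = 0
    acquisitions = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total, acquisitions
        local = sum(chunk)  # thread-private work, no lock needed
        with lock:
            total += local
            acquisitions += 1

    _run(worker, chunks)
    return total, acquisitions
```

With four chunks of 100 items, the first version acquires the lock 400 times and the second only 4 times, so threads in the second version have far fewer opportunities to wait on each other.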

Examples & Analogies

Imagine a busy intersection managed by traffic lights (locks). If too many cars (threads) arrive from different directions, they must wait for the light to change before proceeding, which creates a bottleneck. Each change of the lights also costs time while the intersection clears and no car moves (context switching overhead). Similarly, a multithreaded program can slow down when too many threads compete for resources.

Key Concepts

- Scalability: The ability of a system to manage more threads efficiently.

- Lock Contention: A significant challenge when multiple threads compete for access to shared resources.

- Context Switching: The overhead of saving and restoring thread states, affecting performance.

Examples & Applications

A web server illustrates scalability when it handles an increasing number of incoming requests, each served by a thread, without significant performance lag.

In a financial application processing transactions in parallel, the system must remain responsive as the load increases, demonstrating good scalability.
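The web server example can be sketched with a bounded thread pool, which serves many requests with a fixed number of threads instead of creating one thread per request. This is an illustrative sketch only: `handle_request` and `serve` are hypothetical names, and the handler does no real network I/O.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def handle_request(request_id):
    """Hypothetical handler: returns a response and the name of the thread that ran it."""
    return f"response-{request_id}", threading.current_thread().name


def serve(request_ids, max_workers=4):
    """Handle all requests with at most max_workers threads."""
    with ThreadPoolExecutor(max_workers=max_workers, thread_name_prefix="worker") as pool:
        outcomes = list(pool.map(handle_request, request_ids))  # results keep request order
    responses = [response for response, _ in outcomes]
    threads_used = {name for _, name in outcomes}
    return responses, threads_used
```

Capping the pool size keeps the number of runnable threads, and therefore the amount of context switching, bounded even when the number of requests grows.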

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

Scalability's a must, in threads we trust; when numbers grow, keep performance in tow.

Stories

Imagine a busy restaurant where chefs (threads) have to share ingredients (resources). If they work together but stay organized, they can serve more customers (handle increased load) efficiently.

Memory Tools

Remember 'SCALE' for the strategies: Synchronization, Contention management, Allocation, Load balancing, Efficiency.

Acronyms

SCALE

Synchronization + Contention + Allocation + Load balancing + Efficiency.

Glossary

- Scalability

The capability of a system to handle a growing amount of work or its potential to accommodate growth without compromising performance.

- Lock Contention

A condition where multiple threads try to access a shared resource and compete for locks, potentially degrading performance.

- Context Switching

The process of saving the state of one thread and restoring the state of another so that multiple threads can share a single CPU core.
