Thread Synchronization

Interactive Audio Lesson

A student-teacher conversation that explains the topic in a relatable way.

Introduction to Thread Synchronization

Welcome, everyone! Today, we're discussing thread synchronization. Can anyone tell me why synchronization is important in multithreaded programs?

I think it's to prevent conflicting operations on shared data?

Exactly! It prevents race conditions, where the outcome depends on timing. Great start! Let's talk about what a race condition actually is.

Isn't that when two or more threads access the same shared data at the same time, and at least one of them changes it?

Right again! That's the essence of it. To ensure our threads don't step on each other's toes, we use synchronization mechanisms. Let's explore a few of them!

Critical Sections and Mutexes

One effective way to handle critical sections is by using mutexes. Who can explain what a critical section is?

It's a section of code that can only be executed by one thread at a time, right?

Correct! Mutexes help achieve that. They act like locks on the critical section. Why do you think we need to secure access to this section?

To make sure that only one thread changes shared data at once, preventing data corruption!

Exactly! So, to summarize: Mutexes lock access to ensure that critical sections are not executed concurrently.

Semaphores and Condition Variables

Next, let's consider semaphores. Can anyone describe what they are?

They're signaling mechanisms that control access to resources?

Exactly! They're used to manage access to a limited number of resources. Now, how about condition variables? What role do they play?

They help threads wait for particular conditions to be true before they proceed?

Spot on! When combined with mutexes, they form a powerful synchronization construct known as a monitor. Remember, though, that synchronization brings its own risks, such as deadlocks, if it is used carelessly. Let's talk about that next.

Understanding and Preventing Deadlocks

Now let's delve into deadlocks. Who can explain what a deadlock is?

It's when two threads are waiting for each other to release resources, right?

Perfect! A deadlock effectively freezes those threads. Why is that an issue in multithreading?

Because it can lead to the program being stuck forever, right?

Exactly! We can prevent deadlocks by avoiding circular waits, for example by always acquiring locks in the same order, or recover from them by using timeouts. So remember: a deadlock can cripple a program, which makes understanding it vital.

Review and Recap

Let's summarize what we learned: synchronization in multithreading is critical, with key mechanisms like mutexes and semaphores preventing race conditions and managing access to shared resources.

And critical sections are where we keep data safe by allowing only one thread at a time!

Deadlocks are a risk if threads are not carefully managed.

Great recap! Always remember: managing threads effectively is essential to maintaining a smooth and reliable multithreaded application.

Introduction & Overview

Quick Overview

Standard

Thread synchronization ensures that threads in a multithreaded environment can safely share data and resources without leading to data corruption or unexpected behavior, addressing issues like race conditions and deadlocks through mechanisms such as mutexes, semaphores, and monitors.

Detailed

Thread Synchronization

Thread synchronization is a vital concept in multithreading, providing a framework to manage how threads communicate and share resources. In multithreaded programs, shared resources must be accessed in a controlled manner to avert race conditions, where the execution order of threads leads to unpredictable results.

Key concepts include:

- Race Condition: Occurs when the program's outcome depends on the sequence of thread execution, potentially causing data corruption.

- Critical Section: A portion of code that accesses shared resources and must be executed by only one thread at a time.

- Synchronization Mechanisms:

  - Mutexes: Locking mechanisms that ensure only one thread can access a resource at a time.

  - Semaphores: Signaling mechanisms that control access to shared resources by counting how many units of a limited resource remain available.

  - Monitors: High-level constructs that combine a mutex with condition variables, letting threads wait for conditions.

  - Condition Variables: Allow threads to wait for specific conditions before proceeding.

- Deadlock: A situation where two or more threads are stuck in perpetual waiting due to circular resource dependencies. Addressing deadlocks involves strategies for prevention or detection.

Understanding these elements is fundamental for building stable and efficient multithreaded applications.

Audio Book

Introduction to Thread Synchronization

Chapter 1 of 5

Chapter Content

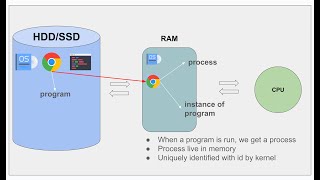

In multithreaded programs, threads often need to communicate or share data. Synchronization ensures that shared resources are accessed in a controlled manner to avoid race conditions and data corruption.

Detailed Explanation

In a multithreaded environment, it's common for multiple threads to require access to shared data or resources (like files or memory spaces). Without proper control over how these threads access shared resources, there may be conflicts known as race conditions. Synchronization techniques are employed to coordinate threads in a way that prevents such conflicts, ensuring data integrity and consistent behavior across the program.

Examples & Analogies

Think of a shared printer in an office, where multiple employees want to print their documents. If everyone sends their documents to the printer at the same time without any coordination, the printer may not produce the documents correctly, mixing them up or spitting out garbled prints. To solve this problem, a receptionist (acting like a synchronization mechanism) takes print requests one at a time, ensuring each document is printed clearly and in order.

Race Condition

Chapter 2 of 5

Chapter Content

Race Condition: A situation where the outcome of a program depends on the timing of thread execution, leading to unpredictable behavior.

Detailed Explanation

A race condition occurs when two or more threads access shared data concurrently and at least one of them modifies it. If the timing is unlucky, one thread may interfere with another, leading to inconsistent or incorrect results. Because threads are scheduled independently, the outcome can vary from run to run, making the program behave unpredictably.
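Here is a minimal Python sketch of the classic "lost update" race, assuming the standard `threading` module. The `threading.Barrier` is not part of the race itself. It only forces the unlucky interleaving on every run: both threads read the counter before either writes it back. In a real program this interleaving happens only occasionally, which is what makes race conditions hard to reproduce.

```python
import threading

counter = 0
# The barrier exists only to force the bad interleaving deterministically.
barrier = threading.Barrier(2)

def unsafe_increment():
    global counter
    snapshot = counter       # step 1: read the shared value
    barrier.wait()           # both threads have now read the same old value
    counter = snapshot + 1   # step 2: write back; one thread's update is lost

threads = [threading.Thread(target=unsafe_increment) for _ in range(2)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter)  # prints 1, although two increments ran
```

Because the read and the write are separate steps, the second write overwrites the first instead of building on it.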

Examples & Analogies

Imagine a scenario where two chefs (threads) are trying to prepare a dish using the same ingredient at the same time. If the first chef adds the ingredient to the dish and the second chef tries to take it away before the first chef has finished mixing, the dish might end up either with too much or too little of that ingredient. This uncoordinated action results in a dish that does not taste as intended, similar to how race conditions lead to bugs in software.

Critical Section

Chapter 3 of 5

Chapter Content

Critical Section: A section of code that accesses shared data and must be executed by only one thread at a time to keep that data consistent.

Detailed Explanation

A critical section is a part of the program where shared resources are accessed. To prevent race conditions, only one thread is allowed to execute the critical section at a time. This means that if Thread A is executing a critical section, Thread B must wait until Thread A has finished before it can access the same resource. This control helps maintain data integrity and ensures that the program behaves as expected.

Examples & Analogies

Consider a ticket counter at a concert. If multiple people (threads) try to buy the last few tickets simultaneously without any queueing system, the counter might sell more tickets than are available. If the counter instead lets only one person complete a transaction at a time (the transaction is the critical section), ticket sales stay under control and every transaction is accurate.
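The ticket counter can be sketched in Python with the standard `threading` module. The names `tickets_left`, `buy_ticket` and `counter_lock`, and the numbers 100 and 150, are hypothetical. The body of `with counter_lock:` is the critical section: checking whether a ticket remains and selling it happen as one indivisible step, so two buyers can never both claim the last ticket.

```python
import threading

tickets_left = 100               # hypothetical inventory
sold = 0
counter_lock = threading.Lock()  # mutex guarding the critical section

def buy_ticket():
    """Try to buy one ticket; return True on success."""
    global tickets_left, sold
    with counter_lock:           # only one buyer at a time past this point
        # Critical section: the check and the update must happen together.
        if tickets_left > 0:
            tickets_left -= 1
            sold += 1
            return True
        return False

# 150 hypothetical customers compete for 100 tickets.
buyers = [threading.Thread(target=buy_ticket) for _ in range(150)]
for b in buyers:
    b.start()
for b in buyers:
    b.join()

print(sold, tickets_left)  # 100 0
```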

Synchronization Mechanisms

Chapter 4 of 5

Chapter Content

Synchronization Mechanisms:

- Mutexes (Mutual Exclusion): A mutex is a locking mechanism that ensures only one thread can access a shared resource at a time.

- Semaphores: A signaling mechanism used to control access to shared resources by multiple threads. Semaphores are often used for managing limited resources like database connections or threads.

- Monitors: A higher-level synchronization construct that combines a mutex with condition variables, allowing threads to wait for certain conditions before proceeding.

- Condition Variables: Used in conjunction with mutexes to allow threads to wait for certain conditions to be met before resuming execution.

Detailed Explanation

Different synchronization mechanisms help manage access to shared resources effectively.

- Mutexes provide a lock that ensures that only one thread is working with the resource at any given time. This effectively prevents race conditions.

- Semaphores generalize this idea: a counting semaphore initialized to N lets up to N threads use a resource at once, making it suitable for scenarios where you have a pool of identical resources.

- Monitors provide a higher-level abstraction that combines a mutex with condition variables, making it easier for threads to wait for certain conditions before they can access shared resources.

- Condition Variables are additional tools that allow threads to pause until certain conditions are met, further enhancing coordination between threads.
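A counting semaphore guarding a resource pool can be sketched in Python with the standard `threading` module. The pool of three "database connections", the `query_database` function and the sleep that stands in for real work are all hypothetical. `threading.BoundedSemaphore` behaves like a plain semaphore but raises `ValueError` if it is released more times than it was acquired, which catches a common bug.

```python
import threading
import time

MAX_CONNECTIONS = 3                       # hypothetical pool size
pool = threading.BoundedSemaphore(MAX_CONNECTIONS)

active = 0                                # connections in use right now
peak = 0                                  # highest value `active` reached
stats_lock = threading.Lock()             # protects active and peak

def query_database():
    global active, peak
    with pool:                            # blocks while all slots are taken
        with stats_lock:
            active += 1
            peak = max(peak, active)
        time.sleep(0.05)                  # stand-in for work on the connection
        with stats_lock:
            active -= 1

workers = [threading.Thread(target=query_database) for _ in range(10)]
for w in workers:
    w.start()
for w in workers:
    w.join()

print(peak)  # never more than MAX_CONNECTIONS
```

Ten workers compete, but the semaphore admits at most three at a time. A mutex would admit only one.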

Examples & Analogies

Think of a library as a shared resource. A mutex is like a sign at the entrance that allows only one person to enter the reading room at a time. A semaphore could represent a limited number of seats in the library: if all seats are taken, no one else can enter until someone leaves. A monitor would be a system where anyone who enters must sign up and wait until it is their turn to use a computer. Finally, condition variables would let people wait in line until staff signal that a computer is available.
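The computer-room part of the analogy maps onto a small monitor. The following Python sketch uses the standard `threading.Condition`, which bundles a lock with a condition variable. The `ComputerRoom` class, its method names and the counts are hypothetical. A patron who finds no free computer calls `wait()`, which releases the lock while sleeping. A patron who returns a computer calls `notify()` to wake one waiter.

```python
import threading

class ComputerRoom:
    """A monitor: shared state guarded by one lock plus a condition variable.

    Class and method names are hypothetical, chosen to match the analogy.
    """

    def __init__(self, computers):
        self._free = computers
        self._available = threading.Condition()  # holds its own internal lock

    def take_computer(self):
        with self._available:                     # enter the monitor
            while self._free == 0:                # re-check after every wakeup
                self._available.wait()            # releases the lock while waiting
            self._free -= 1

    def return_computer(self):
        with self._available:
            self._free += 1
            self._available.notify()              # signal one waiting patron

room = ComputerRoom(computers=2)
log = []
log_lock = threading.Lock()

def patron(name):
    room.take_computer()
    with log_lock:
        log.append(name)                          # "use" the computer
    room.return_computer()

patrons = [threading.Thread(target=patron, args=(f"patron-{i}",)) for i in range(6)]
for p in patrons:
    p.start()
for p in patrons:
    p.join()

print(len(log))  # 6: every patron eventually got a computer
```

The `while` loop, rather than an `if`, is the usual idiom: a woken thread must re-check the condition, because another thread may have taken the computer first.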

Deadlock

Chapter 5 of 5

Chapter Content

Deadlock: A situation where two or more threads are blocked forever because each is waiting on a resource held by another. Deadlock prevention and detection mechanisms are essential in multithreaded systems.

Detailed Explanation

Deadlock occurs when two or more threads are stuck indefinitely, each waiting for resources that the others are holding. This creates a circular dependency, preventing any of the threads from proceeding. For example, if Thread A is holding Resource 1 and waiting for Resource 2, while Thread B is holding Resource 2 and waiting for Resource 1, neither thread can continue, resulting in a deadlock. Thus, mechanisms must be implemented to prevent such situations or to detect and recover from deadlocks.
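A genuine deadlock would hang forever, so this Python sketch forces the Thread A / Thread B scenario with two `threading.Barrier` objects. It then uses `Lock.acquire(timeout=...)` as a simple detection mechanism: a thread that cannot obtain its second resource in time gives up instead of waiting indefinitely. The 0.5-second timeout and the `worker` function are assumptions made for illustration.

```python
import threading

resource_1 = threading.Lock()
resource_2 = threading.Lock()
both_hold_one = threading.Barrier(2)   # forces the circular wait
both_tried = threading.Barrier(2)      # keeps locks held until both attempts end
outcome = {}

def worker(name, first, second):
    with first:                                  # hold one resource...
        both_hold_one.wait()                     # ...until the other thread holds its own
        got_second = second.acquire(timeout=0.5) # wait for the other's resource, but not forever
        outcome[name] = got_second
        both_tried.wait()
        if got_second:
            second.release()

# Thread A holds Resource 1 and wants Resource 2; Thread B does the opposite.
a = threading.Thread(target=worker, args=("A", resource_1, resource_2))
b = threading.Thread(target=worker, args=("B", resource_2, resource_1))
a.start()
b.start()
a.join()
b.join()

print(outcome["A"], outcome["B"])  # False False: both timed out, deadlock detected
```

Timing out only detects the problem. A real system would then recover, for example by releasing held resources and retrying later.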

Examples & Analogies

Imagine two cars at a narrow intersection, where Car A is trying to go left and requires the space occupied by Car B, which is trying to go right but needs the space occupied by Car A. Both cars are waiting for the other to move, creating a deadlock. Traffic lights avoid the situation by ordering who moves first, much like deadlock prevention in software; a traffic officer who untangles a jam that has already formed resembles deadlock detection and recovery.
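The traffic-light idea (everyone follows the same ordering rule) corresponds to deadlock prevention by lock ordering. This minimal Python sketch assumes a hypothetical global rank for each lock (`LOCK_RANK`) and a hypothetical helper `acquire_in_order` that always acquires locks in ascending rank. No thread ever holds a higher-ranked lock while waiting for a lower-ranked one, so a circular wait cannot form.

```python
import threading

resource_1 = threading.Lock()
resource_2 = threading.Lock()
# Hypothetical global rule: acquire locks in ascending rank order.
LOCK_RANK = {id(resource_1): 1, id(resource_2): 2}

def acquire_in_order(*locks):
    """Acquire all locks following the global order; return them in that order."""
    ordered = sorted(locks, key=lambda lock: LOCK_RANK[id(lock)])
    for lock in ordered:
        lock.acquire()
    return ordered

def release_all(held):
    for lock in reversed(held):          # release in the opposite order
        lock.release()

finished = []

def worker(name, wanted_first, wanted_second):
    for _ in range(10_000):
        held = acquire_in_order(wanted_first, wanted_second)
        try:
            pass                         # work needing both resources goes here
        finally:
            release_all(held)
    finished.append(name)

# Thread B names the resources in the opposite order, as in the Thread A /
# Thread B scenario, but acquire_in_order still takes resource_1 first.
a = threading.Thread(target=worker, args=("A", resource_1, resource_2))
b = threading.Thread(target=worker, args=("B", resource_2, resource_1))
a.start()
b.start()
a.join()
b.join()

print(sorted(finished))  # ['A', 'B']
```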

Key Concepts

- Thread Synchronization: Managing how threads communicate and share resources safely.

- Race Condition: An issue that arises when multiple threads manipulate shared data without synchronization, leading to unpredictable results.

- Critical Section: Code sections that must be executed by only one thread at a time to ensure data safety.

- Mutex: A mechanism used to lock critical sections, ensuring only one thread can access the resource.

- Semaphore: A signaling tool that can admit several threads at once while limiting how many use a resource.

- Monitor: A high-level construct that combines a mutex with condition variables for effective synchronization.

- Deadlock: When two or more threads are permanently blocked, waiting for each other to release resources.

Examples & Applications

In a banking application, when two threads try to update the same account balance, a race condition might occur if they modify the value simultaneously, causing an incorrect balance.

Using a mutex to secure the withdrawal function in the banking application ensures that no two threads can access the updating code at once, maintaining data integrity.
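The banking example can be sketched in Python with the standard `threading` module. The `BankAccount` class, its methods and the amounts are hypothetical. Each account owns a mutex. `withdraw` checks the balance and subtracts from it inside the same locked block, so concurrent deposits and withdrawals can never leave the balance inconsistent.

```python
import threading

class BankAccount:
    """Hypothetical account whose balance is guarded by a per-account mutex."""

    def __init__(self, balance):
        self._balance = balance
        self._lock = threading.Lock()

    def deposit(self, amount):
        with self._lock:
            self._balance += amount

    def withdraw(self, amount):
        """Withdraw if funds suffice; return True on success."""
        with self._lock:                 # check and update as one step
            if self._balance < amount:
                return False
            self._balance -= amount
            return True

    def balance(self):
        with self._lock:
            return self._balance

account = BankAccount(balance=1_000)

def teller():
    for _ in range(1_000):
        account.deposit(10)
        account.withdraw(10)

tellers = [threading.Thread(target=teller) for _ in range(8)]
for t in tellers:
    t.start()
for t in tellers:
    t.join()

print(account.balance())  # 1000: every deposit was matched by a withdrawal
```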

Memory Aids

Rhymes

In threading, do not collide, stay clear of data with a mutex guide!

Stories

Once upon a time in Threadland, two threads wanted to share a treasure but kept fighting over it until a wise monitor settled the debate, allowing one at a time to claim their share.

Memory Tools

M-S-C-D for Mutex, Semaphore, Condition, and Deadlock—key terms in synchronization!

Acronyms

R-C-M: Race Condition, Critical Section, Mutex. Remember these for safe threads!

Glossary

- Race Condition

A situation in concurrent computing where the outcome of a program depends on the timing of thread execution, potentially leading to unpredictable behavior.

- Critical Section

A part of the code that accesses shared resources and must not be executed by more than one thread at a time.

- Mutex

A locking mechanism that ensures only one thread can access a shared resource at a time.

- Semaphore

A signaling mechanism to control access to a common resource by multiple threads.

- Monitor

A synchronization construct that combines a mutex with condition variables, allowing threads to wait for conditions.

- Condition Variable

A synchronization primitive that allows threads to wait until a certain condition is satisfied.

- Deadlock

A condition where two or more threads are unable to proceed because each is waiting for resources held by another.
