Thread Contention

Lesson Transcript

A student-teacher conversation explaining the topic in a relatable way.

Understanding Thread Contention

Teacher: All right, class, today we're diving into thread contention. Can anyone tell me what they think thread contention means?

Student: Is it when multiple threads try to do the same job at the same time?

Teacher: Close! It's when multiple threads try to use the same resource at the same time, such as CPU time, memory, or a shared piece of data. Only one can use it at once, so the others must wait. Why do you think this might be a problem?

Student: It could slow down the system if they are all fighting for resources.

Teacher: Yes! That's a great point. High contention can significantly degrade performance.

Impact of Thread Contention on Performance

Teacher: So in terms of performance, why is managing thread contention important?

Student: If threads are constantly waiting for resources, the program might slow down.

Teacher: Correct! Heavy contention reduces throughput and can even make the application unresponsive. This is why efficient thread scheduling is crucial.

Student: What kinds of scheduling strategies can help?

Teacher: Good question! Two common strategies are preemptive and cooperative scheduling. In preemptive scheduling, the OS can interrupt a running thread to give the CPU to another. In cooperative scheduling, each thread runs until it voluntarily yields control.
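Here is a minimal sketch of cooperative scheduling, written in Python with generators standing in for threads. The `worker` and `run_cooperative` names are hypothetical. Each `yield` is a task voluntarily handing control back to a round-robin scheduler. Preemptive scheduling cannot be sketched this way, because there the OS decides when to switch, not the task.

```python
from collections import deque
from typing import Generator, List

# Hypothetical task type: a generator that does one slice of work per step.
Task = Generator[None, None, None]

def worker(name: str, steps: int, log: List[str]) -> Task:
    for i in range(steps):
        log.append(f"{name}:{i}")  # one slice of work
        yield                      # voluntary yield: the "cooperative" part

def run_cooperative(tasks: List[Task]) -> None:
    """Round-robin: run each task until it yields, then move it to the back of the queue."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)             # resume the task until its next yield
        except StopIteration:
            continue               # task finished; drop it
        ready.append(task)         # re-queue behind the other tasks

log: List[str] = []
run_cooperative([worker("A", 2, log), worker("B", 3, log)])
print(log)  # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

The sketch also shows the weakness of the cooperative model. A task that never reaches a `yield` would keep the scheduler, and every other task, waiting indefinitely.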

Scalability and Thread Contention

Teacher: As we scale up the number of threads, what challenge do we face with regard to contention?

Student: It might get harder to manage all the threads and their synchronization.

Teacher: You're spot on. More threads mean more complex synchronization and more lock contention, because more threads end up waiting on the same locks. This directly limits how well an application scales.

Student: So, to keep performance up, we need to balance how we manage threads?

Teacher: Precisely! The right balance keeps threads doing useful work instead of waiting excessively.
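One common way to strike that balance is to cap the number of threads and queue the remaining work, rather than starting one thread per task. Below is a minimal sketch using the standard library's `concurrent.futures.ThreadPoolExecutor`. The pool size of 4 and the `task` function are illustrative assumptions; the best size depends on the workload and the number of cores.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

MAX_WORKERS = 4          # hypothetical pool size; tune to the workload and core count
active = 0               # tasks currently running
peak = 0                 # highest number of tasks ever running at once
counter_lock = threading.Lock()

def task(n: int) -> int:
    global active, peak
    with counter_lock:
        active += 1
        peak = max(peak, active)
    try:
        return sum(range(n))          # stand-in for real work
    finally:
        with counter_lock:
            active -= 1

# 20 tasks share 4 threads instead of each getting its own thread.
with ThreadPoolExecutor(max_workers=MAX_WORKERS) as pool:
    results = list(pool.map(task, range(100, 120)))

print(len(results), peak <= MAX_WORKERS)  # 20 True
```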

Mitigating Thread Contention

Teacher: Now that we've covered the issues related to thread contention, how might we mitigate these problems?

Student: Could lock-free data structures help avoid contention?

Teacher: Absolutely! Lock-free structures avoid lock contention because no thread ever blocks waiting for another to release a lock, although threads can still compete for the same memory. In addition, good scheduling algorithms help spread the load evenly.

Student: What about testing? Can it help us with thread contention?

Teacher: Yes. Race detectors flag unsynchronized access to shared data, and stress tests and profiling can expose contention problems before they affect performance.
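Below is a minimal stress-test sketch in Python, assuming a hypothetical shared counter. Several threads repeat the same read-modify-write, and the test checks whether the final total matches the expected count. The `time.sleep(0)` between the read and the write only makes a harmful interleaving more likely; it does not guarantee one.

```python
import contextlib
import threading
import time

def run_counter(num_threads: int, iterations: int, use_lock: bool) -> int:
    """Stress test: many threads perform the same read-modify-write on one counter."""
    counter = 0
    lock = threading.Lock()

    def increment() -> None:
        nonlocal counter
        for _ in range(iterations):
            # With use_lock=False the guard does nothing, exposing the race.
            guard = lock if use_lock else contextlib.nullcontext()
            with guard:
                current = counter        # read
                time.sleep(0)            # hint to switch threads mid-update
                counter = current + 1    # write back (stale if another thread ran)

    threads = [threading.Thread(target=increment) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter

expected = 4 * 500
print("with lock:", run_counter(4, 500, use_lock=True), "expected:", expected)
print("without lock:", run_counter(4, 500, use_lock=False))  # usually lower; varies per run
```

The locked run always reaches the expected total. The unlocked run typically loses updates, but by how much varies between runs and machines. That is why a single passing run proves little, and why stress tests repeat the operation many times.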

Introduction & Overview

Summary

This section discusses thread contention in multithreaded programs: how it affects performance, and which scheduling and resource-management techniques can mitigate it.

Detailed Summary

In this section, we explore thread contention: the situation in which multiple threads try to access shared resources concurrently, and the resulting competition degrades performance. Understanding contention matters because it tends to worsen as programs scale and the number of threads grows, which makes effective scheduling and resource management critical. We discuss how to manage contention through scheduling strategies, such as preemptive or cooperative scheduling, that allocate resources appropriately among competing threads. We also examine the scalability challenges of higher thread counts, where a balance must be struck in how synchronization, data sharing, and task allocation are handled. Ultimately, addressing thread contention is vital for maintaining performance, responsiveness, and reliability in multithreaded applications.

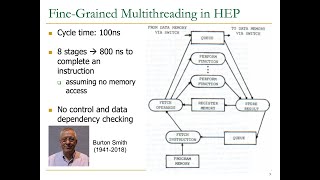

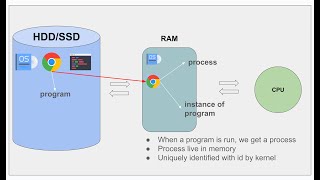

Understanding Thread Contention

Chapter 1 of 3

Chapter Content

Thread Contention: When multiple threads compete for the same resources (e.g., CPU time, memory), performance may degrade. This requires efficient scheduling and resource management strategies.

Detailed Explanation

Thread contention occurs when several threads try to access the same resource, such as memory or CPU time, at the same time. A single-threaded application executes its tasks one after another, so nothing competes. In a multithreaded program, threads run concurrently (in parallel on multiple cores, or interleaved on one), so they can compete for the same resources. When only one thread can use a resource at a time, the others must wait, and that waiting slows the whole program down. Managing contention therefore requires efficient scheduling and resource-management strategies that decide which thread gets access to a resource and when.
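Below is a minimal Python sketch of contention on a single shared resource: a hypothetical counter guarded by one `threading.Lock`. Each thread first tries `acquire(blocking=False)`. If that fails, another thread holds the lock, which is exactly the contention described above. The thread records the event and then blocks until the lock is released.

```python
import threading
from typing import Tuple

def count_contention(num_threads: int, iterations: int) -> Tuple[int, int]:
    """Return (final_total, contended_acquisitions) for a hypothetical shared counter."""
    lock = threading.Lock()
    total = 0
    contended = 0   # times a thread found the lock already held by another thread

    def worker() -> None:
        nonlocal total, contended
        for _ in range(iterations):
            got_it = lock.acquire(blocking=False)   # try without waiting
            if not got_it:
                lock.acquire()                      # lock busy: wait for the holder to release it
            try:
                if not got_it:
                    contended += 1                  # safe: we now hold the lock
                total += 1
            finally:
                lock.release()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total, contended

total, contended = count_contention(num_threads=8, iterations=10_000)
print(f"total={total}, contended acquisitions={contended}")  # contended count varies per run
```

The contended count differs from run to run and grows or shrinks with the number of threads. The final total is always correct, because every update happens while holding the lock. The waiting is the price paid for that correctness.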

Examples & Analogies

Consider a busy restaurant kitchen with a single stove that only one chef can use at a time. If several chefs want to cook at once, they have to wait their turn, and service slows down. Thread contention works the same way: threads must wait for access to a shared resource.

Effects on Performance

Chapter 2 of 3

Chapter Content

Performance may degrade due to thread contention, requiring efficient scheduling and resource management strategies.

Detailed Explanation

Contention slows down each waiting thread, and it also tends to increase context switching. When a thread blocks waiting for a resource, the operating system switches to another thread and later switches back. Each switch costs time (saving and restoring thread state, and often refilling CPU caches), which degrades performance further. Managing contention efficiently is therefore critical, because it can determine the overall responsiveness and efficiency of multithreaded applications.
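A common way to reduce both waiting and the context switches it causes is to keep critical sections short. The sketch below contrasts holding a lock for the whole computation with holding it only for the shared update. A hypothetical `expensive` function stands in for real work. The pattern applies in any language. In CPython, however, the global interpreter lock limits how much parallel speedup pure-Python work can show, so treat this as an illustration of structure rather than a benchmark.

```python
import hashlib
import threading
from typing import Callable, Dict, List

results: Dict[str, str] = {}
results_lock = threading.Lock()

def expensive(item: str) -> str:
    """Hypothetical stand-in for slow work: repeatedly hash the input."""
    digest = item.encode()
    for _ in range(10_000):
        digest = hashlib.sha256(digest).digest()
    return digest.hex()

def process_coarse(item: str) -> None:
    # Lock held during the slow work: every other thread waits the whole time.
    with results_lock:
        results[item] = expensive(item)

def process_fine(item: str) -> None:
    # Slow work done outside the lock; the lock covers only the quick update.
    value = expensive(item)
    with results_lock:
        results[item] = value

def run_all(process: Callable[[str], None], items: List[str]) -> None:
    threads = [threading.Thread(target=process, args=(it,)) for it in items]
    for t in threads:
        t.start()
    for t in threads:
        t.join()

run_all(process_fine, ["alpha", "beta", "gamma", "delta"])
print(sorted(results))  # ['alpha', 'beta', 'delta', 'gamma']
```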

Examples & Analogies

Imagine many users opening and saving documents on the same shared network drive at once. If the server managing the drive cannot handle that many requests simultaneously, users will notice lag, delays, and possibly errors. Resource-management measures, such as limiting the number of active users or optimizing connection protocols, can help mitigate these performance issues.

Resource Management Strategies

Chapter 3 of 3

Chapter Content

This requires efficient scheduling and resource management strategies.

Detailed Explanation

Several scheduling and resource-management strategies can reduce thread contention:

- prioritizing certain threads over others;
- using locks so that only one thread accesses a resource at a time;
- redistributing tasks so that threads conflict less often.

Locks guarantee correct access, but they are themselves a point of contention, so they work best when held only briefly. Together, these strategies aim to minimize the time threads spend waiting for shared resources, which improves overall performance.
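Redistributing work can remove contention entirely. Below is a minimal sketch built around a hypothetical `parallel_sum` task. Instead of every thread adding to one shared, lock-protected total, each thread sums its own slice into a private variable. The partial results are then combined once at the end, so the only synchronization left is waiting for the threads to finish.

```python
import threading
from typing import List

def parallel_sum(values: List[int], num_threads: int = 4) -> int:
    """Sum `values` by giving each thread its own slice and a private running total."""
    partials = [0] * num_threads            # one result slot per thread

    def work(idx: int) -> None:
        local = 0
        for v in values[idx::num_threads]:  # thread idx takes every num_threads-th item
            local += v                      # private variable: no lock, nothing to contend for
        partials[idx] = local               # each thread writes only its own slot

    threads = [threading.Thread(target=work, args=(i,)) for i in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(partials)                    # combine once, after every thread has finished

print(parallel_sum(list(range(1, 101))))   # 5050
```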

Examples & Analogies

Think of a library with limited computers. If several students need to use the computers for research, the librarian might set up a sign-up sheet to schedule who uses the computers and when. This organized approach helps manage computer usage more efficiently, allowing all students access without overwhelming the system.

Key Concepts

- Thread Contention: The competition among multiple threads for the same resources, which can degrade performance.
- Scalability: The ability to handle an increasing number of threads efficiently while maintaining performance.
- Scheduling Strategies: Techniques, such as preemptive and cooperative scheduling, for managing thread execution.

Examples & Applications

A web server handling multiple requests simultaneously may experience thread contention when all requests attempt to access the same database resource at the same time.
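One way to keep such a shared resource from being swamped is to limit how many threads may use it at once. Below is a minimal sketch, assuming a hypothetical `query_database` function and a limit of three concurrent connections. A `threading.BoundedSemaphore` admits at most three request threads, and the rest wait their turn.

```python
import threading
import time
from typing import Dict

MAX_DB_CONNECTIONS = 3                              # hypothetical connection limit
db_slots = threading.BoundedSemaphore(MAX_DB_CONNECTIONS)
stats_lock = threading.Lock()
in_db = 0                                           # requests currently inside the database
peak_in_db = 0
responses: Dict[int, str] = {}

def query_database(request_id: int) -> str:
    """Hypothetical stand-in for a real database call."""
    time.sleep(0.01)
    return f"result-{request_id}"

def handle_request(request_id: int) -> None:
    global in_db, peak_in_db
    with db_slots:                                  # waits here while all slots are in use
        with stats_lock:
            in_db += 1
            peak_in_db = max(peak_in_db, in_db)
        try:
            result = query_database(request_id)
        finally:
            with stats_lock:
                in_db -= 1
    with stats_lock:
        responses[request_id] = result

threads = [threading.Thread(target=handle_request, args=(i,)) for i in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(peak_in_db <= MAX_DB_CONNECTIONS, len(responses))  # True 10
```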

An online gaming application may face performance issues due to thread contention when multiple players' actions trigger updates in a shared game state.
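Below is a minimal sketch of protecting shared game state, using a hypothetical `GameState` class in which players trade gold. A single lock makes each trade's check-and-update atomic, so gold is never duplicated or lost. The same lock also means every player action contends for it. Finer-grained locking would reduce that waiting, at the cost of more complex code.

```python
import random
import threading
from typing import Dict, List

class GameState:
    """Hypothetical shared game state: how much gold each player holds."""

    def __init__(self, gold: Dict[str, int]) -> None:
        self._gold = dict(gold)
        # One lock for the whole state: simple, but every player action contends on it.
        self._lock = threading.Lock()

    def trade(self, src: str, dst: str, amount: int) -> bool:
        with self._lock:                 # check and update as one atomic step
            if self._gold[src] < amount:
                return False             # not enough gold; state unchanged
            self._gold[src] -= amount
            self._gold[dst] += amount
            return True

    def snapshot(self) -> Dict[str, int]:
        with self._lock:
            return dict(self._gold)

state = GameState({"p1": 100, "p2": 100, "p3": 100})
players: List[str] = ["p1", "p2", "p3"]

def player_actions(seed: int) -> None:
    rng = random.Random(seed)            # per-thread random generator
    for _ in range(1000):
        src, dst = rng.sample(players, 2)
        state.trade(src, dst, rng.randint(1, 20))

threads = [threading.Thread(target=player_actions, args=(s,)) for s in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sum(state.snapshot().values()))   # 300: trades move gold but never create or destroy it
```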

Memory Aids

Rhymes

When threads contend, performance may bend; to share resources is the key to mend.

Stories

Imagine a busy restaurant where multiple waiters (threads) try to serve the same table (resource); without a good plan, chaos ensues as each one fights to take their customer's order, leading to frustrated diners (users).

Memory Tools

Remember the acronym 'SMART' for managing thread contention: Schedule, Mitigate, Analyze, Reduce, Test!

Acronyms

SCT

- Schedule threads wisely
- Control access with locks
- Test for contention issues

Glossary

- Thread Contention

A situation where multiple threads compete for the same resources, affecting performance.

- Preemptive Scheduling

A scheduling strategy where the OS can interrupt a running thread to allocate CPU time to another thread.

- Cooperative Scheduling

A scheduling strategy where threads voluntarily yield control to the OS or other threads.

- Scalability

The ability of a system to handle an increasing number of threads without significant performance degradation.
