Multicore

Interactive Audio Lesson

Introduction to Multicore Processors

Today, we're discussing multicore processors. Can anyone start by telling me what they think a multicore processor is?

Is it just a processor with more than one core?

Exactly, a multicore processor contains multiple independent processing units, called cores, that can work on tasks simultaneously. This allows for improved performance.

Why do we need multicore processors?

Great question. As single-core clock speeds ran into physical limits, multicore processors let us keep improving performance without excessive power consumption or heat generation. To remember the main benefits, think PEMP: Performance, Efficiency, Multitasking, and Parallel workloads.

What about the benefits?

Benefits include enhanced performance, energy efficiency, improved multitasking capabilities, and better handling of parallel workloads. Let's summarize: multicore processors boost overall system capabilities.

Core Architecture in Multicore Systems

Moving on to core architecture, can anyone explain symmetric multiprocessing?

Is that when all cores share memory equally?

Yes! In SMP systems, all cores have equal access to shared memory and I/O resources, facilitating efficient parallel processing. And what about asymmetric multiprocessing?

That's where one main core controls everything, right?

Correct! The master core coordinates the system and assigns work, while the other cores handle simpler or more specialized duties. This division of labor helps make good use of system resources. To keep it apart from SMP, remember that the A in AMP stands for Asymmetric: the cores do not play equal roles.

What about heterogeneous multicore systems?

Excellent point! They combine different types of cores, like high-performance and energy-efficient cores, enhancing both performance and power efficiency. The ARM big.LITTLE architecture is a key example.
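To make the big.LITTLE idea concrete, here is a minimal Python sketch of how a scheduler might place work on a heterogeneous chip. Everything in it is hypothetical: the `Task` type, the per-task load estimate, the fixed `HEAVY_THRESHOLD`, and the core names. Real operating-system schedulers use far richer load and energy models; this only shows the basic policy of sending demanding work to big cores and light work to LITTLE cores.

```python
from dataclasses import dataclass

@dataclass
class Task:
    name: str
    load: float  # hypothetical demand estimate, 0.0 (idle) to 1.0 (saturating)

# Hypothetical threshold: tasks above it are treated as performance-critical.
HEAVY_THRESHOLD = 0.6

def place_tasks(tasks, big_cores, little_cores):
    """Assign each task to a core type, round-robin within each cluster."""
    placement = {}
    big_i = little_i = 0
    for task in tasks:
        if task.load > HEAVY_THRESHOLD:
            # Demanding work goes to a high-performance core.
            placement[task.name] = big_cores[big_i % len(big_cores)]
            big_i += 1
        else:
            # Light work goes to an energy-efficient core.
            placement[task.name] = little_cores[little_i % len(little_cores)]
            little_i += 1
    return placement

tasks = [Task("video_encode", 0.9), Task("email_sync", 0.1),
         Task("game_render", 0.8), Task("music_playback", 0.2)]
print(place_tasks(tasks, ["big0", "big1"], ["little0", "little1"]))
# {'video_encode': 'big0', 'email_sync': 'little0', 'game_render': 'big1', 'music_playback': 'little1'}
```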

Parallelism in Multicore Systems

Now let's dive into parallelism in multicore systems. Who can explain what instruction-level parallelism is?

Is it when multiple instructions are executed at the same time?

Precisely! ILP focuses on parallelism within a single instruction stream. And how about task-level parallelism?

That's running different tasks or threads at the same time!

Spot on! That’s where multicore processors shine, as they can execute multiple threads from various processes simultaneously, boosting throughput. And what's the significance of data-level parallelism?

I think it's performing the same operation on multiple pieces of data at once, like in vector processing?

Exactly! SIMD is a classic example of DLP. Let's recap, because these three are easy to mix up: ILP works within a single instruction stream, TLP runs separate threads or tasks at the same time, and DLP applies one operation across many data elements.

Memory Management in Multicore Systems

Next, we need to tackle memory management in multicore systems. What’s the difference between shared and private memory?

Shared memory means all cores can access the same memory, right?

Correct! Shared memory is common in SMP. With private memory, each core has its own local storage, such as its private caches, that other cores cannot access directly. This choice affects how tasks share data. And how do we ensure cache coherence?

By using protocols like MESI?

Absolutely! MESI tracks each cached line as Modified, Exclusive, Shared, or Invalid, so a core never keeps using a stale copy after another core has changed the data. Synchronization is also crucial to maintain data integrity. Can anyone explain the role of locks in this context?

Locks make sure only one thread accesses shared resources at a time.

That’s right! Effective synchronization techniques like locks, semaphores, and atomic operations help prevent data corruption. Remember these methods for managing shared resources!
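A minimal Python sketch of a lock protecting shared data, using the standard `threading` module; the counter and the iteration counts are arbitrary illustration values. In the standard CPython interpreter the global interpreter lock stops threads from running Python code truly in parallel, but a thread can still be switched out between reading and writing `counter`, so the unprotected `counter += 1` is not safe. The lock turns the update into a critical section.

```python
import threading

counter = 0
counter_lock = threading.Lock()

def increment(times):
    global counter
    for _ in range(times):
        # counter += 1 is a read-modify-write, not an atomic step.
        # Holding the lock means only one thread performs it at a time.
        with counter_lock:
            counter += 1

threads = [threading.Thread(target=increment, args=(100_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()  # wait for every thread before reading the result

print(counter)  # 400000 on every run, because every update happened under the lock
```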

Challenges in Multicore Architectures

Lastly, let’s discuss some challenges that multicore architectures face. Who can tell me what Amdahl's Law refers to?

It's about the limitations on speedup due to the sequential parts of a program.

Exactly! However many cores we add, the sequential part caps the achievable speedup, so perfect parallel scaling is out of reach for most programs. What other challenges come up, for example in managing concurrency?

There are issues with synchronization and keeping memory consistent, right?

Very true. Heat dissipation is another concern: packing more cores onto one chip raises power density, and the heat becomes harder to remove. And how do we tackle software scalability on multicore systems?

The software needs to be designed with parallelism in mind to fully benefit from multicore processors.

Fantastic! To conclude, while multicore processors have immense potential, we must navigate these complexities to fully utilize their capabilities.

Introduction & Overview

Standard

This section covers the architecture and functionalities of multicore processors, exploring the intricacies of core intercommunication, parallel task execution, and memory management. Key concepts such as symmetric and asymmetric multiprocessing, thread-level parallelism, and synchronization methods are discussed, alongside the benefits and challenges posed by multicore architectures.

Detailed

Detailed Summary of Multicore Processors

Multicore processors are designed to improve modern computing efficiency by integrating multiple processing units, or cores, onto a single chip. These architectures allow for simultaneous execution of tasks, enhancing performance, power efficiency, and multitasking capabilities. The section begins by defining multicore processors and outlining their motivation, particularly as single-core speeds reach physical limitations.

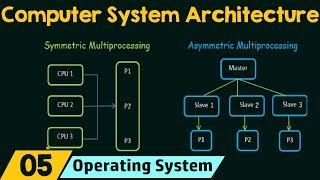

Core Architecture

The architecture of multicore systems includes symmetric multiprocessing (SMP), where all cores share memory, and asymmetric multiprocessing (AMP), where a master core delegates tasks to slave cores. Heterogeneous multicore designs, such as ARM's big.LITTLE, balance performance with power efficiency.

Parallelism in Multicore Systems

Parallelism, a cornerstone of multicore functionality, is explored in various forms: instruction-level parallelism (ILP), task-level parallelism (TLP), and data-level parallelism (DLP). Multithreading keeps processor resources busy by executing several threads concurrently.

Memory Management & Synchronization

The complexity of memory management increases with multiple cores, necessitating effective techniques for cache coherence and memory consistency. Synchronization mechanisms, including locks and semaphores, are essential to avoid data races.
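Cache coherence protocols such as MESI can be pictured as a small state machine per cache line. The Python sketch below is a simplified model, assuming one cache line viewed from one core's cache and four hypothetical event names: the core's own reads and writes, plus reads and writes by other cores that the cache observes (snoops). It leaves out the bus messages and write-back mechanics that real hardware must handle.

```python
from enum import Enum

class State(Enum):
    MODIFIED = "M"
    EXCLUSIVE = "E"
    SHARED = "S"
    INVALID = "I"

def next_state(state, event, others_have_copy=False):
    """Return the new MESI state of one cache line in one core's cache.

    event is one of:
      "local_read", "local_write"   - this core accesses the line
      "remote_read", "remote_write" - another core's access is snooped
    others_have_copy only matters for a read miss (state INVALID).
    """
    if event == "local_read":
        if state is State.INVALID:
            # Read miss: exclusive if no other cache holds the line, else shared.
            return State.SHARED if others_have_copy else State.EXCLUSIVE
        return state  # M, E and S can all satisfy a read locally
    if event == "local_write":
        # The line becomes dirty here; from S or I, other copies are invalidated first.
        return State.MODIFIED
    if event == "remote_read":
        if state in (State.MODIFIED, State.EXCLUSIVE):
            # A dirty (M) line is written back so the other core reads fresh data.
            return State.SHARED
        return state
    if event == "remote_write":
        # Another core takes ownership, so our copy is now stale.
        return State.INVALID
    raise ValueError(f"unknown event: {event}")

# Trace the line in core A's cache:
a = State.INVALID
a = next_state(a, "local_read")    # EXCLUSIVE: A is the only holder
a = next_state(a, "remote_read")   # SHARED: core B now has a copy too
a = next_state(a, "remote_write")  # INVALID: B wrote, A's copy is stale
```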

Communication and Load Balancing

Inter-core communication uses different interconnect architectures (e.g., bus, ring, mesh) to share data efficiently. Load balancing strategies, either static (work assigned up front) or dynamic (work redistributed at runtime), aim to spread tasks evenly across cores.
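The difference between static and dynamic load balancing shows up clearly in a small simulation. This Python sketch assumes task durations are known in advance and that scheduling costs nothing; both are simplifications. "Static" here means splitting the task list into fixed contiguous blocks, and "dynamic" means a shared work queue from which whichever core is idle first takes the next task. The task durations are hypothetical.

```python
import heapq

def static_makespan(durations, cores):
    """Static: split the task list into fixed contiguous blocks up front."""
    per_core = [0] * cores
    block = -(-len(durations) // cores)  # ceiling division
    for i, d in enumerate(durations):
        per_core[i // block] += d
    return max(per_core)

def dynamic_makespan(durations, cores):
    """Dynamic: each idle core pulls the next task from a shared queue."""
    free_at = [0] * cores  # min-heap of times at which each core becomes idle
    heapq.heapify(free_at)
    for d in durations:
        start = heapq.heappop(free_at)  # the earliest-idle core takes the task
        heapq.heappush(free_at, start + d)
    return max(free_at)

# Hypothetical workload: the expensive tasks all sit at the front of the list.
durations = [8, 8, 1, 1, 1, 1, 1, 1]
print(static_makespan(durations, 2))   # 18: core 0 gets 8 + 8 + 1 + 1
print(dynamic_makespan(durations, 2))  # 11
```

With a static split, one core receives both long tasks and the other sits idle for most of the run; the dynamic queue adapts because work is handed out only as cores become free.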

Power Efficiency & Challenges

Multicore processors are generally more power-efficient due to features such as dynamic voltage and frequency scaling (DVFS), yet they come with challenges, including Amdahl’s Law, concurrency issues, and heat dissipation.
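Amdahl's Law is usually written as S(n) = 1 / ((1 - p) + p / n), where p is the fraction of the original run time that can be parallelized and n is the number of cores. A small Python sketch of the formula (the function name `amdahl_speedup` is just illustrative):

```python
def amdahl_speedup(p, n):
    """Upper bound on speedup with n cores when a fraction p of the
    single-core run time can be parallelized (the other 1 - p stays serial)."""
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("need 0 <= p <= 1 and n >= 1")
    return 1.0 / ((1.0 - p) + p / n)

for cores in (1, 2, 8, 64):
    print(cores, round(amdahl_speedup(0.9, cores), 2))
# 1 1.0
# 2 1.82
# 8 4.71
# 64 8.77
```

Even with 90% of the program parallelized, 8 cores give at most about a 4.7x speedup, and no number of cores can push it past 1 / (1 - 0.9) = 10x.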

Future of Multicore Processors

Looking ahead, multicore technology is evolving towards heterogeneous computing and quantum computing, suggesting a trajectory of even more specialized cores within future processors.

Audio Book

Introduction to Multicore Processors

Chapter Content

Multicore processors have become the standard for modern computing, offering improved performance and efficiency by incorporating multiple processing units (cores) on a single chip.

● Definition of Multicore Processors: A multicore processor is a single computing component with multiple independent processing units, known as cores, that can execute tasks in parallel.

● Motivation for Multicore Architectures: As clock speeds of single-core processors approached physical limits, multicore processors were introduced to increase performance without further increasing power consumption or heat dissipation.

● Benefits of Multicore Processing: Enhanced performance, energy efficiency, improved multitasking, and better handling of parallel workloads.

Detailed Explanation

Multicore processors contain multiple cores, which are independent processing units within a single chip. This design lets them handle multiple tasks simultaneously, which can significantly improve performance over a single-core processor when the workload can be divided among the cores. The shift to multicore processors was driven by the stalling growth of single-core clock speeds, which ran into physical limits such as power consumption and heat generation. Multicore architectures also improve multitasking and efficiency, raising overall system performance.
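The power argument can be made concrete with the standard first-order model for dynamic (switching) power, where α is the activity factor, C the switched capacitance, V the supply voltage and f the clock frequency. The numbers below are a hypothetical illustration: two cores each run at half the original frequency, which (as an assumption) allows the supply voltage to drop to 70% of its original value.

```latex
\begin{aligned}
P_{\text{dyn}}     &\approx \alpha C V^{2} f \\
P_{\text{1 core}}  &= \alpha C V^{2} f \\
P_{\text{2 cores}} &\approx 2 \cdot \alpha C (0.7V)^{2} (0.5f) = 0.49\,\alpha C V^{2} f
\end{aligned}
```

Both configurations deliver the same total of f cycles per second, so the two slower cores match the single fast core at roughly half the dynamic power, but only if the work splits evenly across the two cores. The model also ignores static (leakage) power.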

Examples & Analogies

Think of a multicore processor like a team of chefs in a restaurant kitchen. Instead of one chef preparing the entire meal alone (single-core processing), multiple chefs can work together, each handling a separate dish. This way, the entire meal is prepared faster and more efficiently, just like how multicore processors complete multiple computing tasks at once.

Core Architecture in Multicore Systems

Chapter Content

Each core in a multicore processor is essentially an independent processor capable of executing instructions concurrently. This section covers the architecture of multicore systems.

● Symmetric Multiprocessing (SMP): A type of multiprocessing where all cores have equal access to the system’s memory and I/O resources. This setup allows for effective parallel processing.

● Asymmetric Multiprocessing (AMP): A system where one core, known as the master or primary core, controls the tasks while other cores (slaves) handle simpler, auxiliary tasks.

● Heterogeneous Multicore: Systems with cores of different types, such as a combination of high-performance cores and energy-efficient cores, to optimize both performance and power consumption. An example is ARM's big.LITTLE architecture.

● Interconnection of Cores: Cores in a multicore system are connected through a shared system bus, ring architecture, or mesh network, depending on the design. The interconnection affects the performance and efficiency of parallel execution.

Detailed Explanation

In multicore systems, each core functions independently, so the cores can execute tasks at the same time. There are different types of core architectures. In Symmetric Multiprocessing (SMP), all cores have equal access to memory, which helps them work together. In Asymmetric Multiprocessing (AMP), one primary core directs the work while the others assist with simpler tasks. Heterogeneous multicore systems combine cores optimized for different goals, balancing performance and power efficiency. The interconnection between cores, whether a shared bus, a ring, or a mesh network, affects how efficiently they can communicate and collaborate on tasks.
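One simple way to compare interconnects is the worst-case number of hops a message needs between two cores (the network diameter). The Python sketch below assumes a bidirectional ring and a square 2D mesh with shortest-path routing, and ignores link bandwidth and contention. A shared bus needs no hops at all, but only one transfer can use it at a time, which is why it scales poorly as cores are added. The function names are hypothetical.

```python
def ring_diameter(cores):
    """Worst-case hops between two cores on a bidirectional ring."""
    # A message can travel either way round, so it never needs more than half the ring.
    return cores // 2

def mesh_diameter(rows, cols):
    """Worst-case hops on a rows x cols 2D mesh with shortest-path routing."""
    # Corner to opposite corner: every row and every column must be crossed.
    return (rows - 1) + (cols - 1)

for rows, cols in [(2, 2), (4, 4), (8, 8)]:
    n = rows * cols
    print(f"{n} cores: ring {ring_diameter(n)} hops, mesh {mesh_diameter(rows, cols)} hops")
```

For 4, 16 and 64 cores this prints ring distances of 2, 8 and 32 hops against mesh distances of 2, 6 and 14: the ring's worst case grows linearly with core count, while the mesh's grows with its side length, which is one reason larger chips tend to favor mesh-style networks.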

Examples & Analogies

Imagine a factory with different sections. In SMP, each worker operates independently but can access the same tools and materials easily. In AMP, there’s a lead worker assigning tasks, while others work on simpler jobs. Heterogeneous multicore is like having specialized workers: some are very skilled at difficult tasks while others handle routine operations efficiently. Just as efficient layout designs in a factory affect how quickly products are assembled, the way cores are interconnected influences their overall performance.

Parallelism in Multicore Systems

Chapter Content

Parallelism is the ability of a system to perform multiple tasks simultaneously. This section discusses how multicore systems exploit parallelism.

● Instruction-Level Parallelism (ILP): Exploiting parallelism within a single instruction stream (covered in earlier chapters).

● Task-Level Parallelism (TLP): Multiple tasks (or threads) run in parallel. Multicore processors allow these tasks to be executed simultaneously, improving throughput.

● Data-Level Parallelism (DLP): Performing the same operation on multiple data elements concurrently. Examples include vector processing and SIMD (Single Instruction Multiple Data) operations.

● Multithreading: A technique where multiple threads are executed concurrently. Multicore processors can execute multiple threads from the same process or from different processes in parallel.

Detailed Explanation

Parallelism refers to the capability of systems to perform many tasks at once. There are several aspects of parallelism in multicore systems: Instruction-Level Parallelism (ILP) refers to executing multiple operations from a single instruction stream simultaneously. Task-Level Parallelism (TLP) allows different threads or tasks to run at the same time on multiple cores. Data-Level Parallelism (DLP) applies when performing the same action on a list of items concurrently, such as processing images. Multithreading enables multiple threads to execute simultaneously on multicore processors, improving the overall efficiency and speed of programs.
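A minimal Python sketch of task-level parallelism, assuming a CPU-bound workload; `count_primes` and the `limits` values are hypothetical. It uses worker processes from the standard `concurrent.futures` module rather than threads, because in the standard CPython interpreter the global interpreter lock prevents threads from running Python bytecode in parallel. Note that `os.cpu_count()` reports logical CPUs, so on a Hyper-Threading machine it typically counts two per physical core.

```python
import os
from concurrent.futures import ProcessPoolExecutor

def count_primes(limit):
    """A deliberately CPU-bound task: count primes below limit by trial division."""
    count = 0
    for n in range(2, limit):
        if all(n % d for d in range(2, int(n ** 0.5) + 1)):
            count += 1
    return count

if __name__ == "__main__":
    limits = [20_000, 30_000, 40_000, 50_000]
    # Each task runs in its own worker process, so the operating system can
    # schedule them on different cores at the same time.
    with ProcessPoolExecutor(max_workers=os.cpu_count()) as pool:
        results = list(pool.map(count_primes, limits))
    print(dict(zip(limits, results)))
```

The `if __name__ == "__main__":` guard matters here: on platforms where worker processes re-import the main module, it stops each worker from starting a pool of its own.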

Examples & Analogies

Think of parallelism like a construction site where workers carry out tasks simultaneously. Different crews doing different jobs at the same time, such as laying foundations and installing wiring, is like task-level parallelism. Several workers each fitting one of many identical windows at the same time is like data-level parallelism: the same operation applied to many items. Multithreading is akin to parallel teams working on several houses at once. Because the work proceeds simultaneously, the whole project finishes much faster than if each task waited for the previous one to finish.

Thread-Level Parallelism (TLP)

Chapter Content

Thread-Level Parallelism refers to the ability of a processor to run multiple threads concurrently.

● Single-Core vs. Multi-Core TLP: On a single-core processor, multiple threads can be run, but they are executed in a time-sliced manner (context switching). In multicore processors, each core can run a separate thread simultaneously, allowing true parallelism.

● Hyper-Threading: Intel’s Hyper-Threading technology allows a single core to execute multiple threads, simulating multiple logical cores within a physical core. This is different from true multicore processors, but it can still improve performance by better utilizing CPU resources.

Detailed Explanation

Thread-Level Parallelism (TLP) involves executing multiple threads at once. On single-core processors, multiple threads must share the processing time, which is done through context switching, leading to delays. In contrast, multicore processors allow each core to independently handle its own thread. Hyper-Threading is a technology that enables a single core to mimic two logical cores, leading to better utilization of resources but not achieving the full benefits of physical multicore architectures.
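The contrast between time slicing and true parallelism can be simulated in a few lines. This Python sketch assumes a simple round-robin scheduler with a fixed time slice (`quantum`) and ignores the cost of the context switches themselves, which would make the single-core case even slower in practice. The burst lengths are hypothetical CPU times per thread.

```python
from collections import deque

def single_core_round_robin(bursts, quantum):
    """Finish time of each thread when one core time-slices them."""
    remaining = deque(enumerate(bursts))
    clock = 0
    finish = [0] * len(bursts)
    while remaining:
        tid, left = remaining.popleft()
        run = min(quantum, left)
        clock += run  # this thread holds the only core for one time slice
        if left - run > 0:
            remaining.append((tid, left - run))  # preempted: back of the queue
        else:
            finish[tid] = clock
    return finish

def multicore_finish(bursts):
    """Finish times when every thread has a core to itself (true parallelism)."""
    return list(bursts)

bursts = [4, 4, 4]  # hypothetical CPU time each thread needs
print(single_core_round_robin(bursts, quantum=2))  # [8, 10, 12]
print(multicore_finish(bursts))                    # [4, 4, 4]
```

On one core, all three threads make progress but the last one finishes only after 12 time units; with three cores, each finishes after its own 4 units.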

Examples & Analogies

Consider two scenarios. A single chef in a kitchen (single-core) can work on only one dish at any instant, but switches between dishes to keep everything moving (time slicing). With a team of chefs (multicore), each chef focuses on their own dish simultaneously, speeding up the meal. Hyper-Threading is like letting one chef keep two dishes going at once, turning to the second dish whenever the first is waiting (for example, for water to boil), while both dishes still share the same stove and tools. It is not as fast as having more chefs, but it still improves productivity.

Key Concepts

- Multicore Processor: Offers enhanced processing power by integrating multiple cores into one chip.
- Symmetric Multiprocessing (SMP): Every core has equal access to the system's memory.
- Asymmetric Multiprocessing (AMP): A central core manages tasks, while others handle auxiliary tasks.
- Cache Coherence: Ensures consistency of data shared among different cores' caches.
- Amdahl's Law: Shows how the sequential portion of a program limits the speedup that parallelism can deliver.

Examples & Applications

A multicore processor can run video editing software while simultaneously allowing a user to browse the internet, leveraging multiple cores for better multitasking performance.

Using vector processing, a multicore processor can apply a graphical effect across multiple pixels of an image at once, demonstrating data-level parallelism.
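The pixel example can be sketched in Python as data decomposition across cores: the same operation (here a hypothetical brightness boost of 40, clamped to 255) is applied to different chunks of a grayscale image in separate worker processes. This illustrates data-level parallelism at the core level; it is not SIMD, which works on vector registers inside a single core and is not exposed by plain Python. The function names and pixel values are hypothetical.

```python
from concurrent.futures import ProcessPoolExecutor

def brighten(chunk, amount=40):
    """Same operation on every pixel value in the chunk, clamped to 0..255."""
    return [min(255, p + amount) for p in chunk]

def brighten_image(pixels, workers=4):
    if not pixels:
        return []
    # Split the pixel list into roughly equal contiguous chunks, one per worker.
    size = -(-len(pixels) // workers)  # ceiling division
    chunks = [pixels[i:i + size] for i in range(0, len(pixels), size)]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        results = pool.map(brighten, chunks)  # chunks may run on different cores
    # map() returns results in input order, so the image is reassembled correctly.
    return [p for chunk in results for p in chunk]

if __name__ == "__main__":
    image = [0, 100, 200, 250] * 1000  # hypothetical grayscale pixel values
    out = brighten_image(image)
    print(out[:4])  # [40, 140, 240, 255]
```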

Memory Aids

Rhymes

More cores mean better scores; tasks done quick, it's the multicore mix.

Stories

Imagine a concert where each musician represents a core. The more musicians you have, the more harmonious the music, just like multicore processors harmonize tasks for efficiency.

Memory Tools

Remember 'MCP' for Multicore's Capabilities: Multitasking, Control over core functions, and Performance increase.

Acronyms

SMP (Symmetric Multiprocessing) for sharing Memory equally among all cores.

Glossary

- Multicore Processor

A processor that includes multiple independent processing units, or cores, integrated into a single chip.

- Symmetric Multiprocessing (SMP)

A multiprocessing architecture in which all cores have equal access to the system’s memory and I/O resources.

- Asymmetric Multiprocessing (AMP)

A multiprocessing architecture where one primary core controls the tasks while other cores manage simpler, auxiliary tasks.

- Heterogeneous Multicore

A multicore system that incorporates cores of different types, optimizing performance and power consumption.

- Cache Coherence

A mechanism ensuring that all cores have consistent views of shared data within their caches.

- Multithreading

A technique that allows multiple threads to be executed concurrently within a single core or across multiple cores.

- Dynamic Voltage and Frequency Scaling (DVFS)

A power management technique that dynamically adjusts the voltage and frequency of cores based on workload demands.

- Amdahl's Law

A principle stating that the maximum speedup of a program using parallel processing is limited by the portion of the program that must be executed sequentially (that is, cannot be parallelized).
