Single-Core vs. Multi-Core TLP

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Thread-Level Parallelism

Today, we will explore Thread-Level Parallelism, or TLP. Can anyone tell me what TLP means?

Isn't it about running more than one thread at the same time?

Exactly! TLP is the ability of a processor to run multiple threads concurrently, which is crucial for achieving better performance. How do you think TLP differs in Single-Core and Multi-Core processors?

I think Single-Core processors use context switching to run threads one at a time.

And Multi-Core processors can run multiple threads at the same time?

Yes, you both are correct! In Single-Core systems, threads are executed in slices of time. This limits their overall efficiency. Let's explore how this impacts performance...

Single-Core Thread-Level Parallelism

So, in a Single-Core processor, context switching allows multiple threads to appear to run at the same time. It’s like sharing a single cake where everyone takes a slice one after the other. What are the downsides?

It could lead to a lot of overhead?

And maybe slower performance because of waiting times?

Great points! This overhead, due to constant switching, makes Single-Core systems less efficient for multi-threaded applications. Now let's look at how Multi-Core processors can change this.
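
The switching overhead mentioned above can be made concrete with a rough calculation. The sketch below is an illustration, not a measurement: the function name `useful_fraction` and the time-slice and switch-cost figures are assumptions chosen only to show the trend that shorter time slices spend a larger share of the core's time on switching.

```python
def useful_fraction(time_slice_ms: float, switch_cost_ms: float) -> float:
    """Fraction of CPU time spent on thread work rather than on switching.

    Simplified model: every time slice is followed by exactly one
    context switch of fixed cost.
    """
    return time_slice_ms / (time_slice_ms + switch_cost_ms)


# Hypothetical numbers, chosen only for illustration.
for time_slice_ms in (10.0, 1.0, 0.1):
    share = useful_fraction(time_slice_ms, switch_cost_ms=0.01)
    print(f"slice={time_slice_ms} ms -> {share:.1%} useful work")
```

The model ignores indirect costs such as cache contents being displaced by the incoming thread, so real overhead may be higher than this estimate suggests.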

Multi-Core Thread-Level Parallelism

Multi-Core processors allow true parallel execution of threads. Each core can handle its own thread simultaneously. Can anyone explain how this improves performance?

I guess it allows more tasks to be done at the same time without waiting.

Yes, like having a team where everyone works on different jobs at once!

Exactly! This is crucial for tasks like data processing, gaming, and simulations. Now, how does Hyper-Threading fit into this whole picture?

Hyper-Threading Technology

Intel's Hyper-Threading allows a single core to act like two logical cores. What do you think are the benefits and limitations of this?

It probably helps make better use of the core’s resources.

But it’s not the same as real multicore processing, right?

Correct! While it enhances performance, the two logical cores share one physical core's execution resources, so it doesn't match the parallelism of dedicated cores. So, what's the overall takeaway for choosing between Single-Core and Multi-Core for TLP?

Summary of TLP Differences

Let’s summarize what we've learned today about Single-Core and Multi-Core TLP. What key differences did we highlight?

Single-Core uses time-slicing through context switching.

Multi-Core allows for true parallelism by running threads simultaneously.

Hyper-Threading helps a single core act like it's handling more threads.

Excellent summary! Understanding these differences is crucial for optimizing software and hardware applications tailored for threading and parallelism.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

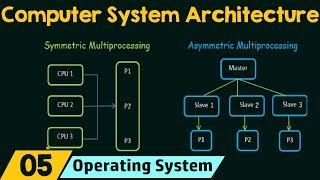

In this section, the differences between Single-Core and Multi-Core Thread-Level Parallelism are explored. Single-Core processors execute threads through context switching, while Multi-Core processors allow true parallel execution by running multiple threads simultaneously, enhancing performance and efficiency in multitasking environments.

Detailed

In the context of Thread-Level Parallelism (TLP), Single-Core systems can run multiple threads, but only in a time-sliced manner: the core switches between threads (context switching), so only one thread executes at any moment. This limits the performance gains available from parallel execution. Conversely, Multi-Core systems have multiple independent cores, enabling truly simultaneous execution of threads. This significantly increases overall throughput and improves performance in multitasking scenarios. Technologies like Intel's Hyper-Threading sit between the two: they let a single physical core present itself as multiple logical cores and run more than one thread at once, although the threads share that core's execution resources, so the gain is smaller than from adding a real core. Understanding these differences is crucial for optimizing computational performance and application development.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Single-Core TLP

Chapter 1 of 3

Chapter Content

On a single-core processor, multiple threads can be run, but they are executed in a time-sliced manner (context switching).

Detailed Explanation

In a single-core processor, there's only one core available to execute tasks. When multiple threads need to be run, the processor switches between these threads rapidly. This is called context switching, where the state of an active thread is saved so the processor can pick up where it left off later. Although this allows multiple threads to appear to run at the same time, only one thread is actually being executed at any one moment.
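
The interleaving described above can be sketched as a small simulation. This is a toy model, not how an operating system scheduler is actually written: the "threads" are plain Python generators, and the names `thread` and `run_round_robin` are hypothetical helpers introduced for illustration. It shows that the single simulated core advances exactly one thread at a time and rotates between them.

```python
from collections import deque


def thread(name, steps):
    """A hypothetical 'thread': a generator that pauses after each unit of work."""
    for step in range(1, steps + 1):
        yield f"{name}:{step}"


def run_round_robin(threads, time_slice=1):
    """Run generators on one simulated core, `time_slice` steps per turn."""
    ready = deque(threads)
    trace = []
    while ready:
        current = ready.popleft()            # "restore" the next thread
        for _ in range(time_slice):
            try:
                trace.append(next(current))  # only this thread makes progress
            except StopIteration:
                break                        # thread finished; do not requeue it
        else:
            ready.append(current)            # "save" it and send it to the back
    return trace


trace = run_round_robin([thread("A", 3), thread("B", 2), thread("C", 1)])
print(trace)
```

Each entry in the printed trace is one unit of work by one thread. The threads take turns, but no two entries ever happen at the same instant, which is the difference between concurrency and true parallelism.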

Examples & Analogies

Think of a single-core processor like a chef in a small kitchen preparing a three-course meal. The chef can only work on one dish at a time. So, he works on the appetizer, then stops to work on the main course, and finally shifts to the dessert. While this allows all three courses to eventually be completed, they are not being worked on simultaneously.

Multi-Core TLP

Chapter 2 of 3

Chapter Content

In multicore processors, each core can run a separate thread simultaneously, allowing true parallelism.

Detailed Explanation

With multicore processors, multiple cores are available for processing tasks. This means that different cores can execute different threads at the same time. True parallelism is achieved here because each core operates independently and runs its own thread; context switching is needed only when there are more runnable threads than cores. This enhances the system's overall performance and throughput.
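
The throughput benefit can be estimated with a simple scheduling model. The sketch below is an idealized illustration under stated assumptions: tasks are independent, each task goes to whichever core becomes free first, and switching and synchronization costs are ignored. The function name `makespan` and the task durations are hypothetical.

```python
import heapq


def makespan(task_times, cores):
    """Finish time when each task goes to whichever core frees up first."""
    if cores < 1:
        raise ValueError("cores must be at least 1")
    free_at = [0.0] * cores                  # time at which each core becomes idle
    heapq.heapify(free_at)
    for duration in task_times:
        start = heapq.heappop(free_at)       # earliest available core
        heapq.heappush(free_at, start + duration)
    return max(free_at)


tasks = [4.0, 4.0, 4.0]  # hypothetical run times, in arbitrary units
print(makespan(tasks, cores=1))
print(makespan(tasks, cores=3))
```

In this model, three equal tasks finish three times sooner on three cores than on one. Adding a fourth core would not help further, because there are only three tasks to run in parallel.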

Examples & Analogies

Imagine a restaurant now equipped with three chefs, where each chef can work on a different dish at the same time. Dish one, two, and three can be prepared all at once, significantly reducing the overall time it takes to complete the meal compared to just one chef having to do it all sequentially.

Hyper-Threading

Chapter 3 of 3

Chapter Content

Intel’s Hyper-Threading technology allows a single core to execute multiple threads, simulating multiple logical cores within a physical core.

Detailed Explanation

Hyper-Threading is a technology developed by Intel that allows a single core in a processor to handle two threads simultaneously. This isn't the same as true multicore processing, but it can still improve performance by making better use of the core's resources. When one thread is waiting for data, the second thread can use execution resources that would otherwise sit idle. This raises the amount of work each core can get through, but because both threads share one core's execution units, the gain is well short of what a second physical core would provide.
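
The idea of filling one thread's waiting time with another thread's work can be shown with a toy cycle count. This is a deliberately simplified model and not a description of how Intel implements Hyper-Threading: it assumes a core with a single execution slot per cycle, and it represents each thread as a string in which `C` is a cycle of computation and `W` is a cycle spent waiting for data. The function names and the example strings are hypothetical.

```python
def cycles_one_at_a_time(threads):
    """Run each thread to completion in turn; wait cycles leave the core idle."""
    return sum(len(ops) for ops in threads)


def cycles_with_smt(threads):
    """Hardware threads share one execution slot per cycle.

    A thread that is waiting ('W') advances without using the slot.
    A thread that wants to compute ('C') advances only if the slot is free.
    """
    position = [0] * len(threads)
    cycles = 0
    while any(p < len(ops) for p, ops in zip(position, threads)):
        slot_free = True
        for i, ops in enumerate(threads):
            if position[i] >= len(ops):
                continue                     # this thread has already finished
            if ops[position[i]] == "W":      # waiting on data: no slot needed
                position[i] += 1
            elif slot_free:                  # compute: claim the single slot
                position[i] += 1
                slot_free = False
        cycles += 1
    return cycles


memory_bound = ["CWWC", "CWWC"]    # hypothetical threads that often wait
compute_bound = ["CCCC", "CCCC"]   # hypothetical threads that never wait
print(cycles_one_at_a_time(memory_bound), cycles_with_smt(memory_bound))
print(cycles_one_at_a_time(compute_bound), cycles_with_smt(compute_bound))
```

In this model the threads that spend time waiting finish sooner when they share the core, while the threads that never wait see no benefit, since they compete for the same execution slot. That matches the point above: the gain comes from using otherwise idle resources, not from adding new ones.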

Examples & Analogies

Think of Hyper-Threading like a single chef who has two assistants. When the chef is busy preparing a complicated dish (one thread), the assistants can help with tasks that don't require the chef's immediate attention (the second thread), allowing the kitchen to function more efficiently without needing an extra chef present.

Key Concepts

- Thread-Level Parallelism (TLP): The ability to run multiple threads simultaneously for improved performance.

- Single-Core vs Multi-Core: Single-Core relies on context switching, while Multi-Core enables true parallel execution.

- Hyper-Threading: A technology that presents a single physical core as multiple logical cores to enhance resource utilization.

Examples & Applications

A Single-Core processor executing multiple threads by switching between them over time, giving the appearance of parallel execution.

A Multi-Core processor running several threads at once, allowing true parallel execution for better performance in applications like gaming or data analysis.

In a multicore system, while one core processes a complex image filtering task, another core can simultaneously handle user input, improving the software's responsiveness.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

Cores that shine, working in line, Multi-Core is better for runtime!

Stories

Imagine a baker who can only bake one cake at a time. That's single-core processing! Now picture a team of bakers, each making their own cake at once. That's multi-core processing!

Memory Tools

To remember TLP's benefits: 'TLP Helps Perfect Tasks' - Think of Threads, Load, Performance.

Acronyms

TLP - Time to Level Up Processing!

Glossary

- Thread-Level Parallelism (TLP)

The capability of a processor to execute multiple threads simultaneously.

- Single-Core Processor

A CPU with a single core that executes one thread at a time, using context switching to share the core among multiple threads.

- Multi-Core Processor

A CPU with multiple cores, allowing it to run multiple threads in parallel.

- Context Switching

The process of saving the state of the running thread and restoring the state of another, so that multiple threads can take turns on a single core.

- Hyper-Threading

Intel's technology that enables a single core to handle multiple threads as if it were two logical processors.
