Interconnection of Cores

Interactive Audio Lesson

Introduction to Interconnection of Cores

Welcome to our discussion on the interconnection of cores in multicore processors. Understanding how these cores connect is essential for appreciating their performance. Can anyone tell me why we need multiple communication methods for cores?

Because different tasks might require different speeds and efficiencies?

Exactly! Different methods cater to various workloads. Now, let’s discuss the first type: the shared system bus. Who can explain how this method works?

In a shared bus system, all cores connect to one bus, right? But that can cause delays if too many cores try to use it at once.

Spot on! This can create bottlenecks. But what do you think might happen in a system that uses a ring architecture instead?

I think since the cores are in a circle, communication might be faster since each core only connects to its neighbors?

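Exactly right! Because each link between neighbors can carry its own traffic, several transfers can happen at once, which reduces the waiting seen on a shared bus. The trade-off is that a message to a distant core must hop through every core in between. Great job! Let's summarize the shared bus and ring architectures before moving on to mesh networks.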

Discussing the Mesh Network

Now that we've talked about shared bus and ring methods, let's dive into mesh networks. What do you think a mesh network looks like, Student_4?

It's like a grid where each core connects to multiple other cores, right? It must help with communication speed.

Exactly! This structure gives data many alternative pathways, so traffic is spread across many links instead of being funneled through one. Can anyone share how that might influence performance during high workloads?

More pathways mean that cores won't get overloaded as easily, so tasks can be completed faster!

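Correct! Strictly speaking, it is the links rather than the cores that become less congested: with traffic spread over many paths, fewer messages wait behind one another, so performance holds up better as the workload grows. Finally, let's summarize our key points on interconnections.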

Introduction & Overview

Standard

The interconnection of cores plays a critical role in multicore processors' performance. This section discusses various interconnection methods, including shared bus, ring architecture, and mesh networks, and how these affect the efficiency of parallel execution within multicore systems.

Detailed

Interconnection of Cores

In multicore processors, cores must effectively communicate with each other to execute tasks in parallel. This communication is facilitated through various interconnection methods, each with distinct implications for performance. The three main interconnection architectures are:

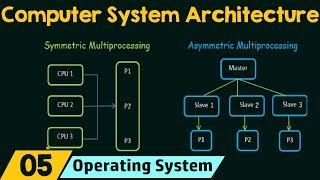

- Shared System Bus: All cores connect to a single bus that acts as a common communication channel. This is simple and cost-effective, but only one transfer can use the bus at a time, so the other cores must wait for access, creating a bottleneck under heavy load.

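- Ring Architecture: Cores are arranged in a circular layout where each core is connected to its two neighbors, and messages travel around the ring from core to core. Because each neighbor-to-neighbor link can carry its own transfer, several messages can be in flight at once, reducing contention compared with a shared bus. However, a message to a distant core must pass through every core in between, so latency grows with the distance around the ring.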

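- Mesh Network: In this more complex structure, cores are interconnected in a grid-like topology, allowing multiple paths for communication. Because traffic is spread over many links and can take alternative routes, a mesh offers more aggregate bandwidth and less congestion than a bus or ring, which matters most under heavy workloads. The cost is more links and more complex routing hardware.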
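To make the distance trade-off between a ring and a mesh concrete, here is a minimal Python sketch that models each topology as a graph and uses breadth-first search to count the minimum number of link traversals ("hops") between cores. It assumes 16 cores, a bidirectional ring, and a 4 x 4 mesh without wraparound links; these sizes and the function names are illustrative choices, not taken from any particular processor. A shared bus is left out because every transfer is a single step on the one shared medium; its cost is contention rather than distance.

```python
from collections import deque


def ring_topology(n):
    """Adjacency list for n cores in a bidirectional ring (illustrative model)."""
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def mesh_topology(rows, cols):
    """Adjacency list for a rows x cols 2D mesh without wraparound links."""
    adj = {}
    for r in range(rows):
        for c in range(cols):
            neighbors = []
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    neighbors.append(nr * cols + nc)
            adj[r * cols + c] = neighbors
    return adj


def hops_from(adj, src):
    """Breadth-first search: minimum hop count from src to every reachable core."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nxt in adj[node]:
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return dist


def max_hops(adj):
    """Worst-case hop count between any two cores (the network diameter)."""
    return max(max(hops_from(adj, s).values()) for s in adj)


def avg_hops(adj):
    """Average hop count over all ordered pairs of distinct cores."""
    total = pairs = 0
    for s in adj:
        for d, h in hops_from(adj, s).items():
            if d != s:
                total += h
                pairs += 1
    return total / pairs


if __name__ == "__main__":
    ring, mesh = ring_topology(16), mesh_topology(4, 4)
    print("ring:", max_hops(ring), round(avg_hops(ring), 2))
    print("mesh:", max_hops(mesh), round(avg_hops(mesh), 2))
```

Under these assumptions the ring's worst case is 8 hops against 6 for the mesh, and the averages are about 4.27 and 2.67 hops. The gap widens with more cores, since ring distance grows roughly in proportion to the core count while mesh distance grows roughly with its square root.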

Understanding these interconnection strategies is vital for optimizing multicore architectures and ensuring efficient parallel execution.

Audio Book

Interconnection Architecture

Chapter 1 of 2

Chapter Content

Cores in a multicore system are connected through a shared system bus, ring architecture, or mesh network, depending on the design.

Detailed Explanation

In a multicore system, the way cores communicate with each other is crucial for performance. This communication can happen through a few different structures: a shared system bus, a ring architecture, or a mesh network, each with its own advantages and drawbacks. A shared bus connects all cores to the same pathway, but this leads to bottlenecks when many cores try to communicate at once. A ring architecture instead connects each core to its two neighbors in a loop; neighboring cores can exchange data in parallel, but a message to a non-adjacent core must pass through every core in between, which increases its travel time. A mesh network connects each core directly to several neighbors (typically up to four in a two-dimensional grid), giving shorter average routes and many alternative paths, at the cost of more complex wiring and routing circuitry.
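The wiring-versus-bandwidth trade-off described above can be quantified with two standard metrics: the number of links (a rough proxy for wiring cost) and the bisection width (how many links cross a cut that splits the cores into two equal halves, which bounds how much traffic can flow between the halves at once). The sketch below is a simplified model, assuming the bus counts as one shared medium and the mesh is square with an even side length; the function name is hypothetical.

```python
import math


def interconnect_cost(n_cores):
    """Link count and bisection width for n_cores arranged as a shared bus,
    a bidirectional ring, or a square 2D mesh (simplified model).

    Assumes n_cores is a perfect square with an even side length so the
    mesh can be cut cleanly into two equal halves."""
    side = math.isqrt(n_cores)
    if side * side != n_cores or side % 2:
        raise ValueError("this sketch assumes a square mesh with an even side")
    return {
        # One shared medium: every transfer, in either half, uses the same wires.
        "bus": {"links": 1, "bisection": 1},
        # Each core links to its next neighbor; any cut into halves severs 2 links.
        "ring": {"links": n_cores, "bisection": 2},
        # side rows with (side - 1) horizontal links each, plus the same number of
        # vertical links; a cut between the two middle columns severs one link per row.
        "mesh": {"links": 2 * side * (side - 1), "bisection": side},
    }


if __name__ == "__main__":
    for n in (16, 64):
        print(n, interconnect_cost(n))
```

For 16 cores this gives 16 links with a bisection width of 2 for the ring versus 24 links with a bisection width of 4 for the mesh; at 64 cores the ring still has a bisection width of 2 while the mesh rises to 8, which is why meshes tend to be preferred at larger core counts despite the extra wiring.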

Examples & Analogies

Imagine a group of friends (the cores) trying to play a game together. If they all have to speak to a single person (the shared bus) to pass messages, it can get crowded and slow down the game. In a ring (ring architecture), each friend has to hand the message around one by one, which can take time if the message has to go around the entire group. In a mesh network, everyone can talk directly to several friends, making it quicker for them to share information and collaborate efficiently.

Impact on Performance and Efficiency

Chapter 2 of 2

Chapter Content

The interconnection affects the performance and efficiency of parallel execution.

Detailed Explanation

The method of interconnecting cores significantly influences how efficiently they can work together. For instance, if too many cores try to use the same pathway at once, they compete for it (this competition is called contention), and the cores that lose must wait, adding delay. A well-designed interconnection minimizes contention and maximizes throughput, allowing cores to execute tasks in parallel more effectively. The right choice of architecture balances the cores' communication needs against their processing capabilities.
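Contention can be illustrated with a deliberately simple link-load model: count how many messages must cross the busiest link (or the single shared bus). If each link carries one message per cycle, that count is a lower bound on the cycles needed to deliver everything. This sketch ignores pipelining and arbitration overhead and assumes shortest-direction routing on the ring with ties sent clockwise; the function names are hypothetical.

```python
from collections import Counter


def ring_path_links(src, dst, n):
    """Directed links used to route src -> dst the shorter way round an
    n-core ring (ties go clockwise)."""
    cw = (dst - src) % n
    step = 1 if cw <= n - cw else -1
    links, node = [], src
    while node != dst:
        nxt = (node + step) % n
        links.append((node, nxt))
        node = nxt
    return links


def busiest_link_load(messages, n, topology):
    """Largest number of messages that must cross any single link or medium.

    With one message per link per cycle, this lower-bounds the cycles needed."""
    if topology not in ("bus", "ring"):
        raise ValueError("topology must be 'bus' or 'ring'")
    load = Counter()
    for src, dst in messages:
        if topology == "bus":
            load["bus"] += 1  # every transfer occupies the one shared bus
        else:
            load.update(ring_path_links(src, dst, n))  # one count per link used
    return max(load.values(), default=0)


if __name__ == "__main__":
    n = 8
    to_neighbor = [(i, (i + 1) % n) for i in range(n)]
    to_opposite = [(i, (i + n // 2) % n) for i in range(n)]
    for name, msgs in (("neighbor", to_neighbor), ("opposite", to_opposite)):
        print(name, busiest_link_load(msgs, n, "bus"), busiest_link_load(msgs, n, "ring"))
```

With 8 cores each sending one message to its neighbor, the bus must serialize all 8 while the ring's busiest link carries only 1. When each core instead sends to the opposite side of the ring, the busiest ring link carries 4 messages: still better than the bus's 8, but it shows that long-distance traffic brings contention back even on a ring.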

Examples & Analogies

Think of a busy highway (the interconnection) during rush hour. If too many cars (data requests) try to merge onto one lane, traffic slows down, and some cars get stuck. However, if there are multiple lanes and exits (a good interconnection design), cars can move more freely, get to their destination faster, and allow more traffic to flow smoothly in parallel.

Key Concepts

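- Shared System Bus: A single communication channel shared by all cores; simple, but prone to bottlenecks because only one transfer can use it at a time.

- Ring Architecture: A circular connection in which each core links to its two neighbors; several neighbor-to-neighbor transfers can proceed at once, reducing contention compared with a bus.

- Mesh Network: A grid layout in which each core links to several neighbors, providing multiple paths and higher aggregate bandwidth between cores.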

Examples & Applications

In a shared system bus configuration, all cores must compete for access to the bus, which can slow down processing during peak loads.

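In a mesh network, if one core fails, traffic can be routed around it over other links (provided the routing logic supports such detours), so the remaining cores can still communicate. This redundancy comes from the multiple paths between cores.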
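The redundancy claim can be checked with a small reachability sketch: treat the mesh as a grid graph, mark some cores as failed, and search for every core still reachable from a given start. It assumes routing is free to detour around failed cores; a simple fixed scheme such as dimension-order (XY) routing would need additional fault-tolerance support to do this. The 4 x 4 size and function names are illustrative.

```python
from collections import deque


def mesh_neighbors(core, rows, cols):
    """Yield the up-to-four grid neighbors of a core in a rows x cols mesh."""
    r, c = divmod(core, cols)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < rows and 0 <= nc < cols:
            yield nr * cols + nc


def reachable(src, rows, cols, failed):
    """Cores reachable from src when messages may detour around failed cores."""
    seen = {src}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nxt in mesh_neighbors(node, rows, cols):
            if nxt not in failed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


if __name__ == "__main__":
    # One interior core (5) fails: the other 15 cores remain connected.
    print(len(reachable(0, 4, 4, failed={5})))
    # Both neighbors of corner core 0 fail: core 0 is cut off.
    print(reachable(0, 4, 4, failed={1, 4}))
```

The second case shows the limit of this redundancy: a corner core has only two links, so losing both neighbors isolates it even in a mesh.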

Memory Aids

Rhymes

In a bus, all must race, sharing the same place, but in a ring they team, running their own dream; mesh it wide, with paths on every side!

Stories

Imagine a town where every house is connected by a single road (shared bus) - traffic gets jammed! Now imagine each house has multiple paths to each other (mesh), or they only talk to the next house over (ring); which one gets better deliveries?

Memory Tools

One Bus, Two Neighbors, Many Paths: This phrase helps you remember the defining feature of bus, ring, and mesh connections.

Acronyms

B.R.M - Bus, Ring, Mesh

Remember the three main connection types for multicore processors.

Glossary

- Shared System Bus

A communication method where all cores connect to a single bus, allowing access to shared resources but potentially causing bottlenecks.

- Ring Architecture

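A circular design in which each core communicates directly with its two immediate neighbors, allowing several neighbor-to-neighbor transfers at once and so reducing the contention of a shared bus, although distant cores are several hops apart.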

- Mesh Network

A grid-like interconnection where cores are linked to multiple others, enhancing bandwidth and performance, particularly under heavy loads.
