Core Architecture in Multicore Systems


Classroom Dialogue

A student-teacher conversation explaining the topic in a relatable way.

Symmetric and Asymmetric Multiprocessing


Let's first talk about Symmetric Multiprocessing, or SMP. In SMP, all cores share the same memory and I/O resources, allowing them to work on tasks in parallel. Why do you think that might be beneficial?

Maybe because it allows better task distribution and faster processing?

Exactly! Now, how does Asymmetric Multiprocessing, or AMP, differ from this?

In AMP, there’s a master core that controls the others, while the other cores do simpler tasks.

Correct! AMP can be useful for specific tasks that require dedicated processing. Let's summarize: SMP is about equal access to resources, and AMP focuses on a master-slave relationship. Any questions?

How do we decide which architecture to use?

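Great question! It depends mostly on the workload. If the work splits into many similar tasks that any core can take, SMP's equal access spreads the load well. If some functions need a dedicated core under the control of a primary core, AMP is often the better fit.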

Heterogeneous Multicore Architectures


Now let's explore Heterogeneous Multicore architectures. What do you think distinguishes them from SMP and AMP?

They use different types of cores, right? Like high-performance and energy-efficient cores?

Exactly! ARM's big.LITTLE is a perfect example. Why do you think it's important to have different types of cores?

It allows for balancing performance with energy efficiency, depending on the task?

Precisely! By matching the core type to the workload, we optimize both performance and power consumption. Let's recap: Heterogeneous architectures offer flexibility by using varied core types for different tasks.

Interconnection of Cores


Finally, let's discuss how cores connect within these architectures. Can anyone name the three common interconnection methods?

There's the shared system bus, ring architecture, and mesh network!

Well done! Each method has its advantages. For instance, the shared bus is simple, but what might be a downside?

It might be a bottleneck if too many cores try to communicate at once.

Exactly! How about the ring architecture?

It's efficient for communication between neighboring cores but slower between distant cores, since messages must pass through the cores in between.

Right again! Mesh networks link each core to several neighbors, which shortens the average distance between cores and provides multiple paths for traffic. Let’s summarize: the choice of interconnection method affects performance and efficiency. Any further questions?

Introduction & Overview


In this section, we explore the architecture of multicore systems, detailing symmetric and asymmetric multiprocessing, heterogeneous multicore designs, and how cores interconnect to enable efficient parallel processing. Each design has its own trade-offs in performance and energy efficiency.


Multicore processors consist of multiple independent processing units, termed cores, capable of executing instructions concurrently. This section elaborates on the core architectures utilized in multicore systems:

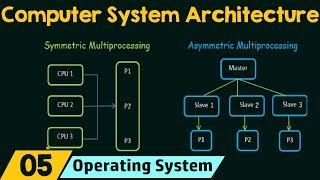

Symmetric Multiprocessing (SMP)

In SMP, all cores have equal access to the system’s shared memory and I/O resources, fostering effective parallel processing. This architecture is beneficial for tasks that can efficiently distribute workloads among all available cores.

Asymmetric Multiprocessing (AMP)

AMP features a primary core that controls task distribution, while subordinate cores handle simpler tasks. This allows workloads to be delegated in a structured way, with each core's role matched to the complexity of the work it receives.

Heterogeneous Multicore Architectures

Heterogeneous systems integrate cores of different types, such as high-performance and energy-efficient cores. An example is ARM's big.LITTLE architecture, which optimizes performance and power consumption by assigning tasks to the most appropriate core type.

Interconnection of Cores

The interconnection topology greatly influences system performance and efficiency. Options for connecting cores include:

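- Shared System Bus: All cores communicate over a single common bus. It is simple to build, but only one transfer can use the bus at a time, so it becomes a bottleneck as core counts grow.

- Ring Architecture: Cores are connected in a circular layout, each linked to two neighbors, so communication between neighboring cores is fast while distant cores need several hops.

- Mesh Network: Cores are arranged in a grid and linked to their nearest neighbors, which provides multiple paths and shorter average distances than a ring, at the cost of more complex wiring.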

Understanding these architectures is essential for optimizing parallel processing capabilities in modern computing systems.


Independent Cores

Chapter 1 of 5


Chapter Content

Each core in a multicore processor is essentially an independent processor capable of executing instructions concurrently.

Detailed Explanation

In a multicore processor, each core functions as its own processor. Cores can work on different tasks at the same time or divide a single task between them. Because each core runs its own instruction stream, several streams are processed simultaneously, which can substantially improve performance over single-core systems when the workload can be parallelized.
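The idea of cores as independent processors can be sketched in Python with the standard `concurrent.futures` module. This is a minimal illustration, not a benchmark: the task functions and `run_independently` are hypothetical names that mirror the kitchen analogy, and threads stand in for cores. In CPython, threads running pure-Python code take turns under the global interpreter lock, so real multicore speedup for such code needs `ProcessPoolExecutor` or native extensions; the pattern of submitting independent work is the same.

```python
from concurrent.futures import ThreadPoolExecutor
import os

# Hypothetical tasks: each is an independent instruction stream
# that shares no data with the others (like chefs on separate dishes).
def make_salad() -> str:
    return "salad"

def cook_steak() -> str:
    return "steak"

def bake_dessert() -> str:
    return "dessert"

def run_independently(tasks):
    """Submit every task at once; the OS may schedule each worker thread on a different core."""
    # os.cpu_count() can return None, so fall back to 1; never ask for 0 workers.
    workers = max(1, min(len(tasks), os.cpu_count() or 1))
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(task) for task in tasks]
        # Collect results in submission order, regardless of finishing order.
        return [f.result() for f in futures]

if __name__ == "__main__":
    print(run_independently([make_salad, cook_steak, bake_dessert]))
```

The tasks may finish in any order, but because results are read from the futures in submission order, the returned list is always `['salad', 'steak', 'dessert']`.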

Examples & Analogies

Consider a team of chefs in a kitchen. Each chef (core) can work on different dishes at the same time. While one chef prepares a salad, another is cooking a steak, and yet another is baking a dessert. Just like in this kitchen, each core can focus on its own task concurrently, which allows the entire meal to be prepared faster.

Symmetric Multiprocessing (SMP)

Chapter 2 of 5


Chapter Content

SMP is a type of multiprocessing where all cores have equal access to the system’s memory and I/O resources. This setup allows for effective parallel processing.

Detailed Explanation

Symmetric Multiprocessing (SMP) means that each core in the multicore processor has the same rights and access to memory and input/output resources. Because of this equality, tasks can be distributed among the cores without any one core becoming a bottleneck. This configuration encourages better load distribution and faster processing overall, as there’s no hierarchy that could slow things down.
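A minimal sketch of the SMP idea, assuming Python threads as stand-ins for cores and ordinary Python variables as the shared memory: every worker runs the same code, pulls the next task from one shared queue, and updates a shared total under a lock. `smp_sum_of_squares` is a hypothetical example function, not a standard API.

```python
import queue
import threading

def smp_sum_of_squares(numbers, num_workers=4):
    """Symmetric workers pull from one shared queue and update shared state."""
    tasks = queue.Queue()
    for n in numbers:
        tasks.put(n)

    total = 0                  # shared memory visible to every worker
    lock = threading.Lock()    # guards updates to shared state
    done_by = {}               # worker id -> number of tasks it handled

    def worker(wid):
        nonlocal total
        handled = 0
        while True:
            try:
                n = tasks.get_nowait()   # any worker may take any task
            except queue.Empty:
                break
            with lock:
                total += n * n
            handled += 1
        with lock:
            done_by[wid] = handled

    # Every worker is identical: no worker coordinates the others.
    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total, done_by

if __name__ == "__main__":
    total, done_by = smp_sum_of_squares(range(1, 101))
    print(total, done_by)
```

Because no worker is special, whichever thread is free takes the next item, so the per-worker counts in `done_by` vary from run to run while the total (338350 for 1 to 100) does not. The lock matters: equal access to shared memory also means every worker can corrupt it without synchronization.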

Examples & Analogies

Imagine a library where each librarian (core) has equal access to all resources. They can independently assist patrons without one librarian waiting for another to finish their task. This efficiency in handling requests is similar to how SMP works in multicore systems, enabling all cores to work on their tasks simultaneously.

Asymmetric Multiprocessing (AMP)

Chapter 3 of 5


Chapter Content

AMP is a system where one core, known as the master or primary core, controls the tasks while other cores (slaves) handle simpler, auxiliary tasks.

Detailed Explanation

In Asymmetric Multiprocessing (AMP), there is a clear distinction in roles among the cores. The primary core oversees task management and coordinates operations, while the other cores are assigned simpler tasks. This can enhance efficiency in specific applications where certain functions require more processing power or management, while others are less critical.
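The master/subordinate split can be sketched the same way, again assuming threads stand in for cores. Here the calling thread plays the primary core: it alone decides which worker receives each job (round-robin, an arbitrary choice for the example) and gathers the results, while each subordinate only processes what arrives in its own queue. `amp_run` and the doubling task are hypothetical.

```python
import queue
import threading

def amp_run(jobs, num_workers=2):
    """A master distributes jobs; subordinate workers only execute what they are sent."""
    inboxes = [queue.Queue() for _ in range(num_workers)]  # one private queue per worker
    results = queue.Queue()                                # workers report back here

    def subordinate(inbox):
        while True:
            job = inbox.get()
            if job is None:                    # sentinel from the master: stop
                break
            job_id, value = job
            results.put((job_id, value * 2))   # a deliberately simple task

    workers = [threading.Thread(target=subordinate, args=(inbox,)) for inbox in inboxes]
    for w in workers:
        w.start()

    # Master role: decide which worker gets which job (round-robin here).
    for job_id, value in enumerate(jobs):
        inboxes[job_id % num_workers].put((job_id, value))
    for inbox in inboxes:
        inbox.put(None)
    for w in workers:
        w.join()

    # Master role: gather the results and restore job order.
    collected = {}
    while not results.empty():
        job_id, out = results.get()
        collected[job_id] = out
    return [collected[i] for i in range(len(jobs))]

if __name__ == "__main__":
    print(amp_run([1, 2, 3, 4, 5]))
```

Workers never choose their own work, which keeps control centralized and predictable, at the cost of making the primary core a potential bottleneck if it cannot hand out jobs fast enough.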

Examples & Analogies

Think of a conductor in an orchestra. The conductor (master core) directs the musicians (slave cores), focusing on leading the performance while the musicians play their assigned parts. This selective division of roles ensures that the entire performance (task processing) runs smoothly while maximizing the strengths of each individual musician.

Heterogeneous Multicore

Chapter 4 of 5


Chapter Content

Heterogeneous multicore systems have cores of different types, such as a combination of high-performance cores and energy-efficient cores, to optimize both performance and power consumption.

Detailed Explanation

Heterogeneous multicore architectures utilize different types of cores within the same processor. Some cores are designed for high performance, handling demanding tasks, while others are optimized for energy efficiency, taking care of simpler, less demanding tasks. This design helps balance power consumption and performance by assigning tasks to the most suitable core, thereby improving the overall efficiency of the system.
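A toy version of this task-to-core matching, assuming made-up speed and power figures for a big and a LITTLE core type (they do not describe any real chip): for each task, pick the core type that uses the least energy while still meeting the task's deadline, and fall back to the fastest core if none can. `CoreType`, `pick_core`, and the numbers are hypothetical; real operating-system schedulers use far richer models.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CoreType:
    name: str
    speed: float   # work units per millisecond (hypothetical figure)
    power: float   # watts while busy (hypothetical figure)

BIG = CoreType("big", speed=4.0, power=2.0)
LITTLE = CoreType("LITTLE", speed=1.0, power=0.25)

def pick_core(work: float, deadline_ms: float, cores=(LITTLE, BIG)) -> CoreType:
    """Choose the lowest-energy core type that still finishes before the deadline."""
    feasible = [c for c in cores if work / c.speed <= deadline_ms]
    if not feasible:
        # Nothing meets the deadline: fall back to the fastest core.
        return max(cores, key=lambda c: c.speed)
    # Energy in millijoules = power (W) * busy time (ms).
    return min(feasible, key=lambda c: c.power * (work / c.speed))

if __name__ == "__main__":
    print(pick_core(5, 10).name)
    print(pick_core(40, 16).name)
```

With these figures, `pick_core(5, 10)` chooses LITTLE (5 ms at 0.25 W is 1.25 mJ, versus 2.5 mJ on big), while `pick_core(40, 16)` must use big because LITTLE would need 40 ms.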

Examples & Analogies

Consider a car designed for different driving conditions. It has a powerful engine for speed on highways (high-performance core) and an eco-mode for city driving to conserve fuel (energy-efficient core). By using the right mode for the right condition, the car optimizes both performance and efficiency, just like heterogeneous multicore systems.

Interconnection of Cores

Chapter 5 of 5


Chapter Content

Cores in a multicore system are connected through a shared system bus, ring architecture, or mesh network, depending on the design. The interconnection affects the performance and efficiency of parallel execution.

Detailed Explanation

The way cores are interconnected in a multicore system is crucial for its performance. Common interconnection methods include a shared system bus, which allows all cores to communicate via a single channel, a ring architecture where each core links directly to two others, and a mesh network that connects each core to multiple others. The chosen architecture can significantly influence the speed at which cores share data and coordinate tasks, impacting the overall effectiveness of parallel processing.
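To make the topology trade-off concrete, the sketch below counts hops (link traversals) between cores on a bidirectional ring and on a 2-D mesh, using Manhattan distance, which is the path length of dimension-ordered (XY) routing. It assumes 16 cores, numbered row by row for the mesh; the function names are hypothetical. A shared bus is left out of the comparison because every transfer is a single hop there; its cost is contention, not distance.

```python
def ring_hops(a: int, b: int, n: int) -> int:
    """Shortest hop count between cores a and b on a bidirectional ring of n cores."""
    d = abs(a - b)
    return min(d, n - d)   # go clockwise or counter-clockwise, whichever is shorter

def mesh_hops(a: int, b: int, width: int) -> int:
    """Hop count between cores a and b on a 2-D mesh with XY routing (Manhattan distance)."""
    ax, ay = a % width, a // width
    bx, by = b % width, b // width
    return abs(ax - bx) + abs(ay - by)

def average_hops(hops, n: int) -> float:
    """Mean hop count over all ordered pairs of distinct cores."""
    pairs = [(a, b) for a in range(n) for b in range(n) if a != b]
    return sum(hops(a, b) for a, b in pairs) / len(pairs)

if __name__ == "__main__":
    n = 16
    print(f"ring, {n} cores: {average_hops(lambda a, b: ring_hops(a, b, n), n):.2f}")
    print(f"4x4 mesh:       {average_hops(lambda a, b: mesh_hops(a, b, 4), n):.2f}")
```

For 16 cores this gives an average of 4.27 hops on the ring and 2.67 on the 4x4 mesh. The gap widens as the core count grows, which is one reason larger many-core chips tend to favor meshes.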

Examples & Analogies

Think of different road systems connecting cities. A single main road that all traffic must share is like a shared bus: simple, but it jams when traffic is heavy. A ring road lets traffic circulate from one city to the next (ring architecture), and a grid of highways offers many routes between cities (mesh network). In the same way, the structure that connects cores determines how quickly and effectively they can communicate and share resources.

Key Concepts

- Symmetric Multiprocessing (SMP): An architecture in which all cores have equal access to shared memory and I/O.

- Asymmetric Multiprocessing (AMP): A structure in which a primary core manages the other cores and assigns them tasks.

- Heterogeneous Multicore: An architecture that combines different core types to balance performance and power consumption.

- Interconnection Methods: The ways cores communicate (shared bus, ring, mesh), which affect system performance and efficiency.

Examples & Applications

In SMP systems running a multi-threaded application, threads on any core can read and write the same shared data, using locks or atomic operations to coordinate updates.

In AMP, a primary core might run the main application and assign a fixed, simpler job, such as polling sensors, to a secondary core that runs only the work it is given.

ARM's big.LITTLE architecture allows a tablet to use a high-performance core for gaming while switching to energy-efficient cores for browsing.

Memory Aids


Rhymes

SMP is fair when cores are aware, AMP takes charge with tasks it can bar; Heterogeneous is the varied star!

Stories

Imagine a factory where different machines work together. Some machines focus on speed, while others save power. This is how heterogeneous multicore architectures function!

Memory Tools

SMP - Same, AMP - Asymmetric, Heterogeneous - Different types together! Remember S, A, D!

Acronyms

CORE: Cores Operate Responsively and Efficiently. Use this acronym to remember the function of cores in these architectures.

Glossary

- Symmetric Multiprocessing (SMP)

An architecture in which all cores have equal access to shared memory and I/O resources.

- Asymmetric Multiprocessing (AMP)

An architecture with a primary core that controls other cores, which handle simpler tasks.

- Heterogeneous Multicore

Systems containing cores of different types designed to optimize performance and power efficiency.

- Interconnection

The method by which cores communicate and share data within a multicore system.
