Challenges in Multicore Architectures


Interactive Audio Lesson


Understanding Amdahl’s Law


Let’s start with Amdahl's Law. This law tells us that the speedup of a task using parallel processing is limited by the portions of the task that cannot be parallelized. Can anyone explain why this is important?

Is it because no matter how many cores we have, the sequential part creates a bottleneck?

Exactly! The performance gain is capped by the time taken to execute those sequential parts. That’s why it’s crucial to minimize the sequential sections of a program whenever possible.

So, if a program is 90% parallelizable, the speedup could never exceed 10 times, no matter how many cores we add?

Correct! With 10% of the work sequential, the speedup can never exceed 1 / 0.1 = 10. Remember this principle! It’s vital to designing effective multicore applications.

Concurrency Issues in Multicore Systems


Next, let's delve into concurrency issues. When multiple threads are executing, what sorts of problems can arise?

There can be issues with data integrity, right? If two threads try to access the same data at the same time, it could lead to errors.

Exactly! This can lead to race conditions. To prevent this, synchronization methods must be employed. Can you name some synchronization techniques?

We learned about locks and semaphores in class!

Good memory! Locks and semaphores are essential for ensuring that threads do not interfere with each other when accessing shared resources.

Heat Management in Multicore Processors


With the addition of more cores, heat dissipation becomes a critical concern. Why do you think this is important?

Because overheating can damage the processor or reduce its efficiency?

Exactly! Efficient thermal management strategies are necessary to ensure that performance is not hindered. What solutions can we explore?

I think using better cooling systems or dynamically adjusting performance to reduce power could help.

Wonderful! Both of those are excellent strategies for managing heat in multicore systems.

Software Scalability Issues


The final topic we need to cover is software scalability. Why is it that some software doesn’t benefit from multicore architectures?

Some older software might be designed for single-core processors, so it can't take advantage of multiple cores.

Great point! Legacy software presents a massive challenge in scaling for multicore environments. What can developers do to overcome these challenges?

They could rewrite it to be parallelized or use frameworks that support multicore processing.

Exactly! Such adaptations are key to leveraging the benefits of multicore processors.

Introduction & Overview


Standard

The section discusses the inherent challenges of multicore architectures, including the limitations imposed by Amdahl's Law, the complexities of managing concurrency, the need for effective heat management due to increased core counts, and the difficulties in scaling software to utilize multicore systems efficiently.

Detailed

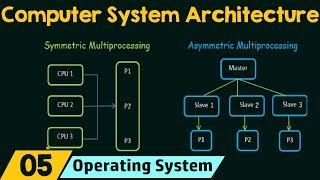

Challenges in Multicore Architectures

While multicore processors enhance computing performance through parallelism, they introduce significant challenges:

- Amdahl’s Law: This law illustrates that the overall speedup of a program using multiple processors is limited by its sequential portion. As such, achieving perfect parallelism is difficult, constraining the potential benefits of multicore systems.

- Concurrency Issues: With multiple threads operating on separate cores, managing synchronization, ensuring memory consistency, and dealing with deadlock becomes increasingly complicated. These factors necessitate careful programming and resource management.

- Heat Dissipation: The addition of more cores leads to greater heat generation, raising concerns about cooling and thermal management. Effective strategies must be implemented to prevent overheating and maintain optimal performance levels.

- Software Scalability: Not all software is designed to leverage the full capabilities of multicore systems. For applications to maximize performance on these architectures, they must be explicitly written to utilize parallel processing, posing challenges for legacy software and certain types of computation.


Audio Book


Understanding Amdahl’s Law

Chapter 1 of 4


Chapter Content

● Amdahl’s Law: Amdahl’s law states that the maximum speedup of a program using multiple processors is limited by the sequential portion of the program. This highlights the difficulty in achieving perfect parallelism.

Detailed Explanation

Amdahl’s Law is a principle used in computer science to predict the theoretical maximum speedup of a task when it runs on multiple processors. It states that, however many processors you add, the overall speedup is limited by the parts of the program that cannot be parallelized. Adding processors shortens only the parallel portion; the sequential portion, which must be completed one step at a time, takes just as long as before. As the processor count grows, the total run time therefore approaches the time of the sequential portion alone, and the speedup levels off at a ceiling instead of growing with every added core.
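A minimal Python sketch of the usual form of Amdahl's Law, S(N) = 1 / ((1 - p) + p / N), where p is the fraction of the single-core run time that can be parallelized and N is the number of cores. The function names are illustrative, not taken from any library.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Theoretical speedup on `cores` cores when `parallel_fraction` of the work parallelizes."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial share always takes the same time; only the parallel share is divided by N.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def amdahl_limit(parallel_fraction: float) -> float:
    """Upper bound on the speedup as the core count grows without limit: 1 / (1 - p)."""
    serial_fraction = 1.0 - parallel_fraction
    return float("inf") if serial_fraction == 0 else 1.0 / serial_fraction


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 64, 1024):
        print(f"{n:>5} cores: {amdahl_speedup(0.9, n):.2f}x")
    print(f"limit: {amdahl_limit(0.9):.2f}x")
```

For p = 0.9, 16 cores give a 6.4x speedup and 1024 cores only about 9.9x: each doubling of the core count buys less, and no core count reaches 10x.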

Examples & Analogies

Imagine a team of cooks in a restaurant. If they are preparing a multi-course meal, they can split tasks like chopping vegetables, cooking, and plating dishes. However, some tasks, like simmering a sauce, need time and cannot be sped up no matter how many cooks you have. Even if you quadruple the number of cooks, the overall time is still constrained by those tasks that cannot run in parallel.

Concurrency Issues

Chapter 2 of 4


Chapter Content

● Concurrency Issues: Managing multiple threads across multiple cores introduces challenges in synchronization, memory consistency, and deadlock management.

Detailed Explanation

When multiple threads run on different cores simultaneously, they often need to share data and resources, which can lead to concurrency issues. Synchronization is required so that two threads do not access the same data at the same time when at least one of them is writing it; otherwise the data can end up inconsistent (a race condition). Memory consistency problems can also arise when one core updates a piece of data but another core does not see this update immediately. Deadlocks occur when two or more threads are each waiting for another to release a resource, so none of them can make progress. Addressing these issues requires careful design and implementation of algorithms.
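To make the synchronization point concrete, here is a minimal sketch using Python's standard `threading.Lock` to protect a shared counter. The class and function names are hypothetical. In standard CPython builds the global interpreter lock means these threads take turns rather than running Python code on separate cores, but the interpreter can still switch threads between the read and the write, so the unprotected version may lose updates; how often it does depends on the interpreter version and scheduling.

```python
import threading


class SharedCounter:
    """A counter that several threads increment concurrently."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def increment_unsafe(self) -> None:
        # Read-modify-write in separate steps: another thread can run between
        # the read and the write, so an update can be lost (a race condition).
        current = self.value
        self.value = current + 1

    def increment_safe(self) -> None:
        # The lock turns the read-modify-write into a critical section:
        # only one thread at a time can execute it.
        with self._lock:
            self.value += 1


def run_workers(counter: SharedCounter, threads: int, increments: int, safe: bool = True) -> int:
    """Start `threads` threads that each increment the counter `increments` times."""
    increment = counter.increment_safe if safe else counter.increment_unsafe

    def worker() -> None:
        for _ in range(increments):
            increment()

    workers = [threading.Thread(target=worker) for _ in range(threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()  # wait for every thread before reading the result
    return counter.value


if __name__ == "__main__":
    print(run_workers(SharedCounter(), threads=4, increments=100_000))  # 400000 with the lock
```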

Examples & Analogies

Consider a group of friends sharing a car. If two friends try to take the steering wheel simultaneously, it can lead to chaos. If they don’t agree on who drives or when to take turns, they might just end up stuck, not going anywhere, much like threads waiting on each other to release resources in deadlock situations.
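One common way to rule out the deadlock described above is lock ordering: every thread acquires the locks it needs in the same global order, so no two threads can each hold a lock the other is waiting for. The sketch below orders locks by `id()` and uses a hypothetical two-account `transfer` as the workload; the names and the workload are illustrative assumptions, not a specific library's API.

```python
import threading
from collections.abc import Iterator
from contextlib import ExitStack, contextmanager


@contextmanager
def ordered_locks(*locks: threading.Lock) -> Iterator[None]:
    """Acquire locks in one global order (by id()) and release them in reverse on exit.

    If every thread takes its locks through this helper, no thread waits for a lock
    that comes earlier in the order than one it already holds, so the circular wait
    behind a deadlock cannot form.
    """
    unique = {id(lock): lock for lock in locks}  # the same lock passed twice is acquired once
    with ExitStack() as stack:
        for key in sorted(unique):
            stack.enter_context(unique[key])
        yield


def transfer(accounts: dict, locks: dict, src: str, dst: str, amount: int) -> None:
    # Callers name the locks as (src, dst) or (dst, src); both orders are
    # normalized, so two opposite transfers cannot block each other forever.
    with ordered_locks(locks[src], locks[dst]):
        accounts[src] -= amount
        accounts[dst] += amount


def run_demo(rounds: int = 10_000) -> dict:
    accounts = {"a": 1_000, "b": 1_000}
    locks = {name: threading.Lock() for name in accounts}

    def move(src: str, dst: str) -> None:
        for _ in range(rounds):
            transfer(accounts, locks, src, dst, 1)

    # Opposite directions: with a naive "lock src, then dst" rule this pattern can deadlock.
    threads = [threading.Thread(target=move, args=("a", "b")),
               threading.Thread(target=move, args=("b", "a"))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return accounts


if __name__ == "__main__":
    print(run_demo())  # balances return to their starting values
```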

Heat Dissipation Challenges

Chapter 3 of 4


Chapter Content

● Heat Dissipation: As more cores are added to a processor, the amount of heat generated increases. Efficient thermal management techniques are required to prevent overheating.

Detailed Explanation

With more cores in a multicore processor, each core generates heat while executing tasks. As the number of cores increases, so does the total heat output of the processor. If not properly managed, this heat can lead to overheating, which can damage components and reduce performance. Efficient thermal management techniques, like heat sinks, fans, and thermal throttling, help to dissipate heat and maintain safe operating conditions.
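Thermal throttling can be sketched as a simple control loop: when a temperature reading crosses a threshold, the clock frequency steps down, because a core running at a lower frequency (and usually a lower voltage) dissipates less power as heat. This is a hypothetical governor; the frequency steps, the temperature thresholds, and the class name are assumptions for illustration, not values from any real processor or operating system.

```python
from dataclasses import dataclass

# Hypothetical frequency steps (GHz) and temperature thresholds (degrees C).
FREQ_STEPS_GHZ = [1.2, 1.8, 2.4, 3.0, 3.6]
THROTTLE_ABOVE_C = 90.0
RECOVER_BELOW_C = 75.0


@dataclass
class ThrottleGovernor:
    level: int = len(FREQ_STEPS_GHZ) - 1  # start at the highest frequency step

    def update(self, temperature_c: float) -> float:
        """Return the frequency for the next interval, given the latest temperature reading."""
        if temperature_c > THROTTLE_ABOVE_C and self.level > 0:
            self.level -= 1  # too hot: step down to cut power and heat
        elif temperature_c < RECOVER_BELOW_C and self.level < len(FREQ_STEPS_GHZ) - 1:
            self.level += 1  # enough thermal headroom: step back up
        # Between the two thresholds, keep the current step.
        return FREQ_STEPS_GHZ[self.level]


if __name__ == "__main__":
    governor = ThrottleGovernor()
    for reading in [70, 92, 95, 93, 85, 72, 70]:
        print(reading, governor.update(reading))
```

The gap between the two thresholds (hysteresis) keeps the governor from flipping back and forth between two frequencies when the temperature hovers near a single cutoff.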

Examples & Analogies

Think of a crowded kitchen with many chefs cooking at the same time; if they all turn on their stoves without proper ventilation, the kitchen will become uncomfortably hot. Just like chefs may need to open windows or use fans to cool down, processors require cooling systems to handle the heat generated from multiple cores working simultaneously.

Software Scalability Issues

Chapter 4 of 4


Chapter Content

● Software Scalability: Not all software can effectively take advantage of multicore architectures. To fully utilize multicore processors, software must be written with parallelism in mind.

Detailed Explanation

Software scalability refers to the ability of software to effectively use the increasing number of cores in multicore processors. Unfortunately, not all applications can take advantage of multicore architectures if they are not designed with parallelism in mind. Programs that rely heavily on sequential processes may need to be rewritten or adjusted to split tasks into smaller parts that can run concurrently across multiple cores. This can be a significant challenge for developers.
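The rewrite described above, splitting work into smaller parts that can run concurrently, can be sketched with Python's standard `concurrent.futures` module. The task (a sum of squares) and the function names are hypothetical; the point is the restructuring: one sequential loop becomes independent chunks plus a short sequential combine step. In standard CPython builds, threads do not run Python bytecode on several cores at once (the global interpreter lock), so for CPU-bound work you would pass `concurrent.futures.ProcessPoolExecutor` as `executor_factory` instead, calling it from code guarded by `if __name__ == "__main__":`.

```python
from collections.abc import Callable, Sequence
from concurrent.futures import Executor, ThreadPoolExecutor


def sum_of_squares_sequential(data: Sequence[int]) -> int:
    # Written for one core: a single loop that nothing can spread across cores.
    total = 0
    for x in data:
        total += x * x
    return total


def split_into_chunks(data: Sequence[int], parts: int) -> list[Sequence[int]]:
    """Split data into at most `parts` contiguous, independent slices."""
    size = max(1, -(-len(data) // parts))  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def sum_of_squares_parallel(
    data: Sequence[int],
    workers: int,
    executor_factory: Callable[[int], Executor] = ThreadPoolExecutor,
) -> int:
    # Each chunk is independent, so chunks can run on different workers;
    # only the final combine step (adding the partial sums) is sequential.
    chunks = split_into_chunks(data, workers)
    with executor_factory(workers) as pool:
        partial_sums = pool.map(sum_of_squares_sequential, chunks)
        return sum(partial_sums)


if __name__ == "__main__":
    numbers = list(range(1_000_000))
    print(sum_of_squares_parallel(numbers, workers=4) == sum_of_squares_sequential(numbers))
```

The combine step is itself a small sequential portion, so by Amdahl's Law it also caps how far this design can scale.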

Examples & Analogies

Imagine organizing a large event where tasks such as setting up, decorating, and catering can be done simultaneously. If you have an event plan that states 'set up before decorating,' you won’t be able to fully utilize all your helpers. For the event to run effectively, you need to assign tasks that can happen at the same time, just as software needs to be designed so that different functions can run at the same time on multiple cores.

Key Concepts

- Amdahl's Law: The performance gain from parallelism is limited by the sequential portion of tasks.

- Concurrency Issues: Problems arising from simultaneous thread execution, such as race conditions.

- Heat Dissipation: The necessity of managing increased heat produced by additional cores.

- Software Scalability: The challenge of ensuring software can utilize multicore processors effectively.

Examples & Applications

A program with 80% parallelizable code can achieve a maximum speedup of 5 times, even with an unlimited number of cores, because the 20% sequential segment still takes the same time (1 / 0.2 = 5), as dictated by Amdahl's Law.
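Plugging the 80% example into Amdahl's Law, S(N) = 1 / ((1 - p) + p/N) with parallel fraction p and N cores, shows how the speedup climbs toward its ceiling of 5 without ever reaching it:

```latex
\begin{aligned}
S(N) &= \frac{1}{(1 - p) + p/N}, \qquad p = 0.8 \\
S(4) &= \frac{1}{0.2 + 0.8/4} = \frac{1}{0.4} = 2.5 \\
S(16) &= \frac{1}{0.2 + 0.8/16} = \frac{1}{0.25} = 4 \\
\lim_{N \to \infty} S(N) &= \frac{1}{1 - p} = \frac{1}{0.2} = 5
\end{aligned}
```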

An application designed without threading runs on a single core, so it gains little or nothing when more processors are available: the additional cores simply sit idle.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

When cores work in a band, heat will rise like the sand.

Stories

Imagine a busy restaurant as a metaphor for multicore processors: as more chefs (cores) enter the kitchen, too many of them trying to prepare the same dish can lead to chaos (concurrency issues), and all their stoves running at once make the kitchen too hot (heat dissipation).

Memory Tools

Remember 'HACS': Heat, Amdahl's Law, Concurrency, Scalability to cover multicore challenges.

Acronyms

Recall 'HACS' for Heat, Amdahl's Law, Concurrency, and Scalability.


Glossary

- Amdahl’s Law

A formula used to find the maximum improvement of a system when only part of the system is improved.

- Concurrency Issues

Challenges that arise when multiple processes are executed simultaneously, including race conditions and deadlocks.

- Heat Dissipation

The process of releasing excess heat generated by the processor to prevent overheating.

- Software Scalability

The ability of software to efficiently utilize additional resources as they become available, especially in a multicore environment.
