Memory Management in Multicore Systems

Interactive Audio Lesson

A student-teacher conversation explaining the topic in a relatable way.

Shared vs. Private Memory

Let's start by discussing memory in multicore systems. There are two main types of memory, shared and private. Can anyone tell me what shared memory means?

Isn't shared memory the kind where all cores can access the same memory space?

Exactly, Student_1! In shared memory systems, like SMP, all cores have access to the same main memory. Now, what about private memory?

I think private memory means that each core has its own cache and memory?

That's right, Student_2! In private memory systems, cores keep their data separate and share it explicitly, through interprocessor communication (IPC) such as message passing. Can anyone think of a scenario where you would use shared memory over private memory?

Maybe in applications where lots of data needs to be accessed by all cores, like in a game engine?

Great example, Student_3! Shared memory is often beneficial for tasks in which cores share a lot of data.

In summary, shared memory gives all cores access to the same data, while private memory gives each core its own space.

Cache Coherence

Next, let's talk about cache coherence. Why might we need a cache coherence protocol in multicore systems?

To ensure that all cores see the same data, right?

Correct, Student_4! If one core updates data in its cache, the other cores must not keep using their old copies; typically those copies are invalidated, so the next read fetches the new value. The MESI protocol is a common way to do this. What does MESI stand for?

Modified, Exclusive, Shared, and Invalid?

Yes! Great recall, Student_1. Each cache line is tagged with one of these states, and the state determines how that cache interacts with the others. Can someone explain what 'invalid' means in this context?

I think it means that a cache line no longer holds usable data, so the core has to fetch a fresh copy the next time it accesses it.

Exactly! Marking stale copies as Invalid is how MESI keeps caches consistent. Remember, cache coherence is essential for correctness in multicore processors, and how efficiently it is maintained has a large effect on performance. Let's summarize: cache coherence protocols like MESI keep cached copies of shared data consistent.

Memory Consistency

Lastly, we need to cover memory consistency. Why is this concept critical in multicore systems?

I think it ensures that memory operations occur in a predictable order across all cores?

Precisely, Student_3! Memory consistency models define how and when updates from different cores become visible. What could happen if these models were not in place?

Programs could behave unpredictably, leading to bugs and race conditions.

Absolutely! Without proper memory consistency, we could run into severe synchronization issues. So to recap, memory consistency ensures that memory operations are visible and ordered in a predictable way.

Introduction & Overview

Standard

Memory management in multicore systems involves coordinating memory access and ensuring data consistency across cores. This section explains the concepts of shared versus private memory, the importance of cache coherence, and how memory consistency models function in multicore architectures.

Detailed

Memory Management in Multicore Systems

In multicore systems, efficient memory management is crucial because multiple cores access memory at the same time. Multicore architectures take two primary approaches to memory:

Shared vs. Private Memory

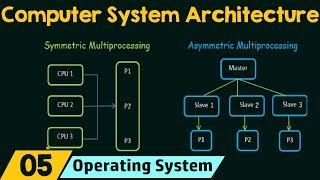

- Shared Memory: All cores can access the same memory space, commonly found in Symmetric Multiprocessing (SMP) systems.

- Private Memory: Each core has its own dedicated cache and memory, and cores exchange data through explicit interprocessor communication (IPC), such as message passing.
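The contrast between the two styles can be sketched in Python. This is a minimal model, not a real multicore setup: threads always share one address space, so the private-memory half only imitates a private-memory system by having each worker touch nothing but its own local variables and report results over a `queue.Queue`, which stands in for IPC. The function names `shared_memory_sum` and `message_passing_sum` are hypothetical.

```python
import queue
import threading


def shared_memory_sum(chunks):
    """Shared-memory style: every worker writes into one list that all threads can see."""
    results = [0] * len(chunks)  # shared data structure, visible to every worker

    def worker(i, chunk):
        # Each worker writes only its own slot, so no two threads update the same element.
        results[i] = sum(chunk)

    threads = [threading.Thread(target=worker, args=(i, c)) for i, c in enumerate(chunks)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(results)  # the main thread reads the shared list directly


def message_passing_sum(chunks):
    """Private-memory style (modeled): workers keep data local and only send messages."""
    mailbox = queue.Queue()  # the only channel between the workers and the main thread

    def worker(chunk):
        local_total = sum(chunk)  # "private" data: no other thread ever reads it
        mailbox.put(local_total)  # explicit communication, standing in for IPC

    threads = [threading.Thread(target=worker, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sum(mailbox.get() for _ in chunks)


data = [[1, 2, 3], [4, 5], [6, 7, 8, 9]]
print(shared_memory_sum(data), message_passing_sum(data))  # 45 45
```

In the shared version, coordination is avoided only because each worker owns a distinct slot; if workers updated the same element, they would need a lock.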

Cache Coherence

Because each core has its own local cache, cache coherence protocols are needed to keep data consistent. When one core modifies data, other cores holding copies of that data must not go on using the old value; in invalidation-based protocols, their copies are marked invalid so that the next access fetches the update. A widely used cache coherence protocol is MESI (Modified, Exclusive, Shared, Invalid).
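To see why a protocol is needed at all, here is a toy model built on simplifying assumptions, not on how real hardware is built: each core's cache is a Python dict in front of a shared `memory` dict, and writes go straight through to memory (real MESI caches are usually write-back). Without coherence, a core that cached a value earlier keeps reading its stale copy. Adding a simple invalidation step, the core idea MESI builds on, forces a re-fetch. The class `ToyCache`, its `coherent` flag, and the `run` helper are hypothetical.

```python
class ToyCache:
    """A per-core cache in front of shared memory (write-through, for simplicity)."""

    def __init__(self, memory, peers, coherent):
        self.memory = memory      # shared main memory (a dict)
        self.peers = peers        # list of all caches in the system
        self.coherent = coherent  # whether writes invalidate other cores' copies
        self.lines = {}           # address -> cached value

    def read(self, addr):
        if addr not in self.lines:           # miss: fetch from main memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]              # hit: may be stale if nobody invalidated it

    def write(self, addr, value):
        self.lines[addr] = value
        self.memory[addr] = value            # write-through to main memory
        if self.coherent:
            for other in self.peers:         # invalidate every other copy of this address
                if other is not self:
                    other.lines.pop(addr, None)


def run(coherent):
    memory = {"x": 0}
    caches = []
    core0 = ToyCache(memory, caches, coherent)
    core1 = ToyCache(memory, caches, coherent)
    caches.extend([core0, core1])
    core1.read("x")          # core 1 caches x = 0
    core0.write("x", 42)     # core 0 updates x
    return core1.read("x")   # what does core 1 see now?


print(run(coherent=False))  # 0  (stale copy)
print(run(coherent=True))   # 42 (copy was invalidated, so core 1 re-fetched)
```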

Memory Consistency

Memory consistency models dictate the order in which memory updates made by one core are observed by other cores. By specifying which orderings of loads and stores a program can observe, they tell programmers what they can rely on and where explicit synchronization is needed, which is essential for correct program execution in a multicore environment.
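A standard way to make consistency models concrete is a litmus test. The sketch below enumerates the outcomes of the classic store-buffering test (core 0 runs `x = 1; r0 = y`, core 1 runs `y = 1; r1 = x`, with `x` and `y` starting at 0) under two models: sequential consistency (SC), where operations take effect one at a time in program order, and a simplified store-buffer model in the spirit of x86-TSO, where a store may sit in its core's buffer until after that core's later load. Under SC, `r0 == r1 == 0` is impossible; with store buffers it becomes possible. The operation encoding and helper names are hypothetical.

```python
from itertools import combinations, product

# Classic store-buffering (SB) litmus test; x and y start at 0.
# Each operation is (core, kind, variable, arg); list order is program order.
CORE0 = [(0, "store", "x", 1), (0, "load", "y", "r0")]
CORE1 = [(1, "store", "y", 1), (1, "load", "x", "r1")]


def interleavings(a, b):
    """Yield every merge of a and b that keeps each list's own order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        it_a, it_b = iter(a), iter(b)
        yield [next(it_a) if i in slots else next(it_b) for i in range(n)]


def run_sc(ops):
    """Sequential consistency: each operation acts on one shared memory immediately."""
    memory, regs = {"x": 0, "y": 0}, {}
    for _core, kind, var, arg in ops:
        if kind == "store":
            memory[var] = arg
        else:
            regs[arg] = memory[var]
    return regs["r0"], regs["r1"]


def with_store_buffer(program):
    """Store-buffer variants: the store's flush to memory may come before or after the load."""
    core, _kind, var, _arg = program[0]  # in this test, the store is each core's first op
    flush = (core, "flush", var, None)
    return [program[:1] + [flush] + program[1:], program + [flush]]


def run_tso(ops):
    """Stores wait in a per-core buffer until flushed; a core's loads check its own buffer first."""
    memory, buffers, regs = {"x": 0, "y": 0}, {0: {}, 1: {}}, {}
    for core, kind, var, arg in ops:
        if kind == "store":
            buffers[core][var] = arg
        elif kind == "flush":
            memory[var] = buffers[core].pop(var)
        else:
            regs[arg] = buffers[core].get(var, memory[var])
    return regs["r0"], regs["r1"]


sc = {run_sc(ops) for ops in interleavings(CORE0, CORE1)}
tso = {
    run_tso(ops)
    for p0, p1 in product(with_store_buffer(CORE0), with_store_buffer(CORE1))
    for ops in interleavings(p0, p1)
}
print(sorted(sc))   # [(0, 1), (1, 0), (1, 1)]
print(sorted(tso))  # [(0, 0), (0, 1), (1, 0), (1, 1)]
```

The extra `(0, 0)` outcome is exactly the kind of behavior a programmer must know about in advance; on x86, a full memory fence between each core's store and load rules it out.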

In summary, memory management in multicore systems is vital for maintaining performance and data integrity by managing shared resources efficiently.

Audio Book

Shared vs. Private Memory

Chapter 1 of 3

Chapter Content

Shared Memory:

All cores in the system have access to the same memory space. This is common in SMP systems, where cores share access to the main memory.

Private Memory:

Each core has its own private cache and memory. Data sharing between cores happens through explicit mechanisms such as interprocessor communication (IPC).

Detailed Explanation

In multicore systems, the way memory is organized can greatly affect performance. There are two main approaches: shared memory and private memory. With shared memory, all processor cores can access the same area of memory. This setup is typical of Symmetric Multiprocessing (SMP) systems; it makes data sharing efficient but requires careful coordination to avoid conflicts when several cores update the same data. With private memory, by contrast, each core has its own separate memory as well as its own cache, and cores share data through explicit interprocessor communication (IPC). Understanding this distinction is important for balancing speed and reliability in multicore systems.
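The "careful coordination" that shared memory requires can be illustrated with the classic lost-update problem. The sketch below is a deterministic model rather than real threads: it breaks `counter += 1` into a load, an add, and a store, and runs two cores' copies in a chosen order. When both cores load before either stores, one increment is lost. A lock or an atomic read-modify-write instruction prevents this by forcing schedules like `serial`, where each increment finishes before another begins. The function name and schedule format are hypothetical.

```python
def run_schedule(schedule):
    """Execute per-core micro-steps in the given order; return the final counter value.

    Each entry is (core, step), where step is "load", "add", or "store".
    Registers are private to each core; `shared` is the memory both cores see.
    """
    shared = {"counter": 0}
    regs = {0: 0, 1: 0}
    for core, step in schedule:
        if step == "load":
            regs[core] = shared["counter"]   # copy the shared value into a private register
        elif step == "add":
            regs[core] += 1
        elif step == "store":
            shared["counter"] = regs[core]   # write the private result back
    return shared["counter"]


# Core 0 finishes its whole increment before core 1 starts: the result is correct.
serial = [(0, "load"), (0, "add"), (0, "store"), (1, "load"), (1, "add"), (1, "store")]

# Both cores load the old value 0 before either stores: one increment is lost.
racy = [(0, "load"), (1, "load"), (0, "add"), (1, "add"), (0, "store"), (1, "store")]

print(run_schedule(serial))  # 2
print(run_schedule(racy))    # 1
```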

Examples & Analogies

Think of shared memory like a communal whiteboard in an office where everyone can write and see information simultaneously. While it’s great for collaboration, it’s important to coordinate who gets to write to avoid confusion. Private memory, on the other hand, is like having individual notebooks. Each person writes down their thoughts and ideas privately, but if they want to share something, they need to communicate it to others explicitly. Both approaches have strengths and weaknesses, and each suits different scenarios.

Cache Coherence

Chapter 2 of 3

Chapter Content

Cache Coherence:

In multicore systems, each core typically has its own local cache. Cache coherence ensures that when one core updates data in its cache, other cores holding copies of the same data do not continue to use the old value: their copies are either invalidated or updated. One of the most common cache coherence protocols is MESI (Modified, Exclusive, Shared, Invalid).

Detailed Explanation

In systems with multiple cores, each core maintaining its own cache can lead to situations where cores hold outdated or conflicting copies of the same data. Cache coherence is the mechanism that addresses this, ensuring that a change made to a piece of data in one core’s cache becomes visible to all other cores that may have cached that data. The MESI protocol is a widely used method for maintaining cache coherence. It assigns each cache line one of four states (Modified, Exclusive, Shared, Invalid) and uses those states to control how changes propagate, for example by invalidating all other copies when a core writes, thus preventing inconsistencies.
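The state changes can be sketched as a small simulation of a snooping, invalidation-based MESI protocol for a single cache line. This is a simplified teaching model under stated assumptions: one shared bus, one line, no timing, and textbook MESI rules (a read miss loads the line as Exclusive if no other cache holds it, otherwise as Shared; a write moves the writer to Modified and invalidates every other copy; a Modified line is written back to memory when another core needs it). The class `MESISystem` and its methods are hypothetical.

```python
class MESISystem:
    """Tracks the MESI state of one cache line in each core's cache."""

    def __init__(self, n_cores, value=0):
        self.memory = value                 # main-memory copy of the line
        self.state = ["I"] * n_cores        # every cache starts Invalid
        self.data = [None] * n_cores        # cached copy per core

    def read(self, core):
        if self.state[core] != "I":         # hit in M, E, or S: no bus traffic
            return self.data[core]
        # Read miss: the other caches snoop the request on the bus.
        others = [c for c, s in enumerate(self.state) if c != core and s != "I"]
        for c in others:
            if self.state[c] == "M":        # the dirty owner writes the line back first
                self.memory = self.data[c]
            self.state[c] = "S"             # M, E, or S becomes S once the line is shared
        self.data[core] = self.memory
        self.state[core] = "S" if others else "E"
        return self.data[core]

    def write(self, core, value):
        # Gaining ownership: every other valid copy is invalidated.
        for c in range(len(self.state)):
            if c != core and self.state[c] != "I":
                if self.state[c] == "M":
                    self.memory = self.data[c]  # flush dirty data before giving up the line
                self.state[c] = "I"
                self.data[c] = None
        self.data[core] = value
        self.state[core] = "M"              # only this cache now holds the (dirty) line


system = MESISystem(n_cores=3)
system.read(0)
print(system.state)                  # ['E', 'I', 'I']
system.read(1)
print(system.state)                  # ['S', 'S', 'I']
system.write(1, 42)
print(system.state)                  # ['I', 'M', 'I']
print(system.read(2), system.state)  # 42 ['I', 'S', 'S']
```

Core 2 never sees a stale 0: core 1's write invalidated the other copies, and core 2's read miss forced the Modified owner to write back 42 before the line was shared.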

Examples & Analogies

Imagine a group of chefs working in a kitchen, each with their own copy of the recipe book. If one chef adjusts a recipe, the other chefs must cross out their old version and pick up the new one; otherwise they will make inconsistent versions of the dish. In the same way that cache coherence protocols like MESI manage the flow of information, a head chef makes sure every update is communicated to everyone, maintaining the quality of the dishes served.

Memory Consistency

Chapter 3 of 3

Chapter Content

Memory Consistency:

Memory consistency ensures that the order in which memory operations are observed across multiple cores is consistent and predictable. Memory consistency models define when, and in what order, updates made to memory by one core become visible to other cores.

Detailed Explanation

Memory consistency is a crucial concept in multicore systems. It deals with how memory operations performed by one core are seen by other cores. A system must have a defined consistency model that specifies how writes and reads of memory are ordered across the cores. This gives programmers a predictable view of the memory state and tells them where synchronization (such as locks or memory fences) is required, reducing the risk of anomalies like reading stale data, where one core observes a different state of memory than another.
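The need for a defined model shows up clearly in the message-passing litmus test: core 0 writes `data = 42` and then `flag = 1`; core 1 reads `flag` and then `data`. The sketch below enumerates every outcome under sequential consistency and under a hypothetical weaker model in which core 0's two stores may become visible in either order. This is a simplification: relaxed architectures such as Arm and POWER can produce the same stale outcome through other reorderings too (for example, of the reader's two loads). The operation encoding and helper names are illustrative assumptions.

```python
from itertools import combinations

# Message-passing (MP) litmus test; data and flag start at 0.
WRITER = [("store", "data", 42), ("store", "flag", 1)]             # core 0, program order
READER = [("load", "flag", "r_flag"), ("load", "data", "r_data")]  # core 1, program order


def interleavings(a, b):
    """Yield every merge of a and b that keeps each list's own order."""
    n = len(a) + len(b)
    for slots in combinations(range(n), len(a)):
        it_a, it_b = iter(a), iter(b)
        yield [next(it_a) if i in slots else next(it_b) for i in range(n)]


def run(ops):
    """Apply operations one at a time to a single shared memory."""
    memory, regs = {"data": 0, "flag": 0}, {}
    for kind, var, arg in ops:
        if kind == "store":
            memory[var] = arg
        else:
            regs[arg] = memory[var]
    return regs["r_flag"], regs["r_data"]


def outcomes(writer_orders):
    """Collect (r_flag, r_data) over every allowed writer order and every interleaving."""
    return {run(ops) for w in writer_orders for ops in interleavings(w, READER)}


sc = outcomes([WRITER])                     # stores become visible in program order
relaxed = outcomes([WRITER, WRITER[::-1]])  # hypothetical model: the two stores may swap
print(sorted(sc))       # [(0, 0), (0, 42), (1, 42)]
print(sorted(relaxed))  # [(0, 0), (0, 42), (1, 0), (1, 42)]
```

The outcome `(1, 0)`, where core 1 sees the flag but stale data, appears only under the weaker model. Placing a fence between the two stores removes the reordered variant (on real hardware the reader's loads must be ordered as well), which leaves only the SC outcomes.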

Examples & Analogies

Consider a group of friends planning an event over a group chat. If one friend mentions that the event is postponed, everyone else should see that message before acting on the plan. If some friends only see the earlier message saying the event is still on, confusion follows. Similarly, memory consistency models define when and in what order cores see each other's memory updates, preventing discrepancies in how they act on shared data.

Key Concepts

- Shared Memory: A common memory space accessible by all cores.

- Private Memory: Each core has its own dedicated memory.

- Cache Coherence: Mechanisms ensuring caches of different cores have consistent data.

- MESI Protocol: A popular protocol for maintaining cache coherence.

- Memory Consistency: Defines the order of memory operations' visibility across cores.

Examples & Applications

In shared memory systems, all cores can quickly access updated data, which makes them efficient for tasks that share data frequently between cores.

In private memory systems, cores run tasks independently, minimizing cache coherence concerns but requiring explicit communication mechanisms.

Memory Aids

Rhymes

When cores share, memory aligns, / Keeping data from different lines.

Stories

Imagine a library with one checkout. Everyone has to share the books or wait for their turn. This is like shared memory. Now think of each person having their own book at home. That's private memory.

Memory Tools

To remember the MESI protocol: "Mice Eat Small Insects" - Modified, Exclusive, Shared, Invalid.

Acronyms

USE C for Memory Management: Understand Shared vs. Private, Cache Coherence, and Consistency.

Glossary

- Shared Memory

A memory architecture where all cores have access to the same memory space.

- Private Memory

A memory architecture where each core has its own cache and memory.

- Cache Coherence

A mechanism ensuring that all caches holding copies of the same data keep those copies consistent.

- MESI Protocol

A cache coherence protocol named after the four states a cache line can be in: Modified, Exclusive, Shared, and Invalid.

- Memory Consistency

Models that dictate the order of visibility for memory operations in a multicore system.
