Gram–Schmidt Orthogonalization Process

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to the Gram–Schmidt Process

Today we will explore the Gram–Schmidt process. This method takes a set of linearly independent vectors and transforms them into an orthonormal set. Why do you think orthonormal vectors are important?

Maybe because they make calculations simpler?

Exactly! Orthonormal vectors simplify many calculations in linear algebra and engineering. Can anyone recall what it means for vectors to be orthonormal?

It means they are orthogonal and each has a unit length, right?

Correct! The first step in the Gram–Schmidt process is to take our first vector and normalize it. Let's denote our first vector as v1 and derive u1.

Establishing the First Vector

To derive u1, we use the formula u1 = v1 / ||v1||. What do you think ||v1|| represents?

Isn't ||v1|| the norm of v1? It's like the length of the vector.

Exactly right! This norm is critical to ensure u1 is of unit length. Once we have u1, we can move on to the next vector in our set.

So, we repeat the process for each vector, right?

Yes! We apply the process iteratively. It's essential to ensure that each new vector we generate is orthogonal to all the previous ones.

Projecting and Creating Orthogonal Vectors

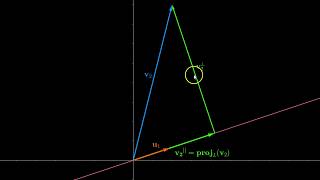

In our Gram–Schmidt process, after normalizing the first vector, we will project the next vector v2 onto u1 and subtract this projection from v2 to get w2. So, the formula is w2 = v2 - ⟨v2, u1⟩u1. Can anyone explain why we subtract this projection?

We subtract it to remove the part of v2 that lies in the direction of u1!

Exactly! This operation guarantees that w2 is orthogonal to u1. We will then normalize w2 to obtain u2. This process continues until we generate all required vectors. How does this method help in structural analysis?

It gives us a well-conditioned basis, which keeps rounding errors small when the structural equations are solved numerically.

Importance of Gram–Schmidt Process

Now that we understand the Gram–Schmidt process, why do you think it is used in numerical algorithms like QR decomposition?

Because it helps to solve systems of equations more efficiently?

Absolutely! Once the columns of Q are orthonormal, the transpose of Q is its inverse, so a system Ax = b with A = QR reduces to the triangular system Rx = Qᵀb, which is solved by back substitution. That is especially useful in simulations. Can anyone summarize the steps involved in the Gram–Schmidt process?

First, we normalize the first vector. Then, for each following vector, we subtract its projections onto the earlier orthonormal vectors and normalize what remains!

Well said! This process is not just theoretical; its application in engineering is significant!

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

The Gram–Schmidt orthogonalization process systematically converts a linearly independent set of vectors into a corresponding orthonormal set by iteratively projecting vectors onto the previously formed orthonormal vectors. This process is crucial in numerical algorithms and applications like QR decomposition in structural analysis.

Detailed

Gram–Schmidt Orthogonalization Process

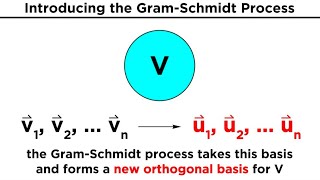

The Gram–Schmidt process is a fundamental algorithm in linear algebra that converts a linearly independent set of vectors {v_1, v_2, ..., v_n} into an orthonormal set of vectors {u_1, u_2, ..., u_n} that spans the same subspace. It proceeds in two steps: normalize the first vector, then orthogonalize and normalize each remaining vector in turn.

Steps:

- Set the first orthonormal vector as follows:

$$ u_1 = \frac{v_1}{\|v_1\|} $$

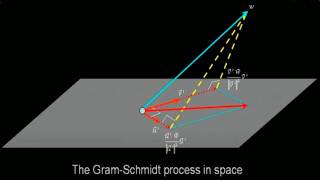

- For each subsequent vector v_k (k = 2, ..., n), subtract its projections onto the previously computed orthonormal vectors, so that the remainder is orthogonal to all of them:

$$ w_k = v_k - \sum_{j=1}^{k-1} \langle v_k, u_j \rangle u_j $$

then normalize the result:

$$ u_k = \frac{w_k}{\|w_k\|} $$
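The two steps above can be sketched in a few lines of Python. This is a minimal illustration of the classical form of the process, assuming real vectors stored as lists and the standard dot product. The names `dot`, `gram_schmidt`, and the tolerance `tol` are illustrative choices, not part of the lesson.

```python
import math

def dot(a, b):
    """Standard inner product of two equal-length real vectors."""
    return sum(x * y for x, y in zip(a, b))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal list u_1..u_n built from v_1..v_n.

    `tol` is an illustrative threshold (an assumption, not from the
    lesson) used to detect linearly dependent input.
    """
    basis = []
    for v in vectors:
        # w_k = v_k - sum_j <v_k, u_j> u_j
        w = list(v)
        for u in basis:
            coeff = dot(v, u)
            w = [wi - coeff * ui for wi, ui in zip(w, u)]
        norm = math.sqrt(dot(w, w))
        if norm < tol:
            raise ValueError("input vectors are not linearly independent")
        # u_k = w_k / ||w_k||
        basis.append([wi / norm for wi in w])
    return basis

u = gram_schmidt([[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
```

After the call, every pair of distinct vectors in `u` should have a dot product close to 0, and every vector should have a norm close to 1, up to floating-point rounding.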

The Gram–Schmidt process matters in engineering because it underpins numerical algorithms such as QR decomposition, which is widely used in structural analysis to solve linear systems stably and accurately.

Audio Book

Introduction to Gram-Schmidt Process

Chapter 1 of 4

Chapter Content

The Gram–Schmidt process is a method for converting a linearly independent set of vectors {v₁,v₂,...,vₙ} into an orthonormal set {u₁,u₂,...,uₙ}.

Detailed Explanation

The Gram-Schmidt process is an essential algorithm in linear algebra that transforms a set of linearly independent vectors into an orthonormal set. This means that the new set of vectors, {u₁, u₂, ..., uₙ}, spans the same space as the original vectors, but each new vector has length 1 and all of them are perpendicular (orthogonal) to each other. Only u₁ is guaranteed to keep the direction of its original vector v₁; the later vectors generally point in new directions so that they can be orthogonal to the earlier ones.

Examples & Analogies

Imagine three roads (representing vectors) that leave a city center at odd angles. The Gram-Schmidt process keeps the direction of the first road, then lays out replacement roads that meet at right angles (orthogonality) and are marked off in the same unit length (normalization). The new grid reaches the same places as the old roads, but navigation is simpler.

Step 1: Defining the First Vector

Chapter 2 of 4

Chapter Content

- Set u₁ = v₁ / ∥v₁∥.

Detailed Explanation

The first step involves defining the first vector of the orthonormal set, u₁. This is done by normalizing the first vector v₁, which means converting it into a unit vector with a length of 1. The notation ∥v₁∥ represents the norm (or length) of v₁. By dividing v₁ by its length, we ensure that u₁ points in the same direction as v₁ but has a length of exactly 1.
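As a small illustration of this step, the sketch below normalizes a vector in plain Python. The function name `normalize` and the sample vector are assumptions chosen for the example, and the Euclidean norm is assumed.

```python
import math

def normalize(v):
    """Return v scaled to unit length: v / ||v||."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        # The zero vector has no direction, so it cannot be normalized.
        raise ValueError("cannot normalize the zero vector")
    return [x / norm for x in v]

v1 = [3.0, 4.0]      # ||v1|| = sqrt(9 + 16) = 5
u1 = normalize(v1)   # same direction as v1, length 1
```

Here `u1` comes out as `[0.6, 0.8]`, which points the same way as `v1` and has length 1.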

Examples & Analogies

Think of it like scaling a tall flagpole down to a standard size while keeping its direction the same. You'd want every flagpole (vector) to look uniform in height (length) but still represent the same location (direction).

Step 2: Defining Subsequent Vectors

Chapter 3 of 4

Chapter Content

- For k = 2 to n, define:

$$ w_k = v_k - \sum_{j=1}^{k-1} \langle v_k, u_j \rangle u_j, \qquad u_k = \frac{w_k}{\|w_k\|} $$

Detailed Explanation

In this step, for each vector vₖ (from the second vector to the nth), we need to create a new vector wₖ that is orthogonal to all previously defined u vectors. To achieve this, we subtract from vₖ its projection onto each of the already defined u vectors. What remains, wₖ, is the part of vₖ that is orthogonal to every earlier vector; because the input is linearly independent, this remainder is never zero. Finally, we normalize wₖ to create uₖ.
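A short check shows why the subtraction produces an orthogonal vector. Assuming a real inner product and that u₁, ..., uₖ₋₁ are already orthonormal (so ⟨uⱼ, uᵢ⟩ is 1 when j = i and 0 otherwise), for any i < k:

$$ \langle w_k, u_i \rangle = \langle v_k, u_i \rangle - \sum_{j=1}^{k-1} \langle v_k, u_j \rangle \langle u_j, u_i \rangle = \langle v_k, u_i \rangle - \langle v_k, u_i \rangle = 0 $$

Only the j = i term of the sum survives, and it cancels the first term exactly.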

Examples & Analogies

Imagine you have an artist painting a landscape. When they add new elements (like trees or mountains), they must ensure that none of these new elements overlap with ones previously painted. By removing overlapping portions, the artist can paint new elements that are distinctly visible, ensuring each part of the landscape stands out.

Application of Gram-Schmidt Process

Chapter 4 of 4

Chapter Content

This process is central to many numerical algorithms like QR decomposition used in structural analysis software.

Detailed Explanation

The Gram-Schmidt process is widely used in numerical methods for solving linear systems, particularly in QR decomposition, where matrices are factored into an orthogonal matrix (Q) and an upper triangular matrix (R). This factorization is crucial in many engineering applications, especially in solving systems of equations and optimizing structural designs.
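The link to QR decomposition can be made concrete with a small sketch. It assumes that the columns of A are the vectors vₖ, the columns of Q are the vectors uₖ, and the entries of R are the projection coefficients ⟨vₖ, uⱼ⟩ above the diagonal and the norms ∥wₖ∥ on it. The function name `qr_gram_schmidt` and the sample matrix are illustrative only, and the code assumes the columns are linearly independent.

```python
import math

def qr_gram_schmidt(columns):
    """Factor A = QR, where `columns` lists the columns of A.

    Returns (q_cols, r): q_cols holds the orthonormal columns of Q and
    r is the upper triangular matrix R stored as a list of rows.
    Assumes linearly independent columns (otherwise a norm is zero).
    """
    n = len(columns)
    q_cols = []
    r = [[0.0] * n for _ in range(n)]
    for k, v in enumerate(columns):
        w = list(v)
        for j, u in enumerate(q_cols):
            r[j][k] = sum(a * b for a, b in zip(v, u))   # <v_k, u_j>
            w = [wi - r[j][k] * ui for wi, ui in zip(w, u)]
        r[k][k] = math.sqrt(sum(x * x for x in w))       # ||w_k||
        q_cols.append([x / r[k][k] for x in w])
    return q_cols, r

a_cols = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]
q_cols, r = qr_gram_schmidt(a_cols)

# Rebuild each column of A as a_k = sum_j R[j][k] * q_j to check A = QR.
rebuilt = [
    [sum(r[j][k] * q_cols[j][i] for j in range(len(q_cols)))
     for i in range(len(a_cols[k]))]
    for k in range(len(a_cols))
]
```

Up to rounding, `rebuilt` should match `a_cols`, and every entry of `r` below the diagonal stays zero, which is what makes R upper triangular.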

Examples & Analogies

Think of QR decomposition as organizing a set of books (linear systems) on a shelf. You want to arrange the books (factors) in a specific order so that they don't fall over (structural stability). By using various organizational methods, like the Gram-Schmidt process, you can ensure that each book has a specific place on the shelf without overlapping, just like ensuring solutions do not overlap in numerical methods.

Key Concepts

- Gram–Schmidt Process: A technique to create an orthonormal basis from a set of linearly independent vectors.

- Orthonormal Basis: A basis composed of vectors that are mutually orthogonal and each normalized to have unit length.

- Projection: A method of calculating the component of one vector in the direction of another vector.

Examples & Applications

If v1 = [1, 0] and v2 = [1, 1], then applying Gram–Schmidt gives u1 = v1 = [1, 0], since v1 already has unit length, and u2 as the normalized version of v2 minus the projection of v2 onto u1.

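Now consider v3 = [0, 1]. Because v3 = v2 - v1, the set {v1, v2, v3} is linearly dependent, so subtracting the projections of v3 onto u1 and u2 leaves the zero vector, which cannot be normalized. This is why Gram–Schmidt requires linearly independent input.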
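The arithmetic for this example can be written out as follows, assuming the standard dot product on two-dimensional real vectors:

$$ u_1 = \frac{v_1}{\|v_1\|} = [1, 0], \qquad \langle v_2, u_1 \rangle = 1 \cdot 1 + 1 \cdot 0 = 1 $$

$$ w_2 = v_2 - \langle v_2, u_1 \rangle u_1 = [1, 1] - [1, 0] = [0, 1], \qquad u_2 = \frac{w_2}{\|w_2\|} = [0, 1] $$

$$ w_3 = v_3 - \langle v_3, u_1 \rangle u_1 - \langle v_3, u_2 \rangle u_2 = [0, 1] - 0 \cdot [1, 0] - 1 \cdot [0, 1] = [0, 0] $$

The remainder w_3 vanishes because u1 and u2 already span the whole plane, so there is no third orthonormal vector to produce.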

Memory Aids

Rhymes

To make vectors clear, their length we must steer, in Gram-Schmidt’s cheer, make orthonormal dear.

Stories

Imagine a group of friends, each representing a vector. They want to group together to form a perfectly balanced team (orthonormal set). They each find ways to modify themselves (normalize) to ensure they complement each other perfectly without conflicts.

Memory Tools

G.O.P.N.E. (Gram-Schmidt: Orthogonal Project and Normalize Every) to remember the steps in order!

Acronyms

O.N.V. (Orthogonality, Norm, Vector)

Key aspects to remember in the Gram–Schmidt process.

Glossary

- Orthonormal Set

A set of vectors that are mutually orthogonal and each of unit length.

- Linearly Independent Vectors

A set of vectors in which no vector can be written as a linear combination of the others.

- Projection

The component of one vector along the direction of another. For a unit vector u, the projection of v onto u is ⟨v, u⟩u.

- Norm

A measure of the length or size of a vector.
