Matrix Approach: Row Reduction


Interactive Audio Lesson


Understanding Row Reduction


Today, we're going to explore how row reduction helps us determine linear independence of vectors. Can anyone remind me what linear independence means?

It means that no vector can be written as a linear combination of the others.

Exactly! Now, when we arrange these vectors as columns of a matrix, what do we do next?

We perform row reduction on the matrix.

Yes! Row reduction tells us how many pivot columns the matrix has. As a memory aid: 'Pivots equal independence!' What do we check with the pivot columns?

If the number of pivot columns is equal to the number of vectors, they are independent!

Great! That’s a critical takeaway.

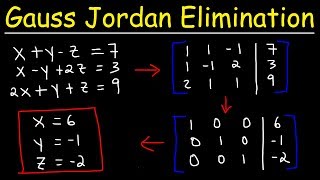

Performing Gaussian Elimination


Let's discuss the steps in Gaussian elimination. Who can summarize how we begin this process?

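We start by using row operations, adding multiples of one row to another, to create zeros below the first pivot.

Correct! We keep applying row operations until the matrix reaches row echelon form, the staircase shape that is upper triangular when the matrix is square. Where do we typically make our first pivot?

In the leftmost column that has a non-zero entry. If its top entry is zero, we first swap in a row where that entry is non-zero!

Exactly! After each operation, we check which entries become our pivots. We can remember this as 'Pivots pick the path'. Who can tell me what the finished row echelon form says about solutions of Ax = 0?

Any column without a pivot gives a free variable, so the system has non-trivial solutions!

Well summarized! Note that a row of zeros at the bottom is not enough on its own: when there are more rows than vectors, zero rows can appear even if every column has a pivot.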

Identifying Linear Dependence


Now that we have our pivot columns, let’s discuss what it means if they are fewer than the vector count. What does that indicate?

It means the vector set is linearly dependent.

Correct! We can recall 'Fewer pivots, more dependency'. Can anyone provide an example where we might see this in practice?

In engineering designs, if structural aspects share dependencies, we might overlook necessary supports.

Exactly! Dependencies can impact the integrity of designs. Let’s remember: 'Independence equals stability'.

Introduction & Overview


Quick Overview


This section discusses how to determine the linear independence of a set of vectors by representing them as a matrix and employing row reduction techniques. The number of pivot columns identifies whether the vectors are independent or dependent.


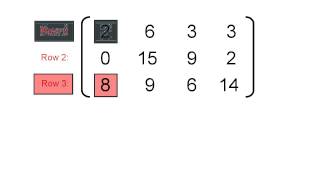

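The matrix approach for assessing linear independence arranges the vectors as the columns of a matrix and applies Gaussian elimination to convert that matrix into a row echelon form (REF). A matrix can have more than one row echelon form, but every REF of it has its pivots in the same columns, so the result of the test does not depend on which row operations are chosen. The method is efficient and straightforward. To determine whether a set of vectors is linearly independent, follow these steps: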

- Form a matrix A with the vectors as columns:

A = [v₁, v₂, ..., vₙ]

where each vector vᵢ is a vector in R^m.

- Perform Gaussian elimination to reduce the matrix A to its row echelon form (REF).

- Count the number of pivot columns in REF.

- If the number of pivot columns equals the number of vectors (n), the set is linearly independent.

- If the number of pivot columns is less than the number of vectors, the set is linearly dependent.

This process provides a systematic method for verifying the linear independence of a set of vectors, which is fundamental throughout linear algebra and its applications, including civil engineering.
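Putting the steps together, a minimal end-to-end sketch in Python might look like the following. It is one possible implementation under stated assumptions, not the course's own code: vectors are given as equal-length lists of integers or fractions, the function names `row_echelon_form` and `is_linearly_independent` are hypothetical, and exact `fractions.Fraction` arithmetic is used so that "is this entry zero?" has an exact answer (with floating-point data a tolerance would be needed instead).

```python
from fractions import Fraction


def row_echelon_form(vectors):
    """Reduce the matrix whose columns are `vectors` to a row echelon form.

    Returns (ref, pivot_cols). Assumes a non-empty list of equal-length vectors.
    """
    m, n = len(vectors[0]), len(vectors)
    if any(len(v) != m for v in vectors):
        raise ValueError("all vectors must lie in the same space R^m")
    # Form A with the vectors as columns, so A[i][j] = vectors[j][i].
    A = [[Fraction(vectors[j][i]) for j in range(n)] for i in range(m)]

    pivot_cols = []
    row = 0                      # index of the row that receives the next pivot
    for col in range(n):
        if row == m:             # every row already has a pivot
            break
        # Find a row at or below `row` with a non-zero entry in this column.
        pivot = next((r for r in range(row, m) if A[r][col] != 0), None)
        if pivot is None:
            continue             # no pivot: this column is free
        A[row], A[pivot] = A[pivot], A[row]          # swap the pivot row into place
        # Gaussian elimination: subtract multiples of the pivot row below it.
        for r in range(row + 1, m):
            factor = A[r][col] / A[row][col]
            A[r] = [a - factor * b for a, b in zip(A[r], A[row])]
        pivot_cols.append(col)
        row += 1
    return A, pivot_cols


def is_linearly_independent(vectors):
    """Count pivot columns and compare with the number of vectors."""
    _, pivot_cols = row_echelon_form(vectors)
    return len(pivot_cols) == len(vectors)


print(is_linearly_independent([[1, 2, 0], [0, 1, 1], [1, 0, 1]]))  # True
print(is_linearly_independent([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # False
```

With four vectors in R³, at most three columns can receive pivots (one per row), so a call such as `is_linearly_independent([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]])` returns `False`.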


Audio Book


Introduction to Row Reduction

Chapter 1 of 3


Chapter Content

Let $\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n$ be vectors in $\mathbb{R}^m$, and form the matrix:

$$A = \begin{bmatrix} \vec{v}_1 & \vec{v}_2 & \cdots & \vec{v}_n \end{bmatrix}$$

Detailed Explanation

In this section, we begin with a set of vectors $\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n$ in m-dimensional real space $\mathbb{R}^m$. We organize these vectors into a matrix A in which each vector forms one column, so A has m rows and n columns. This matrix is the starting point for determining whether the vectors are linearly independent.
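As a minimal Python sketch (assuming vectors are plain lists of numbers; the helper name `columns_to_matrix` is hypothetical, not from the lesson), arranging vectors as columns amounts to transposing the list of vectors:

```python
def columns_to_matrix(vectors):
    """Arrange vectors v1..vn (each of length m) as the columns of an m x n matrix.

    The matrix is returned as a list of m rows, each with n entries.
    """
    if not vectors:
        raise ValueError("need at least one vector")
    m = len(vectors[0])
    if any(len(v) != m for v in vectors):
        raise ValueError("all vectors must lie in the same space R^m")
    # zip(*vectors) groups the i-th component of every vector into row i.
    return [list(row) for row in zip(*vectors)]


A = columns_to_matrix([[1, 2, 3], [4, 5, 6]])
print(A)  # [[1, 4], [2, 5], [3, 6]], a 3 x 2 matrix (m = 3, n = 2)
```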

Examples & Analogies

Think of these vectors as different ingredients in a recipe, and forming the matrix is like arranging these ingredients neatly on a table for cooking. Just as you need to know what ingredients (vectors) you have before starting a dish (solving a problem), we need to arrange our vectors into a matrix to analyze them.

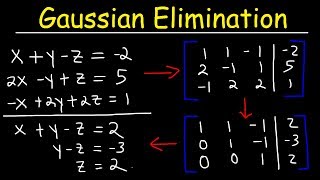

Gaussian Elimination

Chapter 2 of 3


Chapter Content

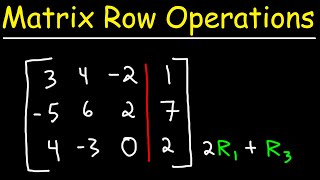

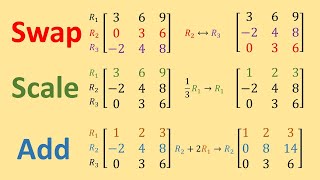

Steps:

1. Perform Gaussian elimination to reduce A to its row echelon form (REF).

Detailed Explanation

Gaussian elimination is a method used to simplify the matrix A into a form known as row echelon form (REF). In REF, all non-zero rows are above any rows of all zeros, and the leading coefficient (or pivot) of a non-zero row is always to the right of the leading coefficient of the previous row. This step is crucial as it allows us to easily identify dependencies among the vectors.
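To make the two REF conditions concrete, here is a small checker in Python. The names `leading_index` and `is_row_echelon` are illustrative, not from the lesson; the sketch assumes a matrix stored as a list of rows and uses exact zero tests, so it is meant for integer or `Fraction` entries rather than floating-point data.

```python
def leading_index(row):
    """Column index of the first non-zero entry, or None for a zero row."""
    return next((j for j, x in enumerate(row) if x != 0), None)


def is_row_echelon(M):
    """Check both REF conditions for a matrix given as a list of rows."""
    seen_zero_row = False
    prev_lead = -1
    for row in M:
        lead = leading_index(row)
        if lead is None:
            seen_zero_row = True   # zero rows must all sit at the bottom
            continue
        if seen_zero_row:
            return False           # a non-zero row appears below a zero row
        if lead <= prev_lead:
            return False           # pivot is not strictly right of the one above
        prev_lead = lead
    return True
```

For example, `[[1, 4, 7], [0, -3, -6], [0, 0, 0]]` satisfies both conditions, while `[[0, 0, 0], [1, 2, 3]]` fails because a non-zero row sits below a zero row. Because each leading entry must lie strictly to the right of the one above, the entries below every pivot are automatically zero.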

Examples & Analogies

Imagine you are sorting books on a shelf according to their height; taller books go on the top shelves and shorter ones below. This organization helps you quickly identify which books are stacked together and which are separate. Similarly, row reduction organizes the matrix to identify linear dependencies.

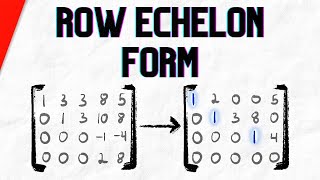

Count Pivot Columns

Chapter 3 of 3


Chapter Content

Count the number of pivot columns:

- If number of pivot columns = number of vectors → linearly independent.

- If number of pivot columns < number of vectors → linearly dependent.

Detailed Explanation

After reducing the matrix to REF, the next step is to count the pivot columns. A pivot column is a column that contains a pivot, that is, the leading (first non-zero) entry of some row of the REF; in reduced row echelon form these pivots are scaled to 1. If the number of pivot columns equals the number of vectors, every column has a pivot, so the homogeneous system Ax = 0 has no free variables and only the trivial solution; the vectors are therefore linearly independent. If there are fewer pivot columns than vectors, at least one variable is free, so Ax = 0 has a non-trivial solution and at least one vector can be expressed as a linear combination of the others; the set is linearly dependent.
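Once a matrix is in REF, counting pivots needs no further arithmetic: each non-zero row contributes exactly one pivot. The sketch below assumes its input is already in row echelon form (a list of rows); the function names `pivot_columns` and `classify` are hypothetical. The sample REF was obtained by hand from the columns (1, 2, 3), (4, 5, 6), (7, 8, 9) using R₂ - 2R₁, R₃ - 3R₁, then R₃ - 2R₂.

```python
def pivot_columns(ref):
    """Pivot column indices of a matrix that is already in row echelon form.

    In REF the first non-zero entry of each non-zero row is a pivot,
    so the pivot columns are exactly those leading positions.
    """
    pivots = []
    for row in ref:
        lead = next((j for j, x in enumerate(row) if x != 0), None)
        if lead is not None:      # zero rows contribute no pivot
            pivots.append(lead)
    return pivots


def classify(ref):
    """Compare the pivot count with the number of columns (vectors)."""
    n_vectors = len(ref[0])
    n_pivots = len(pivot_columns(ref))
    return "linearly independent" if n_pivots == n_vectors else "linearly dependent"


ref = [[1, 4, 7],
       [0, -3, -6],
       [0, 0, 0]]
print(pivot_columns(ref))  # [0, 1]
print(classify(ref))       # linearly dependent
```

A zero row does not by itself mean dependence: for two vectors in R⁴ whose REF is `[[1, 0], [0, 1], [0, 0], [0, 0]]`, both columns have pivots and `classify` reports them as linearly independent.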

Examples & Analogies

Consider a classroom where each student represents a vector. If each student (vector) has a unique point of view (pivot) in a discussion (the matrix), then all opinions are independent. However, if multiple students end up saying similar things (fewer pivots), this indicates redundancy in their contributions (dependencies).

Key Concepts

- Row Reduction: The process of transforming a matrix into row echelon form using elementary row operations, used here to assess linear independence.

- Linear Independence: A set of vectors is independent if no vector can be expressed as a linear combination of the others.

- Pivot Columns: Columns that contain a pivot in row echelon form; their count equals the maximum number of linearly independent vectors in the set (the rank of A).

Examples & Applications

Example where vectors are found independent through row reduction, showing all pivot columns.

Example where a set of vectors is dependent due to fewer pivot columns than vector count.
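The two example descriptions above do not name specific vectors. As an illustration, using vectors chosen here for the purpose (they are not taken from the lesson), the row reductions work out as follows; each arrow names the row operation applied.

```latex
% Independent set: v1 = (1,2,0), v2 = (0,1,1), v3 = (1,0,1)
\begin{bmatrix} 1 & 0 & 1 \\ 2 & 1 & 0 \\ 0 & 1 & 1 \end{bmatrix}
\xrightarrow{R_2 \to R_2 - 2R_1}
\begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & -2 \\ 0 & 1 & 1 \end{bmatrix}
\xrightarrow{R_3 \to R_3 - R_2}
\begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & -2 \\ 0 & 0 & 3 \end{bmatrix}

% Dependent set: v1 = (1,2,3), v2 = (4,5,6), v3 = (7,8,9)
\begin{bmatrix} 1 & 4 & 7 \\ 2 & 5 & 8 \\ 3 & 6 & 9 \end{bmatrix}
\xrightarrow{R_2 \to R_2 - 2R_1,\; R_3 \to R_3 - 3R_1}
\begin{bmatrix} 1 & 4 & 7 \\ 0 & -3 & -6 \\ 0 & -6 & -12 \end{bmatrix}
\xrightarrow{R_3 \to R_3 - 2R_2}
\begin{bmatrix} 1 & 4 & 7 \\ 0 & -3 & -6 \\ 0 & 0 & 0 \end{bmatrix}
```

The first reduction ends with three pivot columns for three vectors, so (1, 2, 0), (0, 1, 1), (1, 0, 1) are linearly independent. The second ends with two pivot columns for three vectors, so that set is linearly dependent; indeed $\vec{v}_3 = 2\vec{v}_2 - \vec{v}_1$, since $2(4,5,6) - (1,2,3) = (7,8,9)$.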

Memory Aids


Rhymes

If vectors stand alone in space, they won't be mapped to any place!

Stories

Imagine vectors as unique personalities at a party; none can replicate another's dance moves.

Memory Tools

PIVOT - Put Important Vectors Of Together.

Acronyms

RAMP - Reduce, Assess, Match, Pivot for independence.


Glossary

- Row Echelon Form (REF)

A form of a matrix where all non-zero rows are above any rows of all zeros, and the leading coefficient of each non-zero row after the first occurs to the right of the leading coefficient of the previous row.

- Pivot Column

A column that contains a pivot (the leading non-zero entry of a row) in row echelon form; the corresponding columns of the original matrix form a linearly independent set.

- Gaussian Elimination

A method for solving systems of linear equations by transforming the matrix into row echelon form.
