Verification Algorithms for Functional Verification

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Equivalence Checking Algorithms

Today, we're going to explore Equivalence Checking Algorithms. Can anyone tell me why we need to check if two designs are equivalent?

I think it's to ensure that the design hasn’t changed after optimization?

That's right! When we synthesize or optimize a design, we want to be sure its behavior stays functionally the same. One frequently cited method for this is the Burch and Dill algorithm. Can anyone explain how it works?

Isn't it about comparing their state spaces?

Yes, exactly! It checks that for every reachable state of one design there is a corresponding state in the other design, so that both produce the same outputs for the same inputs. This matters because even a small change can significantly alter a design's functionality.

What happens if they are not equivalent?

Great question! If two designs are not equivalent, the transformation has changed the design's function for at least some inputs, which can lead to failures in real-world applications. That is why verifying equivalence is a critical step in the design process.

Simulation-Based Algorithms

Now let's move on to Simulation-Based Algorithms. What do you think is the main goal of running simulations on a design?

I believe it's to check if the design behaves correctly under different conditions?

Exactly! These algorithms use different sets of input vectors to simulate and observe outputs. Why do you think we would need a variety of input vectors?

To cover all possible scenarios, especially edge cases.

Correct, although for any realistic design, simulating every possible input is impractical. Coverage metrics help us measure how thorough our verification has been and reveal behaviors that simple test cases might never exercise.

What if the simulation shows unexpected results?

Then that indicates a discrepancy in the design that needs addressing before moving forward. Simulation helps pinpoint such issues and enhances the reliability of the VLSI design process.

Introduction & Overview

Quick Overview

Standard

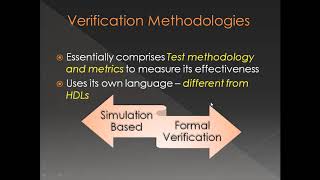

The section covers two main categories of algorithms for functional verification: Equivalence Checking Algorithms, which compare two versions of a design to confirm that its logic is preserved after transformations, and Simulation-Based Algorithms, which run many simulations and check the design's behavior against expected outputs.

Detailed

Verification Algorithms for Functional Verification

Functional verification is a crucial aspect of VLSI design, ensuring that the circuit behaves as intended. In this section, we delve into the algorithms that facilitate functional verification, specifically focusing on two major types: Equivalence Checking Algorithms and Simulation-Based Algorithms.

Equivalence Checking Algorithms

These algorithms confirm that a design's logic and function are preserved after transformations such as synthesis or optimization. A frequently cited example is the Burch and Dill algorithm, which compares the state spaces of two designs to check whether they are equivalent. This matters because every transformation is an opportunity to introduce an error, and equivalence checking confirms that the intended functionality survives intact.
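For a purely combinational block, "logic is preserved" means the original and transformed versions produce the same output for every input assignment. The sketch below checks this by brute-force enumeration, which is only feasible for a handful of inputs; production equivalence checkers rely on techniques such as BDDs or SAT solving instead. The functions `original`, `optimized`, and `buggy` are hypothetical examples of a logic-factoring optimization and a faulty version of it.

```python
from itertools import product
from typing import Callable, Optional, Tuple

BoolFn = Callable[..., bool]


def check_equivalence(f: BoolFn, g: BoolFn, n_inputs: int) -> Optional[Tuple[bool, ...]]:
    """Return None if f and g agree on every input, else the first differing input."""
    # Enumerate all 2**n_inputs assignments (exponential, so small n only).
    for inputs in product([False, True], repeat=n_inputs):
        # The != comparison plays the role of a miter's XOR gate:
        # it is True exactly when the two designs disagree.
        if f(*inputs) != g(*inputs):
            return inputs
    return None


# Hypothetical "before" and "after" versions of a factoring optimization.
def original(a: bool, b: bool, c: bool) -> bool:
    return (a and b) or (a and c)


def optimized(a: bool, b: bool, c: bool) -> bool:
    return a and (b or c)


# Hypothetical faulty transformation.
def buggy(a: bool, b: bool, c: bool) -> bool:
    return (a and b) or c


print(check_equivalence(original, optimized, 3))  # None
print(check_equivalence(original, buggy, 3))      # (False, False, True)
```

Returning the first differing input, rather than just `False`, mirrors how equivalence tools report a counterexample that a designer can use for debugging.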

Simulation-Based Algorithms

Unlike equivalence checking, simulation-based algorithms validate a design's functional correctness by simulating it with different input vectors and comparing the outputs with expected results. Coverage metrics measure how thoroughly these simulations have exercised the design. By observing how varied input sets affect the circuit's outputs, designers gain confidence that the design operates correctly across a wide range of scenarios, although simulation alone cannot prove correctness for inputs that were never applied.
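A minimal sketch of simulation against a reference model, assuming a hypothetical 4-bit adder as the design under test: random input vectors are applied to both a bit-level ripple-carry implementation and a "golden" model (`a + b`), and every vector where the outputs differ is recorded. The `inject_bug` flag is a hypothetical fault used only to show that a mismatch gets caught.

```python
import random
from typing import Callable, List, Tuple

WIDTH = 4  # hypothetical adder width


def reference_add(a: int, b: int) -> int:
    """Golden model: the expected (WIDTH + 1)-bit sum."""
    return a + b


def ripple_carry_add(a: int, b: int, inject_bug: bool = False) -> int:
    """Hypothetical design under test: a bit-level ripple-carry adder."""
    carry, result = 0, 0
    for i in range(WIDTH):
        x, y = (a >> i) & 1, (b >> i) & 1
        result |= (x ^ y ^ carry) << i
        generate, propagate = x & y, x ^ y
        # The hypothetical injected bug drops the carry-propagate term.
        carry = generate if inject_bug else generate | (carry & propagate)
    return result | (carry << WIDTH)


def simulate(dut: Callable[[int, int], int], ref: Callable[[int, int], int],
             n_vectors: int, seed: int = 0) -> List[Tuple[int, int]]:
    """Apply random input vectors; return those where DUT and reference disagree."""
    rng = random.Random(seed)  # fixed seed makes failures reproducible
    mismatches = []
    for _ in range(n_vectors):
        a, b = rng.randrange(2 ** WIDTH), rng.randrange(2 ** WIDTH)
        if dut(a, b) != ref(a, b):
            mismatches.append((a, b))
    return mismatches


print(simulate(ripple_carry_add, reference_add, n_vectors=200))  # []
faulty = lambda a, b: ripple_carry_add(a, b, inject_bug=True)
print(faulty(1, 3), reference_add(1, 3))  # 0 4
print(len(simulate(faulty, reference_add, n_vectors=200)))
```

Seeding the random generator is a common choice in simulation-based verification: a failing run can be replayed exactly while debugging.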

Audio Book

Equivalence Checking Algorithms

Chapter 1 of 2

Chapter Content

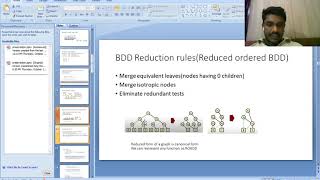

Equivalence Checking Algorithms: These algorithms ensure that the logic of the design is preserved after transformations such as synthesis, optimization, or technology mapping. A well-known example is the Burch and Dill algorithm, which checks whether two designs are equivalent by comparing their state spaces.

Detailed Explanation

Equivalence checking algorithms are crucial in the functional verification process. They confirm that the design's logic remains unchanged when it is modified, for example during synthesis (the transformation of a high-level design into a lower-level form such as a gate-level netlist) or optimization (improving characteristics such as area, timing, or power). A prominent example is the Burch and Dill algorithm, which systematically compares two designs by examining their state spaces, confirming that they produce the same results under the same conditions.
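The idea of "comparing state spaces" can be illustrated with a generic product-machine reachability check. This is not a reproduction of Burch and Dill's specific method; it is a minimal sketch, assuming both designs are given as hypothetical Mealy machines stored as dictionaries. A breadth-first search explores every reachable pair of states and, for each input, checks that the two outputs agree. The parity detectors `spec` and `impl` and the injected fault in `buggy` are illustrative assumptions.

```python
from collections import deque
from collections.abc import Hashable
from typing import Dict, List, Optional, Sequence, Tuple

# Mealy machine: (state, input) -> (next_state, output)
Machine = Dict[Tuple[Hashable, int], Tuple[Hashable, int]]


def sequential_equivalence(m1: Machine, init1: Hashable, m2: Machine, init2: Hashable,
                           inputs: Sequence[int] = (0, 1)) -> Optional[List[int]]:
    """BFS over the product machine. Return None if every reachable state pair
    produces matching outputs, else the shortest input trace exposing a mismatch."""
    start = (init1, init2)
    parent = {start: None}  # pair -> (previous pair, input), for trace reconstruction
    queue = deque([start])
    while queue:
        s1, s2 = queue.popleft()
        for x in inputs:
            n1, out1 = m1[(s1, x)]
            n2, out2 = m2[(s2, x)]
            if out1 != out2:
                # Walk parent links back to the start to rebuild the input trace.
                trace, node = [x], (s1, s2)
                while parent[node] is not None:
                    node, prev_x = parent[node]
                    trace.append(prev_x)
                return trace[::-1]
            if (n1, n2) not in parent:
                parent[(n1, n2)] = ((s1, s2), x)
                queue.append((n1, n2))
    return None


# Hypothetical spec: output 1 when an odd number of 1s has been seen so far.
spec: Machine = {
    ("even", 0): ("even", 0), ("even", 1): ("odd", 1),
    ("odd", 0): ("odd", 1), ("odd", 1): ("even", 0),
}
# Hypothetical re-encoded implementation: counts 1s modulo 4 (redundant states).
impl: Machine = {(s, x): ((s + x) % 4, (s + x) % 2) for s in range(4) for x in (0, 1)}
# Hypothetical injected fault: wrong output in state 3 on input 0.
buggy: Machine = dict(impl)
buggy[(3, 0)] = (3, 0)

print(sequential_equivalence(spec, "even", impl, 0))   # None
print(sequential_equivalence(spec, "even", buggy, 0))  # [1, 1, 1, 0]
```

The two machines have different numbers of states, yet they are equivalent: what must match is the input/output behavior along every reachable path, not the state encoding.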

Examples & Analogies

Imagine updating a recipe for a cake. If you change a few ingredients or the cooking time, the goal is for the cake to still taste the same as the original. Similarly, equivalence checking algorithms ensure that even as a design evolves (like a recipe), it should still produce the same desired output (like the cake's flavor).

Simulation-Based Algorithms

Chapter 2 of 2

Chapter Content

Simulation-Based Algorithms: These algorithms rely on running simulations with different sets of input vectors to check if the design behaves as expected. Coverage metrics are used to determine the effectiveness of the verification process.

Detailed Explanation

Simulation-based algorithms work by executing the design in a controlled environment with various input vectors, that is, sets of values applied to the design's inputs. This verifies whether the design behaves correctly in different scenarios by checking that it produces the expected outputs for the given inputs. To gauge the effectiveness of these simulations, engineers use coverage metrics, which show which portions of the design have been exercised and which still need further verification.
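One common kind of coverage metric is functional coverage: the test plan defines "bins" (conditions that should be observed at least once), and the simulator records which bins were hit. The sketch below assumes a hypothetical 4-bit adder with five hypothetical bins, applies optional directed vectors followed by random ones, and reports the percentage of bins hit along with the bins that were missed.

```python
import random
from typing import Callable, Dict, Iterable, Set, Tuple

WIDTH = 4
MAX = 2 ** WIDTH - 1

# Hypothetical functional-coverage bins: conditions on an input vector (a, b)
# that the test plan wants to see at least once.
BINS: Dict[str, Callable[[int, int], bool]] = {
    "both_zero": lambda a, b: a == 0 and b == 0,
    "both_max": lambda a, b: a == MAX and b == MAX,
    "carry_out": lambda a, b: a + b > MAX,
    "no_carry": lambda a, b: a + b <= MAX,
    "a_max_b_zero": lambda a, b: a == MAX and b == 0,
}


def run_with_coverage(n_vectors: int, seed: int = 0,
                      directed: Iterable[Tuple[int, int]] = ()) -> Tuple[float, Set[str]]:
    """Apply directed vectors, then n_vectors random ones; return (percent hit, missed bins)."""
    rng = random.Random(seed)
    vectors = list(directed) + [
        (rng.randrange(MAX + 1), rng.randrange(MAX + 1)) for _ in range(n_vectors)
    ]
    hit: Set[str] = set()
    for a, b in vectors:
        for name, condition in BINS.items():
            if condition(a, b):
                hit.add(name)
    percent = 100.0 * len(hit) / len(BINS)
    return percent, set(BINS) - hit


print(run_with_coverage(20))  # random only: corner bins may be missed
print(run_with_coverage(0, directed=[(0, 0), (MAX, MAX), (MAX, 0)]))  # (100.0, set())
```

Random vectors quickly hit common bins such as `no_carry`, but a corner bin such as `both_zero` has only a 1/256 chance of being hit by each random vector. Coverage reports expose gaps like this, which are then typically closed with directed tests.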

Examples & Analogies

Consider running multiple experiments to ensure a new gadget works correctly. You would test it under different conditions, like varying temperatures or power sources. Similarly, simulation-based algorithms systematically test a circuit’s response to ensure it performs reliably, just like testing the gadget in various real-world situations.

Key Concepts

- Equivalence Checking: A method to verify that two designs retain the same functionality after changes.
- Burch and Dill Algorithm: An algorithm to compare the state spaces of two designs for equivalence.
- Simulation-Based Verification: Verification through diverse simulations to ensure designs meet expected behaviors.
- Coverage Metrics: Metrics that measure the scope and thoroughness of the verification process.

Examples & Applications

After a VLSI design is synthesized, an equivalence-checking algorithm such as the Burch and Dill algorithm can check whether the optimized version functions identically to the original design.

During functional verification, a simulation-based approach may use hundreds of random input vectors to validate the design's output against expected results across various scenarios.

Memory Aids

Rhymes

To check if two designs are the same, use equivalence checks; it's the name of the game!

Stories

Imagine two friends building LEGO castles; one made modifications. To ensure it was still the same castle, they used equivalence checking, ensuring each brick matched.

Memory Tools

Remember 'SPECC' for Simulation-based Verification: S=Scenarios, P=Patterns, E=Efficiency, C=Coverage, C=Checks.

Acronyms

Use 'EQS' for Equivalence Checks

E=Equivalent

Q=Quality

S=State-space.

Glossary

- Equivalence Checking

A verification method used to confirm that two designs functionally match despite transformations.

- Burch and Dill Algorithm

An algorithm for checking the equivalence of two designs by comparing their state spaces.

- Simulation-Based Verification

A technique that runs simulations with various input vectors to validate the functional operation of a design.

- Coverage Metrics

Measures used to assess the effectiveness and thoroughness of the functional verification process.
