Organization and Structure of Modern Computer Systems

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to Computer System Organization

Today, we’ll dive into computer system organization, which involves how hardware components are structured and interconnected to perform computations efficiently.

What exactly does computer organization focus on?

Great question! Computer organization focuses on interconnection, data flow, and control signals. It is distinct from computer architecture: architecture is the conceptual design, while organization is the practical implementation of that design.

Can you give us an example of that?

Certainly! For instance, when we design a CPU, the architecture outlines how it should function, while organization determines how it is physically built and connected to memory and I/O devices.

How does this coordination work in practice?

Coordination is achieved through efficient design of buses, which connect the CPU, memory, and input/output units. This allows smooth data transfers and ensures that operations proceed without conflicts.

So, a well-organized system can execute tasks more effectively?

Exactly! A well-planned organization helps maintain high performance and efficiency in computing tasks.

To summarize, computer organization is all about structuring hardware components for efficient computation, focusing on the interconnectivity of the CPU, memory, and I/O systems.

Functional Units of a Computer

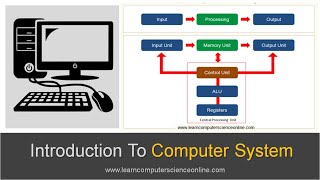

Now, let’s discuss the five main functional units of a modern computer: the input unit, output unit, memory unit, control unit, and arithmetic logic unit.

What does each unit do?

The input unit includes devices like keyboards and mice, allowing users to enter data; the output unit includes monitors and printers, which output information. The memory unit stores data and instructions needed for processing.

And what about the control unit and ALU?

Great follow-up! The control unit manages the execution of instructions and flow of data, while the arithmetic logic unit performs computations and logical operations.

How do these units work together?

They work in unison: data enters through the input unit and is stored in the memory unit, the control unit directs the ALU to process it, and the results leave through the output unit. It’s a seamless interaction crucial for computing.

That makes sense! Each unit has a specific role to play.

Exactly! Let's recap: input and output units handle user interaction, the memory unit stores data, while the control unit and ALU work together to process and execute instructions.

Von Neumann and Harvard Architecture

Now let's examine two fundamental architectures: Von Neumann and Harvard.

What’s the main difference between them?

The Von Neumann architecture features a single memory that stores both data and instructions, which can create a bottleneck since only one bus manages this traffic.

And the Harvard architecture?

The Harvard architecture uses separate memory spaces for data and instructions, so an instruction fetch and a data access can happen at the same time. This often makes it faster, especially in embedded systems.

Why is that important?

It’s important because faster data access can lead to improved performance in applications like digital signal processing in microcontrollers, where efficiency is crucial.

Does one architecture have advantages over the other?

Yes, the Von Neumann architecture is simpler and widely used in general-purpose computers, while the Harvard architecture accepts extra hardware complexity in exchange for faster processing in specific applications.

To summarize, Von Neumann has a single memory which can cause bottlenecks, while Harvard's dual memory system enhances parallel processing capabilities.

System Buses

Let’s next focus on system buses, which are crucial communication pathways in computers.

What types of buses are there?

There are three main types: data buses for transferring data, address buses for specifying memory locations, and control buses that carry control signals.

How does the width of a bus affect performance?

Good question! The width is the number of bits the bus can transfer at a time, so it directly affects data transfer speed: a wider bus moves more data in each transfer.

What about bus speed?

Bus speed is determined by the system clock rate. A faster clock allows quicker signal transfer between components.

And what’s the difference between synchronous and asynchronous buses?

Synchronous buses time every transfer against a shared clock, while asynchronous buses coordinate each transfer with handshake signals instead of a clock, which lets devices of different speeds share the same bus.

In summary, system buses are essential for communication within the computer, and their design affects overall system performance.

I/O Organization

Finally, let’s talk about I/O organization and how data is transferred between the CPU and peripherals.

What methods are used for I/O data transfer?

There are three primary methods: programmed I/O, where the CPU polls devices for data; interrupt-driven I/O, where the device sends an interrupt signal to the CPU; and direct memory access (DMA), where a DMA controller moves data between the device and memory after the CPU sets up the transfer, so the CPU does not have to copy each word itself.

Which method is most efficient?

DMA is generally the most efficient because it minimizes CPU involvement, allowing it to perform other tasks simultaneously.

How does the choice of method impact performance?

The method of data transfer significantly affects system performance. For instance, interrupt-driven I/O removes the polling overhead of programmed I/O, which frees the CPU for other work while the device is busy.

Sounds like planning I/O methods is crucial.

Absolutely! Effective I/O organization improves overall system responsiveness and efficiency. To summarize, I/O organization methods vary in efficiency and significantly impact computer performance.
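The trade-off between the three methods can be made concrete with a rough cost model. The sketch below is only an illustration: the function name `cpu_steps` and the cost parameters (`polls_per_word`, `interrupt_cost`, `dma_setup`) are hypothetical values chosen for the example, not measurements of any real system.

```python
def cpu_steps(method, words, polls_per_word=10, interrupt_cost=3, dma_setup=5):
    """Rough count of CPU steps spent moving `words` words from a device.

    All cost parameters are illustrative assumptions, not real measurements.
    """
    if method == "programmed":
        # CPU checks the device status repeatedly, then copies each word itself.
        return words * (polls_per_word + 1)
    if method == "interrupt":
        # CPU does other work until the device interrupts, then copies one word.
        return words * (interrupt_cost + 1)
    if method == "dma":
        # CPU programs the DMA controller once and handles one completion interrupt.
        return dma_setup + interrupt_cost
    raise ValueError(f"unknown method: {method}")


for method in ("programmed", "interrupt", "dma"):
    print(method, cpu_steps(method, words=100))
```

Under these assumed costs the CPU work drops from 1100 steps to 400 and then to 8. Note that the ranking depends on the parameters: for a very fast device that needs only one or two polls per word, polling can cost less than taking an interrupt for every word.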

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

In this section, we explore the structural organization of modern computer systems, emphasizing key functional units such as the CPU, memory, and I/O systems. We differentiate between Von Neumann and Harvard architectures and delve into the buses and I/O methods that facilitate data processing and transfer.

Detailed

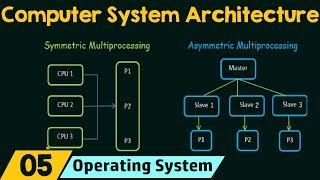

Organization and Structure of Modern Computer Systems

This section provides a comprehensive overview of how modern computer systems are organized and structured. It begins with a distinction between computer organization, which focuses on the arrangement and connection of hardware components, and architecture, which refers to the conceptual design. Key functional units of a computer are discussed, including the input and output units, memory unit, control unit, and arithmetic logic unit (ALU), all pivotal for computation.

Moreover, the section delves into different architectures like Von Neumann and Harvard. The Von Neumann architecture features a single memory for data and instructions, leading to potential bottlenecks, while Harvard architecture offers separate memories for data and instructions, allowing parallel processing. System buses are introduced as essential communication pathways within a computer, including data, address, and control buses, highlighting their characteristics.

The organization of the CPU is examined, discussing configurations such as single-core, multi-core, and superscalar processors, emphasizing the implications for processing efficiency. Control unit designs are explored, providing insights into hardwired and microprogrammed control mechanisms.

Furthermore, memory system organization is addressed with a focus on primary and secondary memory, cache, and virtual memory. Finally, the section discusses I/O organization, parallelism, and performance enhancements, providing a clear, structured framework essential for understanding modern computing systems.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Introduction to Computer System Organization

Chapter 1 of 6

Chapter Content

Computer system organization refers to how hardware components are structured and connected to perform computation.

● It focuses on interconnection, data flow, and control signals.

● Differentiates between computer architecture (conceptual design) and organization (implementation).

● Ensures efficient coordination between CPU, memory, I/O, and buses.

Detailed Explanation

Computer system organization describes how the hardware components of a computer system are arranged and how they work together to perform computations. It emphasizes the connections between components, how data flows through the system, and the control signals that ensure proper operation. It's important to distinguish organization from computer architecture: architecture deals with the overall design and functionality of the system, while organization is concerned with how those designs are put into practice. Good organization ensures that the CPU (Central Processing Unit), memory, input/output devices, and buses work together efficiently.

Examples & Analogies

Think of a computer system like a factory. The factory's layout (how machines and workers are arranged) corresponds to the system organization, while the factory's blueprints (detailing processes and capabilities) relate to architecture. Just as a well-designed factory allows for smooth production, efficient computer organization allows for seamless data processing.

Functional Units of a Computer

Chapter 2 of 6

Chapter Content

Modern computers consist of five main functional blocks:

1. Input Unit – Devices like keyboard, mouse, scanner.

2. Output Unit – Devices like monitor, printer.

3. Memory Unit – Stores data and instructions.

4. Control Unit (CU) – Manages execution and data flow.

5. Arithmetic Logic Unit (ALU) – Performs arithmetic and logical operations.

Detailed Explanation

Modern computers are made up of five main functional units, each serving a specific role:

1. Input Unit: This includes devices like keyboards and mice that allow users to provide data and commands.

2. Output Unit: Includes devices such as monitors and printers that present the processed information to the user.

3. Memory Unit: This component holds data and instructions temporarily or permanently, facilitating quick access.

4. Control Unit (CU): This unit coordinates the activities of the other units, ensuring instructions are executed in the correct order.

5. Arithmetic Logic Unit (ALU): This part of the computer performs the arithmetic calculations and logical comparisons.

Together, these units carry out the overall computing process.
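The division of labor among the five units can be sketched in a few lines of Python. This is a toy model, not how real hardware is built: the function names (`input_unit`, `alu`, `output_unit`, `control_unit`) and the two supported operations are assumptions made for illustration.

```python
def input_unit(raw_text):
    """Input unit: turn what the user typed into numbers the machine can store."""
    return [int(token) for token in raw_text.split()]


def alu(operation, a, b):
    """ALU: perform one arithmetic or logical operation."""
    if operation == "ADD":
        return a + b
    if operation == "MAX":
        return a if a > b else b  # built on a logical comparison
    raise ValueError(f"unsupported operation: {operation}")


def output_unit(value):
    """Output unit: present the result to the user."""
    return f"result = {value}"


def control_unit(raw_text, operation="ADD"):
    """Control unit: sequence the other units in the right order."""
    memory = {"operands": input_unit(raw_text)}   # memory unit holds the data
    result = memory["operands"][0]
    for operand in memory["operands"][1:]:
        result = alu(operation, result, operand)  # CU tells the ALU what to do
    memory["result"] = result
    return output_unit(memory["result"])


print(control_unit("3 4 5"))                    # result = 12
print(control_unit("3 9 5", operation="MAX"))   # result = 9
```

Notice that the control unit never computes anything itself; it only decides which unit acts next and with which data.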

Examples & Analogies

Imagine a restaurant kitchen. The input unit is like the servers bringing in orders, the output unit is the servers delivering finished meals, the memory unit represents the storage of recipes, the control unit is the head chef directing the process, and the ALU is the cooks preparing each dish. Each part of the kitchen must work together smoothly to provide a great dining experience.

Von Neumann Architecture

Chapter 3 of 6

Chapter Content

● Most general-purpose computers use the Von Neumann model.

● Single memory for data and instructions.

● Sequential instruction execution.

● Bottleneck: One bus for data/instruction fetch and memory access.

Detailed Explanation

The Von Neumann architecture is a foundational model for most general-purpose computers. It uses a single memory space to store both data and instructions, which keeps the design straightforward. Instructions are executed sequentially, one at a time, and every instruction fetch and data access travels over the same bus. That shared bus can become the limiting factor in performance, a problem known as the Von Neumann bottleneck. As a result, improving speed often involves addressing this bottleneck.
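To see the shared bus at work, here is a minimal sketch of a stored-program machine in Python. The instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the function name `run_von_neumann`, and the memory layout are all hypothetical choices for this example; the point is only that instructions and data sit in one memory and every access is counted against one bus.

```python
def run_von_neumann(memory):
    """Run a tiny accumulator program stored in the same list as its data.

    Returns the final accumulator value and the number of bus accesses.
    """
    pc, acc, bus_accesses = 0, 0, 0
    while True:
        op, addr = memory[pc]      # instruction fetch uses the shared bus
        bus_accesses += 1
        pc += 1
        if op == "LOAD":
            acc = memory[addr]     # data read uses the same bus
            bus_accesses += 1
        elif op == "ADD":
            acc += memory[addr]
            bus_accesses += 1
        elif op == "STORE":
            memory[addr] = acc     # data write uses the same bus
            bus_accesses += 1
        elif op == "HALT":
            return acc, bus_accesses
        else:
            raise ValueError(f"unknown instruction: {op}")


# Addresses 0-3 hold instructions, addresses 4-6 hold data: one memory for both.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
acc, accesses = run_von_neumann(memory)
print(acc, accesses, memory[6])  # 5 7 5
```

Four instruction fetches plus three data accesses give seven trips over the single bus, and none of them can overlap.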

Examples & Analogies

Think of a busy single-lane road where cars (instructions and data) can only travel one at a time to and from a single destination (memory). The road works well for light traffic, but during rush hour the traffic builds up and cars take longer to get through. This congestion represents the bottleneck seen in Von Neumann architecture.

Harvard Architecture

Chapter 4 of 6

Chapter Content

● Separate memory for data and instructions.

● Allows parallel instruction fetch and data access.

● Often faster than Von Neumann in embedded systems.

● Used in DSPs and microcontrollers.

Detailed Explanation

The Harvard architecture is an alternative to the Von Neumann model and features separate memory spaces for data and instructions. This separation allows for simultaneous access, which leads to faster processing speeds, especially beneficial in embedded systems. This architecture is frequently used in Digital Signal Processors (DSPs) and microcontrollers, where efficient processing of data is crucial.
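The benefit of simultaneous access can be estimated with an idealized cycle count. The sketch below assumes every memory access takes one cycle and that, with separate memories, the data access of one instruction fully overlaps the fetch of the next. The function name `memory_cycles` and this timing model are simplifying assumptions, not a description of any particular processor.

```python
def memory_cycles(needs_data, separate_memories):
    """Count memory cycles for a program under an idealized timing model.

    needs_data: one boolean per instruction, True if it reads or writes data.
    separate_memories: True for Harvard, False for Von Neumann.
    """
    cycles = 0
    pending_data = False  # data access still outstanding from the last instruction
    for uses_data in needs_data:
        if separate_memories:
            # This fetch overlaps the previous instruction's data access.
            cycles += 1
            pending_data = uses_data
        else:
            # Fetch and data access take turns on the single bus.
            cycles += 1 + (1 if uses_data else 0)
    if separate_memories and pending_data:
        cycles += 1  # the final data access has nothing to overlap with
    return cycles


program = [True, True, True, False]  # e.g. load, add, store, halt
print(memory_cycles(program, separate_memories=False))  # 7
print(memory_cycles(program, separate_memories=True))   # 4
```

Real speedups are smaller than this model suggests, since other parts of the processor also limit throughput, but the sketch shows where the advantage comes from.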

Examples & Analogies

Imagine a library where books (data) and magazines (instructions) are stored in separate rooms, each with its own librarian. When you need one of each, both can be fetched at the same time instead of one request waiting for the other to finish. In the same way, Harvard architecture makes computers faster by allowing an instruction fetch and a data access at the same time.

System Buses

Chapter 5 of 6

Chapter Content

A bus is a communication pathway between components.

1. Data Bus – Transfers data.

2. Address Bus – Specifies memory addresses.

3. Control Bus – Carries control signals (read/write/interrupt).

Bus Characteristics:

● Bus width (data/address width)

● Bus speed (clock rate)

● Synchronous vs. Asynchronous buses.

Detailed Explanation

In a computer system, buses act as highways for communication among different components. There are three main types of buses:

1. Data Bus: Transfers actual data between components.

2. Address Bus: Carries the address of the memory location being read or written.

3. Control Bus: Sends control signals that determine operations like reading or writing data.

Bus characteristics, such as width (how many bits they can transfer at once) and speed (how fast they operate), determine the efficiency of data communication.
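Width and speed translate into numbers with two simple formulas: an address bus with n lines can select 2^n distinct locations, and the peak data rate is the data width times the number of transfers per second. The helper names below (`addressable_locations`, `peak_bandwidth_bytes_per_s`) and the `transfers_per_clock` parameter are assumptions for this sketch, and the result is a theoretical peak rather than a sustained rate.

```python
def addressable_locations(address_bits):
    """Number of distinct addresses an address bus of this width can select."""
    return 2 ** address_bits


def peak_bandwidth_bytes_per_s(data_bits, clock_hz, transfers_per_clock=1):
    """Theoretical peak data rate of a bus, ignoring all protocol overhead."""
    return data_bits * clock_hz * transfers_per_clock // 8


print(addressable_locations(16))                        # 65536 locations
print(addressable_locations(32))                        # 4294967296 locations
print(peak_bandwidth_bytes_per_s(64, 100_000_000))      # 800000000 bytes/s
```

Doubling either the data width or the clock rate doubles the peak rate, which is why both appear in the list of bus characteristics.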

Examples & Analogies

Think of a delivery service. The data bus is like the delivery trucks carrying packages (data) to specific addresses (address bus), and the control bus issues instructions for deliveries and pickups (control signals). Efficient buses help ensure that the delivery process runs smoothly and quickly.

CPU Organization

Chapter 6 of 6

Chapter Content

CPU is the brain of the system and may be organized as:

1. Single-core – One processing unit.

2. Multi-core – Multiple cores for parallel processing.

3. Superscalar – Can execute more than one instruction per cycle.

Internal units include:

● Instruction Register (IR)

● Program Counter (PC)

● Accumulators

● ALU and Control Logic

Detailed Explanation

The CPU, often referred to as the brain of the computer, can be organized in several ways to increase efficiency and processing power:

1. Single-core: Contains one processing unit, which runs one instruction stream at a time.

2. Multi-core: Includes multiple processing units that execute tasks in parallel, which raises throughput when the work can be divided among them.

3. Superscalar: Can issue and execute more than one instruction within a single clock cycle.

Inside the CPU, several internal components work together, including the Instruction Register (IR), which holds the instruction currently being executed; the Program Counter (PC), which holds the address of the next instruction; accumulators for temporary data storage; and the ALU for performing calculations.
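The superscalar idea can be illustrated with a small issue model. The sketch below assumes in-order issue, one-cycle instructions, and a single rule: an instruction cannot issue in the same cycle as an earlier instruction whose result it reads. The function name `cycles_to_issue` and the `(destination, sources)` instruction format are hypothetical, and other hazards that real processors handle are ignored.

```python
def cycles_to_issue(instructions, width):
    """Cycles needed to issue a program on an in-order machine.

    instructions: list of (destination, sources) register-name tuples.
    width: maximum number of instructions issued per cycle.
    """
    cycles = 0
    i = 0
    while i < len(instructions):
        cycles += 1
        written = set()  # registers produced by instructions issued this cycle
        issued = 0
        while i < len(instructions) and issued < width:
            dest, sources = instructions[i]
            if any(src in written for src in sources):
                break    # depends on a result from this cycle: wait for the next
            written.add(dest)
            issued += 1
            i += 1
    return cycles


program = [
    ("r1", ()),            # r1 = constant
    ("r2", ()),            # r2 = constant
    ("r3", ("r1", "r2")),  # r3 = r1 + r2
    ("r4", ("r3",)),       # r4 = r3 * 2
]
print(cycles_to_issue(program, width=1))  # 4
print(cycles_to_issue(program, width=2))  # 3
```

The two-wide machine saves only one cycle here because the last two instructions depend on earlier results. Extra issue width helps only as far as the program contains independent instructions.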

Examples & Analogies

Consider a conductor leading an orchestra. A single-core CPU is like a conductor directing just one musician at a time, while a multi-core CPU is like the conductor managing a full orchestra with many musicians playing together. A superscalar CPU is akin to an orchestra that can simultaneously play different parts of a symphony, creating a complex and rich sound efficiently.

Key Concepts

- Computer Organization: The arrangement of hardware components for efficient processing.

- Functional Units: Segments of a computer system, including input, output, memory, control, and ALU.

- Von Neumann vs. Harvard Architecture: Different memory architectures impacting efficiency.

- System Buses: Pathways that connect hardware components for data transfer.

- I/O Organization: Methods for data transfer between CPU and peripherals.

Examples & Applications

Input devices include keyboards and mice, while output devices include monitors and printers.

In Von Neumann architecture, both instructions and data are stored in the same memory, leading to possible bottlenecks in data processing.

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

In a computer's heart, where logic starts, the ALU plays its key parts. For input and output, devices twine, together they work, so systems align.

Stories

Imagine a computer as a factory; the input unit is the entrance where raw materials come in, the output is the shipping dock where finished products go out. The control unit is the manager ensuring everything runs smoothly, while the ALU is the worker performing calculations to produce the final product.

Memory Tools

To remember the five functional units, use 'I O M C A' (Input, Output, Memory, Control, ALU).

Acronyms

For bus types, think of the acronym 'DAC' - Data, Address, Control.

Glossary

- Computer Organization

The arrangement of a computer's components and their interconnections for effective computation.

- Functional Units

The distinct sections of a computer system such as input, output, memory, control unit, and ALU.

- Von Neumann Architecture

A computer architecture that uses a single memory for data and instructions, leading to potential bottlenecks.

- Harvard Architecture

An architecture that uses separate memories for data and instructions, allowing an instruction fetch and a data access to proceed at the same time.

- Data Bus

The pathway for transferring data between components.

- Control Bus

A bus that carries control signals such as read/write commands.

- Direct Memory Access (DMA)

A method that allows peripherals to transfer data directly to or from memory; the CPU sets up the transfer but does not copy the data itself.
