Summary of Key Concepts

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

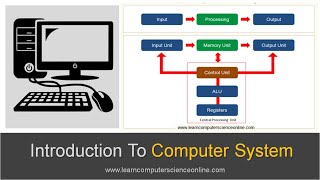

Functional Blocks of Modern Computers

Today, we're going to explore the functional blocks of modern computers. Can someone tell me what these blocks are?

Are they things like input and output units?

Absolutely! We have five main functional blocks: input unit, output unit, memory unit, control unit, and arithmetic logic unit, or ALU. These blocks work together to process information.

What's the role of the control unit?

Great question! The control unit manages execution and directs data flow. You can remember its function with the mnemonic 'C.E.D.': Control, Execute, Direct.

What does the ALU do?

The ALU performs all arithmetic and logical operations—think of it as the problem-solver of the CPU! Remember, 'A.L.U.' is for 'Arithmetic and Logic Unit.'

So, all these parts need to communicate well together?

Exactly! Coordination is key for efficiency. Let’s summarize the roles: Input and output units interact with users, memory stores data, the CU controls operations, and the ALU processes calculations.
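To make the summary concrete, here is a minimal sketch of the five blocks working together. It is a toy model, not how real hardware is built: the instruction names (`IN`, `OUT`, `ADD`, `SUB`, `AND`), the `alu` and `run` functions, and the tuple format are all assumptions made up for illustration.

```python
# Toy model of the five functional blocks. All names here are illustrative.

def alu(op, a, b):
    """Arithmetic logic unit: performs the arithmetic and logical operations."""
    if op == "ADD":
        return a + b
    if op == "SUB":
        return a - b
    if op == "AND":
        return a & b
    raise ValueError(f"unknown ALU operation: {op}")


def run(program, inputs):
    """Control unit: steps through the program and directs data between blocks."""
    memory = {}             # memory unit: named storage cells
    pending = list(inputs)  # input unit: values waiting to be read
    output = []             # output unit: values presented to the user

    for instruction in program:
        kind = instruction[0]
        if kind == "IN":    # input unit -> memory
            _, dest = instruction
            memory[dest] = pending.pop(0)
        elif kind == "OUT":  # memory -> output unit
            _, src = instruction
            output.append(memory[src])
        else:               # memory -> ALU -> memory
            op, dest, left, right = instruction
            memory[dest] = alu(op, memory[left], memory[right])
    return output


program = [
    ("IN", "x"),
    ("IN", "y"),
    ("ADD", "total", "x", "y"),
    ("OUT", "total"),
]
print(run(program, [2, 3]))  # [5]
```

Notice that `run` never does any arithmetic itself: it only decides which block handles each step, which is the control unit's job.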

Von Neumann vs. Harvard Architecture

Now, let's talk about the Von Neumann architecture compared to Harvard architecture. Who can tell me about Von Neumann?

It's the architecture that uses a single memory for data and instructions, right?

Correct! Because instructions and data share one bus, the CPU cannot fetch both at the same time, which creates a bottleneck. Now, what about Harvard architecture?

Harvard has separate memories for data and instructions, so it can work faster!

Well done! Remember, Harvard architecture allows parallel access, making it beneficial for embedded systems.

Why is Von Neumann still important?

Good point! Von Neumann is widely used in general-purpose computers. To help remember, think of 'V.N. for versatile' and 'H.A. for high-speed!'

So, both architectures have their places in computing?

Exactly! Both architectures serve different purposes based on the system requirements. Let’s summarize: Von Neumann has one memory shared by data and instructions, while Harvard has separate pathways for each.
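The difference can be sketched with a simple cycle count. This is a deliberately simplified model, assuming every instruction fetch and every data access takes exactly one bus cycle and ignoring caches entirely; the function names and the sample program are hypothetical.

```python
def von_neumann_cycles(needs_data):
    """One shared bus: each instruction fetch and each data access takes its own cycle."""
    return len(needs_data) + sum(needs_data)


def harvard_cycles(needs_data):
    """Separate buses: an instruction fetch and a data access can share a cycle."""
    return len(needs_data)


# Hypothetical program: True marks an instruction that also reads or writes data.
needs_data = [True, False, True, True, False]
print(von_neumann_cycles(needs_data))  # 8
print(harvard_cycles(needs_data))      # 5
```

In this model, the more often a program touches data, the wider the gap between the two counts becomes.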

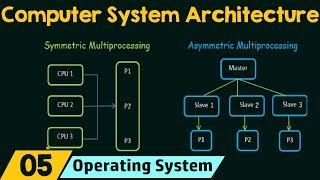

CPU Evolution

Next, let’s discuss CPU evolution. Who can briefly describe the changes in CPU design?

CPUs went from single-core to multi-core to handle tasks better, right?

Exactly! Multi-core CPUs enable parallel processing, improving performance significantly. Remember, 'more cores, more chores done at once!'

What does it mean for a CPU to be superscalar?

Good question! A superscalar CPU can issue and execute more than one instruction in the same clock cycle, which raises throughput. Picture several assembly lines running side by side!

Other than multicore, what else can improve performance?

Good thinking! Techniques like pipelining streamline instruction execution. You can remember this with 'pipeline for efficiency.' Let’s summarize: CPUs have evolved to cope with increased demands by using multi-core designs and superscalar execution.
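As a rough illustration of superscalar execution, the sketch below counts the cycles needed to issue a batch of instructions at different issue widths. It assumes every instruction is independent of the others, which real programs rarely are, so treat the result as a best case. The function name `issue_cycles` is made up for this example.

```python
import math


def issue_cycles(instruction_count, issue_width):
    """Best-case cycles to issue all instructions, assuming no dependencies."""
    if issue_width < 1:
        raise ValueError("issue_width must be at least 1")
    return math.ceil(instruction_count / issue_width)


print(issue_cycles(12, 1))  # 12  (scalar: one instruction per cycle)
print(issue_cycles(12, 2))  # 6   (two-wide superscalar)
print(issue_cycles(13, 4))  # 4   (the last cycle is only partly filled)
```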

Buses and Control Units

Finally, let’s touch on buses and control units. Can someone explain the function of a bus?

It’s like a communication pathway between components, right?

Correct! There are three types: data bus, address bus, and control bus. Can anyone share what each does?

The data bus transfers data, the address bus specifies where to send it, and the control bus carries control signals!

Nicely summarized! Now, the control unit coordinates operations within the CPU. Think of it as the traffic controller for data flow! Remember, 'C.U. is your Coordination Unit.'

What happens if there's a bottleneck in the bus?

Great concern! A bottleneck can slow down the entire system. To help, remember: 'no single lane for all the cars!' Let’s recap: buses enable communication between all parts, and the control unit orchestrates their coordination.
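The three buses can be pictured as the three arguments of a single memory transaction. The sketch below is a simplified model with made-up names (`Memory`, `on_bus`, and the `READ`/`WRITE` signals are assumptions); a real bus involves timing and electrical signaling that are not modeled here.

```python
class Memory:
    """A small memory that reacts to transactions arriving over the buses."""

    def __init__(self, size):
        self.cells = [0] * size

    def on_bus(self, control, address, data=None):
        """Handle one bus transaction.

        control: the control-bus signal, "READ" or "WRITE"
        address: the address-bus value selecting a cell
        data:    the data-bus value (only used for WRITE)
        """
        if control == "WRITE":
            self.cells[address] = data
            return None
        if control == "READ":
            return self.cells[address]
        raise ValueError(f"unknown control signal: {control}")


memory = Memory(8)
memory.on_bus("WRITE", address=3, data=42)
print(memory.on_bus("READ", address=3))  # 42
```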

Importance of Parallelism and Pipelining

Now, let’s consider the role of parallelism and pipelining. What do these terms mean in computing?

Parallelism means executing multiple tasks at the same time.

Exactly! It improves overall system performance. Can anyone give examples of parallelism?

Instruction-level and thread-level parallelism!

Great! Now, what about pipelining?

Pipelining splits the instruction execution into stages, right?

Yes! Just like an assembly line in production, it keeps several instructions in progress at once, each in a different stage. To remember this, think 'pipeline to perform on time!' Let’s summarize: both parallelism and pipelining are essential for high-speed computation, increasing efficiency and performance.
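To see the assembly line in action, this sketch prints which instruction occupies each stage on every clock cycle. The five stage names (`IF`, `ID`, `EX`, `MEM`, `WB`) are a classic textbook split assumed here; the lesson itself does not name the stages. The model also assumes no stalls or hazards.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]  # assumed five-stage split


def pipeline_schedule(instruction_count, stages=STAGES):
    """Return one dict per clock cycle mapping each busy stage to its instruction."""
    total_cycles = instruction_count + len(stages) - 1
    schedule = []
    for cycle in range(total_cycles):
        row = {}
        for stage_index, stage in enumerate(stages):
            # The instruction in this stage entered the pipeline stage_index cycles ago.
            instruction = cycle - stage_index
            if 0 <= instruction < instruction_count:
                row[stage] = f"I{instruction + 1}"
        schedule.append(row)
    return schedule


for cycle, row in enumerate(pipeline_schedule(3), start=1):
    print(cycle, row)
```

Three instructions finish in 7 cycles instead of the 15 they would need if each one ran all five stages before the next could start.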

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

The summary of key concepts highlights the organizational structure of modern computer systems, emphasizing functional blocks, the Von Neumann architecture, CPU evolution, and the role of buses and control units. It also covers parallelism and pipelining, which are crucial for high-speed computing.

Detailed

Summary of Key Concepts

This section encapsulates the essential ideas discussed in the chapter regarding the organization and structure of modern computer systems. It outlines the following key concepts:

- Functional Blocks: Modern computers are organized into distinct functional blocks such as input units, output units, memory units, control units, and arithmetic logic units (ALU), each serving a specific role in computation.

- Von Neumann Model: This widely used architecture is characterized by a single memory space for both data and instructions, supporting sequential instruction execution. Although prevalent, it has limitations, such as the bottleneck created when instructions and data share one bus to memory.

- CPU Design Evolution: CPUs have transitioned from single-core designs to more elaborate multi-core architectures, enhancing computational capabilities through parallel processing and increasing overall performance.

- Buses and Control Units: Buses facilitate communication between computer components while control units regulate data and instruction flow within the system, coordinating all operations effectively.

- Parallelism and Pipelining: To achieve higher processing speeds, modern systems incorporate parallelism, enabling simultaneous execution of multiple tasks, and pipelining, which partitions instruction execution into stages for efficient processing.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Modern Computer System Structure

Chapter 1 of 5

Chapter Content

● Modern computer systems are built using organized functional blocks.

Detailed Explanation

Modern computer systems consist of several organized components known as functional blocks. These blocks interact to perform computations and manage data effectively. The organization of these blocks is crucial for the efficient operation of the computer, allowing tasks to be completed quickly and accurately.

Examples & Analogies

Imagine a factory assembly line. Each worker (functional block) has a specific job (task) to complete, and they rely on one another to assemble the final product. Just as the efficiency of a factory depends on how well the workers cooperate, the efficiency of a computer depends on how well its functional blocks are organized and work together.

Von Neumann Model Limitations

Chapter 2 of 5

Chapter Content

● The Von Neumann model is common but has limitations.

Detailed Explanation

The Von Neumann model is a foundational architecture in computer science where a single memory space is used to store both data and instructions. Although widely used, it has significant limitations, such as a bottleneck that occurs when the CPU must wait to fetch both data and instructions from the same memory, which can slow down processing.

Examples & Analogies

Think of a single-lane road where cars (instructions and data) must share the same path. When many cars try to use it at once, traffic slows down. Similarly, the Von Neumann model can experience delays because data and instructions compete for the same path between memory and the CPU.

Evolution of CPU Design

Chapter 3 of 5

Chapter Content

● CPU design has evolved from single-core to multicore for better performance.

Detailed Explanation

CPU design has greatly evolved over time. Initially, processors had a single core that handled one task at a time. Today, multicore processors are common, allowing multiple cores to perform different tasks simultaneously. This evolution significantly increases computational speed and efficiency.

Examples & Analogies

Imagine a restaurant kitchen. In the past, there was only one chef (single-core) who cooked one dish at a time. Now, there are multiple chefs (multicore) working together, each preparing different dishes concurrently, which speeds up service and makes the kitchen more efficient.
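The kitchen analogy can be sketched as a small scheduling model: each dish (task) goes to whichever chef (core) is free first. This is an illustrative simplification that assumes tasks are fully independent and cost nothing to hand out; `finish_time` and the task durations are hypothetical.

```python
def finish_time(task_durations, core_count):
    """Give each task to the core that frees up first; return when the last core is done."""
    if core_count < 1:
        raise ValueError("core_count must be at least 1")
    busy_until = [0] * core_count
    for duration in task_durations:
        earliest = busy_until.index(min(busy_until))
        busy_until[earliest] += duration
    return max(busy_until)


tasks = [4, 3, 2, 2, 1]       # time units per task
print(finish_time(tasks, 1))  # 12
print(finish_time(tasks, 2))  # 6
print(finish_time(tasks, 4))  # 4
```

Going from two cores to four helps less than going from one to two, because the longest single task (4 units) sets a floor that extra cores cannot lower.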

Coordination of Buses and Control Units

Chapter 4 of 5

Chapter Content

● Buses and control units enable coordination between units.

Detailed Explanation

Buses and control units are essential for maintaining smooth communication within a computer system. Buses are pathways that transfer data and signals between different computer components, while control units orchestrate all operations, ensuring that data flows correctly between the CPU, memory, and peripherals.

Examples & Analogies

Consider a city's public transportation system. Buses (data buses) transport passengers (data) from one location to another, while the transit authority (control unit) manages schedules and routes, ensuring everything operates on time and efficiently.

Importance of Parallelism and Pipelining

Chapter 5 of 5

Chapter Content

● Parallelism and pipelining are essential for modern high-speed computing.

Detailed Explanation

Parallelism allows multiple operations to be executed simultaneously, significantly improving processing speed. Pipelining is a technique where different stages of instruction execution are overlapped. Both techniques are crucial in modern computing to handle complex operations and improve overall performance.
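The benefit of overlapping stages can be estimated with a short calculation. As an idealized sketch, assume $n$ instructions, a pipeline of $k$ stages that each take one clock cycle, and no stalls; these symbols are introduced here for illustration and do not appear in the chapter.

```latex
T_{\text{sequential}} = n \cdot k, \qquad
T_{\text{pipelined}} = k + (n - 1), \qquad
\text{speedup} = \frac{n \cdot k}{k + n - 1} \;\longrightarrow\; k \quad \text{as } n \to \infty
```

For example, with $n = 100$ and $k = 5$, sequential execution takes 500 cycles and pipelined execution takes 104, a speedup of about 4.8. Real pipelines fall short of this ideal because of hazards and stalls.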

Examples & Analogies

Think of an assembly line once again. Several workers performing different tasks at the same time is parallelism; starting the next product before the current one has left the line is pipelining. Combined, they finish products (computer operations) much faster than one worker handling one product at a time.

Key Concepts

- Functional Blocks: The key components of a computer system including input, output, memory, control, and processing units.

- Von Neumann Architecture: An architecture model that combines data and instruction memory into a single space.

- CPU Evolution: The transition from single-core CPUs to multi-core designs for enhanced performance.

- Buses: The communication channels that connect different components of a computer system.

- Parallelism: The simultaneous execution of tasks to improve performance.

Examples & Applications

The ALU in a CPU performs operations such as addition and logical comparisons, enabling the system to process commands effectively.
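A minimal sketch of such an ALU is shown below. It assumes an 8-bit word and two status flags, which is one common textbook arrangement rather than a description of any particular CPU; the function name `alu_8bit` and the operation names are made up for illustration. A comparison is modeled as a subtraction whose zero flag signals equality.

```python
def alu_8bit(op, a, b):
    """Return (result, flags) for one 8-bit operation.

    flags["zero"]  is True when the 8-bit result is 0.
    flags["carry"] is True when the true result did not fit in 8 bits
                   (a carry out for ADD, a borrow for SUB).
    """
    if op == "ADD":
        raw = a + b
    elif op == "SUB":
        raw = a - b
    elif op == "AND":
        raw = a & b
    elif op == "OR":
        raw = a | b
    else:
        raise ValueError(f"unknown ALU operation: {op}")
    result = raw & 0xFF  # keep only the low 8 bits
    flags = {"zero": result == 0, "carry": raw != result}
    return result, flags


print(alu_8bit("ADD", 200, 100))  # (44, {'zero': False, 'carry': True})
print(alu_8bit("SUB", 7, 7))      # (0, {'zero': True, 'carry': False})
```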

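Many modern processors, including those in smartphones, combine the two models: separate instruction and data caches (Harvard-style) sit in front of a single shared main memory (Von Neumann-style), balancing general-purpose flexibility with faster execution.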

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

In a CPU, the control unit will guide, while ALU processes, side by side.

Stories

Imagine a factory where the control unit directs workers (functions), the memory stores raw materials (data), and the ALU builds products (computations).

Memory Tools

Remember 'IOM C.A.' for Input, Output, Memory, Control, and Arithmetic Logic units.

Acronyms

Use 'V. N. H.' to recall Von Neumann for versatility and Harvard for high-speed.

Glossary

- Functional Unit

A distinct component of a computer system responsible for specific functions, such as input, output, memory, and processing.

- Von Neumann Architecture

A computer architecture design that uses a single memory for data and instructions, enabling sequential execution.

- Harvard Architecture

A computer architecture design that utilizes separate memory spaces for data and instructions, allowing parallel access.

- CPU (Central Processing Unit)

The main processing component of a computer, executing instructions and performing calculations.

- Bus

A communication pathway for transferring data, addresses, and control signals between computer components.

- Pipelining

A method in instruction execution where different stages of multiple instructions are processed simultaneously.

- Parallelism

The simultaneous execution of multiple processes or instructions to enhance performance.
