Role of Inverse Matrices in Solving Systems

Introduction to Inverse Matrices

Today, we are focusing on the role of inverse matrices in solving systems of linear equations. Can anyone tell me what an inverse matrix is?

Is it a matrix that, when multiplied by the original matrix, gives you the identity matrix?

Exactly! The inverse of a matrix A is denoted as A⁻¹, and when we multiply A by its inverse, we get the identity matrix I. This property is crucial for solving systems of equations efficiently.

So, how does the inverse matrix help us find solutions to these systems?

Great question! If A is square and invertible, we can express the solution to the system Ax = b as x = A⁻¹b. But we should also be aware of some challenges.

What are those challenges?

Computing the inverse can be expensive for larger systems and may not be numerically stable. That's why in some applications, alternative methods may be preferred.

Can you give examples where using the inverse matrix is beneficial?

Certainly! It's particularly useful for symbolic solutions in design models or performing sensitivity analysis in stress computations. Let's remember that better alternatives exist for large systems.

To summarize today, inverse matrices provide a powerful tool for solving linear systems, but we must be mindful of their computational costs and precision issues.

Computational Costs and Alternatives

Continuing our conversation, let’s discuss why calculating the inverse can be computationally intensive. What are your thoughts?

Is it because you have to perform a lot of mathematical operations?

Exactly. Inverting an n × n matrix with standard methods takes on the order of n³ operations, so the cost climbs quickly as the matrix grows. What alternatives can we consider instead?

What about LU decomposition? Is that a good alternative?

Yes! LU decomposition factors a matrix into a lower triangular matrix L and an upper triangular matrix U, which is generally more efficient for solving linear systems without needing the inverse.

But why use LU decomposition instead of the inverse?

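Solving with the LU factors takes roughly a third of the arithmetic needed to form the inverse and usually accumulates less rounding error. The factors can be reused for every new right-hand side b, and they suit large and sparse systems better. Remember, our goal is stability and efficiency.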

Are there situations where using the inverse matrix is still appropriate?

Yes, for smaller systems or when needing symbolic solutions in design models, using the inverse can be quite effective due to its clarity and directness.

In conclusion, while inverse matrices can be useful, utilizing methods like LU decomposition often provides a more robust strategy for larger systems.

Real-World Applications

As we wrap up, let’s look at how the understanding of inverse matrices extends to real-world applications. Can anyone think of examples?

Maybe in engineering for stress analysis?

Correct! Engineers use inverse matrices to conduct sensitivity analyses in stress computations, determining how changes impact stability.

Could this also apply to other fields?

Absolutely! Beyond engineering, inverse matrices are used in physics, economics, and data science to solve various optimization problems or system equations.

What about in computational models?

Great point! In computational modeling, systems often rely on the direct computation of solutions using matrices, with inverse matrices simplifying the mathematics involved.

So, in practice, understanding when to use inverse matrices is important for efficiency?

Exactly. Knowing when to apply these concepts and choosing the right methods maximizes our computational resources and ensures accurate results.

In closing, we appreciate the dual nature of inverse matrices in theory and practice, particularly their implications in various fields.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

When the matrix A is square and invertible, the system of equations Ax = b can be solved directly using the formula x = A⁻¹b. However, calculating the inverse can be computationally expensive and may lead to numerical inaccuracies, so alternative methods are preferred for larger systems.

Detailed

Role of Inverse Matrices in Solving Systems

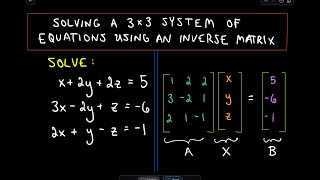

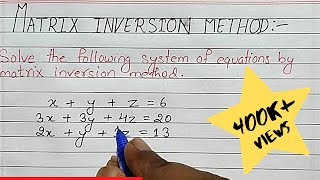

Inverse matrices are an essential tool in the solution of systems of linear equations, particularly when dealing with square and invertible matrices. The primary relation is expressed through the formula:

x = A⁻¹b

Here A is the coefficient matrix, b is the vector of constants, and x is the solution vector containing the unknowns. While this method provides a direct way to arrive at the solution, it has its downsides:

- Computationally Expensive: Inverting a matrix can be costly in terms of time and resources, especially for large systems.

- Numerical Instability: The computed inverse can be sensitive to perturbations in the matrix, impacting solution accuracy.

Due to these limitations, alternative methods such as LU decomposition or iterative approaches are often preferred for larger systems, where efficiency and stability are crucial. Despite these drawbacks, inverse matrices remain useful for symbolic solutions in design models and for sensitivity analysis in stress computations, where understanding how a system behaves under slight changes is vital.
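The lesson mentions iterative approaches without naming one. As a minimal sketch, the block below uses Jacobi iteration, chosen here only because it is among the simplest such methods. The function name `jacobi`, the tolerance, and the sample system are illustrative assumptions, not part of the lesson.

```python
def jacobi(a, b, tol=1e-10, max_iter=500):
    """Approximate the solution of a*x = b by Jacobi iteration.

    Convergence is guaranteed when `a` is strictly diagonally dominant;
    for other matrices the iteration may fail to converge.
    """
    n = len(a)
    x = [0.0] * n  # initial guess
    for _ in range(max_iter):
        # Solve equation i for x[i], using the previous iterate for the rest.
        x_new = [
            (b[i] - sum(a[i][j] * x[j] for j in range(n) if j != i)) / a[i][i]
            for i in range(n)
        ]
        # Stop once successive iterates agree to within the tolerance.
        if max(abs(x_new[i] - x[i]) for i in range(n)) < tol:
            return x_new
        x = x_new
    raise RuntimeError("Jacobi iteration did not converge")


# Hypothetical strictly diagonally dominant system (illustrative values only).
A = [[4.0, 1.0, 0.0],
     [1.0, 5.0, 2.0],
     [0.0, 2.0, 6.0]]
b = [6.0, 17.0, 22.0]

x = jacobi(A, b)
print(x)
```

No inverse and no factorization is formed: each sweep only needs the entries of A, which is why methods of this kind are attractive when A is large and sparse.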

Solving with Inverse Matrices

Chapter 1 of 3

Chapter Content

If A is a square and invertible matrix, the system can be solved as:

x = A⁻¹b

Detailed Explanation

This chunk introduces the use of inverse matrices to solve systems of linear equations. When the coefficient matrix A is square (it has as many rows as columns) and invertible (an inverse A⁻¹ exists), the system Ax = b has exactly one solution. To find it, multiply both sides of Ax = b on the left by A⁻¹: the left-hand side becomes A⁻¹Ax = Ix = x, which leaves x = A⁻¹b.
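Written out step by step, the rearrangement looks as follows. The 2 × 2 closed form after it is a standard identity, added here for illustration. The entry names p, q, r, s are placeholder symbols that do not appear in the lesson.

```latex
\begin{aligned}
A\mathbf{x} &= \mathbf{b} \\
A^{-1}A\mathbf{x} &= A^{-1}\mathbf{b} \\
I\mathbf{x} &= A^{-1}\mathbf{b} \\
\mathbf{x} &= A^{-1}\mathbf{b}
\end{aligned}
\qquad\qquad
A = \begin{pmatrix} p & q \\ r & s \end{pmatrix},\quad ps - qr \neq 0
\;\Longrightarrow\;
A^{-1} = \frac{1}{ps - qr}\begin{pmatrix} s & -q \\ -r & p \end{pmatrix}
```

The condition ps − qr ≠ 0 is the 2 × 2 form of invertibility: when the determinant is zero, no inverse exists and the formula x = A⁻¹b does not apply.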

Examples & Analogies

Think of it like having a special key (the inverse of the matrix) that only works with a specific lock (the original matrix A). When you want to open the lock (find the solution x), you can use the key (the inverse matrix) to unlock it. Each key only works for its specific lock, ensuring that when you use it correctly, you can access the information you need.

Challenges with Inverse Matrices

Chapter 2 of 3

Chapter Content

However:

- Computing the inverse is expensive for large systems.

- Not numerically stable; better alternatives include LU decomposition or iterative methods.

Detailed Explanation

Solving with an explicit inverse is feasible, but it has significant drawbacks for large systems. Forming A⁻¹ for an n × n matrix takes on the order of n³ arithmetic operations, roughly three times the work of solving Ax = b by factorization, so the cost grows quickly with matrix size. Numerical stability is the second concern. In floating-point arithmetic, forming A⁻¹ and then multiplying by b usually accumulates more rounding error than a factorization-based solve, and when A is ill-conditioned (close to singular), small errors in the input data can produce large errors in the solution. For these reasons, LU decomposition or iterative methods are recommended for larger systems, as they are generally more efficient and stable.
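A minimal sketch of the LU approach in plain Python, using partial pivoting (row swaps) to limit rounding error. The function names `lu_decompose` and `lu_solve` and the sample system are illustrative assumptions. Production code would normally call a tested library routine instead.

```python
def lu_decompose(a):
    """Factor a square matrix so that P*A = L*U (partial pivoting).

    Returns (lu, perm). `lu` holds L below the diagonal (its unit diagonal
    is implied) and U on and above the diagonal. `perm[i]` is the index of
    the original row that ended up in position i.
    """
    n = len(a)
    lu = [row[:] for row in a]  # work on a copy
    perm = list(range(n))
    for k in range(n):
        # Choose the largest available pivot in column k.
        pivot = max(range(k, n), key=lambda i: abs(lu[i][k]))
        if lu[pivot][k] == 0:
            raise ValueError("matrix is singular")
        if pivot != k:
            lu[k], lu[pivot] = lu[pivot], lu[k]
            perm[k], perm[pivot] = perm[pivot], perm[k]
        for i in range(k + 1, n):
            lu[i][k] /= lu[k][k]  # multiplier, stored as an entry of L
            for j in range(k + 1, n):
                lu[i][j] -= lu[i][k] * lu[k][j]
    return lu, perm


def lu_solve(lu, perm, b):
    """Solve A*x = b using the factors returned by lu_decompose."""
    n = len(lu)
    # Forward substitution: L*y = P*b
    y = [0.0] * n
    for i in range(n):
        y[i] = b[perm[i]] - sum(lu[i][j] * y[j] for j in range(i))
    # Back substitution: U*x = y
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(lu[i][j] * x[j] for j in range(i + 1, n))) / lu[i][i]
    return x


# Hypothetical 3x3 system (illustrative values only).
A = [[2.0, 1.0, 1.0],
     [4.0, -6.0, 0.0],
     [-2.0, 7.0, 2.0]]
b = [5.0, -2.0, 9.0]

lu, perm = lu_decompose(A)
x = lu_solve(lu, perm, b)
print(x)
```

The expensive step, `lu_decompose`, runs once. Each additional right-hand side needs only the two cheap substitution passes in `lu_solve`, and A⁻¹ is never formed.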

Examples & Analogies

Imagine trying to bake a large cake from scratch. If the recipe requires many precise measurements and techniques, it might take a long time and be prone to mistakes, especially if you’re working alone. On the other hand, if you use a more streamlined approach, such as a pre-mixed cake batter or a simplified recipe, you can achieve a delicious cake more efficiently and with fewer chances of error. Similarly, LU decomposition or iterative methods streamline the process of solving large systems, making it faster and more reliable.

Applications of Inverse Matrices

Chapter 3 of 3

Chapter Content

Used for:

- Symbolic solution in design models.

- Sensitivity analysis in stress computations.

Detailed Explanation

Despite the challenges, inverse matrices have important applications, particularly in specific types of problems. They are often used in design models where symbolic solutions are needed. For instance, engineers might derive formulas that depend on certain variables; having an inverse allows them to solve for these variables symbolically. Additionally, inverse matrices can play a vital role in sensitivity analysis, which assesses how the outcomes of a system are affected by changes in input data—particularly in stress computations where understanding the effects of varying loads or materials is crucial.
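One way to see the link to sensitivity analysis: because x = A⁻¹b, column j of A⁻¹ gives the change in every unknown per unit change in input j. The sketch below checks this on a small system using exact fractions. The matrix `K`, the load vector `f`, and the helper names are hypothetical stand-ins for a stress model, not values from the lesson.

```python
from fractions import Fraction


def inverse_2x2(a):
    """Return the exact inverse of a 2x2 matrix given as nested lists."""
    (p, q), (r, s) = a
    det = p * s - q * r
    if det == 0:
        raise ValueError("matrix is singular, no inverse exists")
    return [[s / det, -q / det], [-r / det, p / det]]


def mat_vec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


# Hypothetical stiffness-like matrix and load vector (illustrative only).
K = [[Fraction(4), Fraction(-1)],
     [Fraction(-1), Fraction(3)]]
f = [Fraction(10), Fraction(5)]

K_inv = inverse_2x2(K)
u = mat_vec(K_inv, f)  # baseline response

# Increase the first load slightly and recompute the response.
delta = Fraction(1, 10)
u_perturbed = mat_vec(K_inv, [f[0] + delta, f[1]])
change = [up - ui for up, ui in zip(u_perturbed, u)]

# Prediction from the first column of K_inv alone.
predicted = [delta * K_inv[0][0], delta * K_inv[1][0]]
print(change == predicted)
```

The observed change matches the column-based prediction exactly, which is why having A⁻¹ in hand is convenient when many "what if this load changes" questions are asked about the same model.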

Examples & Analogies

Think of a designer creating a geometric pattern on a computer. They may want to know how changing one aspect of the design affects the overall look. By using models (the inverse matrices), they can quickly see the implications of these changes without having to recreate the entire design each time. This efficiency allows for rapid iterations and improved outcomes in their creative process.

Key Concepts

-

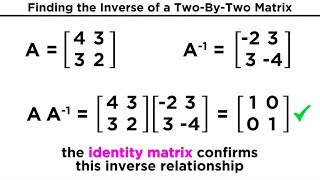

Inverse Matrix: A matrix that, when multiplied by the original matrix, results in the identity matrix.

-

Square Matrix: A matrix with an equal number of rows and columns, required for the concept of invertibility.

-

Computational Expense: The cost in computational resources and time required to compute the inverse of a matrix.

-

Numerical Stability: The degree to which an algorithm maintains accuracy while performing computations.

Examples & Applications

In a design model, if we have an equilibrium equation represented by Ax = b, and A is invertible, we can find the unknowns easily using x = A⁻¹b.
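A small worked instance of x = A⁻¹b, using exact fractions so that rounding plays no part. The 2 × 2 system and the helper names `inverse_2x2` and `mat_vec` are illustrative assumptions, not taken from the lesson.

```python
from fractions import Fraction


def inverse_2x2(a):
    """Return the exact inverse of a 2x2 matrix given as nested lists."""
    (p, q), (r, s) = a
    det = p * s - q * r
    if det == 0:
        raise ValueError("matrix is singular, no inverse exists")
    return [[s / det, -q / det], [-r / det, p / det]]


def mat_vec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


# Hypothetical equilibrium system:
#   2*x1 +   x2 = 5
#     x1 + 3*x2 = 10
A = [[Fraction(2), Fraction(1)],
     [Fraction(1), Fraction(3)]]
b = [Fraction(5), Fraction(10)]

A_inv = inverse_2x2(A)
x = mat_vec(A_inv, b)          # x = A^-1 b
residual_ok = mat_vec(A, x) == b  # substitute back to confirm A x = b
print([str(v) for v in x], residual_ok)
```

Here the solution is x1 = 1, x2 = 3. Substituting back into Ax = b is a cheap check worth doing whenever a solution is obtained through an inverse.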

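In sensitivity analysis, the entries of A⁻¹ show how strongly each unknown responds to each input: because x = A⁻¹b, entry (i, j) of A⁻¹ is the change in xᵢ per unit change in bⱼ. Large entries signal that minor changes in the input data can significantly affect the output.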
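The sketch below illustrates how a minor input change can swing the output when the matrix is nearly singular. It uses exact fractions, so the effect shown comes from the system itself rather than from rounding. The matrix, the value `eps`, and the helper `solve_2x2` are illustrative assumptions.

```python
from fractions import Fraction


def solve_2x2(a, b):
    """Solve a 2x2 system exactly with Cramer's rule."""
    (p, q), (r, s) = a
    det = p * s - q * r
    if det == 0:
        raise ValueError("matrix is singular")
    return [(b[0] * s - q * b[1]) / det, (p * b[1] - r * b[0]) / det]


eps = Fraction(1, 10000)

# Nearly singular matrix: the two rows are almost identical.
A = [[Fraction(1), Fraction(1)],
     [Fraction(1), 1 + eps]]

x = solve_2x2(A, [Fraction(2), 2 + eps])              # original input
x_shifted = solve_2x2(A, [Fraction(2), 2 + 2 * eps])  # second entry nudged by eps

print([str(v) for v in x], [str(v) for v in x_shifted])
```

Nudging one input by 0.0001 moves the solution from (1, 1) to (0, 2). The determinant of this matrix is only 0.0001, so the entries of its inverse are on the order of 10,000, which is the warning sign a sensitivity analysis looks for.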

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

To find the inverse, take the time, it’s not just simple; it takes some rhyme.

Stories

Imagine an engineer trying to solve a structure's stress. They calculate the inverse to see if their design passes the test!

Memory Tools

To remember the key ideas around inverse matrices, think: I for Identity, U for Unique (solution), S for Square matrix, N for Numerical stability (a caution to check, not a guarantee).

Acronyms

I-USE-N. I for Inverse, U for Useful, S for Square, E for Efficiency (weigh the cost), N for Numerical stability (verify it).

Glossary

- Inverse Matrix

A matrix A⁻¹ that satisfies AA⁻¹ = A⁻¹A = I, where I is the identity matrix.

- Square Matrix

A matrix with the same number of rows and columns.

- Identity Matrix

A square matrix in which all diagonal elements are 1 and all other elements are 0.

- Sensitivity Analysis

A method to determine how the variation in the output of a mathematical model can be attributed to different variations in its inputs.

- LU Decomposition

A method of decomposing a matrix into a lower triangular matrix and an upper triangular matrix to simplify computations.
