Future Trends in SIMD, Vector Processing, and GPUs

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Next-Generation SIMD Extensions

Today, we'll discuss next-generation SIMD extensions. For instance, AVX-512 provides 512-bit vector registers, so a single instruction can operate on more data elements in parallel.

What's the main benefit of these wider vector registers?

Great question! Wider registers mean that a single instruction can process more data elements at once, for example sixteen 32-bit values in one 512-bit register, which is crucial for data-intensive tasks.

Are there specific applications that benefit the most?

Absolutely! Applications in AI and scientific simulations gain substantial performance boosts due to these enhancements.

Can you sum up how SIMD extensions help in these applications?

Certainly! SIMD extensions improve throughput by applying one operation to many data elements simultaneously, which is vital for processing large data sets efficiently.

Machine Learning on GPUs

Next, let's explore the growing use of GPUs for machine learning. Why do you think GPUs are preferred for these tasks?

Because they can handle many operations simultaneously, right?

Exactly! Their architecture allows them to excel at parallel processing, which is ideal for training complex models like neural networks.

What about performance gains for training and inference?

Good question! Because GPUs run many operations in parallel, they can deliver large speed-ups in both the training and inference phases, which has made them central to AI research.

Quantum Computing and GPUs

Finally, let's discuss the intersection of quantum computing and GPUs. What are your thoughts on how these technologies might work together?

Could GPUs help execute quantum algorithms in some way?

Possibly! Future GPUs may incorporate quantum processing elements or hybrid approaches, which could help address certain complex computational challenges.

That sounds revolutionary! But do we know when it will happen?

It's still early, but current advancements are promising. The fusion of classical and quantum computing could unlock unprecedented capabilities.

Can you recap the key points from today?

Sure! We discussed how SIMD extensions enhance performance in data-intensive applications, the growing role of GPUs in machine learning, and the promising future of quantum computing integration.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Standard

As computational requirements continue to rise, next-generation SIMD extensions are expected to improve performance for data-intensive applications, particularly in AI and machine learning. Quantum computing, though still at an early stage, may also open the door to hybrid processing approaches.

Detailed

Future Trends in SIMD, Vector Processing, and GPUs

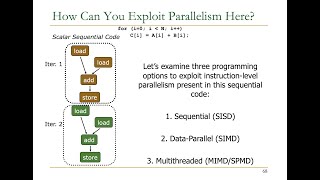

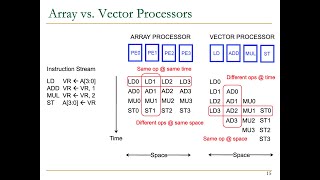

As computational demands grow, vector processing, SIMD (Single Instruction, Multiple Data), and GPUs (Graphics Processing Units) are rapidly evolving to support larger and more complex workloads. The trends include:

1. Next-Generation SIMD Extensions

SIMD instruction sets such as AVX-512 in Intel CPUs provide wider (512-bit) vector registers and more advanced operations, which can significantly improve performance for data-intensive tasks such as artificial intelligence (AI) and scientific simulations.

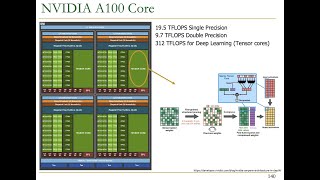

2. Machine Learning on GPUs

The increasing utilization of GPUs for machine learning and AI workloads is expected to spur further developments in SIMD and vector processing capabilities, focusing on optimizing deep learning training and inference processes.

3. Quantum Computing and GPUs

Although the idea is still at an early stage, future GPUs may integrate quantum processing elements or hybrid approaches, improving their ability to tackle complex problems that traditional processors struggle to solve efficiently.

These trends underscore the significant progress and innovations anticipated in the realm of parallel computing.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

Next-Generation SIMD Extensions

Chapter 1 of 3

Chapter Content

New SIMD instruction sets like AVX-512 in Intel CPUs provide wider vector registers and more advanced operations to improve performance for data-intensive tasks such as AI and scientific simulations.

Detailed Explanation

SIMD extensions such as AVX-512 are designed to increase data-processing throughput. They provide wider vector registers, so more data elements can be processed by a single instruction. This is particularly important for data-heavy applications like artificial intelligence (AI) and scientific simulations, because it allows faster computation and more efficient use of processing resources.
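To make the effect of register width concrete, here is a minimal sketch in plain Python. It is a toy model, not real vector hardware: the function name `simd_add` and the `lanes` parameter are assumptions made for this illustration, and each loop iteration simply stands in for one vector instruction.

```python
def simd_add(a, b, lanes=16):
    """Add two equal-length lists one register-width chunk at a time.

    Toy model only: each loop iteration stands in for ONE vector add
    instruction that handles up to `lanes` elements. The default of 16
    corresponds to 32-bit elements in a 512-bit register.
    Returns the sums and the number of vector operations issued.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    result = []
    vector_ops = 0
    for start in range(0, len(a), lanes):
        chunk_a = a[start:start + lanes]
        chunk_b = b[start:start + lanes]
        # The last chunk may be only partly filled.
        result.extend(x + y for x, y in zip(chunk_a, chunk_b))
        vector_ops += 1
    return result, vector_ops


a = list(range(100))
b = list(range(100, 200))
for lanes in (1, 4, 8, 16):
    _, ops = simd_add(a, b, lanes=lanes)
    print(f"{lanes:2d} lanes -> {ops:3d} operations")
```

For 100 elements, the model needs 100 operations with one lane but only 7 with sixteen lanes. The final operation is only partly filled (4 of 16 lanes), which is the kind of leftover that real code handles with masking or a scalar tail loop.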

Examples & Analogies

Think of SIMD extensions like adding more lanes to a highway. With more lanes, more cars (data elements) can travel side by side, so more traffic gets through in the same amount of time. Wider vector registers work the same way: they let more data elements be processed simultaneously, which speeds up calculations in complex tasks.

Machine Learning on GPUs

Chapter 2 of 3

Chapter Content

The growing use of GPUs for machine learning and AI workloads is expected to drive further innovations in SIMD and vector processing capabilities, particularly for accelerating deep learning training and inference.

Detailed Explanation

As machine learning, especially deep learning, continues to expand, GPUs are becoming essential tools due to their ability to perform many calculations at once. This increased reliance on GPUs will push the development of new SIMD and vector processing technologies, as these advancements will help in efficiently handling the huge amounts of data and complex algorithms typical in machine learning tasks.
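The idea of "many calculations at once" can be sketched as one logical thread per output element. The example below is a sequential Python model, not GPU code: `saxpy_kernel` and `launch` are hypothetical names, and the block/thread indexing is borrowed from the convention commonly used in GPU programming.

```python
import math


def saxpy_kernel(block_idx, thread_idx, block_dim, alpha, x, y, out):
    """Work done by ONE logical thread: a single output element."""
    i = block_idx * block_dim + thread_idx  # global element index
    if i < len(x):  # guard: the last block may extend past the data
        out[i] = alpha * x[i] + y[i]


def launch(kernel, n, block_dim, *args):
    """Run the kernel for every (block, thread) pair.

    The loops here are sequential. On a GPU these iterations would run
    in parallel, and the order does not matter because no thread reads
    another thread's output.
    """
    grid_dim = math.ceil(n / block_dim)
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)
    return grid_dim


x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [10.0, 20.0, 30.0, 40.0, 50.0]
out = [0.0] * len(x)
blocks = launch(saxpy_kernel, len(x), 4, 2.0, x, y, out)
print(blocks, out)
```

Because each element is computed independently, the same kernel can be spread over as many threads as the hardware offers, which is the property that makes this style of workload a good fit for GPUs.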

Examples & Analogies

Consider a chef preparing a multi-course meal for a large banquet. A traditional kitchen might get bogged down when trying to produce a lot of meals at once. However, a modern kitchen equipped with efficient gadgets acts like a GPU, allowing multiple tasks (like chopping, boiling, and baking) to happen simultaneously. This way, the chef can serve the guests faster, just as GPUs accelerate the processing speeds in machine learning workflows.

Quantum Computing and GPUs

Chapter 3 of 3

Chapter Content

While quantum computing is still in its infancy, it is expected that future GPUs may incorporate elements of quantum processing or hybrid approaches to handle complex workloads that cannot be efficiently handled by classical processors.

Detailed Explanation

Quantum computing represents a new frontier in computing technology. It has the potential to solve certain types of problems much faster than classical (traditional) computers can. Researchers anticipate that future GPUs might gain hybrid capabilities that combine classical and quantum processing, targeting tasks whose computational demands classical GPUs alone cannot meet efficiently.
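One way to see why some quantum workloads overwhelm classical hardware is to count the memory needed to represent a quantum state exactly on a classical machine: n qubits require 2^n complex amplitudes. The sketch below assumes 16 bytes per amplitude (two 64-bit floats); the function name `state_vector_bytes` is hypothetical, and the example says nothing about how a future hybrid GPU would actually be built.

```python
# Assumption: each complex amplitude is stored as two 64-bit floats.
BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(num_qubits):
    """Memory needed to store the full state of `num_qubits` qubits."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


for n in (10, 20, 30, 40):
    gib = state_vector_bytes(n) / 2 ** 30
    print(f"{n} qubits: {gib:g} GiB")
```

Each added qubit doubles the memory requirement, so a full state vector for 30 qubits already needs 16 GiB under this assumption, and 40 qubits would need about a thousand times more.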

Examples & Analogies

Imagine trying to solve a complex jigsaw puzzle with thousands of pieces. Traditional methods of putting it together (classical processors) might take a long time, but a specialized method (quantum processing) might recognize certain patterns and fit those pieces together much more quickly. Just as combining the two methods could solve the puzzle faster, combining classical GPUs with quantum computing could change how we tackle challenging computational tasks.

Key Concepts

- Next-Generation SIMD Extensions: Innovations like AVX-512 enhance data processing capabilities in CPUs.

- Machine Learning on GPUs: GPUs are increasingly crucial for AI applications due to their parallel processing abilities.

- Quantum Computing and GPUs: Future technologies may integrate quantum computing features in classical GPU architecture for superior performance.

Examples & Applications

The AVX-512 instruction set allows CPUs that support it to operate on 512 bits of data with a single instruction, for example sixteen 32-bit floating-point values, which can significantly speed up AI computations.
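How many values fit in those 512 bits depends on the width of each element. The short sketch below just does the arithmetic; the type names are labels chosen for this illustration, and which element types a given CPU can actually operate on depends on the AVX-512 subsets it implements.

```python
REGISTER_BITS = 512

# Illustrative element widths in bits (labels only, not a claim about
# which operations any specific CPU supports).
ELEMENT_BITS = {"int8": 8, "float16": 16, "float32": 32, "float64": 64}


def lanes_per_register(element_bits, register_bits=REGISTER_BITS):
    """Number of elements of the given width that fit in one register."""
    if element_bits <= 0 or register_bits % element_bits != 0:
        raise ValueError("element width must evenly divide the register width")
    return register_bits // element_bits


for name, bits in ELEMENT_BITS.items():
    print(f"{name:8s} {lanes_per_register(bits):3d} lanes")
```

Narrower elements mean more lanes per instruction, which is one reason reduced-precision number formats are attractive for AI workloads.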

GPUs enable deep learning frameworks like TensorFlow to perform fast matrix multiplications that are fundamental to training neural networks.
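Matrix multiplication parallelizes well because every output element is an independent dot product. The following plain-Python sketch shows that structure; it does not use TensorFlow or any GPU library, and the names `matmul` and `multiply_add_count` are assumptions made for this example.

```python
def matmul(a, b):
    """Multiply matrices given as lists of rows.

    Each output element c[i][j] depends only on row i of `a` and
    column j of `b`, so all of them could be computed in parallel.
    """
    rows, inner, cols = len(a), len(b), len(b[0])
    if any(len(row) != inner for row in a):
        raise ValueError("inner dimensions do not match")
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]


def multiply_add_count(rows, inner, cols):
    """Number of multiply-add steps in a (rows x inner) @ (inner x cols) product."""
    return rows * inner * cols


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
print(multiply_add_count(1024, 1024, 1024))
```

Even a single 1024 x 1024 product involves over a billion multiply-add steps, and training a neural network repeats products like this many times, which is why hardware that runs them in parallel matters so much.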

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

When machines compute so quick, SIMD kicks, makes processing slick.

Stories

Imagine a busy chef preparing multiple dishes at once: that's SIMD—cooking many meals with one master recipe!

Memory Tools

AVX-512: A Very eXceptional 512-bit performance for high-intensity tasks.

Acronyms

G-P-Q

GPUs accelerate tasks through Parallel processing, with Quantum integration as a possible future trend.

Glossary

- SIMD

Single Instruction, Multiple Data; a parallel computing method where a single instruction processes multiple data points simultaneously.

- AVX-512

Advanced Vector Extensions 512; a family of x86 SIMD instruction-set extensions, introduced by Intel, that provide 512-bit vector registers for enhanced parallel processing.

- Machine Learning

A subset of artificial intelligence that involves the use of algorithms to allow computers to learn from and make predictions based on data.

- Quantum Computing

A type of computing that leverages quantum-mechanical effects, such as superposition and entanglement, and has the potential to solve certain classes of complex problems much faster than classical computers.
