SIMD Instructions

Interactive Audio Lesson

Understanding SIMD Basics

Today, we’re diving into SIMD, which stands for Single Instruction, Multiple Data. Who can tell me what that means?

It means one instruction is applied to multiple data points at once, right?

Exactly! This means we can process more data in less time. Think of SIMD as a way to achieve high throughput in data processing. Can anyone name an area where this is useful?

Like in video games, where lots of graphics need to be processed quickly?

Spot on! In graphics rendering, SIMD speeds up the computation remarkably. Remember, SIMD leverages data-level parallelism. Let's dive deeper into how that works.

Does it mean that SIMD only works with certain types of data?

Good question! Yes, SIMD is most effective when the same operation is applied to many data elements of the same type, such as the entries of an array or vector. Let’s summarize: SIMD enables us to handle multiple data elements simultaneously, boosting performance in tasks like graphics and scientific simulations.

Element-wise vs. Gather/Scatter Operations

Now, let’s explore the types of operations SIMD instructions can perform. Can anyone explain what element-wise operations are?

They apply the same operation, like addition, to each element in a vector, right?

Precisely! For example, adding two arrays element-wise would be a fundamental use of SIMD. What about gather/scatter operations? Anyone know what these do?

Is it about accessing non-contiguous memory locations? Like if data isn't arranged in a single block?

Exactly! A gather operation pulls data from various memory locations into a single vector, while a scatter operation does the opposite, writing a vector's elements out to various locations. This flexibility lets us use SIMD even when data isn't stored contiguously. Can we see how these operations boost performance in applications?

In tasks like machine learning, where we have a lot of data to analyze, right?

Yes! In deep learning, SIMD can drastically speed up operations like matrix multiplication. To summarize: element-wise operations apply the same computation independently to every element of a vector, while gather/scatter operations provide flexible access to data spread across memory.

Performance Impacts of SIMD

Let’s wrap up our SIMD discussion with its impact on performance. What do you think happens when we use these instructions?

We can process data faster because we’re executing one instruction with multiple data points!

Exactly! This is what we call increased throughput. This means that SIMD can drastically reduce the time for operations involving large datasets. Can you think of specific cases where this would apply?

In scientific simulations for weather modeling or in video encoding.

Exactly! Whenever we have tasks that process large amounts of similar data, SIMD makes them much faster. As a takeaway today, remember that SIMD is a core feature of modern processors, enabling efficient parallel data processing.

Introduction & Overview

Quick Overview

Standard

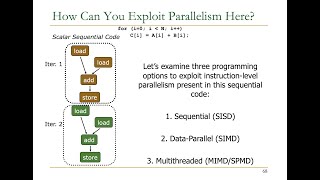

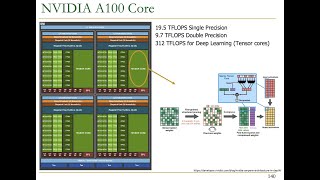

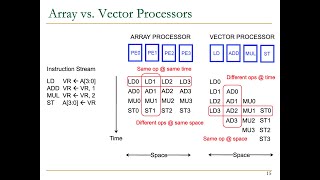

SIMD (Single Instruction, Multiple Data) instructions are essential for fast parallel processing in modern CPUs and GPUs. They support element-wise operations and gather/scatter memory operations, and they are crucial for achieving high performance on large datasets.

Detailed

SIMD (Single Instruction, Multiple Data) instructions play a pivotal role in vector processing by allowing a single instruction to execute across multiple data elements simultaneously. This parallel execution model is highly beneficial for tasks that consist of repetitive operations over large datasets, making it integral in fields such as image processing, scientific simulations, and machine learning.

Key Points Covered:

- Element-wise Operations: These fundamental operations (such as addition, subtraction, and multiplication) apply independently to each data element in a vector, which is far more efficient than processing the elements one at a time in scalar code.

- Gather/Scatter Operations: These provide non-contiguous memory access: a gather loads elements from scattered addresses into a vector register, and a scatter writes them back to scattered addresses. This flexibility is essential for irregular data structures.

- Performance Gains: Because one instruction processes several data elements, SIMD can cut the execution time of vector tasks substantially, ideally by a factor equal to the number of elements that fit in a vector register.
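The ideal gain can be estimated with simple arithmetic. Assume a vector register of $R$ bits holding elements of $b$ bits each, and $N$ elements to process (these symbols are introduced here for illustration):

$$
W = \frac{R}{b}, \qquad
\text{vector instructions} = \left\lceil \frac{N}{W} \right\rceil, \qquad
\text{ideal speedup} \le W
$$

For example, a 256-bit register (as in x86 AVX) holding 32-bit single-precision floats gives $W = 256/32 = 8$ lanes, so adding two arrays of $N = 1000$ floats needs $\lceil 1000/8 \rceil = 125$ vector additions instead of 1000 scalar ones. This is an upper bound: memory bandwidth, gather/scatter costs, and code that cannot be vectorized usually keep the real speedup below $W$.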

In summary, understanding SIMD instructions is crucial for leveraging parallel processing capabilities, especially in modern computational applications.

Audio Book

Element-wise Operations

Chapter 1 of 3

Chapter Content

Operations that are applied to each data element in a vector, such as addition, multiplication, and logical comparisons.

Detailed Explanation

Element-wise operations refer to actions that are performed independently on each component of a vector. For example, in a vector that holds several numbers, if you want to add 5 to each number, the SIMD instruction would perform the addition simultaneously for all elements in the vector. This parallel processing can dramatically speed up calculations compared to processing each element one by one.
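To make the "add 5 to each number" example concrete, here is a minimal sketch that models a SIMD register as a fixed-length Python list. The lane count and the helper names `broadcast`, `vec_add`, and `vec_gt` are hypothetical, chosen for illustration; real hardware does this in a single instruction rather than a Python loop.

```python
# Toy model of a SIMD register as a fixed-length Python list.
# LANES = 4 is an assumption for illustration (e.g. 4 x 32-bit floats fit a
# 128-bit register); real widths depend on the instruction set.
LANES = 4


def broadcast(value):
    """Copy one scalar into every lane (hypothetical helper, like a 'splat')."""
    return [value] * LANES


def vec_add(a, b):
    """Element-wise add: lane i of the result depends only on a[i] and b[i]."""
    assert len(a) == len(b) == LANES
    return [x + y for x, y in zip(a, b)]


def vec_gt(a, b):
    """Element-wise comparison: produces one True/False mask entry per lane."""
    assert len(a) == len(b) == LANES
    return [x > y for x, y in zip(a, b)]


data = [10, 20, 30, 40]
plus_five = vec_add(data, broadcast(5))  # models one instruction touching all 4 lanes
mask = vec_gt(data, broadcast(25))
print(plus_five)  # [15, 25, 35, 45]
print(mask)       # [False, False, True, True]
```

Because each lane is computed independently, the order in which the model visits lanes does not matter, which is exactly what lets hardware compute them at the same time. Real comparison instructions typically produce all-ones or all-zeros bit patterns per lane rather than Python booleans.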

Examples & Analogies

Imagine you are making sandwiches for a party. If you have 10 slices of bread and you need to add butter to each slice, doing it one slice at a time would take longer. But if you have a wide enough table and can butter all the slices at once, it gets done much faster. This is similar to how SIMD performs element-wise operations in parallel.

Gather/Scatter Operations

Chapter 2 of 3

Chapter Content

Allows non-contiguous memory elements to be loaded into vector registers, enabling more flexible memory access patterns.

Detailed Explanation

Gather and scatter operations read from and write to memory in a non-contiguous pattern. A gather operation collects non-adjacent elements from memory into a single vector register, usually guided by a vector of indices that says where each element comes from. Conversely, a scatter operation writes the elements of a vector register to different, possibly non-adjacent memory addresses. This flexibility lets SIMD processors vectorize loops over data that isn't stored in one continuous block, such as indexed lookups, although a gather is typically slower than a contiguous load.
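The gather, compute, scatter pattern can be sketched as follows. This is a toy model under stated assumptions: the Python list `memory` stands in for main memory, `idx` stands in for a vector register of indices, and `gather`/`scatter` are hypothetical helpers, not a real library API.

```python
# Toy model of gather/scatter; `memory` stands in for main memory and
# `idx` for a vector register of element offsets (assumptions for illustration).


def gather(memory, indices):
    """Gather: lane i receives memory[indices[i]]; the addresses need not be adjacent."""
    return [memory[i] for i in indices]


def scatter(memory, indices, values):
    """Scatter: write values[i] to memory[indices[i]].
    In this model, if two lanes share an index, the higher-numbered lane's write lands last."""
    for i, v in zip(indices, values):
        memory[i] = v


memory = [0, 10, 20, 30, 40, 50, 60, 70]
idx = [6, 1, 4, 3]                # non-contiguous positions
lanes = gather(memory, idx)       # [60, 10, 40, 30]
doubled = [x * 2 for x in lanes]  # element-wise step on the gathered vector
scatter(memory, idx, doubled)
print(memory)                     # [0, 20, 20, 60, 80, 50, 120, 70]
```

Once the scattered values sit side by side in one vector, the doubling step is an ordinary element-wise operation; the gather and scatter only handle getting the data in and out.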

Examples & Analogies

Think of gathering as a librarian collecting books from different shelves that are placed across the library, while scattering is like the librarian placing books back on different shelves after sorting them. This allows for quicker access to required information instead of moving linearly through shelves.

SIMD Performance

Chapter 3 of 3

Chapter Content

SIMD achieves high performance by processing multiple data elements in parallel, significantly reducing the time required for operations on large datasets.

Detailed Explanation

The performance of SIMD comes from handling multiple data elements with a single instruction, which makes it efficient for tasks that can be performed in parallel. Instead of executing an operation on one data point and then the next, a SIMD unit works on a whole group of data points at once. This parallelism significantly reduces overall processing time, which is especially beneficial in applications such as graphics rendering or scientific simulations that process large quantities of data.
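A common way to apply SIMD to a long array is to process it in register-sized chunks and finish any leftover elements one at a time (often called strip-mining). The sketch below counts how many simulated vector and scalar operations that takes. The function name `simd_add_arrays` and the default of 8 lanes are assumptions for illustration.

```python
# Sketch of "strip-mining": processing an array in vector-width chunks.
# lanes=8 is an assumed width (e.g. 8 x 32-bit floats in a 256-bit register);
# each chunk add stands in for one SIMD instruction.


def simd_add_arrays(a, b, lanes=8):
    """Return (result, vector_ops, scalar_ops) for an element-wise add of a and b."""
    assert len(a) == len(b)
    n = len(a)
    out = [0] * n
    full = n - n % lanes          # number of elements covered by whole vectors
    vector_ops = 0
    for start in range(0, full, lanes):
        end = start + lanes
        out[start:end] = [x + y for x, y in zip(a[start:end], b[start:end])]
        vector_ops += 1           # one simulated vector add per chunk
    scalar_ops = 0
    for i in range(full, n):      # leftover "tail" elements, one at a time
        out[i] = a[i] + b[i]
        scalar_ops += 1
    return out, vector_ops, scalar_ops


result, v_ops, s_ops = simd_add_arrays(list(range(1003)), [1] * 1003)
print(v_ops, s_ops)  # 125 3
```

Here 1003 additions become 125 vector operations plus 3 scalar ones, 128 in total. That is an idealized instruction count; the actual time saved also depends on how fast memory can supply the data.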

Examples & Analogies

Consider a kitchen where the head chef calls out a single instruction, such as "dice the onion", and every cook at the counter performs that same step on their own onion at the same moment. One command produces many finished portions at once, just as one SIMD instruction produces results for many data elements, which is how SIMD operations cut down on processing time.

Key Concepts

- Element-wise operations: Operations applied independently to each element in a vector.

- Gather/Scatter operations: Memory operations that load or store non-contiguous data in vector registers.

- Performance: SIMD significantly boosts throughput by processing multiple data points simultaneously.

Examples & Applications

Using SIMD for image processing, where each instruction processes a group of pixels at once.

Applying SIMD in scientific simulations for faster calculations on large datasets.

Vector addition or multiplication where each element of a vector is processed in parallel.
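For the image-processing example, a typical SIMD task is brightening 8-bit pixels with a saturating add, so bright pixels clamp at 255 instead of wrapping around to dark values. The sketch below assumes 16 lanes (a 128-bit register holding 16 unsigned 8-bit values); `adds_u8` and `brighten` are hypothetical helpers modeling the kind of operation x86 SSE2 exposes as the `_mm_adds_epu8` intrinsic.

```python
# Sketch of brightening 8-bit grayscale pixels, LANES pixels per step.
# LANES = 16 assumes a 128-bit register holding 16 unsigned 8-bit values;
# adds_u8 models a saturating unsigned add (a hypothetical helper).
LANES = 16


def adds_u8(a, b):
    """Saturating unsigned 8-bit add per lane: sums above 255 clamp to 255."""
    return [min(x + y, 255) for x, y in zip(a, b)]


def brighten(pixels, amount):
    """Brighten a flat list of 0-255 pixel values by `amount`."""
    out = []
    for start in range(0, len(pixels), LANES):
        chunk = pixels[start:start + LANES]  # the last chunk may be shorter than LANES
        out.extend(adds_u8(chunk, [amount] * len(chunk)))
    return out


print(brighten([0, 100, 200, 250], 10))  # [10, 110, 210, 255]
```

Real code usually pads the image or handles the final partial chunk with scalar code; the model simply uses a shorter list for it.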

Memory Aids

Rhymes

SIMD cuts the time in line, / Processing data feels so fine. / With one instruction and many teams, / Quickly handling complex dreams.

Stories

Once in a land of data streams, there lived a wizard called SIMD. He could cast spells (instructions) that touched many elements at once, saving everyone time and making big tasks easier to manage, much like casting a spell over the whole kingdom at once!

Memory Tools

REMEMBER: SIMD stands for Single Instruction, Multiple Data. Think 'SIMMER': a pot that cooks multiple ingredients at once, just like SIMD!

Acronyms

SIMD: Single Instruction, Multiple Data. Simplifying tasks for max data processing!

Glossary

- SIMD

Single Instruction, Multiple Data; a parallel computing model where a single instruction operates on multiple data points simultaneously.

- Element-wise Operation

Operations such as addition or multiplication applied to each corresponding element in data vectors.

- Gather/Scatter Operations

Memory operations that allow non-contiguous data to be loaded or stored in vector registers.

- Throughput

The amount of processing that occurs in a given period of time, often increased through SIMD instructions.
