GPU vs. CPU


Interactive Audio Lesson

A student-teacher conversation explaining the topic in a relatable way.

Introduction to GPUs and CPUs


Today, we're going to explore the differences between GPUs and CPUs. Who can tell me what a CPU is?

A CPU is the brain of the computer that handles general tasks, right?

Exactly! The CPU is designed for general-purpose computation and excels at single-threaded performance. Now, what about GPUs?

GPUs are for graphics processing, but I think they can do more than that?

Yes! GPUs are specialized hardware built for massive parallelism, which makes them ideal for tasks that can be split into many independent pieces. As a mnemonic, let the P in GPU remind you of Parallelism!

Architectural Differences


Let's dive into architectural differences. Can anyone explain what makes GPU architecture suitable for parallel tasks?

GPUs have many small processing cores that can work on tasks at the same time.

Right! This architecture allows GPUs to handle multiple operations simultaneously. In contrast, CPUs have fewer cores optimized for complex tasks. Remember the phrase 'Less is More' for CPU efficiency!

So, GPUs are better for tasks that can be split into smaller bits!

Exactly! That's why they're great for graphics rendering and deep learning tasks.

Use Cases for GPUs and CPUs


Now, let's talk about use cases. When would you choose a GPU over a CPU?

If I'm working with lots of images or doing machine learning, I'd use a GPU.

Correct! GPUs are excellent for tasks involving large datasets like those in graphics and AI. What about CPUs?

For regular computing tasks, like running applications or games that don’t need parallel processing.

Exactly! CPUs handle decision-making tasks effectively. Remember, 'General for CPU, Parallel for GPU'!

Performance Comparison


Let's analyze performance. Why do you think GPUs outperform CPUs in certain scenarios?

Because they can do many calculations at once, while CPUs work through only a few threads at a time!

Exactly! This makes GPUs far faster for tasks that repeat the same operation across many independent data items. Remember P for Performance!

So, if I'm doing deep learning, I want to maximize GPU usage?

Yes! Using GPUs for deep learning accelerates the computation significantly.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.


Standard

The section compares GPUs and CPUs, discussing the architectural differences that enable GPUs to perform massive parallel operations efficiently, making them suitable for high-throughput tasks like graphics rendering and machine learning. In contrast, CPUs excel in single-threaded tasks and general-purpose computing.

Detailed

GPU vs. CPU

Overview

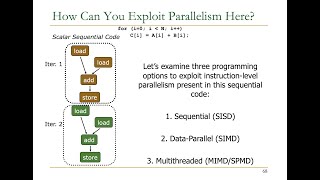

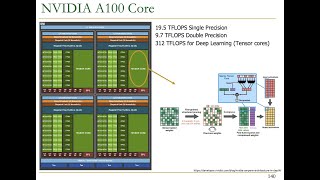

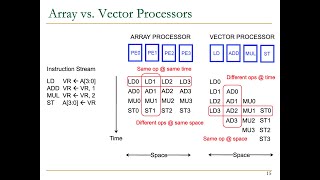

The comparison between Graphics Processing Units (GPUs) and Central Processing Units (CPUs) is crucial for understanding how different hardware is optimized for various computing tasks. CPUs are designed for general-purpose computation and single-threaded performance, while GPUs are designed for parallelism, capable of executing many threads simultaneously.

Architectural Differences

CPU Characteristics

- General-Purpose: CPUs manage a wide range of tasks and are optimized for a variety of computing operations.

- Single-Thread Performance: They excel at tasks that require complex decision-making and offer little parallelism.

GPU Characteristics

- Massive Parallelism: GPUs consist of thousands of small processing cores designed to handle multiple operations at once, making them ideal for tasks involving large datasets.

- Specialization in Parallel Tasks: They are particularly suited for operations that can be parallelized, such as those found in graphics rendering, matrix calculations used in deep learning, and scientific simulations.
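As a rough illustration of what "can be parallelized" means, the sketch below splits an element-wise vector addition into chunks and hands each chunk to a worker. It uses Python's standard `concurrent.futures` thread pool purely as a stand-in for GPU cores. The names `add_chunk` and `parallel_add` and the default chunk size are hypothetical, and the code models how the work decomposes rather than real GPU speed.

```python
from concurrent.futures import ThreadPoolExecutor


def add_chunk(a_chunk, b_chunk):
    """Add two equally sized slices element by element."""
    return [x + y for x, y in zip(a_chunk, b_chunk)]


def parallel_add(a, b, chunk_size=4, workers=4):
    """Split an element-wise addition into independent chunks.

    Each chunk depends only on its own inputs, so the chunks can be
    processed in any order, or all at once, without changing the result.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    starts = range(0, len(a), chunk_size)
    a_chunks = [a[i:i + chunk_size] for i in starts]
    b_chunks = [b[i:i + chunk_size] for i in starts]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in submission order, so the chunks
        # can simply be concatenated afterwards.
        results = pool.map(add_chunk, a_chunks, b_chunks)
        return [value for chunk in results for value in chunk]


a = list(range(10))
b = [10 * x for x in range(10)]
total = parallel_add(a, b)
```

The key property is that no chunk needs a result from any other chunk. Work with that shape is what lets thousands of small cores stay busy at the same time.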

Conclusion

Understanding these differences helps in selecting the appropriate hardware for specific applications, significantly impacting performance and efficiency.


Audio Book

Dive deep into the subject with an immersive audiobook experience.

CPU vs. GPU Architecture

Chapter 1 of 2


Chapter Content

CPUs are designed for single-threaded performance and general-purpose computation, whereas GPUs are designed for parallelism and can execute thousands of threads simultaneously.

Detailed Explanation

CPUs, or Central Processing Units, are typically optimized for tasks with complex, branching logic, running a smaller number of operations at once but finishing each one quickly. They excel at tasks that depend on fast response times and must be processed sequentially. GPUs, or Graphics Processing Units, are instead engineered to run many operations in parallel, making them ideal for tasks such as graphics rendering and machine learning that apply the same work to large volumes of data.
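To make the contrast concrete, here is a small sketch of the two kinds of workload. The first function has a step-to-step dependency (each iteration needs the previous result), the sort of work that favors a fast single thread. The second applies the same operation to every item independently, the sort that maps well onto many cores. Both functions and their names are illustrative assumptions, not part of the course material.

```python
def collatz_steps(n):
    """Count steps until n reaches 1 under the Collatz rule.

    Every iteration needs the value produced by the previous one and
    takes a data-dependent branch, so this single chain cannot be split
    across workers: it is inherently sequential.
    """
    if n < 1:
        raise ValueError("n must be a positive integer")
    steps = 0
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


def scale_all(values, factor):
    """Multiply every value by the same factor.

    No element depends on any other, so each multiplication could run
    on a different core at the same time.
    """
    return [v * factor for v in values]


sequential_result = collatz_steps(6)
parallel_friendly_result = scale_all([1, 2, 3, 4], 2.5)
```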

Examples & Analogies

Imagine a chef (CPU) preparing a gourmet meal. The chef focuses on individually plating each dish, paying attention to detail and presentation. In contrast, a fast-food restaurant kitchen (GPU) has many workers who can assemble sandwiches, fry fries, and blend milkshakes all at the same time, serving customers much faster without concerning themselves with the nuances of gourmet cooking.

Massive Parallelism of GPUs

Chapter 2 of 2


Chapter Content

Massive Parallelism: GPUs can handle highly parallel tasks that involve simple operations on large amounts of data, making them ideal for vector and matrix computations in deep learning and graphics rendering.

Detailed Explanation

Massive parallelism refers to the ability of GPUs to perform numerous calculations simultaneously, effectively handling tasks that can be divided into smaller, independent operations. This makes GPUs exceptionally suited for vector and matrix computations, which are critical for graphics and machine learning applications. For example, when processing an image, each pixel can be adjusted independently, allowing many pixels to be processed at the same time.
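The pixel example can be sketched in a few lines. This is a plain Python model, assuming an 8-bit grayscale image stored as a list of rows. The function names are hypothetical, and the loop visits pixels one after another where a GPU would assign each pixel to its own thread.

```python
def brighten_pixel(value, amount):
    """Brighten one 8-bit grayscale pixel, clamping to the range 0..255."""
    return max(0, min(255, value + amount))


def brighten_image(image, amount):
    """Apply brighten_pixel to every pixel of a 2D grayscale image.

    The output at (row, col) depends only on the input at (row, col),
    so every pixel could be computed at the same time.
    """
    return [[brighten_pixel(p, amount) for p in row] for row in image]


image = [
    [0, 100, 200],
    [250, 30, 128],
]
brighter = brighten_image(image, 60)
```

Because `brighten_pixel` reads nothing but its own input, the order in which pixels are processed does not affect the result. That independence is what makes the task a good fit for massive parallelism.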

Examples & Analogies

Think of this like an assembly line for packaging toys where each worker packs one toy independently. If you have enough workers (GPU cores), you can package hundreds of toys in the same time it would take a single worker (CPU) to package just a few.

Key Concepts

- CPU vs. GPU: CPUs are optimized for sequential tasks, while GPUs excel in parallel processing.

- Massive Parallelism: GPUs can process thousands of threads simultaneously, ideal for data-intensive applications.

Examples & Applications

In deep learning, GPUs can handle matrix calculations for neural networks more efficiently than CPUs.

Graphics rendering in video games utilizes GPUs to handle complex calculations for visuals.
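The matrix calculations mentioned above parallelize well because every element of a matrix product is its own dot product. The sketch below is a pure-Python model of that decomposition, with hypothetical function names. Real deep learning code would rely on an optimized GPU library rather than nested lists.

```python
def output_cell(a, b, row, col):
    """Compute one element of the product a @ b as a dot product."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul(a, b):
    """Multiply two matrices given as lists of rows.

    Each output cell reads one row of a and one column of b and writes
    nowhere else, so all cells could be computed simultaneously.
    """
    if not a or not b or len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    rows, cols = len(a), len(b[0])
    return [[output_cell(a, b, r, c) for c in range(cols)] for r in range(rows)]


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product = matmul(a, b)
```

For an output with `rows * cols` cells there are that many independent dot products, which is why large matrices give a GPU plenty of parallel work.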

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

GPU and CPU, each has a role; CPU's the brain, GPU's the soul.

Stories

Once upon a time, there were two heroes: CPU the thinker, solving one puzzle at a time, and GPU the speedy, solving many puzzles all at once. Together, they made computing magical!

Memory Tools

C for Central in CPU; for the GPU, let the P remind you of Parallel (it officially stands for Processing).

Acronyms

Remember RAMP:

R for Rendering (GPU)

A for All tasks (CPU)

M for Multi-thread (GPU)

P for Processing (CPU).


Glossary

- CPU (Central Processing Unit)

The main processor of a computer, which executes program instructions and performs general-purpose calculations.

- GPU (Graphics Processing Unit)

A specialized processor designed for rendering graphics and performing parallel computations.

- Parallelism

The ability to process multiple tasks or data points simultaneously.
