SIMD Performance

Interactive Audio Lesson

Listen to a student-teacher conversation explaining the topic in a relatable way.

Introduction to SIMD Performance

Welcome, everyone! Today, we're diving into SIMD performance. SIMD stands for Single Instruction, Multiple Data, and it can dramatically increase how quickly we process data. Can anyone tell me why SIMD is significant?

I think it's important because it makes computing faster, right?

Exactly! SIMD allows a single instruction to be applied to many data points at once. This ability to process data in parallel offers significant performance enhancements, especially for large datasets.

So, it's like doing many tasks at the same time instead of one after the other?

Precisely! This concept is very useful in applications like image processing and scientific computations. Remember, we can think of SIMD as a team working together on a project, rather than one person doing everything alone.

Can you give us an example of where SIMD performance is critical?

Sure! In machine learning, particularly during the training of neural networks, SIMD allows large matrix operations to be executed faster, which is essential when dealing with extensive data inputs.

Will all processors use SIMD for high performance?

Not all processors do, but many modern CPUs and GPUs are designed with SIMD capabilities, showcasing how vital this is for current computing needs! Let's summarize: SIMD improves processing speed significantly by leveraging parallelism!

SIMD Architectures

Now let's explore SIMD architectures. The efficiency of SIMD is influenced by the architecture of the hardware. Can anyone name a few architectures that support SIMD?

I know Intel has something called AVX, right?

Correct! Intel's Advanced Vector Extensions are excellent examples, allowing very efficient processing of large datasets. ARM's NEON technology is another noteworthy architecture.

What about vector lengths? How do those work?

Great question! Vector length refers to the number of elements that can be processed in parallel. Wider vectors mean more parallelism per instruction, which usually translates to faster processing as long as memory can supply the data quickly enough.

Are there any limits to what kinds of operations SIMD can perform?

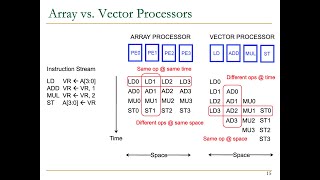

Yes, SIMD is best suited for tasks that involve identical operations across data elements. Examples include element-wise arithmetic or comparisons among large data arrays, but it may be less effective with tasks needing different operations for each data point.

So, in real-world applications, we could see significant differences in performance metrics?

Absolutely! Utilizing SIMD results in larger performance gains in applications that fit its execution model. Now to summarize: Architectures like AVX and NEON enhance SIMD operations by allowing multiple data element processing through specialized instruction sets.
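The point about tasks that need different operations per data point can be sketched in code. The example below is a conceptual model in plain Python, not real SIMD: lists stand in for vector lanes, and the helper names (`select`, `branchy_scalar`, `branch_free_lanes`) are hypothetical. It shows one common workaround, which is to compute both outcomes for every lane and then pick per lane with a mask. The extra work on the discarded side is part of why such tasks benefit less.

```python
def select(mask, if_true, if_false):
    """Lane-wise pick; models a vector blend/select step."""
    return [t if m else f for m, t, f in zip(mask, if_true, if_false)]


def branchy_scalar(xs):
    """Scalar reference: a different operation depending on each value."""
    return [x * 2 if x >= 0 else -x for x in xs]


def branch_free_lanes(xs):
    """Same result, written as identical operations applied to every lane."""
    mask = [x >= 0 for x in xs]      # one lane-wise compare
    doubled = [x * 2 for x in xs]    # computed for every lane
    negated = [-x for x in xs]       # also computed for every lane
    return select(mask, doubled, negated)


xs = [3, -1, 0, -7]
assert branch_free_lanes(xs) == branchy_scalar(xs)
```

Both branches are evaluated for all four lanes here, even though each lane only keeps one result.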

Efficiency and Application of SIMD Performance

Now that we've discussed SIMD architectures, let's analyze their efficiency and applications. Why do you think SIMD is becoming more prevalent in computing environments?

I guess because of the rise of large datasets in different fields like data science and AI?

Exactly! As the amounts of data grow, the need for efficient processing methods like SIMD becomes critical. It allows us to make sense of vast amounts of information quickly.

Could you mention a specific field where SIMD is essential?

Definitely. In the field of graphics rendering, SIMD processes multiple pixels in parallel, making rendering scenes much faster. It also plays a key role in video processing and editing.

What about deep learning? Are there roles for SIMD there as well?

Absolutely! SIMD greatly accelerates matrix multiplication, which is fundamental in many deep learning algorithms. By applying identical operations across many data elements at once, processing time is significantly reduced.

So the future of computing could heavily rely on SIMD's capabilities?

Certainly! Its efficiency in dealing with parallel tasks will continue to shape computer architecture and algorithms. In summary, SIMD not only enables faster data processing but is crucial for emerging technologies requiring efficient computation.

Introduction & Overview

Read summaries of the section's main ideas at different levels of detail.

Quick Overview

Standard

The SIMD performance section discusses how SIMD architectures enhance computing efficiency by allowing parallel processing of multiple data elements with a single instruction. It emphasizes the importance of specialized hardware and instructions in achieving high performance for various applications.

Detailed

SIMD Performance

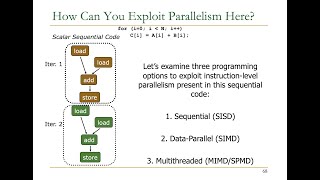

SIMD (Single Instruction, Multiple Data) is a computational paradigm that allows for the execution of a single instruction on multiple data elements simultaneously, significantly boosting performance in scenarios that involve repetitive tasks on large datasets. In modern CPU and GPU architectures, SIMD harnesses parallelism, enabling high throughput for operations that are suitable for optimization through vectorization.

Key Features of SIMD Performance:

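- Parallel Processing: By applying one instruction to multiple data points, SIMD can raise the throughput of suitable workloads, such as image processing or scientific simulations, by up to the number of elements that fit in one vector (for example, eight 32-bit values in a 256-bit register). Real gains are usually smaller because memory bandwidth and non-vectorizable code also limit performance.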

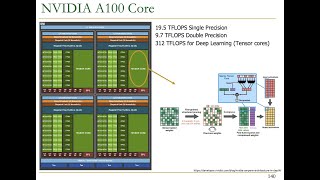

- Hardware Utilization: Modern processors include dedicated vector units designed for executing these SIMD instructions, allowing diverse operations like element-wise addition or multiplication to be performed concurrently across wide data segments.

- Architectural Support: SIMD performance is heavily dependent on the underlying architecture. Different systems support different vector widths (e.g., 128-bit, 256-bit, or 512-bit) that determine how much data can be processed in one operation. Intel's Advanced Vector Extensions (AVX) and ARM's NEON are examples of instruction set extensions designed for SIMD execution.

- Efficiency Gains: SIMD architectures are crucial for applications involving large datasets, as they considerably cut down processing times, making them ideal for use in high-performance computing, real-time graphics rendering, and machine learning tasks.
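The relationship between vector width and the amount of data handled per operation is simple arithmetic, sketched below. The function names `lanes` and `vector_ops_needed` are illustrative and not part of any library, and the count is an idealized lower bound on vector instructions; it says nothing about actual run time.

```python
import math


def lanes(vector_bits: int, element_bits: int) -> int:
    """Number of elements that fit in one vector register."""
    if vector_bits % element_bits != 0:
        raise ValueError("element width must divide the vector width")
    return vector_bits // element_bits


def vector_ops_needed(n_elements: int, vector_bits: int, element_bits: int) -> int:
    """Vector operations needed to touch every element once.

    The last operation may be only partly filled, hence the ceiling.
    """
    return math.ceil(n_elements / lanes(vector_bits, element_bits))


# 1000 32-bit values at the three widths mentioned above.
for bits in (128, 256, 512):
    print(bits, lanes(bits, 32), vector_ops_needed(1000, bits, 32))
# 128 4 250
# 256 8 125
# 512 16 63
```

Doubling the width halves the idealized operation count, which is the upper limit on what wider vectors alone can deliver.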

In conclusion, the SIMD performance section highlights the substantial impact of SIMD on computational efficiency, core design, and real-world applications in technology.

Audio Book

Dive deep into the subject with an immersive audiobook experience.

High Performance through Parallel Processing

Chapter Content

SIMD achieves high performance by processing multiple data elements in parallel, significantly reducing the time required for operations on large datasets.

Detailed Explanation

Single Instruction, Multiple Data (SIMD) enhances computing performance by allowing a single instruction to be applied to multiple data elements at the same time. This method increases the throughput of operations, especially when dealing with large datasets. For example, instead of processing one element at a time, which would require repeated instruction handling, SIMD can execute the same operation on several elements with a single instruction. This parallelism leads to substantial reductions in the time needed for computations across various applications, such as image processing, scientific simulations, and data analysis.
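The difference between one-element-at-a-time processing and SIMD-style processing can be illustrated with a small model. This is plain Python that only counts modeled instruction issues; it does not run real SIMD instructions, and `scalar_add`, `simd_style_add`, and the `lanes` parameter are names assumed for the illustration. Both functions assume inputs of equal length.

```python
def scalar_add(a, b):
    """One addition per step: len(a) modeled instructions."""
    out, issued = [], 0
    for x, y in zip(a, b):
        out.append(x + y)
        issued += 1
    return out, issued


def simd_style_add(a, b, lanes=4):
    """Model of a vector add: each step covers up to `lanes` elements."""
    out, issued = [], 0
    for i in range(0, len(a), lanes):
        # One modeled vector instruction handles this whole chunk.
        out.extend(x + y for x, y in zip(a[i:i + lanes], b[i:i + lanes]))
        issued += 1
    return out, issued


a = list(range(10))
b = [10 * x for x in a]
s_out, s_n = scalar_add(a, b)
v_out, v_n = simd_style_add(a, b, lanes=4)
assert s_out == v_out
print(s_n, v_n)  # 10 3
```

With ten elements and four lanes, the final step holds only two elements. Real code handles such a partial tail with a scalar loop or masked operations.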

Examples & Analogies

Think of SIMD like a chef preparing a large meal. Instead of cooking each portion one by one, the chef sets up multiple pans on the stove and cooks several portions of the same dish at the same time, following the same steps for each. This allows the chef to serve the meal much faster than if they prepared each portion sequentially.

Key Concepts

- Parallel Execution: SIMD allows performing an operation across multiple data points at once, increasing processing speed.

- Architectural Support: Key architectures such as Intel's AVX and ARM's NEON enhance SIMD performance through specialized instructions.

- Data Processing Efficiency: SIMD is integral in efficiently handling tasks that involve large datasets across various applications.

Examples & Applications

In graphics rendering, SIMD processes multiple pixels or vertices simultaneously to enhance rendering speeds.

During machine learning model training, SIMD accelerates matrix multiplication, enabling faster computations.
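Matrix multiplication is built from dot products, and the way SIMD speeds these up can be modeled as follows. This is a conceptual sketch in plain Python rather than real vector code: `dot_lanes` and `matvec` are hypothetical names, and the `lanes` parameter stands in for the hardware vector width. Each lane keeps its own partial sum, and the partial sums are combined once at the end.

```python
def dot_lanes(a, b, lanes=4):
    """Dot product using `lanes` independent partial sums, then one reduction."""
    if len(a) != len(b):
        raise ValueError("vectors must have equal length")
    acc = [0] * lanes
    for i in range(0, len(a), lanes):
        # Models one vector multiply-accumulate over up to `lanes` elements.
        for lane, (x, y) in enumerate(zip(a[i:i + lanes], b[i:i + lanes])):
            acc[lane] += x * y
    return sum(acc)  # horizontal reduction across lanes


def matvec(matrix, vec, lanes=4):
    """Matrix-vector product as one lane-style dot product per row."""
    return [dot_lanes(row, vec, lanes) for row in matrix]


m = [[1, 2, 3, 4, 5], [0, 1, 0, 1, 0]]
v = [1, 1, 2, 2, 3]
print(matvec(m, v))  # [32, 3]
```

The example uses integers on purpose. With floating-point values, summing per lane changes the order of additions, so results can differ slightly from a plain left-to-right loop.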

Memory Aids

Interactive tools to help you remember key concepts

Rhymes

In SIMD's realm, fast data flows, one instruction, many bytes, how efficiency grows!

Stories

Imagine a pizza place where one cook prepares many pizzas at once with the same recipe—this is how SIMD cooks data quicker!

Memory Tools

SIP (Single Instruction, Parallel Processing) to remember how SIMD works.

Acronyms

S.I.M.D: Single Instruction, Multiple Data.

Glossary

- SIMD

Single Instruction, Multiple Data; a parallel computing architecture that executes one instruction on multiple data elements simultaneously.

- Parallelism

The ability to perform multiple operations or tasks at the same time in computing.

- Vector Length

The number of data elements that can be concurrently processed by a SIMD instruction.

- AVX

Advanced Vector Extensions; a set of SIMD instructions for x86 processors, introduced by Intel and also supported by AMD.

- NEON

A SIMD extension for ARM processors, commonly used to accelerate multimedia and signal-processing applications.
